Accessing Host-Based Data

Many organizations have their data spread across different hardware and software platforms. While it may not be feasible or advantageous to move that data to a centralized location, there is value in making distributed data appear as though it can be accessed from a single data source. This strategy fits well with the multiple-service programming model, which allows programmers to modify their client-based applications without having to understand the particulars of where the data is stored.

ADO for the AS/400 and VSAM

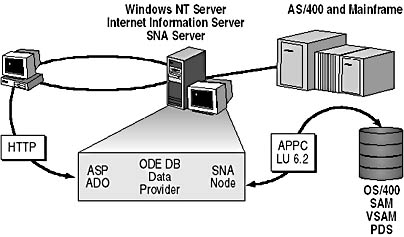

This section covers ADO's role in allowing Windows-based systems to communicate with VSAM and AS/400 systems, as illustrated in Figure 9.7. To understand how this works, developers need a basic understanding of how AS/400 and mainframe systems communicate. First, we'll briefly introduce how the communication occurs. Then we'll describe Distributed Data Management (DDM), the method IBM systems use to communicate, and how OLE DB interacts with DDM to expose data through Microsoft SNA Server.

Most computer systems use data storage, data management, and data access methods that are unique to that system. This creates interoperability problems when information systems professionals need different systems to share data or need to let users access data across multiple systems simultaneously. Added to this are the problems of networking and connecting disparate systems, such as IBM SNA hosts and Windows NT computers.

Figure 9.7 Accessing AS/400 data

IBM devised the Distributed Data Management (DDM) architecture to provide a standardized method of data access among similar and dissimilar hardware systems and across multiple operating systems. DDM supports three types of data storage: records, byte streams, and relational databases.

As mentioned, Microsoft devised OLE DB to provide a set of data access interfaces to enable multiple data stores to work seamlessly together. Independent software vendors implement OLE DB in their data providers to integrate disparate data storage, data management, and data access systems.

As noted in Figure 9.7, Microsoft has developed the OLE DB Provider for AS/400 and VSAM, an OLE DB data provider for accessing SNA data sources using DDM. This provider complies with Level 4 of the IBM DDM architecture and with the OLE DB architecture. The OLE DB Provider uses SNA Server as the networking bridge between the SNA host and Windows NT.

The Microsoft OLE DB Provider for AS/400 and VSAM supports the following features:

- Set attributes and a record description of a host file (column information).

- Position to the beginning record or the ending record in a file.

- Navigate to the previous or next record in a file.

- Seek to a record based on an index.

- Lock files and records.

- Change records in a file.

- Insert new records and delete records in a file.

- Preserve file and record attributes.
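
The navigation features above can be pictured with a small in-memory model. The sketch below is hypothetical: `HostFileCursor` and its methods are invented for illustration (their names loosely echo the ADO Recordset `MoveFirst`, `MoveLast`, `MoveNext`, `MovePrevious`, and `Seek` methods) and it does not call the provider. It assumes the whole file fits in a Python list and that the index is a sorted list of key/position pairs.

```python
from bisect import bisect_left
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional

Record = Dict[str, Any]


@dataclass
class HostFileCursor:
    """Hypothetical model of record-level navigation over a host file.

    Records are kept in file (arrival) order; a separate sorted index on
    one key field supports seeking without scanning the whole file.
    """

    records: List[Record]
    key: str
    pos: int = -1  # -1 means "not positioned yet"
    _index: list = field(init=False, repr=False, default_factory=list)

    def __post_init__(self) -> None:
        # Index entries are (key value, record position), sorted by key.
        self._index = sorted((r[self.key], i) for i, r in enumerate(self.records))

    def _at(self, pos: int) -> Optional[Record]:
        if 0 <= pos < len(self.records):
            self.pos = pos
            return self.records[pos]
        return None  # past beginning or end of file; position unchanged

    def move_first(self) -> Optional[Record]:
        return self._at(0)

    def move_last(self) -> Optional[Record]:
        return self._at(len(self.records) - 1)

    def move_next(self) -> Optional[Record]:
        return self._at(self.pos + 1)

    def move_previous(self) -> Optional[Record]:
        return self._at(self.pos - 1)

    def seek(self, value: Any) -> Optional[Record]:
        # Binary search: (value, -1) sorts before every (value, position) entry.
        i = bisect_left(self._index, (value, -1))
        if i < len(self._index) and self._index[i][0] == value:
            return self._at(self._index[i][1])
        return None
```

Seeking jumps straight to the indexed record, after which next and previous move in file order rather than key order, which is how a keyed file with a separate index behaves.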

DDM and OLE DB

A DDM process takes place between two computer systems: the first is the source system, where the program requesting the data resides; the second is the target system, where the data resides.

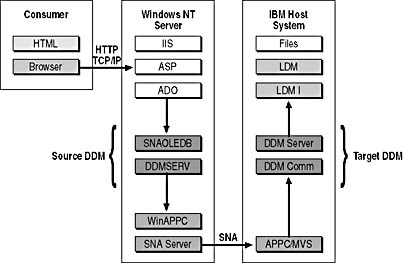

File access using DDM and OLE DB begins with a source application written to acquire data records from a host file. The location of that host file is specified in an initialization string. When the source application requests an open connection, the ADO run-time DLLs communicate with the OLE DB data provider using the information in the initialization string. As seen in Figure 9.8, the OLE DB Provider for AS/400 and VSAM interprets the OLE DB requests and translates them into DDM commands. The initialization string specifies the Advanced Program-to-Program Communication (APPC) logical unit (LU) alias on an SNA Server computer, the code page conversion, the user ID, and the password. The provider uses WinAPPC and SNA Server for host connectivity.
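
To make the shape of an initialization string concrete, here is a minimal sketch that assembles and splits semicolon-delimited `Key=Value` pairs. The helpers are hypothetical. Only `Provider`, `User ID`, and `Password` are generic OLE DB keywords; `APPC LU Alias` and `Host CCSID` are placeholders for the provider-specific names in its documentation, and `SNAOLEDB` is an assumed provider name. The sketch does not implement the quoting needed for values that contain semicolons.

```python
from typing import Dict, Mapping


def build_init_string(props: Mapping[str, object]) -> str:
    """Join properties into a 'Key=Value;Key=Value' initialization string."""
    parts = []
    for key, value in props.items():
        text = str(value)
        # No quoting support in this sketch, so reject characters that
        # would make the string ambiguous to split again.
        if ";" in key or "=" in key or ";" in text:
            raise ValueError(f"unsupported character in {key!r}")
        parts.append(f"{key}={text}")
    return ";".join(parts)


def parse_init_string(init: str) -> Dict[str, str]:
    """Split an initialization string back into its properties."""
    props: Dict[str, str] = {}
    for part in init.split(";"):
        if part.strip():
            key, _, value = part.partition("=")
            props[key.strip()] = value.strip()
    return props


# HYPOTHETICAL values; "APPC LU Alias" and "Host CCSID" are placeholder keys.
example = {
    "Provider": "SNAOLEDB",      # assumed name of the AS/400 and VSAM provider
    "APPC LU Alias": "AS400LU",  # APPC LU alias on the SNA Server computer
    "Host CCSID": 37,            # code page used for host/PC conversion
    "User ID": "JSMITH",
    "Password": "secret",
}
init_string = build_init_string(example)
```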

Figure 9.8 OLE DB access with APPC

The DDM architecture and the APPC protocol take care of security, error handling, resource locking, and flow control. When the source DDM server receives the data, the data is converted from DDM data types to the OLE DB data types native to the source system.

To read and write records within a host file, a PC application must know the record format, primarily the size and data format of each field. On the mainframe, however, only the length of the records within a file is described to the system; the system has no knowledge of the field definitions within the file. Mainframe files are therefore often referred to as record-level described or program-described files, and mainframe application developers embed the record format of host data files in the application program itself. Because the OLE DB Provider for AS/400 and VSAM accesses host record files from outside the host application, it has no field-level descriptions, or metadata, to describe the host records.

The OLE DB Provider works around this problem by reading a locally stored file that contains the metadata mapping for the host file. The PC application developer creates this local file, which is called a host column description (HCD) file. The HCD file contains a file identifier (name), the host data type (length, precision, scale), the PC data type (OLE DB data type), and the host CCSID (code page). With this information, the OLE DB Provider can transform a host data record into a PC data record composed of individual fields. The OLE DB Provider also converts the host data from EBCDIC to Unicode, and then to the ANSI code page of the target PC, using the National Language Support API.
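
The following sketch shows, under stated assumptions, what a record description buys: once field offsets, lengths, and host types are known, a flat host record can be split into typed fields. `HostColumn`, `decode_record`, and the `CUSTOMER` layout are hypothetical stand-ins for an HCD entry (the real HCD file format is not shown here). The code assumes host CCSID 37 (Python's `cp037` codec) and ANSI code page 1252, and handles only character and COBOL packed-decimal (COMP-3) fields.

```python
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict, List, Union


@dataclass(frozen=True)
class HostColumn:
    """One column of a hypothetical HCD-style record description."""
    name: str
    offset: int     # byte offset of the field within the host record
    length: int     # field length in bytes
    kind: str       # "char" (EBCDIC text) or "packed" (COBOL COMP-3)
    scale: int = 0  # digits to the right of the implied decimal point


def unpack_comp3(raw: bytes, scale: int) -> Decimal:
    """Decode packed decimal: two BCD digits per byte, sign in the last nibble."""
    nibbles = []
    for b in raw:
        nibbles.extend((b >> 4, b & 0x0F))
    *digits, sign = nibbles
    if any(d > 9 for d in digits):
        raise ValueError(f"invalid packed decimal {raw.hex()}")
    value = int("".join(str(d) for d in digits) or "0")
    if sign == 0x0D:  # 0xD marks a negative value; 0xC and 0xF are positive
        value = -value
    return Decimal(value).scaleb(-scale)


def decode_record(record: bytes, columns: List[HostColumn],
                  host_codec: str = "cp037",
                  pc_codec: str = "cp1252") -> Dict[str, Union[bytes, Decimal]]:
    """Split one fixed-length host record into named fields."""
    row: Dict[str, Union[bytes, Decimal]] = {}
    for col in columns:
        raw = record[col.offset:col.offset + col.length]
        if col.kind == "char":
            text = raw.decode(host_codec).rstrip()  # EBCDIC -> Unicode
            row[col.name] = text.encode(pc_codec, errors="replace")  # Unicode -> ANSI
        elif col.kind == "packed":
            row[col.name] = unpack_comp3(raw, col.scale)
        else:
            raise ValueError(f"unsupported host type {col.kind!r}")
    return row


# Hypothetical layout: 10-byte name, then PIC S9(5)V99 COMP-3 (4 bytes).
CUSTOMER = [
    HostColumn("CUSTNAME", 0, 10, "char"),
    HostColumn("BALANCE", 10, 4, "packed", scale=2),
]
```

Without the layout the same 14 bytes are just an opaque record, which is exactly the situation the HCD file resolves for program-described files.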

Files in OS/400 can be either record-level described or field-level described. In a field-level described file, the fields are described to the operating system; such files are often referred to as externally described files. OS/400 keeps a record of the field-level descriptions and makes it available to any application. Traditionally, AS/400 developers have used these system-stored field-level record descriptions, which reduces the amount of programming required to define records. Application programmers use three primary tools to describe the fields within a file to the AS/400 operating system: Data Description Specifications (DDS), the Interactive Data Definition Utility (IDDU), and SQL. With each of these tools, a field is described by common attributes such as name, alias, length, data type, data validity restrictions, and text description. A local HCD file is not needed to describe the record format of data stored on the AS/400, because the Microsoft OLE DB Provider for AS/400 and VSAM uses DDM commands to retrieve the record description. Optionally, a PC application developer can use an HCD file to access AS/400 program-described files, otherwise known as AS/400 flat files.

The Microsoft OLE DB Provider for AS/400 and VSAM supports the following OLE DB objects:

- Enumerator

- DataSource

- Session

- Rowset

- Command

- View

- Index

- ErrorObject

The Transaction and TransactionOptions objects are not supported.

When accessed through ADO, the provider supports the following ADO objects:

- Connection

- Recordset

- Field

- Command

- Parameter

- Collection

- Error

COMTI and Mainframe Data Integration

This section details how Windows clients can integrate with mainframe environments such as CICS and IMS by using Microsoft's COM Transaction Integrator (COMTI), a feature of SNA Server 4.0. Working in conjunction with MTS, COMTI makes CICS and IMS programs appear as typical MTS components that can be used with other MTS components to build distributed applications. COMTI brings drag-and-drop simplicity to developing applications that integrate Web transaction environments with mainframe transaction environments.

The basis of this technology is the COMTI Component Builder. The Component Builder provides a COBOL Wizard, which helps the developer determine which data definitions are needed from the COBOL program and generates a component library (.tlb file) that contains the corresponding Automation interface definition. Because of the tight integration with MTS, when the component library is dragged and dropped into the MTS Explorer (or MMC snap-in), a COM object is created that can invoke a mainframe transaction. This allows developers to build applications using Active Server technologies that can easily include mainframe transaction programs (TPs). Similarly, mainframe developers can easily make mainframe TPs available to Windows-based Internet and intranet applications. Developers do not have to learn new APIs, nor do they have to program custom interfaces for each application and mainframe platform. Because COM Transaction Integrator for CICS and IMS does all of its processing on Windows NT Server, no COMTI executable code is required to run on the mainframe, and most mainframe COBOL programs do not have to be rewritten. Client applications simply make method calls to an Automation server, and mainframe TPs simply respond as if called by another mainframe program.

COMTI saves time and effort spent programming a specialized interface with the mainframe. As a generic proxy for the mainframe, COMTI intercepts object method calls and redirects those calls to the appropriate mainframe program; it also handles the return of all output parameters and return values from the mainframe. When COMTI intercepts the method call, it converts and formats the method's parameters from the representation understandable by the Windows NT platform into the representation understandable by mainframe Transaction Programs (TPs). All COMTI processing is done on Windows NT Server, and no executable code is required to run on the mainframe. COMTI uses standard communication protocols (for example, LU6.2, provided by SNA Server) for communicating between Windows NT Server and the mainframe.
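
As an illustration of that parameter conversion, the sketch below formats two Windows-side values into a mainframe input area described by COBOL declarations. `pic_x`, `pic_s9_comp3`, and `marshal_debit` are hypothetical helpers, not COMTI APIs; the input layout is invented, and host text is assumed to use CCSID 37 (Python's `cp037` codec).

```python
from decimal import Decimal


def pic_x(text: str, length: int, codec: str = "cp037") -> bytes:
    """PIC X(length): EBCDIC text, blank-padded on the right."""
    raw = text.encode(codec)
    if len(raw) > length:
        raise ValueError(f"{text!r} does not fit in PIC X({length})")
    return raw.ljust(length, " ".encode(codec))  # EBCDIC blank (0x40 in cp037)


def pic_s9_comp3(value, digits: int, scale: int = 0) -> bytes:
    """Packed decimal with `digits` total digits, `scale` of them fractional."""
    scaled = Decimal(value) * (10 ** scale)
    if scaled != scaled.to_integral_value():
        raise ValueError(f"{value} has more than {scale} decimal places")
    n = int(scaled)
    digit_str = str(abs(n)).rjust(digits, "0")
    if len(digit_str) > digits:
        raise ValueError(f"{value} overflows {digits} digits")
    if len(digit_str) % 2 == 0:
        digit_str = "0" + digit_str  # digits plus sign nibble must fill whole bytes
    return bytes.fromhex(digit_str + ("D" if n < 0 else "C"))


# Hypothetical TP input area:
#   05 ACCT-ID  PIC X(8).
#   05 AMOUNT   PIC S9(5)V99 COMP-3.
def marshal_debit(acct_id: str, amount: Decimal) -> bytes:
    return pic_x(acct_id, 8) + pic_s9_comp3(amount, digits=7, scale=2)
```

Values are taken as `Decimal` (or strings or integers) rather than floats, because binary floating point cannot represent most decimal amounts exactly.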

COMTI provides an interface between Automation components and mainframe-based applications. Running on Windows NT Server, COMTI-created components appear as simple Automation servers that developers can easily add to their application. Behind the scenes, COMTI functions as a proxy that communicates with an application program running on IBM's Multiple Virtual Storage (MVS) operating system.

Applications that run in part on Windows platforms and in part on the mainframe are distributed applications. COMTI supports all distributed applications that adhere to the Automation and distributed COM (DCOM) specifications, although not all parts of the application have to adhere to these standards.

A client application uses COMTI to access a transaction program (TP) running on the mainframe. COMTI supports TPs running under IBM's Customer Information Control System (CICS) and IBM's Information Management System (IMS). A simple example of this type of distributed application is one that reads a DB2 database on the mainframe to update data in a SQL Server database on Windows NT Server.

The client application can run on Windows NT Server, Windows NT Workstation, Windows 95/98, or any other platform that supports DCOM. Because DCOM is language-independent, developers can build their client application using the languages and tools with which they are most familiar, including Visual Basic, Visual Basic for Applications, Visual C++, Visual J++, Delphi, PowerBuilder, and Micro Focus Object COBOL. That client can then easily make calls to the COMTI Automation object (or any other Automation objects) registered on Windows NT Server.

COMTI Makes It Easier to Extend Transactions

Although COMTI can be used with simple mainframe data access applications, it becomes an even more powerful tool because it allows developers to extend transactions from the Windows NT Server environment to the mainframe. Windows-based applications that use MTS can include CICS applications in MTS-coordinated transactions. (With SNA Server 4.0 SP2, COMTI can also include IMS transactions, because IBM provides Sync Level 2 support for IMS in IMS 6.0.) COMTI integrates with MTS so that:

- Windows developers can easily describe, execute, and administer special MTS objects that access CICS or IMS Transaction Programs (TPs).

- Mainframe developers can easily make mainframe TPs available to Windows-based Internet and intranet applications.

- MTS component designers can easily include mainframe applications within the scope of MTS two-phase commit (2PC) transactions.

Developers using MTS in their applications can decide which parts of the application require a transaction and which parts don't. COMTI extends this choice to the mainframe as well, by handling both calls that require transactions and calls that do not. For applications that require full integration between Windows-based two-phase commit and mainframe-based Sync Level 2 transactions, COMTI provides all the necessary functionality. COMTI does this without requiring developers to change the client application, without placing executable code on the mainframe, and with little or no change to the mainframe TPs. The client application does not need to distinguish between the COMTI component and any other MTS component reference.
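
In outline, the two-phase commit that MTS coordinates through DTC, and that Sync Level 2 provides on the host, collects a vote from every participant and then sends one outcome to all of them. The sketch below models only that outline in memory; `Participant` and `two_phase_commit` are hypothetical, and logging, timeouts, and crash recovery, which real coordinators require, are omitted.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Participant:
    """Hypothetical resource manager, e.g. a SQL Server database or a CICS TP."""
    name: str
    can_commit: bool = True
    state: str = "active"

    def prepare(self) -> bool:
        # Phase 1: a real resource manager would harden its work to disk
        # before voting yes.
        self.state = "prepared" if self.can_commit else "voted-no"
        return self.can_commit

    def commit(self) -> None:
        self.state = "committed"

    def abort(self) -> None:
        self.state = "aborted"


def two_phase_commit(participants: List[Participant]) -> bool:
    # Phase 1 (prepare): every participant votes.
    votes = [p.prepare() for p in participants]
    outcome = all(votes)
    # Phase 2 (commit or abort): the same decision goes to everyone, so the
    # unit of work is applied everywhere or nowhere.
    for p in participants:
        if outcome:
            p.commit()
        else:
            p.abort()
    return outcome
```

A single "no" vote, for example from a mainframe TP that cannot complete its update, rolls back the SQL Server side as well.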

COMTI has two visible interfaces:

- The COMTI Management Console

- The Component Builder

The COMTI run-time proxy provides the Automation server interface for each COMTI-created component and communicates with the mainframe programs. The run time does not have a visible interface.

The COMTI Management Console collects information about the user's environment and configures COMTI for the Windows NT Server and for the MVS mainframe TP environment.

The Component Builder (CB) provides application developers with an easy-to-use GUI tool for creating the Windows-based component libraries (.tlb files). The CB also allows developers either to start from the existing COBOL data declarations used in the mainframe CICS and IMS programs or to create those declarations. The CB is a stand-alone tool that does not require any language, such as Visual Basic or C++, to be installed on the same computer.

At run time, COMTI intercepts method invocations on a COMTI component, converts and formats the parameters, and sends them to and receives them from the appropriate mainframe program. To transform the parameter data passed between Automation and the mainframe TP, COMTI uses the component library that the developer created with the CB at design time. COMTI also integrates with MTS and the Microsoft Distributed Transaction Coordinator (DTC) to provide two-phase commit (2PC) transaction support.
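
The interception described above follows the classic proxy pattern. In the hypothetical sketch below, `MainframeProxy` uses Python's `__getattr__` to turn a configured method call into an encode, send, and decode round trip. The `send` callable stands in for the LU 6.2 conversation that SNA Server would carry, and the method table stands in for the information a component library describes; none of these names are COMTI APIs.

```python
from typing import Any, Callable, Dict, Tuple

Encoder = Callable[..., bytes]
Decoder = Callable[[bytes], Any]


class MainframeProxy:
    """Hypothetical generic proxy: method call -> TP request -> return value."""

    def __init__(self, methods: Dict[str, Tuple[str, Encoder, Decoder]],
                 send: Callable[[str, bytes], bytes]) -> None:
        # methods maps a method name to (TP name, encoder, decoder).
        self._methods = methods
        self._send = send

    def __getattr__(self, name: str) -> Callable[..., Any]:
        # Python calls __getattr__ only for names not found normally, so
        # unknown attributes are looked up in the method table.
        methods = self.__dict__.get("_methods", {})
        if name not in methods:
            raise AttributeError(name)
        tp_name, encode, decode = methods[name]

        def invoke(*args: Any) -> Any:
            request = encode(*args)               # client args -> host buffer
            reply = self._send(tp_name, request)  # round trip to the host TP
            return decode(reply)                  # host buffer -> return value
        return invoke


# Fake host for demonstration: echoes the TP name and the upper-cased input.
def fake_send(tp_name: str, request: bytes) -> bytes:
    text = request.decode("cp037")
    return f"{tp_name}:{text.upper()}".encode("cp037")


proxy = MainframeProxy(
    {"GetCustomer": ("CUSTINQ",
                     lambda cust_id: cust_id.encode("cp037"),
                     lambda reply: reply.decode("cp037"))},
    fake_send,
)
```

The client calls `proxy.GetCustomer("c042")` like any local method; only the method table and the transport know that a host program is involved.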

The Component Builder isn't required on a deployment computer. The run-time proxy and COMTI Management Console are required on a deployment computer.

Differences Between Windows and Mainframe Terminology

The term "transaction" is defined differently depending upon the computing environment. A transaction in the MTS environment is a set of actions coordinated by the Distributed Transaction Coordinator (DTC) as an atomic unit of work. In contrast, a transaction in the CICS environment has a more general meaning. Any CICS program that uses APPC with another CICS program is referred to as a "Transaction Program" (TP). APPC is a set of protocols developed by IBM specifically for peer-to-peer networking among mainframes, AS/400s, 3174 cluster controllers, and other intelligent devices. A TP can provide any type of service, including terminal interaction, data transfer, database query, and database updates.

For a TP to communicate directly with another TP using APPC, the two programs must first establish an LU6.2 conversation with each other. LU6.2 is the de facto standard for distributed transaction processing in the mainframe environment and is used by both CICS and IMS subsystems. One program can interact with another program at one of three levels of synchronization:

- Sync Level 0 has no message integrity beyond sequence numbers to detect lost or duplicate messages.

- Sync Level 1 supports the CONFIRM-CONFIRMED verbs that allow end-to-end acknowledgment for client and server.

- Sync Level 2 supports the SYNCPT verb, which provides ACID (atomicity, consistency, isolation, and durability) properties across distributed transactions through two-phase commit (2PC).

Of the three sync levels, only Sync Level 2 provides the same guarantees as an MTS transaction. Thus, in the CICS and IMS environments, the term "transaction program" may or may not imply the use of 2PC; it simply refers to the program itself. Only when a transaction is qualified as Sync Level 2 can the MTS developer and the mainframe developer be sure that they are referring to the same thing. Using the term "Sync Level 2 transaction" therefore helps keep Windows and mainframe developers in agreement.

COMTI supports both Sync Level 0 and Sync Level 2 conversations. If a method invocation is part of a DTC-coordinated transaction, COMTI uses Sync Level 2 to communicate with CICS; if it is not, COMTI uses Sync Level 0.
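
The selection rule in the preceding paragraph reduces to a single branch. In this sketch, `SyncLevel` and `conversation_sync_level` are hypothetical names, and how COMTI actually detects that a call is enlisted in a DTC transaction is not modeled; the caller simply passes a flag.

```python
from enum import IntEnum


class SyncLevel(IntEnum):
    NONE = 0       # Sync Level 0: sequence numbers only
    CONFIRM = 1    # Sync Level 1: CONFIRM/CONFIRMED acknowledgment
    SYNCPOINT = 2  # Sync Level 2: SYNCPT with two-phase commit


def conversation_sync_level(in_dtc_transaction: bool) -> SyncLevel:
    """Pick the LU 6.2 sync level for one method call, per the rule above.

    COMTI does not use Sync Level 1, so CONFIRM appears only for completeness.
    """
    return SyncLevel.SYNCPOINT if in_dtc_transaction else SyncLevel.NONE
```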
