3.3 The DNS Root


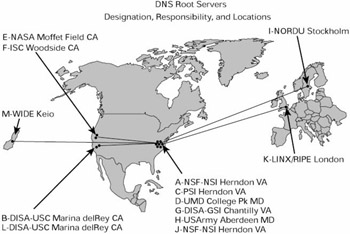

We are now in a better position to assess the technical, economic, and institutional significance of the DNS root. The term 'DNS root' actually refers to two distinct things: the root zone file and the root name servers. The root zone file is the list of top-level domain name assignments, with pointers to primary and secondary name servers for each top-level domain. The root server system, on the other hand, is the operational aspect: the means of distributing the information contained in the root zone file in response to resolution queries from other name servers on the Internet. Currently, the root server system consists of 13 name servers placed in various parts of the world (figure 3.6). The server where the root zone file is first loaded is considered authoritative; the others merely copy its contents. The additional servers make the root zone file available more rapidly to users who are spatially distributed, and provide redundancy in case some root servers lose connectivity or crash.

Figure 3.6: Location of 13 root servers

By now it should be evident that the real significance of the DNS root has to do with the content of the root zone file. The most important thing about the DNS root is that it provides a single, and therefore globally consistent, starting point for the resolution of domain names. As long as all the world's name servers reference the same data about the contents of the root zone, the picture of the name resolution hierarchy in one part of the world will continue to match closely the picture in any other part of the world.

In many ways, the root zone file serves a function identical to the NIC in the old days of hosts.txt. It is still a central point of coordination, and there is still authoritative information that must be distributed from the central coordinator to the rest of the Internet. However, the central authority's workload has been drastically reduced, and as a corollary, so has the dependence of the rest of the Internet on that central point. The center doesn't need to be involved in every name assignment in the world, nor does it need to hold a directory capable of mapping every domain name in the world to the right IP address. It just needs to contain complete and authoritative assignment and mapping information for the top level of the hierarchy. And that information can be cached by any other name server in order to reduce its dependence on the root.
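
The limited job of the root can be illustrated with a minimal sketch. It assumes a toy root zone held in a Python dictionary; the registry server names and addresses are placeholders invented for illustration (the addresses come from a range reserved for documentation), and `root_referral` is a hypothetical helper, not part of any DNS software. The point is only that the root answers with a referral for the top level, never with the final address.

```python
# Toy root zone: each top-level domain maps to the name servers that are
# authoritative for it. All names and addresses are illustrative placeholders.
ROOT_ZONE = {
    "com": [("ns1.com-registry.example", "192.0.2.1"),
            ("ns2.com-registry.example", "192.0.2.2")],
    "org": [("ns1.org-registry.example", "192.0.2.11")],
    "uk": [("ns1.uk-registry.example", "192.0.2.21")],
}


def top_level_domain(name: str) -> str:
    """Return the rightmost label of a domain name, lowercased."""
    return name.rstrip(".").split(".")[-1].lower()


def root_referral(name: str):
    """Answer as a root server would: with a referral to the name servers
    for the name's top-level domain, not with the address of the name."""
    return ROOT_ZONE.get(top_level_domain(name))  # None if the TLD is not delegated


print(root_referral("www.example.com"))
print(root_referral("host.example.nosuchtld"))
```

Everything below the top level (which host `www.example.com` actually is) must be learned from the servers named in the referral, which is why the root's directory can stay small.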

3.3.1 The Root and Internet Stability

The root server system, though vital to the domain name resolution process, is not that critical to the day-to-day operation of the Internet. As noted, all the root servers do is answer questions about how to find top-level domains. Reliance on the root is diminished by caching and zone transfers between primary and secondary name servers. As caches accumulate, more name-to-address correlation can be performed locally. With the sharing of zone files, many names can be resolved successfully without using the root even when the primary name server is down. Indeed, the root zone file itself can be downloaded and used locally by any name server in the world. The data are not considered proprietary or sensitive (yet), and with fewer than 300 top-level domain names in service, it is not even a very large file. The name servers of many large ISPs directly store the IP addresses of all top-level domain name servers, so that they can bypass the root servers in resolving names. Simple scripts in the Perl programming language allow them to track any changes in the TLD name servers.
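
The kind of change tracking such scripts perform can be sketched as a comparison of two snapshots of top-level domain delegations. The sketch below is written in Python rather than Perl, and everything in it is an assumption made for illustration: the `diff_delegations` helper, the snapshot layout (TLD mapped to a set of name server addresses), and the placeholder addresses.

```python
def diff_delegations(old, new):
    """Compare two snapshots that map a TLD to its set of name server addresses."""
    added = sorted(set(new) - set(old))        # TLDs that appeared
    removed = sorted(set(old) - set(new))      # TLDs that disappeared
    changed = sorted(t for t in set(old) & set(new) if old[t] != new[t])
    return {"added": added, "removed": removed, "changed": changed}


# Hypothetical snapshots taken on two consecutive days.
yesterday = {"com": {"192.0.2.1", "192.0.2.2"}, "org": {"192.0.2.11"}}
today = {
    "com": {"192.0.2.1", "192.0.2.3"},  # one .com server was renumbered
    "org": {"192.0.2.11"},
    "info": {"192.0.2.31"},             # a new top-level domain
}

print(diff_delegations(yesterday, today))
# {'added': ['info'], 'removed': [], 'changed': ['com']}
```

An operator who stores TLD server addresses locally would need to apply exactly these differences to keep bypassing the root safely.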

To better understand the role of the root, it is useful to imagine that all 13 of the root servers were wiped off the face of the earth instantaneously.

What would happen to the Internet? There would be a loss of functionality, but it would not be sudden, total, and catastrophic. Instead, the absence of a common root would gradually impair the ability of computers on the Internet to resolve names, and the problem would get progressively worse as time passed. Users might first notice an inability to connect to Web sites at top-level domains they hadn't visited before. Lower-level name servers need to query the root to locate TLD name servers that have not been used before by local users. Worse, cached DNS records contain a timing field (the time to live) that causes the record to be discarded after a set period, in order to prevent name servers from relying on obsolete information. When a cached record expires, the name server must query the root to be able to resolve the name. If the root is not there, the name server must either rely on potentially obsolete records or fail to resolve the name. Another major problem would occur if any changes were made in the IP addresses or names of top-level domain name servers, or if new top-level domains were created. Unless they were published in the root, these changes might not be distributed consistently to the rest of the Internet, and names under the affected top-level domains might not resolve.
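
The gradual character of the failure can be made concrete with a small simulation. This is a sketch under stated assumptions, not a model of real resolver software: `TldCache` is a hypothetical class, lifetimes are counted in whole days, the addresses are placeholders, and the cache simply fails on an expired record rather than falling back on obsolete data.

```python
class TldCache:
    """A local name server's cache of TLD referrals, each with an expiry day."""

    def __init__(self, root_zone):
        self.root_zone = root_zone      # what the root would answer: tld -> (servers, ttl_days)
        self.root_available = True
        self.entries = {}               # tld -> (servers, expires_on_day)

    def lookup(self, tld, now):
        cached = self.entries.get(tld)
        if cached is not None and now < cached[1]:
            return cached[0]            # record is still fresh, no root query needed
        if not self.root_available:
            return None                 # expired or never seen, and no root to ask
        record = self.root_zone.get(tld)
        if record is None:
            return None                 # the root does not delegate this TLD
        servers, ttl_days = record
        self.entries[tld] = (servers, now + ttl_days)
        return servers


root_zone = {"com": (["192.0.2.1"], 2), "org": (["192.0.2.11"], 2)}
cache = TldCache(root_zone)

day0_com = cache.lookup("com", now=0)   # fetched from the root, cached for 2 days
cache.root_available = False            # the root servers vanish
day1_com = cache.lookup("com", now=1)   # still answered from the cache
day1_org = cache.lookup("org", now=1)   # never visited before: fails at once
day3_com = cache.lookup("com", now=3)   # cached record has expired: now fails too

print(day0_com, day1_com, day1_org, day3_com)
```

The unvisited domain fails immediately, while the familiar one keeps working until its cached record runs out, which is the progressive degradation described above.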

Over time, the combination of new names, cache expiration, and a failure to keep up with changes in the configuration of top-level domain servers would confine reliable name resolution to local primaries and secondaries. There would be a gradual spread of entropy throughout the Internet's domain name system. The DNS would still work, but it would become balkanized.

That hypothetical disaster scenario, however, does not take into account the possibility that Internet users would recreate a common root server system or some other form of coordination. Users and service providers are not going to stand idly by while the tremendous value of global Internet connectivity withers away. The root servers are just name servers, after all, and there are thousands of high-performance name servers scattered throughout the global Internet. The critical information held by the root name servers (the root zone file) is not that hard to get. So it is likely that some organizations, most likely larger Internet service providers or major top-level domain name registries, would step into the breach. They could install stored copies of the root zone in their own name servers and offer substitute root name service. Operators of top-level domain name registries would have a powerful incentive to provide the necessary information about themselves to these new root servers, because otherwise their domains would be cut off from the global Internet. The most costly aspect of this transition would be getting the world's lower-level name server operators to reconfigure their software to point to the new name servers. BIND and other DNS software contain files with default values for the IP addresses of the root servers. New IP addresses would have to be manually entered into the DNS configuration files of hundreds of thousands of local name servers. That could take some time, and the process would likely result in some confusion and lack of coordination. Eventually, however, a new root could be established and functional.
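
What a local operator would be editing can be sketched with a simplified hints file. The record layout below (owner, lifetime, type, data) is only loosely modeled on the files that DNS software ships with; the substitute server names and addresses are invented placeholders, and `parse_hints` is a hypothetical helper that ignores record classes and IPv6 addresses, which a real file may contain.

```python
# Simplified root hints pointing at a hypothetical substitute root.
SAMPLE_HINTS = """\
; illustrative values only
.                            3600000  NS  a.substitute-root.example.
a.substitute-root.example.   3600000  A   192.0.2.53
.                            3600000  NS  b.substitute-root.example.
b.substitute-root.example.   3600000  A   198.51.100.53
"""


def parse_hints(text):
    """Return {root server name: IPv4 address} from simplified hint records."""
    servers, addresses = [], {}
    for line in text.splitlines():
        line = line.split(";", 1)[0].strip()   # drop comments and blank lines
        if not line:
            continue
        owner, _ttl, rtype, rdata = line.split()
        if owner == "." and rtype == "NS":
            servers.append(rdata)              # a server for the root zone
        elif rtype == "A":
            addresses[owner] = rdata           # that server's address
    return {name: addresses.get(name) for name in servers}


print(parse_hints(SAMPLE_HINTS))
```

Switching roots amounts to replacing a handful of such records, which is technically trivial; the cost lies in getting hundreds of thousands of operators to make the same replacement.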

If one imagines hundreds of thousands of local name server operators reconfiguring their DNS files to point to new sources of root information, the most interesting questions have to do with coordination and authority, not technology. Who would emerge as the operators of the new root servers? On what basis would they convince the world's ISPs and name server operators to point to them rather than to many other possible candidates? Would the world's name servers converge on a single, coordinated set of new root servers, or would competing groups emerge? Those questions are explored in the next section, where competing roots are discussed.

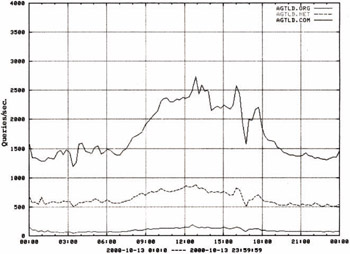

In short, DNS is fairly robust. The root server system is not a single point of failure; the hierarchical structure of the name space was designed precisely to avoid such a thing. In fact, the most serious potential for instability comes not from elimination of the root, but from software glitches that might cause the root servers or top-level domain servers to publish corrupted data. The only serious stability problems that have occurred in DNS have originated with the enormous .com zone file, a single domain that holds over 60 percent of the world's domain name registrations and probably accounts for a greater portion of DNS traffic. [11]

Figure 3.7 shows the number of queries per second received by a name server for .com, .net, and .org over the course of a single day (October 13, 2000). At its peak, the .com zone was queried about 2,500 times per second.

Figure 3.7: Number of .com, .net, and .org queries per second on a single day

3.3.2 Competing DNS Roots?

DNS was designed on the assumption that there would be only one authoritative root zone file. That method of ensuring technical consistency creates an institutional problem. If there can only be one zone file, who controls its content? Who decides what top-level domain names are assigned, and to whom? In effect, some person or organization must have a monopoly on this vital decision. If top-level domain assignments are economically valuable, then the decision about who gets one and who doesn't can be contentious. Monopoly control of top-level domain name assignments can also provide the leverage needed to enforce regulatory policies. Assignments can be granted only to those who agree to meet certain regulatory obligations, taxes, or fees. And because each lower level of the domain name assignment hierarchy must get its names from the administrator of a top-level domain, the regulations and taxes imposed on the top-level assignments can be passed all the way down. So political power, as well as economic benefit, is implicated in decisions about who or what is published in the root zone.

Some people believe that alternative or competing roots are the solution to many of the policy problems posed by ICANN. Others contend that such competition is impossible or undesirable. [12] That debate can be clarified by starting with a more precise definition of competition in this area and by applying known concepts from economics.

Competition at the root level means competition for the right to define the contents of the root zone file. More precisely, it means that organizations compete for the right to have their definition of the content of the root zone recognized and accepted by the rest of the Internet's name servers. As such, competition over the definition of the root zone is a form of standards competition. A dominant provider of root zone content (the U.S. Commerce Department and its contractor ICANN) publishes a particular set of top-level domains, while competing root operators strive to introduce additions or variations that will attract the support of other name server operators.

Economic theory has a lot of interesting things to say about how standards competition works. In standardization processes, user choices are affected by the value of compatibility with other users, not just by the technical and economic features of the product or service itself. A simple example would be the rivalry between the IBM and Apple computer platforms in the mid-1980s. During that time the two computer systems were almost completely incompatible. Thus, a decision to buy a personal computer had to be based not only on the intrinsic features of the computer itself but also on what platform other people were using. If all of a consumer's co-workers and friends were using Macs, for example, a buyer's choice of an IBM-compatible PC would lead to difficulties in exchanging files or communicating over a network.

There are many other historical examples of competition based on compatibility. Studies of competing railroad gauges (Friedlander 1995), alternative electric power grid standards (David and Bunn 1988), separate telegraph systems (Brock 1981), non-interconnected telephone networks (Mueller 1997), and alternative broadcast standards (Farrell and Shapiro 1992; Besen 1992) all have shown that the need for compatibility among multiple users led to convergence on a single standard or network, or to interconnection arrangements among formerly separate systems.

This feature of demand is called the network externality. It means that the value of a system or service to its users tends to increase as other users adopt the same system or service. A more precise definition characterizes network externalities as demand-side economies of scope that arise from the creation of complementary relationships among the components of a system (Economides 1996).

One of the distinctive features of standards competition is the need to develop critical mass. A product with network externalities must pass a minimum threshold of adoption to survive in the market. Another key concept is known as tipping or the bandwagon effect. This means that once a product or service with network externalities achieves critical mass, what Shapiro and Varian (1998) call 'positive feedback' can set in. Users flock to one of the competing standards in order to realize the value of universal compatibility, and eventually most users converge on a single system. However, network externalities can also be realized by the development of gateway technologies that interconnect or make compatible technologies that formerly were separate and distinct.

What does all this have to do with DNS? The need for unique name assignments and universal resolution of names creates strong network externalities in the selection of a DNS root. If all ISPs and users rely on the same public name space (the same delegation hierarchy), it is likely that all name assignments will be unique, and one can be confident that one's domain name can be resolved by any name server in the world. Thus, a public name space is vastly more valuable as a tool for internetworking if all other users also rely on it or coordinate with it. Network administrators thus have a strong tendency to converge on a single DNS root.

Alternative roots face a serious chicken-and-egg problem when trying to achieve critical mass. The domain name registrations they sell have little value to an individual user unless many other users utilize the same root zone file information to resolve names. But no one has much of an incentive to point at an alternative root zone when it has so few users. As long as other people don't use the same root zone file, the names from an alternative root will be incompatible with other users' implementation of DNS. Other users will be unable to resolve the name.
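
The chicken-and-egg problem can be illustrated with a simple threshold model of adoption. This is a minimal sketch under invented assumptions, not an estimate of real behavior: each name server operator is given a threshold, the share of other operators that must already point to the alternative root before that operator will do so, and the thresholds are spread evenly between 10 and 50 percent. The `simulate` function and all parameter values are hypothetical; only the starting share of 0.003 echoes the roughly 0.3 percent estimated for alternative roots in 2000.

```python
def simulate(initial_share, thresholds, rounds=50):
    """Iterate adoption: an operator points to the alternative root only if
    the current share of adopters is at least that operator's threshold."""
    share = initial_share
    for _ in range(rounds):
        next_share = sum(t <= share for t in thresholds) / len(thresholds)
        if next_share == share:
            break                       # adoption has settled
        share = next_share
    return share


# 1,001 hypothetical operators with thresholds spread evenly from 0.10 to 0.50.
n = 1001
thresholds = [0.1 + 0.4 * i / (n - 1) for i in range(n)]

below_critical_mass = simulate(0.003, thresholds)   # starts with a 0.3 percent share
above_critical_mass = simulate(0.20, thresholds)    # starts with a 20 percent share

print(below_critical_mass, above_critical_mass)
```

With these assumed thresholds the critical mass lies at about one-sixth of all operators: a root that starts below it loses even its early adopters, while one that starts above it tips toward universal adoption.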

Network externalities are really the only barrier to all-out competition over the right to define the root zone file. A root server system is just a name server at the top of the DNS hierarchy. There are hundreds of thousands of name servers being operated by various organizations on the Internet. In principle, any one of them could declare itself a public name space, assign top-level domain names to users, and either resolve the names or point to other name servers that resolve them at lower levels of the hierarchy. The catch, however, is that names in an alternative space are not worth much unless many other name servers on the Internet recognize its root and point their name servers at it.

There already are, in fact, several alternative root server systems (table 3.1). Most were set up to create new top-level domain names (see chapter 6). Most alternative roots have been promoted by small entrepreneurs unable to establish critical mass; in the year 2000 only an estimated 0.3 percent of the world's name servers pointed to them. [13] That changed when New.net, a company with venture capital financing, created 20 new top-level domains in the spring of 2001 and formed alliances with mid-sized Internet service providers to support the new domains. [14] New.net's top-level domains may be visible to about 20 percent of the Internet users in the United States.

| Name | TLDs Claimed/Supported | Conflicts with |
|---|---|---|
| ICANN-U.S. Commerce Department root | ISO-3166-1 country codes, .com, .net, .org, .edu, .int, .mil, .arpa, .info, .biz, .coop, .aero, .museum, .pro, .name | Pacific Root (.biz) |
| Open NIC | .glue, .geek, .null, .oss, .parody, .bbs | |
| ORSC | Supports ICANN root, Open NIC, Pacific Root, and most New.net names plus a few hundred of its own, IOD's .web | Name.space |
| Pacific Root | 27 names, including .biz, .food, .online | ICANN (.biz) |
| New.net | .shop, .mp3, .inc, .kids, .sport, .family, .chat, .video, .club, .hola, .soc, .med, .law, .travel, .game, .free, .ltd, .gmbh, .tech, .xxx | Name.space |
| Name.space | 548 generic names listed | New.net, most other alternative roots |
| CN-NIC | Chinese-character versions of 'company,' 'network,' and 'organization' | |
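
The conflicts column can be reproduced mechanically for the roots whose names are listed individually. The sketch below uses only the top-level domains spelled out in the table (country codes, the unlisted Pacific Root and Name.space names, and ORSC's composite zone are left out), and `find_conflicts` is a hypothetical helper. It treats any string claimed by two roots as a conflict, which is an assumption: two roots that delegate the same name to the same registry would not actually collide.

```python
from itertools import combinations

# Top-level domains listed by name in the table (without the leading dot).
ROOTS = {
    "ICANN": {"com", "net", "org", "edu", "int", "mil", "arpa", "info",
              "biz", "coop", "aero", "museum", "pro", "name"},
    "Open NIC": {"glue", "geek", "null", "oss", "parody", "bbs"},
    "Pacific Root": {"biz", "food", "online"},
    "New.net": {"shop", "mp3", "inc", "kids", "sport", "family", "chat",
                "video", "club", "hola", "soc", "med", "law", "travel",
                "game", "free", "ltd", "gmbh", "tech", "xxx"},
}


def find_conflicts(roots):
    """Return {(root_a, root_b): [shared TLDs]} for every pair that overlaps."""
    conflicts = {}
    for (a, tlds_a), (b, tlds_b) in combinations(roots.items(), 2):
        shared = tlds_a & tlds_b
        if shared:
            conflicts[(a, b)] = sorted(shared)
    return conflicts


print(find_conflicts(ROOTS))
# {('ICANN', 'Pacific Root'): ['biz']}
```

A name server pointed at one of two conflicting roots will resolve names under .biz differently from a server pointed at the other, which is the incompatibility that uniqueness in the root zone is meant to prevent.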

An alternative root supported by major Internet industry players, on the other hand, would be even stronger. An America Online, a Microsoft, a major ISP such as MCI WorldCom, all possess the economic and technical clout to establish an alternative DNS root should they choose to do so. If the producers of Internet browsers, for example, preconfigured their resolvers to point to a new root with an alternative root zone file that included or was compatible with the legacy root zone, millions of users could be switched to an alternative root. It is also possible that a national government with a large population that communicated predominantly with itself could establish an alternative root zone file and require, either through persuasion or regulation, national ISPs to point at it. Indeed, the People's Republic of China is offering new top-level domains based on Chinese characters on an experimental basis.

Why would anyone want to start an alternative root? Defection from the current DNS root could be motivated by the following:

- Not enough new top-level domains

- Technological innovation, such as non-Roman character sets, or other features

- Political resistance to the policies imposed on registries and domain name registrants by the central authority

Technological innovation almost inevitably leads to standards competition in some form or another. Furthermore, monopolies have a tendency to become unaccountable, overly expensive, or unresponsive. Competition has a very good record of making monopolies more responsive to technical and business developments that they would otherwise ignore. So even when competitors fail to displace the dominant standard or network, they may succeed in substantially improving it. New.net, for example, may prompt ICANN to speed up its introduction of new top-level domains.

Recall, however, that the value of universal connectivity and compatibility on the Internet is immense. Those who attempt to establish alternative roots have powerful incentives to retain compatibility with the existing DNS root and offer something of considerable value to move industry actors away from the established root. Whether the value that can be achieved by root competition is worth the cost in terms of disruption and incompatibility is beyond the scope of this discussion. [15]

[11] On August 23, 2000, four root name servers (b.root-servers.net, g.root-servers.net, j.root-servers.net, and m.root-servers.net) had no name server records for the entire .com zone. The problem occurred because the BIND software interacted with Network Solutions' zone generation procedures in an unexpected way, causing the name server to remove the .com zone delegation information from the root zone held in memory. This means that the entire .com zone did not exist for about 4/13 of all the resolvers in the world that needed to refresh their .com pointers during the interval in question.

[12] RFC 2826 (2000), 'IAB Technical Comment on the Unique DNS Root,' is often cited in the policy debates over alternative roots as if it were the last word on the subject. The basic point of the statement is simply that 'there must be a generally agreed single set of rules for the root.' This is a good starting point for policy discussion. However, to assert that the root zone needs to be coordinated is both uncontroversial and not dispositive of the policy problem posed by competing roots. Advocates of a 'single authoritative root' need to face the reality that portions of the Internet community can and do defect from or supplement the so-called authoritative root. Asserting that a particular root server system 'should be' authoritative and singular does not make it so. One can agree on the need for coordination at the root level without necessarily agreeing on who is the sole or proper source of those rules. Nor does the general need for a single set of rules eliminate the legitimacy and benefit of competition over what those rules should be.

[13] Joe Baptista, 2000, root server estimates.

[14] Karen Kaplan, 'Start-up Offers Alternative System for Net Addresses,' Los Angeles Times, March 6, 2001.

[15] But I will give my personal opinion. The value added by alternative roots that only offer new top-level domains is minimal relative to the compatibility risks unless some other innovative functionalities are added. Large providers with the ability to overcome the critical mass problem are more likely to choose strategies that work over or around DNS rather than replacing it.

