7.3 Component Software

Most new software is constructed on a base of existing software (see section 4.2). There are a number of possibilities as to where the existing software comes from, whether it is modified or used without modification, and how it is incorporated into the new software. Just as end-users face the choice of making, buying, licensing, or subscribing to software, so do software suppliers. This is an area where the future industry may look very different (Szyperski 1998).

7.3.1 Make vs. License Decisions

Understanding the possible sources of an existing base of software leads to insights into the inner workings of the software creation industry. Although the possibilities form a continuum, the three distinct points listed in table 7.4 illustrate the range of possibilities. In this table, a software development project supports the entire life cycle of one coherent system or program through multiple versions (see section 5.1.2).

| Development Methodology | Description |
|---|---|
| Handcrafting | Source code from an earlier stage of the project is modified and upgraded to repair defects and add new features. |
| Software reuse | In the course of one project, modules are developed while anticipating their reuse in future projects. In those future projects, source code of existing modules from different projects is modified and integrated. |
| Component assembly | A system is assembled by configuring and integrating preexisting components. These components are modules (typically available only in object code) that cannot be modified except in accordance with built-in configuration options, often purchased from another firm. |

The handcrafting methodology was described in section 4.2. In its purest form, all the source code for the initial version is created from scratch for the specific needs of the project and is later updated through multiple maintenance and version cycles. Thus, the requirements for all the modules in such a handcrafted code base are derived by decomposition of a specific set of system requirements.

In the intermediate case, software reuse (Frakes and Gandel 1990; Gaffney and Durek 1989), source code is consciously shared among different projects with the goal of increasing both organizational and project productivity (recall the distinction made in section 4.2.3). Thus, both the architecture and requirements for at least some individual modules on one project anticipate the reuse of those modules in other projects. A typical example is a product line architecture (Bosch 2000; Jacobson, Griss, and Jonsson 1997), where a common architecture with some reusable modules or components is explicitly shared (variants of the same product are an obvious case; see section 5.1.2). To make it more likely that the code will be suitable in other projects, source code is made available to the other projects, and modifications to that source code to fit distinctive needs of the other project are normally allowed. Because it is uncommon to share source code outside an organization, reuse normally occurs within one development organization. A notable exception is contract custom development, where one firm contracts with another firm to develop modules for specific requirements and retains ownership of the outcome of that development, including the source code.

Example A hypothetical example illustrates software reuse. The development organization of Friendly Bank may need a module that supports the acquisition, maintenance, and accessing of information relative to one customer. This need is first encountered during a project that is developing and maintaining Friendly's checking account application. However, Friendly anticipates future projects to develop applications to manage a money market account and later a brokerage account. The requirements of the three applications have much in common with respect to customer information, so the first project specifically designs the customer module, trying to meet the needs of all three applications. Later, the source code is reused in the second and third projects. In the course of those projects, new requirements are identified that make it necessary to modify the code to meet specific needs, so there are now two or three variants of this customer module to maintain.

Reused modules can be used in multiple contexts, even simultaneously. This is very different from the material world, where reuse carries connotations of recycling, and simultaneous uses of the same entity are generally impossible. The difference between handcrafted and reusable software is mostly one of likelihood or adequateness. If a particular module has been developed with a special purpose in mind, and that purpose is highly specialized or the module is of substantial but context-specific complexity, then it is unlikely to be reusable in another context. Providing a broad set of configuration options that anticipates other contexts is a way to encourage reuse.

The third option in table 7.4 is component assembly (Hopkins 2000; Pour 1998; Szyperski 1998). In this extreme, during the course of a project there is no need to implement modules. Rather, the system is developed by taking existing modules (called components), configuring them, and integrating them. These components cannot be modified but are used as is; typically, they are acquired from a supplier rather than from another project within the same organization. To enhance its applicability to multiple projects without modification, each component will typically have many built-in configuration options.

Example The needs of Friendly Bank for a customer information module are hardly unique to Friendly. Thus, a supplier software firm, Banking Components Inc., has identified the opportunity to develop a customer information component that is designed to be general and configurable; in fact, it hopes this component can meet the needs of any bank. It licenses this component (in object code format) to any bank wishing to avoid developing this function itself. A licensing bank assembles this component into any future account applications it may create.
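As a rough illustration of how built-in configuration options let one unmodified component serve several applications, the Python sketch below models a customer information component. Every name in it (`CustomerInfoConfig`, `CustomerInfoComponent`, the option names, the methods) is hypothetical and invented for this sketch; it does not describe any real product.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple


@dataclass(frozen=True)
class CustomerInfoConfig:
    """Built-in configuration options (hypothetical names and defaults)."""
    require_tax_id: bool = False
    max_accounts_per_customer: int = 10


class CustomerInfoComponent:
    """Stand-in for a licensed component: it is configured, never edited."""

    def __init__(self, config: CustomerInfoConfig) -> None:
        self._config = config
        self._records: Dict[str, dict] = {}

    def add_customer(self, customer_id: str, name: str,
                     tax_id: Optional[str] = None) -> None:
        if self._config.require_tax_id and tax_id is None:
            raise ValueError("this configuration requires a tax ID")
        self._records[customer_id] = {"name": name, "tax_id": tax_id, "accounts": []}

    def link_account(self, customer_id: str, account_no: str) -> None:
        accounts = self._records[customer_id]["accounts"]
        if len(accounts) >= self._config.max_accounts_per_customer:
            raise ValueError("account limit reached for this configuration")
        accounts.append(account_no)

    def accounts_of(self, customer_id: str) -> Tuple[str, ...]:
        return tuple(self._records[customer_id]["accounts"])


# Two applications assemble the same component with different configurations.
checking = CustomerInfoComponent(CustomerInfoConfig(max_accounts_per_customer=2))
brokerage = CustomerInfoComponent(CustomerInfoConfig(require_tax_id=True))
```

Each application gets different behavior without a second variant of the code, which is the property that separates this approach from the forked customer modules in the reuse example.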

Although the software community has seen many technologies, methodologies, and processes aimed at increasing productivity and software quality, the consensus today is that component software is the most promising approach. It creates a supply chain for software, in which one supplier assembles components acquired from other suppliers into its software products. Competition is shifted from the system level to the component level, resulting in improved quality and cost options.

It would be rare to find any of these three options used exclusively; most often, they are combined. One organization may find that available components can partly meet the needs of a particular project, so it supplements them with handcrafted modules and modules reused from other projects.

7.3.2 What Is a Component?

Roughly speaking, a software component is a reusable module suitable for composition into multiple applications. The difference between software reuse and component assembly is subtle but important. There is no universal agreement in the industry or literature as to precisely what the term component means (Brown et al. 1998; Heineman and Councill 2001). However, the benefits of components are potentially so great that it is worthwhile to strictly distinguish components from other modules and to base the definition on the needs of stakeholders (provisioners, operators, and users) and the workings of the marketplace rather than on the characteristics of the current technology (Szyperski 1998). This leads us to the properties listed in table 7.5. Although some may still call a module that fails to satisfy one or more of these properties a component, important benefits would be lost.

| Property | Description | Rationale |
|---|---|---|
| Multiple-use | Able to be used in multiple projects. | Share development costs over multiple uses. |
| Non-context-specific | Designed independently of any specific project and system context. | By removing dependence on system context, more likely to be general and broadly usable. |
| Composable | Able to be composed with other components. | High development productivity achieved through assembly of components. |
| Encapsulated | Only the interfaces are visible and the implementation cannot be modified. | Avoids multiple variations; all uses of a component benefit from a common maintenance and upgrade effort. |
| Unit of independent deployment and versioning | Can be deployed and installed as an independent atomic unit and later upgraded independently of the remainder of the system.[a] | Allows customers to perform assembly and to mix and match components even during the operational phase, thus moving competition from the system to the component level. |

[a] Traditionally, deployment and installation have been pretty much the same thing. However, Sun Microsystems' EJB (a component platform for enterprise applications) began distinguishing deployment and installation, and other component technologies are following. Deployment consists of readying a software subsystem for a particular infrastructure or platform, whereas installation readies a subsystem for a specific set of machines. See Szyperski (2002a) for more discussion of this subtle but important distinction.

One of the important implications of the properties listed in table 7.5 is that components are created and licensed to be used as is. All five properties contribute to this, and indeed encapsulation enforces it. Unlike a monolithic application (which is also purchased and used as is), a component is not intended to be useful in isolation; rather its utility depends on its composition with other components (or possibly other modules that are not components). A component supplier has an incentive to reduce context dependence in order to increase the size of the market, balancing that property against the need for the component to add value to the specific context. An additional implication is that in a system using components (unlike a noncomponentized system) it should be possible during provisioning to mix and match components from different vendors so as to move competition from the system level down to the subsystem (component) level. It should also be possible to replace or upgrade a single component independently of the remainder of a system, even during the operation phase, thus reducing lock-in (see chapter 9) and giving greater flexibility to evolve the system to match changing or expanding requirements.[8] In theory, the system can be gracefully evolved after deployment by incrementally upgrading, replacing, and adding components.
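The mix-and-match and independent-replacement implications can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the interface `InterestCalculator`, the two vendor classes, and the interest rates are all hypothetical, and a real component platform would enforce encapsulation far more strictly than a Python class does.

```python
from typing import Protocol


class InterestCalculator(Protocol):
    """Published interface: the only thing the rest of the system sees."""

    def monthly_interest(self, balance: float) -> float: ...


class VendorAInterest:
    """Component from a hypothetical first vendor: flat rate."""

    def monthly_interest(self, balance: float) -> float:
        return balance * 0.01


class VendorBInterest:
    """Component from a hypothetical second vendor: tiered rate."""

    def monthly_interest(self, balance: float) -> float:
        return 0.0 if balance < 1000 else balance * 0.02


class AccountSystem:
    """The assembled system depends on the interface, not on a vendor."""

    def __init__(self, calculator: InterestCalculator) -> None:
        self._calculator = calculator

    def replace_calculator(self, calculator: InterestCalculator) -> None:
        # Swap one component during operation; nothing else changes.
        self._calculator = calculator

    def statement(self, balance: float) -> float:
        return round(balance + self._calculator.monthly_interest(balance), 2)


system = AccountSystem(VendorAInterest())
```

Calling `system.replace_calculator(VendorBInterest())` changes the system's behavior without touching `AccountSystem`, which is the sense in which competition moves from the system level to the component level.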

While a given software development organization can and should develop and use its own components, a rather simplistic but conceptually useful way to distinguish the three options in table 7.4 is by industrial context (see table 7.6). This table distinguishes modules used within a single project (handcrafted), within multiple projects in the same organization (reusable), and within multiple organizations (component).

| Methodology Type | Industrial Context | Exceptions |
|---|---|---|
| Handcrafted | Programmed and maintained in the context of a single project. | Development and maintenance may be outsourced to a contract development firm. |
| Reusable | Programmed anticipating the needs of multiple projects within a single organization; typically several versions are maintained within each project. | A common module may be reused within different contexts of a single project. Development and maintenance of reusable modules may be outsourced. |
| Component | Purchased and used as is from an outside software supplier. | Components may be developed within an organization and used in multiple projects. |

Component assembly should be thought of as hierarchical composition (much like hierarchical decomposition except moving bottom-up rather than top-down).[9] Even though a component as deployed is atomic (encapsulated and displaying no visible internal structure), it may itself have been assembled from components by its supplier, those components having been available internally or purchased from other suppliers.[10] During provisioning, a component may be purchased as is, configured for the specific platform and environment (see section 4.4.2), and assembled and integrated with other components. As part of the systems management function during operation, the component may be upgraded or replaced, or new components may be added and assembled with existing components to evolve the system.

A component methodology requires considerably more discipline than reuse. In fact, it is currently fair to say that not all the properties listed in table 7.5 have been achieved in practice, at least not on a widespread and reproducible basis. Components are certainly more costly to develop and maintain than handcrafted or reusable modules. A common rule of thumb states that reusable software requires several times as much effort as similar handcrafted software, and components considerably more. As a corollary, a reusable module needs to be used in a few separate projects to break even, and a component in even more.
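The break-even reasoning can be made concrete with a small calculation. The model below is an assumption introduced for illustration, not a formula from the text: handcrafting a module n times costs n units of effort, while building it once for multiple use costs k units up front plus a fraction a of a unit each time it is integrated. Multiple use then pays off once n ≥ k / (1 − a). The function name and the sample multipliers are hypothetical.

```python
import math


def break_even_uses(cost_multiplier: float, integration_fraction: float = 0.0) -> int:
    """Smallest number of uses at which one multiuse module costs no more
    than handcrafting the module separately for each use.

    cost_multiplier: effort to build the multiuse module, in units of one
        handcrafted module (k in the lead-in; an assumed input).
    integration_fraction: per-use integration effort, in the same units
        (a in the lead-in; must be in [0, 1)).
    """
    if cost_multiplier <= 0:
        raise ValueError("cost_multiplier must be positive")
    if not 0.0 <= integration_fraction < 1.0:
        raise ValueError("integration_fraction must be in [0, 1)")
    # n >= k + n * a  is equivalent to  n >= k / (1 - a)
    return math.ceil(cost_multiplier / (1.0 - integration_fraction))


# Hypothetical multipliers: a reusable module versus a costlier component.
reusable_break_even = break_even_uses(3.0)
component_break_even = break_even_uses(10.0, integration_fraction=0.5)
```

Under this simple model the break-even count grows with both the up-front multiplier and the per-use integration effort, which matches the observation that components need more uses than reusable modules to pay off.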

If their use is well executed, the compensatory benefits of components can be substantial. From an economic perspective, the primary benefit is the discipline of maintenance and upgrade of a single component implementation even as it is used in many projects and organizations. Upgrades of the component to match the expanding needs of one user can benefit other users as well.[11] Multiple use, with the implicit experience and testing that result, and concentrated maintenance can minimize defects and improve quality. Components also offer a promising route to more flexible deployed systems that can evolve to match changing or expanding needs.

Considered purely in economic terms (neglecting technical and organizational considerations), incentives strongly suggest that components are more promising than reuse as a way to increase software development productivity, and that components will more likely be purchased from the outside than developed inside an organization. Project managers operate under strict budget and schedule constraints, and developing either reusable or multiuse modules is likely to compromise those constraints. Compensatory incentives are very difficult to create within a given development organization. While organizations have tried various approaches to requiring or encouraging managers to consider reuse or multiple uses, their effectiveness is the exception rather than the rule.

On the other hand, components are quite consistent with organizational separation. A separate economic entity looks to maximize its revenue and profits and to maximize the market potential of software products it develops. It thus has an economic incentive to seek the maximum number of uses, and the extra development cost is expected to be amortized over increased sales. Where reuse allows the forking of different variations on a reused module to meet the specific needs of future projects, many of the economies of scale and quality advantages inherent in components are lost. It is hardly surprising that software reuse has been disappointing in practice, while many hold out great hope for component software.

There is some commonality between the ideas of component and infrastructure. Like components, infrastructure is intended for multiple uses, is typically licensed from another company, is typically used as is, and is typically encapsulated. Infrastructure (as seen by the application developer and operator) is large-grain, whereas components are typically smaller-grain. The primary distinction between components and infrastructure lies in composability. Infrastructure is intended to be extended by adding applications. This is a weak form of composability, because it requires that the infrastructure precede the applications, and the applications are developed specifically to match the capabilities of an existing infrastructure. Similarly, an application is typically designed for a specific infrastructure context and thus lacks the non-context-specific property. Components, on the other hand, embody a strong form of composability in that the goal is to enable the composition of two (or more) components that are developed completely independently, neither with prior knowledge of the other. Achieving this is a difficult technical challenge.

7.3.3 Component Portability

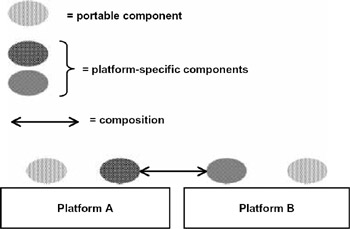

The issue of portability arises with components as well as other software (see figure 7.10). A portable component can be deployed and installed on more than one platform. But it is also possible for distributed components to compose even though they are executing on different platforms. These are essentially independent properties; a component technology can support one or the other or both of these properties, often limited to specific platforms and environments. Almost all component technologies today support distributed components that execute on the same type of platform.

Figure 7.10: Components can be portable, or they can compose across platforms, or both.

Insisting on unconditional portability undesirably limits innovation in platforms (see section 4.4.3) but also desirably increases the market for a portable component or eliminates the cost of developing variants for different platforms. Cross-platform distributed component composition offers the advantages of portability (components can participate in applications predominantly executing on different platforms) without its limitations (each component can take full advantage of platform-specific capabilities). Here the trade-off is one of efficiency: performance suffers when crossing software and hardware platform boundaries. Of course, this issue also interacts with provisioning and operation (see section 7.3.7).

7.3.4 Component Evolution

There is a fundamental tension in the component methodology relating to the inherent evolution of user requirements (Lehman and Ramil 2000). Recall the distinction between specification-driven and satisfaction-driven software (see section 3.2.11). Particularly if a component is to be licensed rather than developed, it is simpler contractually to view it as specification-driven. It can then be objectively evaluated by laboratory testing, and the contractual relationship between licensee and licensor is easier to formulate in terms of objective acceptance criteria. The tension comes from the reality that applications as a whole are decidedly satisfaction-driven programs. Can such applications be assembled wholly from specification-driven components? Likely not. In practice, some components (especially those destined for applications) may be judged by satisfaction-driven criteria, greatly complicating issues surrounding maintenance, upgrade, and acceptance criteria. Worse, a successful component is assembled into multiple satisfaction-driven programs, each with distinct stakeholders and potentially distinct or even incompatible criteria for satisfaction of those stakeholders. This tension may limit the range of applicability of components, or reduce the satisfaction with componentized systems, or both. It tends to encourage small-grain components, which are less susceptible to these problems but also incur greater performance overhead. It may also encourage the development of multiple variants on a component, which undercuts important advantages.

A related problem is that a project that relies on available components may limit the available functionality or the opportunity to differentiate from competitors, who have the same components available. This can be mitigated by the ability to mix components with handcrafted or reusable modules and by incorporating a broad set of configuration options into a component.

7.3.5 An Industrial Revolution of Software?

Software components are reminiscent of the Industrial Revolution's innovation of standard reusable parts. In this sense, components can yield an industrial revolution in software, shifting the emphasis from handcrafting to assembly in the development of new software, especially applications. This is especially compelling as a way to reduce the time and cost of developing applications, much needed in light of the increasing specialization and diversity of applications (see section 3.1). It may even be feasible to enable end-users to assemble their own applications. This industrial revolution is unlikely to occur precipitously, but fortunately this is not a case of "all or nothing" because developed or acquired modules can be mixed.

This picture of a software industrial revolution is imperfect. For one thing, the analogy between a software program and a material product is flawed (Szyperski 2002a). If a program were like a material product or machine, it would consist of a predefined set of modules (analogous to the parts of a machine) interacting to achieve a higher purpose (like the interworking of parts in a machine). This was, in fact, the view implied in our discussion of architecture (see section 4.3), but in practice it is oversimplified. While software is composed from a set of interacting modules, many aspects of the dynamic configuration of an application's architecture are determined at the time of execution, not at the time the software is created. During execution, a large set of modules is created dynamically and opportunistically based on specific needs that can be identified only at that time.

Example A word processor creates many modules (often literally millions) at execution time tied to the specific content of the document being processed. For example, each individual drawing in a document, and indeed each individual element from which that drawing is composed (lines, circles, labels) is associated with a software module created specifically to manage that element. The implementers provide the set of available kinds of modules, and also specify a detailed plan by which modules are created dynamically at execution time[12] and interact to achieve higher purposes.

Implementing a modern software program is analogous not to a static configuration of interacting parts but to creating a plan for a very flexible factory in the industrial economy. At the time of execution, programs are universal factories that, by following specific plans, manufacture a wide variety of immaterial artifacts on demand and then compose them to achieve higher purposes. Therefore, in its manner of production, a program—the product of development—is not comparable to a hardware product but is more like a very flexible factory for hardware components. The supply of raw materials of such a factory corresponds to the reusable resources of information technology: instruction cycles, storage capacity, and communication bandwidth. The units of production in this factory are dynamically assembled modules dynamically derived from modules originally handcrafted or licensed as components.
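The "flexible factory" view can be illustrated with a short Python sketch. The implementers supply a fixed set of module kinds and a plan for creating them; which modules actually exist, and how many, is decided only at execution time by the content of the document. The class names, the `KINDS` registry, and the element format are all hypothetical choices made for this sketch.

```python
from typing import Callable, Dict, List


class Line:
    def __init__(self, spec: dict) -> None:
        self.length = spec["length"]

    def describe(self) -> str:
        return f"line of length {self.length}"


class Circle:
    def __init__(self, spec: dict) -> None:
        self.radius = spec["radius"]

    def describe(self) -> str:
        return f"circle of radius {self.radius}"


class Label:
    def __init__(self, spec: dict) -> None:
        self.text = spec["text"]

    def describe(self) -> str:
        return f"label '{self.text}'"


# The available kinds of modules, fixed when the program is written.
KINDS: Dict[str, Callable[[dict], object]] = {
    "line": Line,
    "circle": Circle,
    "label": Label,
}


def build_drawing(elements: List[dict]) -> List[object]:
    """The plan: create one module per element found at execution time."""
    return [KINDS[element["kind"]](element) for element in elements]


# The document content is known only when the program runs.
document = [
    {"kind": "line", "length": 5},
    {"kind": "circle", "radius": 2},
    {"kind": "label", "text": "Fig. 1"},
]
modules = build_drawing(document)
```

The source code contains three kinds of module, but a large document could cause the same plan to produce millions of instances, none of which existed when the software was created.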

There is a widespread belief that software engineering is an immature discipline that has yet to catch up with more mature engineering disciplines, because there remains such a deep reliance on handcrafting as a means of production. In light of the nature of software, this belief is exaggerated. Other engineering disciplines struggle similarly when aiming to systematically create new factories, especially flexible ones (Upton 1992). Indeed, other engineering disciplines, when faced with the problem of creating such factories, sometimes look to software engineering for insights. It is an inherently difficult problem, one unlikely to yield to simple solutions. Nevertheless, progress will be made, and the market for components will expand.

A second obstacle to achieving an industrial revolution of software is the availability of a rich and varied set of components for licensing. It was argued earlier that purchasing components in a marketplace is more promising than using internally developed components because the former offers higher scale and significant economic benefit to the developer/supplier. Such a component marketplace is beginning to come together.

Example Component technologies are emerging based on Microsoft Windows (COM+ and CLR) and Sun Microsystems' Java (JavaBeans and Enterprise JavaBeans). Several fast-growing markets now exist (Garone and Cusack 1999), and a number of companies have formed to fill the need for merchant, broker, and triage roles, including ComponentSource and FlashLine. These firms provide an online marketplace where buyers and sellers of components can come together.

One recognized benefit of the Industrial Revolution was the containment of complexity. By separating parts suppliers and offering each a larger market, economic incentives encouraged suppliers to go to great lengths to make their components easier to use by abstracting interfaces, hiding and encapsulating the complexities. New components will also tend to use existing standardized interfaces where feasible rather than creating new ones (so as to maximize the market), reducing the proliferation of interfaces. Thus, a component marketplace may ultimately be of considerable help in containing software complexity, as it has for material goods and services.

Another obstacle to an industrial revolution of software, one that is largely unresolved, is trust and risk management. When software is assembled from components purchased from external suppliers, warranty and insurance models are required to mitigate the risk of exposure and liability. Because of the complexity and characteristics of software, traditional warranty, liability laws, and insurance require rethinking in the context of the software industry, an issue as important as the technical challenges.

Another interesting parallel to component software may be biological evolution, which can be modeled as a set of integrative levels (such as molecules, cells, organisms, and families) where self-contained entities at each level (except the bottom) consist mainly of innovative composition of entities from the level below (Pettersson 1996).[13] Like new business relationships in an industrial economy, nature seems to evolve ever more complex entities in part by this innovative composition of existing entities, and optimistically components may unleash a similar wave of innovation in software technology.

It should be emphasized that these parallels to the industrial economy and to biological evolution depend upon the behavioral nature of software (which distinguishes it from the passive nature of information), leading directly to the emergence of new behaviors through module composition.

7.3.6 Component Standards and Technology

Assembling components designed and developed largely independently requires standardization of ways for components to interact. This addresses interoperability, although not necessarily the complementarity also required for the composition of components (see section 4.3.6). Achieving complementarity, which is more context-specific, is more difficult. Complementarity is addressed at the level of standardization through bodies that form domain-specific reference models and, building on those, reference architectures. Reference architectures devise a standard way to divide and conquer a particular problem domain, predefining roles that contributing technologies can play. Components that cover such specified roles are then naturally complementary.

Example The Object Management Group, an industrial standardization consortium, maintains many task force groups that establish reference models and architectures for domains such as manufacturing, the health industry, or the natural sciences.

Reusability or multiple use can focus on two levels of design: architecture and individual modules. In software, a multiuse architecture is called a reference architecture, a multiuse architecture cast to use specific technology is called a framework, and a multiuse module is called a component. In all cases, the target of use is typically a narrowed range of applications, not all applications. One reason is that, in practice, both the architecture and the complementarity required for component composition require some narrowing of application domain. In contrast, infrastructure software targets multiple-use opportunities for a wide range of applications.

Example Enterprise resource planning (ERP) is a class of application that targets standard business processes in large corporations. Vendors of ERP, such as SAP, Baan, PeopleSoft, and Oracle, use a framework and component methodology to provide some flexibility to meet varying end-user needs. Organizations can choose a subset of available components, and mix and match components within an overall framework defined by the supplier. (In this particular case, the customization process tends to be so complex that it is commonly outsourced to business consultants.)

The closest analogy to a framework in the physical world is called a platform (leading to possible confusion, since that term is used differently in software; see section 4.4.2).

Example An automobile platform is a standardized architecture and associated components and manufacturing processes that can be used as the basis of multiple products. Those products are designed by customizing elements fitting into the architecture, like the external sheet metal.

In essence a framework is a preliminary plan for the decomposition of (parts of) an application, including interface specifications. A framework can be customized by substituting different functionality in constituent modules and extended by adding additional modules through defined gateways. As discussed in section 7.3.5, a framework may be highly dynamic, subject to wide variations in configuration at execution time. The application scope of a framework is necessarily limited: no single architecture will be suitable for a wide range of applications. Thus, frameworks typically address either a narrower application domain or a particular vertical industry.
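The two ways of adapting a framework described above, substituting functionality in a constituent module and adding modules through a defined gateway, can be sketched as follows. This is a minimal Python illustration; `StatementFramework`, its methods, and the formatting rules are hypothetical and stand in for a much larger real framework.

```python
from typing import Callable, List


def default_formatter(amount: float) -> str:
    """Default constituent module: format one transaction."""
    return f"{amount:+.2f}"


class StatementFramework:
    """A fixed decomposition with one substitutable module and one gateway."""

    def __init__(self, formatter: Callable[[float], str] = default_formatter) -> None:
        # Customization: a different formatter can be substituted here.
        self._formatter = formatter
        self._extensions: List[Callable[[List[float]], List[str]]] = []

    def register_extension(self, extension: Callable[[List[float]], List[str]]) -> None:
        # Extension: the defined gateway through which modules are added.
        self._extensions.append(extension)

    def produce(self, transactions: List[float]) -> str:
        lines = [self._formatter(amount) for amount in transactions]
        for extension in self._extensions:
            lines.extend(extension(transactions))
        return "\n".join(lines)


def total_line(transactions: List[float]) -> List[str]:
    """An added module: append a total to the statement."""
    return [f"TOTAL {sum(transactions):.2f}"]


framework = StatementFramework()
framework.register_extension(total_line)
statement = framework.produce([100.0, -40.0])
```

The overall control flow in `produce` belongs to the framework and stays fixed; only the formatter and the registered extensions vary, which is why a framework suits a narrow application domain rather than applications in general.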

Component methodologies require discipline and a supporting infrastructure to be successful. Some earlier software methodologies have touted similar advantages but have incorporated insufficient discipline.

Example Object-oriented programming is a widely used methodology that emphasizes modularity, with supporting languages and tools that enforce and aid modularity (e.g., by enforcing encapsulation). While OOP does result in an increase in software reuse in development organizations, it has proved largely unable to achieve component assembly. The discipline as enforced by compilers and runtime environment is insufficient, standardization to enable interoperability is inadequate, and the supporting infrastructure is also inadequate.

From a development perspective, component assembly is quite distinctive. Instead of viewing source code as a collection of textual artifacts, it is viewed as a collection of units that separately yield components. Instead of arbitrarily modifying and evolving an ever-growing source base, components are individually and independently evolved (often by outside suppliers) and then composed into a multitude of software programs. Instead of assuming an environment with many other specific modules present, a component provides documented connection points that allow it to be configured for a particular context. Components can retain their separate identity in a deployed program, allowing that program to be updated and extended by replacing or adding components. In contrast, other programming methodologies deploy a collection of executable or dynamically loadable modules whose configuration and context details are hard-wired and cannot be updated without being replaced as a monolithic whole.

Even an ideal component will depend on a platform to provide an execution model to build on. The development of a component marketplace depends on the availability of one or more standard platforms to support component software development, each such platform providing a largely independent market to support its component suppliers.

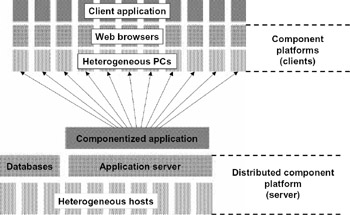

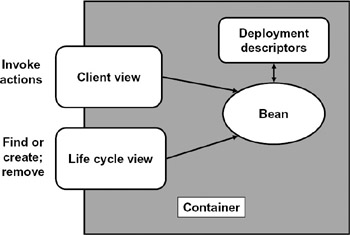

Example There are currently two primary platforms for component software: the Java universe, including Java 2 Enterprise Edition (J2EE) from Sun Microsystems, and the Windows .NET universe from Microsoft. Figure 7.11 illustrates the general architecture of Enterprise JavaBeans (EJB), part of J2EE, based on the client-server model. The infrastructure foundation is a set of (possibly distributed) servers and (typically) a much larger set of clients, both of which can be based on heterogeneous underlying platforms. EJB then adds a middleware layer to the servers, called an application server. This layer is actually a mixture of two ideas discussed earlier. First, it is value-added infrastructure that provides a number of common services for many applications. These include directory and naming services, database access, remote component interaction, and messaging services. Second, it provides an environment for components, which are called Beans in EJB. One key aspect of this environment is the component container illustrated in figure 7.12. All interactions with a given component are actually directed at its container, which in turn interacts with the component itself. This allows many additional value-added services to be transparently provided on behalf of the component, such as supporting multiple clients sharing a single component, state management, life cycle management, and security. The deployment descriptors allow components to be highly configurable, with their characteristics changeable according to the specific context. In addition, J2EE provides an environment for other Java components in the Web server (servlets) and client Web browser (applets) based on a Java browser plug-in, as well as for client-side applications. In the .NET architecture, the role of application server and EJBs is played by COM+ and the .NET Framework. The role of Web server and Web service host is played by ASP.NET and the .NET Framework. Clients are covered by client-side applications, including Web browsers with their extensions.
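The container idea, in which every interaction with a component passes through an intermediary that adds services on the component's behalf, can be sketched in a few lines. This is an illustration of the pattern only, not the EJB or .NET API; `AccountBean`, `Container`, and the role names are assumptions made for the example, and the `allowed_roles` argument merely stands in for what a deployment descriptor would configure.

```python
class AccountBean:
    """Hypothetical business component; it knows nothing about security or life cycle."""

    def __init__(self) -> None:
        self.balance = 0

    def deposit(self, amount: int) -> int:
        self.balance += amount
        return self.balance


class Container:
    """Stands between clients and the component, adding services transparently."""

    def __init__(self, component_factory, allowed_roles):
        self._factory = component_factory          # life cycle: create on demand
        self._allowed_roles = set(allowed_roles)   # configured per deployment context
        self._instance = None
        self.calls = 0

    def invoke(self, role: str, method: str, *args):
        if role not in self._allowed_roles:        # security service
            raise PermissionError(f"role {role!r} may not call {method}")
        if self._instance is None:                 # lazy activation of the component
            self._instance = self._factory()
        self.calls += 1                            # bookkeeping on the component's behalf
        return getattr(self._instance, method)(*args)


# Clients address the container, never the component directly.
container = Container(AccountBean, allowed_roles=["teller"])
first = container.invoke("teller", "deposit", 100)
second = container.invoke("teller", "deposit", 50)
```

Changing `allowed_roles` alters the component's behavior in a given context without modifying `AccountBean`, which is the sense in which configuration is separated from implementation.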

Figure 7.11: An architecture for client-server applications supporting a component methodology.

Figure 7.12: A component container in Enterprise JavaBeans.

Component-based architectures should be modular, particularly with respect to weak coupling of components, which eases their independent development and composability (see section 4.3.3). Strong cohesion within components is less important because components can themselves be hierarchically decomposed for purposes of implementation. Market forces often intervene to influence the granularity of components, and in particular sometimes encourage coarse-grained components with considerable functionality bundled in to reduce the burden on component users and to help encapsulate implementation details and preserve trade secrets (see chapter 8). In some cases, it is sheer performance objectives that encourage coarse-grained components because there is an unavoidable overhead involved in interacting with other components through fixed interfaces.

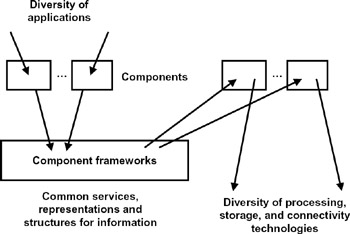

Constructing an application from components by configuring them all against one another, called a peer-to-peer architecture, does not scale beyond simple configurations because of the combinatorial explosion of created dependencies, all of which may need to be managed as the application evolves. A framework can be used to bundle all relevant component connections and partial configurations, hierarchically creating a coarser-grained module. A component may plug into multiple places in a component framework if that component is relevant to multiple aspects of the system. Figure 7.13 illustrates how a framework can decouple disjoint dimensions.[14] This is similar to the argument for layering (see figure 7.6), standards (see section 7.2), and commercial intermediaries, all of which are in part measures to prevent a similar combinatorial explosion.

Figure 7.13: Component frameworks to separate dimensions of evolution.

Example An operating system is a framework, accepting device driver modules that enable progress below (although these are not components because they can't compose with one another) and accepting application components to enable progress above (assuming that applications allow composability). Allowing component frameworks to be components themselves creates a component hierarchy. For example, an OS-hosted application can be turned into a framework by accepting add-ins (a synonym for components).
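The scaling argument can be illustrated with simple arithmetic, using the operating system example. The sketch below assumes a deliberately simplified model that is not from the text: without a framework, every application must be configured against every device driver, whereas with a framework each driver and each application is configured once, against the framework. The function names are hypothetical.

```python
def direct_pairings(drivers: int, applications: int) -> int:
    """Dependencies to manage when every application is configured against every driver."""
    return drivers * applications


def framework_pairings(drivers: int, applications: int) -> int:
    """Dependencies to manage when each module is configured once, against the framework."""
    return drivers + applications


for drivers, applications in [(2, 3), (10, 20), (100, 1000)]:
    print(drivers, applications,
          direct_pairings(drivers, applications),
          framework_pairings(drivers, applications))
```

Under this model the number of dependencies grows with the product of the two dimensions in the direct case but only with their sum when a framework separates them, so each dimension can evolve without revisiting the other.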

7.3.7 Web Services

The provisioning and operation of an entire application can be outsourced to a service provider (see section 6.2). Just as an application can be assembled from components, so too can an application be assembled from the offerings of not one but two or more service providers. Another possibility is to allow end-users to assemble applications on a peer-to-peer basis by offering services to one another directly, perhaps mixed with services provided by third-party providers. This is the idea behind Web services, in which services from various service providers can be considered components to be assembled. The only essential technical difference is that these components interact over the network; among other things, this strongly enforces the encapsulation property. Of course, each component Web service can internally be hierarchically composed from other software components. Services do differ from components significantly in operational and business terms in that a service is backed by a service provider who provisions and operates it (Szyperski 2001), leading to different classes of legal contracts and notions of recompense (Szyperski 2002b). The idea that components can be opportunistically composed even after deployment is consistent with the Web service idea.

Distributed component composition was considered from the perspective of features and functionality (see section 7.3.3). Web services address the same issue from the perspective of provisioning and operation. They allow component composition across heterogeneous platforms, with the additional advantage that deployment, installation, and operation for specific components can be outsourced to a service provider. They also offer the component supplier the option of selling components as a service rather than licensing the software. Web services shift competition in the application service provider model from the level of applications to components.

If the features and capabilities of a specific component are needed in an application, there are three options. The component can be deployed and installed on the same platform (perhaps even the same host) as the application. If the component is not available for that platform (it is not portable), it can be deployed and installed on a different host with the appropriate platform. Finally, it can be composed into the application as a Web service, avoiding the deployment, installation, and operation entirely but instead taking an ongoing dependence on the Web service provider.

A Web service architecture can be hierarchical, in which one Web service can utilize services from others. In this case, the "customer" of one service is another piece of software rather than the user directly. Alternatively, one Web service can be considered an intermediary between the user and another Web service, adding value by customization, aggregation, filtering, consolidation, and similar functions.

Example A large digital library could be assembled from a collection of independently managed specialized digital libraries using Web services (Gardner 2001). Each library would provide its own library services, such as searching, authentication and access control, payments, copy request, and format translations. When a user accesses its home digital library and requests a document not resident there, the library could automatically discover other library services and search them, eventually requesting a document copy in a prescribed format wherever it is found and passing it to the user.

An important element of Web services is the dynamic and flexible means of assembling different services without prior arrangement or planning, as illustrated in this example. Each service can advertise its existence and capabilities so that it can be automatically discovered and those capabilities invoked. It is not realistic, given the current state of technology, to discover an entirely new type of service and automatically interact with it in a complex manner. Thus, Web services currently focus on enabling different implementations of previously known services to be discovered and accessed (Gardner 2001).

Web services could be built on a middleware platform similar to the application server in figure 7.11, where the platform supports the interoperability of components across service providers. However, this adds another level of coordination, requiring the choice of a common platform or alternatively the interoperability of different platforms. A different solution is emerging: standards for the representation of content-rich messages across platforms.

Example XML (see section 7.1.5) is emerging as a common standardized representation of business documents on the Web, one in which the meaning of the document can be extracted automatically. The interoperability of Web services can be realized without detailed plumbing standards by basing it on the passage of XML-represented messages. This high-overhead approach is suitable for interactions where there are relatively few messages, each containing considerable information.[15] It also requires additional standardization for the meaning of messages expressed in XML within different vertical industry segments. For example, there will be a standard for expressing invoices and another standard for expressing digital library search and copy requests.
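A minimal sketch of message-based interoperability follows, using Python's standard `xml.etree.ElementTree` module. The element names (`searchRequest`, `query`, `copyFormat`) are invented for illustration and do not correspond to any actual industry standard; in practice the vocabulary would be fixed by the kind of vertical standardization described above.

```python
import xml.etree.ElementTree as ET


def build_search_request(query: str, fmt: str) -> bytes:
    """Serialize a request as XML; the element names are made up for illustration."""
    root = ET.Element("searchRequest")
    ET.SubElement(root, "query").text = query
    ET.SubElement(root, "copyFormat").text = fmt
    return ET.tostring(root, encoding="utf-8")


def read_search_request(message: bytes) -> dict:
    """The receiver relies only on the agreed vocabulary, not on the sender's platform."""
    root = ET.fromstring(message)
    if root.tag != "searchRequest":
        raise ValueError(f"unexpected message type: {root.tag}")
    return {"query": root.findtext("query"), "format": root.findtext("copyFormat")}


# The bytes below are all that crosses the network between the two parties.
wire_bytes = build_search_request("component software", "pdf")
request = read_search_request(wire_bytes)
```

The two functions share nothing except the message format, which is why sender and receiver can run on entirely different platforms. The cost is that each interaction involves serializing and parsing text, consistent with the high overhead noted in the example.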

The dynamic discovery and assembling of services are much more valuable if they are vendor- and technology-neutral. Assembling networked services rather than software components is a useful step in this direction, since services implemented on different platforms can be made to appear identical across the network, assuming the appropriate standards are in place.

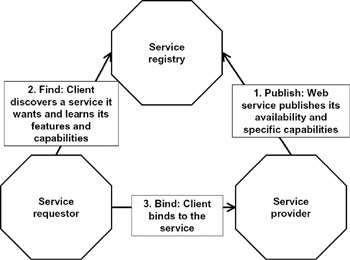

Example Recognizing the value of platform-neutral standards for Web services, several major vendors hoping to participate in the Web services market (including Hewlett-Packard, IBM, Microsoft, Oracle, and Sun) work together to choose a common set of standards. SOAP (simple object access protocol) is an interoperability standard for exchanging XML messages with a Web service, UDDI (universal description, discovery, and integration) specifies a distributed registry or catalog of available Web services, and WSDL (Web services description language) can describe a particular Web service (actions and parameters). Each supplier is developing a complete Web services environment (interoperable with others because of common standards) that includes a platform for Web services and a set of development tools.[16] UDDI and Web services work together as illustrated in figure 7.14, with three primitive operations: publish, find, and bind. First, each service provider advertises the availability of its Web services in a common service registry. A service requestor discovers a needed service in the registry, connects to the service (including functions like authentication and arranging for payment), and finally starts using it.
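The publish, find, and bind operations can be sketched with an in-memory registry. This is a toy stand-in and not the UDDI API: `ServiceRegistry`, the service type `"document-search"`, and the two library functions are all hypothetical, and the bind step is reduced to a direct function call, omitting the authentication and payment arrangements mentioned above.

```python
from typing import Callable, Dict, List

Endpoint = Callable[[str], str]


class ServiceRegistry:
    """In-memory stand-in for a shared service registry (not the UDDI API)."""

    def __init__(self) -> None:
        self._entries: Dict[str, List[Endpoint]] = {}

    def publish(self, service_type: str, endpoint: Endpoint) -> None:
        """A provider advertises an implementation of a known service type."""
        self._entries.setdefault(service_type, []).append(endpoint)

    def find(self, service_type: str) -> List[Endpoint]:
        """A requestor discovers all implementations of a known service type."""
        return list(self._entries.get(service_type, []))


def library_a_search(title: str) -> str:
    return f"library-a: no copy of {title!r}"


def library_b_search(title: str) -> str:
    return f"library-b: found {title!r}"


registry = ServiceRegistry()
registry.publish("document-search", library_a_search)   # publish
registry.publish("document-search", library_b_search)

providers = registry.find("document-search")            # find
answers = [search("Component Software") for search in providers]  # bind and use
```

The requestor must already know what a `"document-search"` service is and how to call it; the registry only helps locate implementations of that known type, which matches the limitation on discovery described earlier in this section.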

Figure 7.14: The Web services reference model, now on its way toward adoption as a standard, shows how UDDI supports service discovery (Gardner 2001).

[8]Preserving proper system functioning with the replacement or upgrade of a component is difficult to achieve, and this goal significantly restricts the opportunities for evolving both the system and a component. There are a number of ways to address this. One is to constrain components to preserve existing functionality even as they add new capabilities. An even more flexible approach is to allow different versions or variations of a component to be installed side-by-side, so that existing interactions can focus on the old version or variation even as future added system capabilities can exploit the added capabilities of the new one.

[9]See Ueda (2001) for more discussion of these methodologies of synthesis and development and how they are related. Similar ideas arise in the natural sciences, although in the context of analysis and modeling rather than synthesis. The top-down approach to modeling is reductionism (the emphasis is on breaking the system into smaller pieces and understanding them individually), and the bottom-up approach is emergence (the emphasis is on explaining complex behaviors as emerging from composition of elementary entities and interactions).

[10]While superficially similar to the module integration that a supplier would traditionally do, it is substantively different in that the component's implementation cannot be modified, except perhaps by its original supplier in response to problems or limitations encountered during assembly by its customers. Rather, the assembler must restrict itself to choosing built-in configuration options.

[11]As in any supplier-customer relationship in software, defects discovered during component assembly may be repaired in maintenance releases, but new needs or requirements will have to wait for a new version (see section 5.1.2). Problems arising during the composition of two components obtained independently are an interesting intermediate case. Are these defects, or mismatches with evolving requirements?

[12]Technically, it is essential to carefully distinguish those modules that a programmer conceived (embodied in source code) from those created dynamically at execution time (embodied as executing native code). The former are called classes and the latter objects. Each class must capture various configuration options as well as mechanisms to dynamically create objects. This distinction is equally relevant to components.

[13]By requiring each level (except the top) to meet a duality criterion—that entities exist both in a composition at the higher level and in independent form—nine integrative levels constituting the human condition can be identified from fundamental particles through nation-states. This model displays an interesting parallel to the infrastructure layers in software (see section 7.1.3).

[14]Although component frameworks superficially look like layers as described in section 7.2, the situation is more complex because component frameworks (unlike traditional layers) actively call components layered above them. Component frameworks are a recursive generalization of the idea of separating applications from infrastructure.

[15]This inherently high cost plus the network communication overhead is one reason for the continued factoring of Web services into internal components, since components can be efficient at a much finer granularity.

[16]These environments were initially called E-Speak (Hewlett-Packard), Web Services (IBM and Microsoft), and Dynamic Services (Oracle). The generic term Web services has now been adopted by all suppliers.
