Chapter 6. Software Penetration Testing[1]

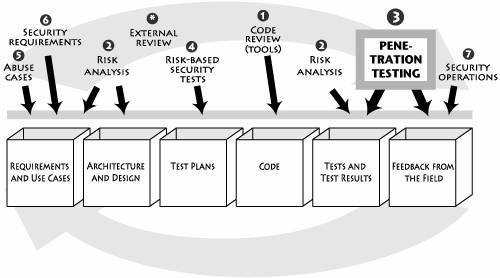

Quality assurance and testing organizations are tasked with the broad objective of ensuring that a software application fulfills its functional business requirements. Such testing most often involves running a series of dynamic functional tests late in the lifecycle to ensure that the application's features have been properly implemented. Sometimes use cases and requirements drive this testing. But no matter what does the driving, the result is the same: a strong emphasis on features and functions. Because security is not a feature or even a set of features, security testing (especially penetration testing) does not fit directly into this paradigm.

Security testing poses a unique problem. A majority of security defects and vulnerabilities in software are not directly related to security functionality. Instead, security issues involve often unexpected but intentional misuses of an application discovered by an attacker. If we characterize functional testing as "testing for positives" (as in verifying that a feature properly performs a specific task), then penetration testing is in some sense "testing for negatives." That is, a security tester must probe directly and deeply into security risks (possibly driven by abuse cases and architectural risks) in order to determine how the system behaves under attack. (Chapter 7 discusses how these tests can be driven by attack patterns.)

At its heart, security testing needs to make use of both white hat and black hat concepts and approaches: ensuring that the security features work as advertised (a white hat undertaking) and that intentional attacks can't easily compromise the system (a black hat undertaking). That said, almost all penetration testing relies on black hat methods over white hat methods.[2] In other words, thinking like a bad guy is so essential to good penetration testing that leaving it out leaves penetration testing impotent.
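The contrast between "testing for positives" and "testing for negatives" can be made concrete with a small sketch. Everything in it is an illustrative assumption rather than something taken from a particular system: the base directory, the function names `naive_resolve` and `escapes_base`, and the choice of path traversal as the misuse. The point is only that a feature can satisfy its functional requirement and still fall over when someone misuses it on purpose.

```python
import posixpath

# Hypothetical document root for a file-download feature (an assumption).
BASE_DIR = "/srv/app/files"


def naive_resolve(user_path: str) -> str:
    """Join the requested name onto the base directory, with no validation."""
    return posixpath.normpath(posixpath.join(BASE_DIR, user_path))


def escapes_base(resolved: str) -> bool:
    """Return True if the resolved path lies outside BASE_DIR."""
    return posixpath.commonpath([BASE_DIR, resolved]) != BASE_DIR


# Positive test: the feature does what the requirement says it should.
positive_ok = naive_resolve("report.txt") == "/srv/app/files/report.txt"

# Negative test: an intentional misuse that no functional requirement mentions.
attack = naive_resolve("../../../etc/passwd")
negative_ok = not escapes_base(attack)

print("positive test passed:", positive_ok)
print("negative test passed:", negative_ok)
```

Here the positive test passes and the negative test fails, because the crafted request resolves to a file outside the base directory. A test plan built only from features and requirements would never have asked the second question.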

In any case, testing for a negative poses a much greater challenge than verifying a positive. A set of successfully executed, plausible positive tests usually yields a high degree of confidence that a software component will perform functionally as desired. However, enumerating actions with the intention to produce a fault and reporting whether and under which circumstances the fault occurs is not a sound approach to proving the negative outcome does not exist. Got that? What I'm saying is that it's really easy to test whether a feature works or not, but it is very difficult to show whether or not a system is secure enough under malicious attack. How many tests do you do before you give up and declare "secure enough"?

If negative tests do not uncover any faults, this only offers proof that no faults occur under particular test conditions; this by no means proves that no faults exist. When applied to penetration testing where lack of security vulnerability is the negative we're interested in, this means that "passing" a software penetration test provides very little assurance that an application is immune to attack.

One of the main problems with today's most common approach to penetration testing is a misunderstanding of this subtle point. As a result of this problem in testing philosophy, penetration testing often devolves into a feel-good exercise in pretend security.

Things go something like this. A set of reformed hackers is hired to carry out a penetration test. We know all is well because they're reformed. (Well, they told us they were reformed, anyway.) The hackers work a while until they discover a problem or two in the software, usually relating to vulnerabilities in base technology such as an application framework or a basic misconfiguration problem. Sometimes this discovery activity is as simple as trawling BugTraq to look up known security issues associated with an essential technology linked into the system.[3]

The hackers report their findings. They look great because they found a "major security problem." The software team looks pretty good because they graciously agreed to have their baby analyzed and broken, and they even know how to fix the problem. The VP of Yadda Yadda looks great because the security box is checked. Everybody wins!

The problem? No clue about security risk. No idea whether the most critical security risks have been uncovered, how much risk remains in the system, and how many bugs are lurking in the zillions of lines of code. Finding a security problem and fixing it is fine. But what about the rest of the system?

Imagine if we did normal software testing like this! The software is declared "code complete" and thrown over the wall to testing. The testers begin work right away, focusing on very basic edge conditions and known failure modes from previous testing on version one. They find one or two problems right off the bat. Then they stop, the problems get fixed, and the testing box gets checked off. Has the software been properly tested? Run this scenario by any professional tester, and once the tester is done laughing, think about whether penetration testing as practiced by most organizations works.
