Section 17.5. Mutex Locks

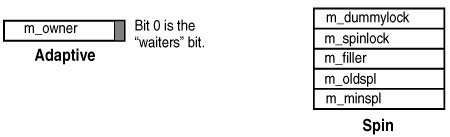

17.5. Mutex LocksMutual exclusion, or mutex locks, are the most common type of synchronization primitive used in the kernel. Mutex locks serialize access to critical data, when a kernel thread must acquire the mutex specific to the data region being protected before it can read or write the data. The thread is the lock owner while it is holding the lock, and the thread must release the lock when it has finished working in the protected region so other threads can acquire the lock for access to the protected data. 17.5.1. OverviewIf a thread attempts to acquire a mutex lock that is being held, it can basically do one of two things: it can spin or it can block. Spinning means the thread enters a tight loop, attempting to acquire the lock in each pass through the loop. The term spin lock is often used to describe this type of mutex. Blocking means the thread is placed on a sleep queue while the lock is being held and the kernel sends a wakeup to the thread when the lock is released. There are pros and cons to both approaches. The spin approach has the benefit of not incurring the overhead of context switching, required when a thread is put to sleep and also has the advantage of a relatively fast acquisition when the lock is released, since there is no context-switch operation. It has the downside of consuming CPU cycles while the thread is in the spin loopthe CPU is executing a kernel thread (the thread in the spin loop) but not really doing any useful work. The blocking approach has the advantage of freeing the processor to execute other threads while the lock is being held; it has the disadvantage of requiring context switching to get the waiting thread off the processor and a new runnable thread onto the processor. There's also a little more lock acquisition latency, since a wakeup and context switch are required before the blocking thread can become the owner of the lock it was waiting for. In addition to the issue of what to do if a requested lock is being held, the question of lock granularity needs to be resolved. Let's take a simple example. The kernel maintains a process table, which is a linked list of process structures, one for each of the processes running on the system. A simple table-level mutex could be implemented, such that if a thread needs to manipulate a process structure, it must first acquire the process table mutex. This level of locking is very coarse. It has the advantages of simplicity and minimal lock overhead. It has the obvious disadvantage of potentially poor scalability, since only one thread at a time can manipulate objects on the process table. Such a lock is likely to have a great deal of contention (become a hot lock). The alternative is to implement a finer level of granularity: a lock-per-process table entry versus one table-level lock. With a lock on each process table entry, multiple threads can be manipulating different process structures at the same time, providing concurrency. The disadvantages are that such an implementation is more complex, increases the chances of deadlock situations, and necessitates more overhead because there are more locks to manage. In general, the Solaris kernel implements relatively fine-grained locking whenever possible, largely due to the dynamic nature of scaling locks with kernel structures as needed. The kernel implements two types of mutex locks: spin locks and adaptive locks. Spin locks, as we discussed, spin in a tight loop if a desired lock is being held when a thread attempts to acquire the lock. Adaptive locks are the most common type of lock used and are designed to dynamically either spin or block when a lock is being held, depending on the state of the holder. We already discussed the trade-offs of spinning versus blocking. Implementing a locking scheme that only does one or the other can severely impact scalability and performance. It is much better to use an adaptive locking scheme, which is precisely what we do. The mechanics of adaptive locks are straightforward. When a thread attempts to acquire a lock and the lock is being held, the kernel examines the state of the thread that is holding the lock. If the lock holder (owner) is running on a processor, the thread attempting to get the lock will spin. If the thread holding the lock is not running, the thread attempting to get the lock will block. This policy works quite well because the code is such that mutex hold times are very short (by design, the goal is to minimize the amount of code to be executed while a lock is held). So, if a thread is holding a lock and running, the lock will likely be released very soon, probably in less time than it takes to context-switch off and on again, so it's worth spinning. On the other hand, if a lock holder is not running, then we know that minimally one context switch is involved before the holder will release the lock (getting the holder back on a processor to run), and it makes sense to simply block and free up the processor to do something else. The kernel will place the blocking thread on a turnstile (sleep queue) designed specifically for synchronization primitives and will wake the thread when the lock is released by the holder. (See Section 17.7.) The other distinction between adaptive locks and spin locks has to do with interrupts, the dispatcher, and context switching. The kernel dispatcher is the code that selects threads for scheduling and does context switches. It runs at an elevated Priority Interrupt Level (PIL) to block interrupts (the dispatcher runs at priority level 11 on SPARC systems). High-level interrupts (interrupt levels 1115 on SPARC systems) can interrupt the dispatcher. High-level interrupt handlers are not allowed to do anything that could require a context switch or to enter the dispatcher (we discuss this further in Section 3.4). Adaptive locks can block, and blocking means context switching, so only spin locks can be used in high-level interrupt handlers. Also, spin locks can raise the interrupt level of the processor when the lock is acquired. struct kernel_data { kmutex_t klock; char *forw_ptr; char *back_ptr; uint64_t data1; uint64_t data2; } kdata; void function() . mutex_init(&kdata.klock); . mutex_enter(&kdata.klock); klock.data1 = 1; mutex_exit(&kdata.klock); The preceding block of pseudocode illustrates the general mechanics of mutex locks. A lock is declared in the code; in this case, it is embedded in the data structure that it is designed to protect. Once declared, the lock is initialized with the kernel mutex_init() function. Any subsequent reference to the kdata structure requires that the klock mutex be acquired with mutex_enter(). Once the work is done, the lock is released with mutex_exit(). The lock type, spin or adaptive, is determined in the mutex_init() code by the kernel. Assuming an adaptive mutex in this example, any kernel threads that make a mutex_enter() call on klock will either block or spin, depending on the state of the kernel thread that owns klock when the mutex_enter() is called. 17.5.2. Solaris Mutex Lock ImplementationThe kernel defines different data structures for the two types of mutex locks, adaptive and spin, as shown below. /* * Public interface to mutual exclusion locks. See mutex(9F) for details. * * The basic mutex type is MUTEX_ADAPTIVE, which is expected to be used * in almost all of the kernel. MUTEX_SPIN provides interrupt blocking * and must be used in interrupt handlers above LOCK_LEVEL. The iblock * cookie argument to mutex_init() encodes the interrupt level to block. * The iblock cookie must be NULL for adaptive locks. * * MUTEX_DEFAULT is the type usually specified (except in drivers) to * mutex_init(). It is identical to MUTEX_ADAPTIVE. * * MUTEX_DRIVER is always used by drivers. mutex_init() converts this to * either MUTEX_ADAPTIVE or MUTEX_SPIN depending on the iblock cookie. * * Mutex statistics can be gathered on the fly, without rebooting or * recompiling the kernel, via the lockstat driver (lockstat(7D)). */ typedef enum { MUTEX_ADAPTIVE = 0, /* spin if owner is running, otherwise block */ MUTEX_SPIN = 1, /* block interrupts and spin */ MUTEX_DRIVER = 4, /* driver (DDI) mutex */ MUTEX_DEFAULT = 6 /* kernel default mutex */ } kmutex_type_t; typedef struct mutex { #ifdef _LP64 void *_opaque[1]; #else void *_opaque[2]; #endif } kmutex_t; See sys/mutex.h The 128-bit mutex object is used for each type of lock, as shown in Figure 17.5. Figure 17.5. Solaris 10 Adaptive and Spin Mutex In Figure 17.5, the m_owner field in the adaptive lock, which holds the address of the kernel thread that owns the lock (the kthread pointer), plays a double role, in that it also serves as the actual lock; successful lock acquisition for a thread means it has its kthread pointer set in the m_owner field of the target lock. If threads attempt to get the lock while it is held (waiters), the low-order bit (bit 0) of m_owner is set to reflect that case. Because kthread pointers values are always word aligned, they do not require bit 0, allowing this work. /* * mutex_enter() assumes that the mutex is adaptive and tries to grab the * lock by doing a atomic compare and exchange on the first word of the mutex. * If the compare and exchange fails, it means that either (1) the lock is a * spin lock, or (2) the lock is adaptive but already held. * mutex_vector_enter() distinguishes these cases by looking at the mutex * type, which is encoded in the low-order bits of the owner field. */ typedef union mutex_impl { /* * Adaptive mutex. */ struct adaptive_mutex { uintptr_t _m_owner; /* 0-3/0-7 owner and waiters bit */ #ifndef _LP64 uintptr_t _m_filler; /* 4-7 unused */ #endif } m_adaptive; /* * Spin Mutex. */ struct spin_mutex { lock_t m_dummylock; /* 0 dummy lock (always set) */ lock_t m_spinlock; /* 1 real lock */ ushort_t m_filler; /* 2-3 unused */ ushort_t m_oldspl; /* 4-5 old pil value */ ushort_t m_minspl; /* 6-7 min pil val if lock held */ } m_spin; } mutex_impl_t; See sys/mutex_impl.h The spin mutex, as we pointed out earlier, is used at high interrupt levels, where context switching is not allowed. Spin locks block interrupts while in the spin loop, so the kernel needs to maintain the priority level the processor was running at before entering the spin loop, which raises the processor's priority level. (Elevating the priority level is how interrupts are blocked.) The m_minspl field stores the priority level of the interrupt handler when the lock is initialized, and m_oldspl is set to the priority level the processor was running at when the lock code is called. The m_spinlock fields are the actual mutex lock bits. Each kernel module and subsystem implementing one or more mutex locks calls into a common set of mutex functions. All locks must first be initialized by the mutex_init() function, whereby the lock type is determined on the basis of an argument passed in the mutex_init() call. The most common type passed into mutex_init() is MUTEX_DEFAULT, which results in the init code determining what type of lock, adaptive or spin, should be used. It is possible for a caller of mutex_init() to be specific about a lock type (for example, MUTEX_SPIN). If the init code is called from a device driver or any kernel module that registers and generates interrupts, then an interrupt block cookie is added to the argument list. An interrupt block cookie is an abstraction used by device drivers when they set their interrupt vector and parameters. The mutex_init() code checks the argument list for an interrupt block cookie. If mutex_init() is being called from a device driver to initialize a mutex to be used in a high-level interrupt handler, the lock type is set to spin. Otherwise, an adaptive lock is initialized. The test is the interrupt level in the passed interrupt block; levels above LOCK_LEVEL (10 on SPARC systems) are considered high-level interrupts and thus require spin locks. The init code clears most of the fields in the mutex lock structure as appropriate for the lock type. The m_dummylock field in spin locks is set to all 1's (0xFF). We'll see why in a minute. The primary mutex functions called, aside from mutex_init() (which is only called once for each lock at initialization time), are mutex_enter() to get a lock and mutex_exit() to release it. mutex_enter() assumes an available, adaptive lock. If the lock is held or is a spin lock, mutex_vector_enter() is entered to reconcile what should happen. This is a performance optimization. mutex_enter() is implemented in assembly code, and because the entry point is designed for the simple case (adaptive lock, not held), the amount of code that gets executed to acquire a lock when those conditions are true is minimal. Also, there are significantly more adaptive mutex locks than spin locks in the kernel, making the quick test case effective most of the time. The test for a lock held or spin lock is very fast. Here is where the m_dummylock field comes into play: mutex_enter() executes a compare-and-swap instruction on the first byte of the mutex, testing for a zero value. On a spin lock, the m_dummylock field is tested because of its positioning in the data structure and the endianness of SPARC processors. Since m_dummylock is always set (it is set to all 1's in mutex_init()), the test will fail for spin locks. The test will also fail for a held adaptive lock since such a lock will have a nonzero value in the byte field being tested. That is, the m_owner field will have a kthread pointer value for a held, adaptive lock. If the lock is an adaptive mutex and is not being held, the caller of mutex_enter() gets ownership of the lock. If the two conditions are not true, that is, either the lock is held or the lock is a spin lock, the code enters the mutex_vector_enter() function to sort things out. The mutex_vector_enter() code first tests the lock type. For spin locks, the m_oldspl field is set, based on the current Priority Interrupt Level (PIL) of the processor, and the lock is tested. If it's not being held, the lock is set (m_spinlock) and the code returns to the caller. A held lock forces the caller into a spin loop, where a loop counter is incremented (for statistical purposes; the lockstat(1M) data), and the code checks whether the lock is still held in each pass through the loop. Once the lock is released, the code breaks out of the loop, grabs the lock, and returns to the caller. Adaptive locks require a little more work. When the code enters the adaptive code path (in mutex_vector_enter()), it increments the cpu_sysinfo.mutex_adenters (adaptive lock enters) field, as is reflected in the smtx column in mpstat(1M). mutex_vector_enter() then tests again to determine if the lock is owned (held), since the lock may have been released in the time interval between the call to mutex_enter() and the current point in the mutex_vector_enter() code. If the adaptive lock is not being held, mutex_vector_enter() attempts to acquire the lock. If successful, the code returns. If the lock is held, mutex_vector_enter() determines whether or not the lock owner is running by looping through the CPU structures and testing the lock m_owner against the cpu_thread field of the CPU structure. (cpu_thread contains the kernel thread address of the thread currently executing on the CPU.) A match indicates the holder is running, which means the adaptive lock will spin. No match means the owner is not running, in which case the caller must block. In the blocking case, the kernel turnstile code is entered to locate or acquire a turnstile, in preparation for placement of the kernel thread on a sleep queue associated with the turnstile. The turnstile placement happens in two phases. After mutex_vector_enter() determines that the lock holder is not running, it makes a turnstile call to look up the turnstile, sets the waiters bit in the lock, and retests to see if the owner is running. If yes, the code releases the turnstile and enters the adaptive lock spin loop, which attempts to acquire the lock. Otherwise, the code places the kernel thread on a turnstile (sleep queue) and changes the thread's state to sleep. That effectively concludes the sequence of events in mutex_vector_enter(). Dropping out of mutex_vector_enter(), either the caller ended up with the lock it was attempting to acquire or the calling thread is on a turnstile sleep queue associated with the lock. In either case, the lockstat(1M) data is updated, reflecting the lock type, spin time, or sleep time as the last bit of work done in mutex_vector_enter(). lockstat(1M) is a kernel lock statistics command that was introduced in Solaris 2.6. It provides detailed information on kernel mutex and reader/writer locks. The algorithm described in the previous paragraphs is summarized in pseudocode below. mutex_vector_enter() if (lock is a spin lock) lock_set_spl() /* enter spin-lock specific code path */ increment cpu_sysinfo.ademters. spin_loop: if (lock is not owned) mutex_trylock() /* try to acquire the lock */ if (lock acquired) goto bottom else continue /* lock being held */ if (lock owner is running on a processor) goto spin_loop else lookup turnstile for the lock set waiters bit if (lock owner is running on a processor) drop turnstile goto spin_loop else block /* the sleep queue associated with the turnstile */ bottom: update lockstat statistics When a thread has finished working in a lock-protected data area, it calls the mutex_exit() code to release the lock. The entry point is implemented in assembly language and handles the simple case of freeing an adaptive lock with no waiters. With no threads waiting for the lock, it's a simple matter of clearing the lock fields (m_owner) and returning. The C language function mutex_vector_exit() is entered from mutex_exit() for anything but the simple case. In the case of a spin lock, the lock field is cleared and the processor is returned to the PIL level it was running at before entering the lock code. For adaptive locks, a waiter must be selected from the turnstile (if there is more than one waiter), have its state changed from sleeping to runnable, and be placed on a dispatch queue so it can execute and get the lock. If the thread releasing the lock was the beneficiary of priority inheritance, meaning that it had its priority improved when a calling thread with a better priority was not able to get the lock, then the thread releasing the lock will have its priority reset to what it was before the inheritance. Priority inheritance is discussed in Section 17.7. When an adaptive lock is released, the code clears the waiters bit in m_owner and calls the turnstile function to wake up all the waiters. Readers familiar with sleep/wakeup mechanisms of operating systems have likely heard of a particular behavior known as the "thundering herd problem," a situation in which many threads that have been blocking for the same resource are all woken up at the same time and make a mad dash for the resource (a mutex in this case)like a herd of large, four-legged beasts running toward the same object. System behavior tends to go from a relatively small run queue to a large run queue (all the threads have been woken up and made runnable) and high CPU utilization until a thread gets the resource, at which point a bunch of threads are sleeping again, the run queue normalizes, and CPU utilization flattens out. This is a generic behavior that can occur on any operating system. The wakeup mechanism used when mutex_vector_exit() is called may seem like an open invitation to thundering herds, but in practice it turns out not to be a problem. The main reason is that the blocking case for threads waiting for a mutex is rare; most of the time the threads will spin. If a blocking situation does arise, it typically does not reach a point where very many threads are blocked on the mutexone of the characteristics of the thundering herd problem is resource contention resulting in a lot of sleeping threads. The kernel code segments that implement mutex locks are, by design, short and fast, so locks are not held for long. Code that requires longer lock-hold times uses a reader/writer write lock, which provides mutual exclusion semantics with a selective wakeup algorithm. There are, of course, other reasons for choosing reader/writer locks over mutex locks, the most obvious being to allow multiple readers to see the protected data. |

EAN: 2147483647

Pages: 244