Section 11.2. Kernel Memory Allocation

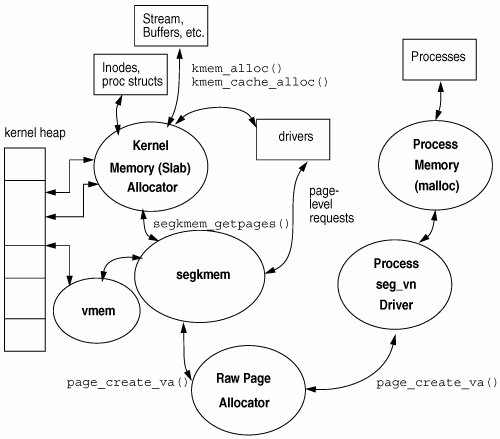

Kernel memory is allocated at different levels, depending on the desired allocation characteristics. At the lowest level is the page allocator, which allocates unmapped pages from the free lists so that the pages can then be mapped into the kernel's address space for use by the kernel. Allocating memory in pages works well for memory allocations that require page-sized chunks, but there are many places where we need memory allocations smaller than one page; for example, an in-kernel inode requires only a few hundred bytes per inode, and allocating one whole page (8 Kbytes) would be wasteful. For this reason, in addition to the page-level allocator, Solaris has an object-level kernel memory allocator, which is stacked on top of the page-level allocator, to allocate arbitrarily sized requests. The kernel also needs to manage where pages are mapped, a function that is provided by the resource map allocator. The high-level interaction between the allocators is shown in Figure 11.3.

Figure 11.3. Different Levels of Memory Allocation

11.2.1. The Kernel Heap

We access memory in the kernel by acquiring a section of the kernel's virtual address space and then mapping physical pages to that address. We can acquire the physical pages one at a time from the page allocator by calling page_create_va(), but to use these pages, we first need to map them into our address space. A section of the kernel's address space, known as the kernel heap, is set aside for general-purpose mappings. (See Figure 11.1 for the location of the sun4u kernel heap; see also Appendix A for kernel heaps on other platforms.) The kernel heap is a separate kernel memory segment containing a large area of virtual address space that is available to kernel consumers that require virtual address space for their mappings.
Each time a consumer uses a piece of the kernel heap, we must record which parts of the kernel map are free and which are allocated so that we know where to satisfy new requests. To record this information, we use a general-purpose allocator to keep track of the start and length of the mappings that are allocated from the kernel map area. The allocator we use is the vmem allocator, which is used extensively for managing the kernel heap virtual address space; because vmem is a universal resource allocator, it is also used for managing other resources (such as task, resource, and zone IDs). We discuss the vmem allocator in detail in Section 11.3.

11.2.2. The Kernel Memory Segment Driver

The segkmem segment driver performs two major functions. It manages the creation of general-purpose memory segments in the kernel address space, and it also provides functions that implement a page-level memory allocator by using one of those segments: the kernel map segment.

The segkmem segment driver implements the segment driver methods described in Section 9.5 to create general-purpose, nonpageable memory segments in the kernel address space. The segment driver does little more than implement the segkmem_create method to link segments into the kernel's address space. It also implements protection manipulation methods, which load the correct protection modes via the HAT layer for segkmem segments. The set of methods implemented by the segkmem driver is shown in Table 11.4.
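The start-and-length bookkeeping described above can be illustrated with a minimal first-fit resource map that records free spans as (start, length) pairs and coalesces neighbors on free. This is a sketch under simplifying assumptions (a fixed-size span table, first-fit search, and 0 as the failure value), not vmem itself, which is covered in Section 11.3; the names span_t, rmap_alloc(), and rmap_free() are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define RMAP_MAX 32   /* hypothetical capacity of the free-span table */

/* One free span of the managed resource, e.g. a range of heap addresses. */
typedef struct {
    size_t start;
    size_t len;
} span_t;

/* Free spans, kept sorted by start address and fully coalesced. */
typedef struct {
    span_t free[RMAP_MAX];
    int nfree;
} rmap_t;

/* Start with one free span covering [base, base + size); base must be nonzero. */
void rmap_init(rmap_t *m, size_t base, size_t size) {
    assert(base != 0);
    m->free[0].start = base;
    m->free[0].len = size;
    m->nfree = 1;
}

/* First fit: carve len units from the first span that is large enough.
   Returns the start of the allocation, or 0 if no span fits. */
size_t rmap_alloc(rmap_t *m, size_t len) {
    assert(len > 0);
    for (int i = 0; i < m->nfree; i++) {
        span_t *s = &m->free[i];
        if (s->len < len)
            continue;
        size_t addr = s->start;
        s->start += len;
        s->len -= len;
        if (s->len == 0) {               /* span used up: delete it */
            memmove(s, s + 1, (size_t)(m->nfree - i - 1) * sizeof (span_t));
            m->nfree--;
        }
        return addr;
    }
    return 0;
}

/* Return [addr, addr + len) to the map, coalescing with adjacent free spans.
   Returns 0 if a new span was needed but the table is full. */
int rmap_free(rmap_t *m, size_t addr, size_t len) {
    int i = 0;
    while (i < m->nfree && m->free[i].start < addr)
        i++;                             /* free[i] is the first span above addr */
    int join_prev = i > 0 && m->free[i - 1].start + m->free[i - 1].len == addr;
    int join_next = i < m->nfree && addr + len == m->free[i].start;

    if (join_prev && join_next) {        /* fills a hole: merge three into one */
        m->free[i - 1].len += len + m->free[i].len;
        memmove(&m->free[i], &m->free[i + 1],
            (size_t)(m->nfree - i - 1) * sizeof (span_t));
        m->nfree--;
    } else if (join_prev) {
        m->free[i - 1].len += len;
    } else if (join_next) {
        m->free[i].start = addr;
        m->free[i].len += len;
    } else {                             /* isolated: insert a new span */
        if (m->nfree == RMAP_MAX)
            return 0;
        memmove(&m->free[i + 1], &m->free[i],
            (size_t)(m->nfree - i) * sizeof (span_t));
        m->free[i].start = addr;
        m->free[i].len = len;
        m->nfree++;
    }
    return 1;
}
```

Coalescing on free is what keeps the span table short; without it, the map would fragment into many small spans that could no longer satisfy larger requests.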

The second function of the segkmem driver is to implement a page-level memory allocator by combining the resource map allocator with the page allocator. The page-level memory allocator within the segkmem driver is implemented with the function kmem_getpages(). The kmem_getpages() function is the kernel's central allocator for wired-down, page-sized memory requests. Its main client is the second-level memory allocator, the slab allocator, which uses large memory areas allocated from the page-level allocator to allocate arbitrarily sized memory objects. We cover the slab allocator in more detail later in this chapter.

The kmem_getpages() function allocates page-sized chunks of virtual address space from the kernelmap segment. The kernelmap segment is only one of many segments created by the segkmem driver, but it is the only one from which the segkmem driver allocates memory. The vmem allocator allocates portions of virtual address space within the kernelmap segment but on its own does not allocate any physical memory resources. It is used together with the page allocator (page_create_va()) and the hat_memload() function to allocate physically backed, mapped memory: the vmem allocator allocates some virtual address space, the page allocator allocates pages, and the hat_memload() function maps those pages into the virtual address space provided by vmem. A client of the segkmem memory allocator can acquire pages with kmem_getpages() and then return them to the heap with kmem_freepages(), as shown in Table 11.5.
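The three-way division of labor (vmem supplies addresses, the page allocator supplies pages, and hat_memload() installs the translations) can be modeled in user space. The sketch below is a toy simulation under stated assumptions: a 16-page heap, a plain array standing in for the HAT's page tables, and hypothetical functions va_alloc(), page_take(), toy_getpages(), and toy_freepages(). It is not the segkmem code.

```c
#include <assert.h>
#include <stddef.h>

#define NPAGES 16          /* toy heap: 16 virtual pages, 16 physical frames */
#define UNMAPPED (-1)

static int frame_free[NPAGES];   /* 1 if the physical frame is free */
static int va_used[NPAGES];      /* 1 if the virtual page is reserved */
static int pte[NPAGES];          /* virtual page -> physical frame (toy "HAT") */

void toy_init(void) {
    for (int i = 0; i < NPAGES; i++) {
        frame_free[i] = 1;
        va_used[i] = 0;
        pte[i] = UNMAPPED;
    }
}

/* Step 1 (vmem's role): reserve npages contiguous virtual pages. */
static int va_alloc(int npages) {
    for (int start = 0; start + npages <= NPAGES; start++) {
        int ok = 1;
        for (int j = 0; j < npages && ok; j++)
            ok = !va_used[start + j];
        if (ok) {
            for (int j = 0; j < npages; j++)
                va_used[start + j] = 1;
            return start;
        }
    }
    return -1;
}

/* Step 2 (page allocator's role): take any free frame; frames need not be
   contiguous. Scanning from the top makes the mappings visibly non-identity. */
static int page_take(void) {
    for (int f = NPAGES - 1; f >= 0; f--)
        if (frame_free[f]) {
            frame_free[f] = 0;
            return f;
        }
    return -1;
}

/* Reserve address space, then back and map each page (step 3, the HAT's role).
   Returns the first virtual page, or -1 if no address range is available. */
int toy_getpages(int npages) {
    int va = va_alloc(npages);
    if (va < 0)
        return -1;
    for (int i = 0; i < npages; i++) {
        int f = page_take();
        /* Cannot fail: there are as many frames as virtual pages, and every
           reserved virtual page holds exactly one frame. */
        assert(f >= 0);
        pte[va + i] = f;
    }
    return va;
}

/* Unmap each page, return its frame, and release the address range. */
void toy_freepages(int va, int npages) {
    for (int i = 0; i < npages; i++) {
        frame_free[pte[va + i]] = 1;
        pte[va + i] = UNMAPPED;
        va_used[va + i] = 0;
    }
}
```

Keeping address reservation separate from physical allocation is what lets the same address-space manager serve consumers whose pages come from elsewhere, or that need no pages at all.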

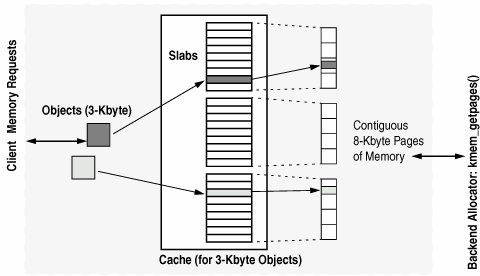

Pages allocated through kmem_getpages() are not pageable and are one of the few exceptions in the Solaris environment where a mapped page has no logically associated vnode. To accommodate that case, a special vnode, kvp, is used. All pages created through the segkmem segment have kvp as the vnode in their identity; this allows the kernel to identify wired-down kernel pages.

11.2.3. The Kernel Memory Slab Allocator

In this section, we introduce the general-purpose memory allocator, known as the slab allocator. We begin with a quick walk-through of the slab allocator features, then look at how the allocator implements object caching, and follow up with a more detailed discussion of the internal implementation.

11.2.3.1. Slab Allocator Overview

Solaris provides a general-purpose memory allocator that supports arbitrarily sized memory allocations. We refer to this allocator as the slab allocator because it consumes large slabs of memory and then allocates smaller requests with portions of each slab. We use the slab allocator for memory requests that are:
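A page's identity is its (vnode, offset) pair, so tagging wired-down kernel pages reduces to a pointer comparison against kvp. The sketch below uses heavily simplified stand-ins for vnode_t and page_t (the real structures carry many more fields). It also assumes that the offset recorded for a kvp page is its kernel virtual address; treat that as an assumption. The helper names kpage_set_identity() and page_is_kvp() are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified stand-ins: the real vnode_t and page_t are much larger. */
typedef struct vnode {
    const char *v_name;            /* hypothetical field, for illustration */
} vnode_t;

typedef struct page {
    vnode_t *p_vnode;              /* identity, part 1: which object */
    unsigned long long p_offset;   /* identity, part 2: offset within it */
} page_t;

/* The single special vnode that owns every segkmem page. */
vnode_t kvp = { "kvp" };

/* Give a freshly created kernel page its identity <kvp, kva>.
   Assumption: the offset is the page's kernel virtual address. */
void kpage_set_identity(page_t *pp, unsigned long long kva) {
    assert(pp != NULL);
    pp->p_vnode = &kvp;
    pp->p_offset = kva;
}

/* A page is a wired-down kernel page exactly when its vnode is kvp. */
int page_is_kvp(const page_t *pp) {
    return pp->p_vnode == &kvp;
}
```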

The slab allocator was introduced in Solaris 2.4, replacing the buddy allocator that was part of the original SVR4 UNIX. The reasons for introducing the slab allocator were as follows:

The slab allocator solves those problems and dramatically reduces overall system complexity. In fact, when the slab allocator was integrated into Solaris, it resulted in a net reduction of 3,000 lines of code because we could centralize a great deal of the memory allocation code and could remove a lot of the duplicated memory allocator functions from the clients of the memory allocator. The slab allocator is significantly faster than the SVR4 allocator it replaced. Table 11.6 shows some of the performance measurements that were made when the slab allocator was first introduced.

The slab allocator provides substantial additional functionality, including the following:

The slab allocator uses the term object to describe a single memory allocation unit, cache to refer to a pool of like objects, and slab to refer to a group of objects that reside within the cache. Each object type has one cache, which is constructed from one or more slabs. Figure 11.4 shows the relationship between objects, slabs, and the cache. The example shows 3-Kbyte memory objects within a cache, backed by 8-Kbyte pages.

Figure 11.4. Objects, Caches, Slabs, and Pages of Memory

The slab allocator solves many of the fragmentation issues by grouping different-sized memory objects into separate caches, where each object cache has its own object size and characteristics. Grouping the memory objects into caches of similar size allows the allocator to minimize the amount of free space within each cache by neatly packing objects into slabs, where each slab in the cache represents a contiguous group of pages. Since we have one cache per object type, we would expect to see many caches active at once in the Solaris kernel. For example, we should expect to see one cache with 440-byte objects for UFS inodes, another cache of 56-byte objects for file structures, another cache of 872-byte objects for LWP structures, and several other caches.

The allocator has a logical front end and back end. Objects are allocated from the front end, and slabs are allocated from pages supplied by the back-end page allocator. This approach allows the slab allocator to be used for more than regular wired-down memory; in fact, the allocator can allocate almost any type of memory object. The allocator is, however, primarily used to allocate memory objects from physical pages by using kmem_getpages() as the back-end allocator.

Caches are created with kmem_cache_create(), once for each type of memory object. Caches are generally created during subsystem initialization, for example, in the init routine of a loadable driver. Similarly, caches are destroyed with the kmem_cache_destroy() function.
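The packing in Figure 11.4 is simple integer arithmetic. The sketch below ignores slab-management data and coloring (both covered in Section 11.2.3.7) and computes how many objects a slab holds and how much of it is left over; the function slab_fit() is hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* How fixed-size objects pack into a slab of one or more pages.
   Slab-management overhead and coloring are ignored here. */
typedef struct {
    size_t objs_per_slab;
    size_t wasted_bytes;      /* bytes left over after packing */
} slab_fit_t;

slab_fit_t slab_fit(size_t obj_size, size_t slab_size) {
    assert(obj_size > 0 && obj_size <= slab_size);
    slab_fit_t f;
    f.objs_per_slab = slab_size / obj_size;
    f.wasted_bytes = slab_size - f.objs_per_slab * obj_size;
    return f;
}
```

For 3-Kbyte objects, a single 8-Kbyte page holds two objects and wastes 2 Kbytes (25 percent), whereas a hypothetical three-page (24-Kbyte) slab holds eight with no waste. This illustrates why it can pay for a slab to be a contiguous group of pages rather than a single page; the sketch says nothing about which slab sizes Solaris actually chooses.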
Caches are named by a string provided as an argument, to allow friendlier statistics and tags for debugging. Once a cache is created, objects can be allocated from it with kmem_cache_alloc(), which returns one object of the size associated with the cache. Objects are returned to the cache with kmem_cache_free().

11.2.3.2. Object Caching

Most objects are allocated and deallocated frequently, and many of the slab allocator's benefits arise from resolving the issues surrounding allocation and deallocation. The allocator tries to defer most of the real work associated with allocation and deallocation until it is really necessary, by keeping the objects alive until memory needs to be returned to the back end. It can do this because the client tells the slab allocator what the object is being used for, so that the allocator remains in control of the object's true state.

So, what do we really mean by keeping the object alive? If we look at what a subsystem uses memory objects for, we find that a memory object typically consists of two common components: the header, or description of what resides within the object and associated locks; and the actual payload that resides within the object. A subsystem typically allocates memory for the object, constructs the object in some way (writes a header inside the object or adds it to a list), and then creates any locks required to synchronize access to the object. The subsystem then uses the object. When finished with the object, the subsystem must deconstruct the object, release locks, and then return the memory to the allocator. In short, a subsystem typically allocates, constructs, uses, deconstructs, and then frees the object. If the object is created and destroyed often, then a great deal of work is expended constructing and deconstructing the object. The slab allocator does away with this extra work by caching the object in its constructed form.
When the client asks for a new object, the allocator returns an available constructed object if it has one and constructs a new one only if it does not. When the client returns an object, the allocator does nothing other than mark the object as free, leaving all the constructed data (header information and locks) intact. The object can be reused by the client subsystem without the allocator needing to construct or deconstruct it; construction and deconstruction are done only when the cache needs to grow or shrink. Deconstruction is deferred until the allocator needs to free memory back to the back-end allocator.

To allow the slab allocator to take ownership of constructing and deconstructing objects, the client subsystem must provide a constructor and destructor method. This service allows the allocator to construct new objects as required and then to deconstruct objects later, asynchronously to the client's memory requests. The kmem_cache_create() interface supports this feature by accepting a constructor and destructor function as part of the create request. The slab allocator also allows slab caches to be created with no constructor or destructor, for simple allocation and deallocation of raw memory objects.

The slab allocator moves a lot of the complexity out of the clients and centralizes memory allocation and deallocation policies. At times, the allocator may need to shrink a cache after being notified of a memory shortage by the VM system. The allocator can then free all unused objects by calling the destructor for each object that is marked free and returning unused slabs to the back-end allocator. A further callback interface is provided in each cache so that the allocator can let the client subsystem know about the memory pressure. This callback is optionally supplied when the cache is created and is simply a function that the client implements to return, by means of kmem_cache_free(), as many objects to the cache as possible.
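The construct-once, reuse-many pattern can be sketched in user space. In the C sketch below, malloc() stands in for the back end, a small array of pointers holds the cached free objects, and objcache_t, oc_alloc(), oc_free(), oc_reap(), and the toy_inode_t client are all hypothetical. The callback shapes are only loosely modeled on kmem_cache_create(); real slab caches also group objects into slabs and per-CPU magazines, which this sketch omits.

```c
#include <assert.h>
#include <stdlib.h>

/* Callback shapes loosely modeled on kmem_cache_create(); simplified here. */
typedef int  (*ctor_fn)(void *buf, void *arg);
typedef void (*dtor_fn)(void *buf, void *arg);

#define OC_MAX 64   /* hypothetical limit on cached free objects */

typedef struct {
    size_t size;
    ctor_fn ctor;
    dtor_fn dtor;
    void *arg;                /* passed to the callbacks */
    void *cached[OC_MAX];     /* constructed objects not currently in use */
    int ncached;
    int nconstructed;         /* counters, for observing the behavior */
    int ndestroyed;
} objcache_t;

void oc_init(objcache_t *c, size_t size, ctor_fn ctor, dtor_fn dtor, void *arg) {
    c->size = size;
    c->ctor = ctor;
    c->dtor = dtor;
    c->arg = arg;
    c->ncached = c->nconstructed = c->ndestroyed = 0;
}

static void oc_destroy(objcache_t *c, void *buf) {
    if (c->dtor != NULL)
        c->dtor(buf, c->arg);
    c->ndestroyed++;
    free(buf);
}

/* Hand out a cached, already-constructed object if there is one;
   construct a new object only when the cache has to grow. */
void *oc_alloc(objcache_t *c) {
    if (c->ncached > 0)
        return c->cached[--c->ncached];
    void *buf = malloc(c->size);
    if (buf == NULL)
        return NULL;
    if (c->ctor != NULL && c->ctor(buf, c->arg) != 0) {
        free(buf);
        return NULL;
    }
    c->nconstructed++;
    return buf;
}

/* Freeing only caches the object; its constructed state stays intact.
   The destructor runs only if there is no room to cache it. */
void oc_free(objcache_t *c, void *buf) {
    assert(buf != NULL);
    if (c->ncached < OC_MAX)
        c->cached[c->ncached++] = buf;
    else
        oc_destroy(c, buf);
}

/* Memory pressure: destroy every cached object and release its memory. */
void oc_reap(objcache_t *c) {
    while (c->ncached > 0)
        oc_destroy(c, c->cached[--c->ncached]);
}

/* Example client object: lock_ready stands in for state a constructor sets up. */
typedef struct {
    int lock_ready;
    int refcnt;
} toy_inode_t;

int toy_inode_ctor(void *buf, void *arg) {
    (void)arg;
    toy_inode_t *ip = buf;
    ip->lock_ready = 1;   /* e.g. initializing a mutex in a real kernel */
    ip->refcnt = 0;
    return 0;
}

void toy_inode_dtor(void *buf, void *arg) {
    (void)arg;
    ((toy_inode_t *)buf)->lock_ready = 0;   /* e.g. destroying that mutex */
}
```

Allocating, freeing, and reallocating an object returns the same constructed buffer without calling the constructor again; the destructor runs only when oc_reap() simulates a memory shortage.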
A good example is a file system, which uses objects to store the inodes. The slab allocator manages inode objects; the cache management, construction, and deconstruction of inodes are handed over to the slab allocator. The file system simply asks the slab allocator for a "new inode" each time it requires one. For example, a file system could call the slab allocator to create a slab cache, as shown below.

```c
inode_cache = kmem_cache_create("inode_cache",
    sizeof (struct inode),      /* object size */
    0,                          /* alignment: 0 selects the default */
    inode_cache_constructor,    /* constructor */
    inode_cache_destructor,     /* destructor */
    inode_cache_reclaim,        /* reclaim callback */
    NULL,                       /* private argument passed to the callbacks */
    NULL,                       /* back-end vmem source: NULL selects the default */
    0);                         /* cache flags */

/* KM_SLEEP (value 0): the allocation may block until memory is available */
struct inode *inode = kmem_cache_alloc(inode_cache, KM_SLEEP);
```

The example shows that we create a cache named inode_cache, with objects of the size of an inode, the default alignment, a constructor and a destructor function, and a reclaim function. The back-end memory allocator is specified as NULL, which by default allocates physical pages from the segkmem page allocator.

We can see from the statistics exported by the slab allocator that the UFS file system uses a similar mechanism to allocate its inodes. We use the kstat command to dump the statistics. (We discuss allocator statistics in more detail in Section 11.2.3.9.)

```
sol8# kstat -n ufs_inode_cache
module: unix                            instance: 0
name:   ufs_inode_cache                 class:    kmem_cache
        align                           8
        alloc                           7357
        alloc_fail                      0
        buf_avail                       8
        buf_constructed                 0
        buf_inuse                       7352
        buf_max                         7360
        buf_size                        368
        buf_total                       7360
        chunk_size                      368
        crtime                          64.555291515
        depot_alloc                     0
        depot_contention                0
        depot_free                      2
        empty_magazines                 0
        free                            7
        full_magazines                  0
        hash_lookup_depth               0
        hash_rescale                    0
        hash_size                       0
        magazine_size                   3
        slab_alloc                      7352
        slab_create                     736
        slab_destroy                    0
        slab_free                       0
        slab_size                       4096
        snaptime                        21911.755149204
        vmem_source                     20
```

The allocator interfaces are shown in Table 11.7. Caches are created with the kmem_cache_create() function, which can optionally supply callbacks for construction, destruction, and cache reclaim notifications. The callback functions are described in Table 11.8.

11.2.3.3. General-Purpose Allocations

In addition to object-based memory allocation, the slab allocator provides backward-compatible, general-purpose memory allocation routines. These routines allocate arbitrary-length memory through a malloc()-like interface, kmem_alloc(). The slab allocator maintains a list of various-sized caches to accommodate kmem_alloc() requests and simply converts each kmem_alloc() request into a request for an object from the nearest-sized cache that is large enough. The caches used for kmem_alloc() are named kmem_alloc_n, where n is the size of the objects within the cache (see Section 11.2.3.9). The functions are shown in Table 11.9.
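Converting a kmem_alloc() request into a cache request amounts to finding the smallest size class that can hold the request. The sketch below does this with a binary search over a hypothetical table of class sizes; the real kmem_alloc_n caches use their own set of sizes, and the kernel may use a faster lookup than a search. The names class_sizes and size_to_class() are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical size classes, smallest first. The real kmem_alloc_n
   caches use their own set of sizes. */
static const size_t class_sizes[] = {
    8, 16, 24, 32, 48, 64, 96, 128, 192, 256, 384, 512,
    768, 1024, 1536, 2048, 4096, 8192
};
#define NCLASSES (sizeof (class_sizes) / sizeof (class_sizes[0]))

/* Return the object size of the smallest cache that fits `size` bytes,
   or 0 if no cache is large enough (such requests are not handled here). */
size_t size_to_class(size_t size) {
    assert(size > 0);
    size_t lo = 0, hi = NCLASSES;       /* search for the first class >= size */
    while (lo < hi) {
        size_t mid = lo + (hi - lo) / 2;
        if (class_sizes[mid] < size)
            lo = mid + 1;
        else
            hi = mid;
    }
    return lo < NCLASSES ? class_sizes[lo] : 0;
}
```

With this table, a 100-byte request is served from the 128-byte cache, so 28 bytes of that object go unused; finer-grained size classes trade more caches for less internal waste.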

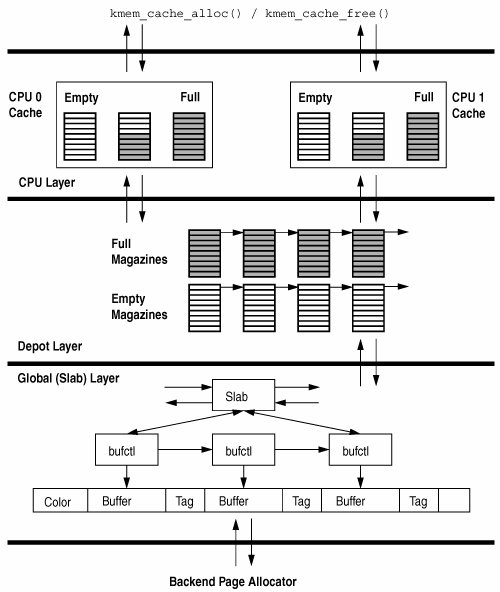

11.2.3.4. Slab Allocator Implementation

The slab allocator implements the allocation and management of objects to the front-end clients, using memory provided by the back-end allocator. In our introduction to the slab allocator, we discussed in some detail the virtual allocation units: the object and the slab. The slab allocator implements several internal layers to provide efficient allocation of objects from slabs. The extra internal layers reduce the amount of contention between allocation requests from multiple threads, which ultimately allows the allocator to provide good scalability on large SMP systems.

Figure 11.5 shows the internal layers of the slab allocator. The additional layers provide a cache of allocated objects for each CPU, so a thread can allocate an object from a local per-CPU object cache without having to hold a lock on the global slab cache. For example, if two threads both want to allocate an inode object from the inode cache, then the first thread's allocation request would hold a lock on the inode cache and would block the second thread until the first thread has its object allocated. The per-CPU cache layers overcome this blocking with an object cache per CPU to try to avoid the contention between two concurrent requests. Each CPU has its own short-term cache of objects, which reduces the amount of time that each request needs to go down into the global slab cache.

Figure 11.5. Slab Allocator Internal Implementation

The layers shown in Figure 11.5 are separated into the slab layer, the depot layer, and the CPU layer. The upper two layers (which together are known as the magazine layer) are caches of allocated groups of objects and use a military analogy of allocating rifle rounds from magazines. Each per-CPU cache has magazines of allocated objects and can allocate objects (rounds) from its own magazines without having to bother the lower layers.
The CPU layer needs to allocate objects from the lower (depot) layer only when its magazines are empty. The depot layer refills magazines from the slab layer by assembling objects, which may reside in many different slabs, into full magazines.

11.2.3.5. The CPU Layer

The CPU layer caches groups of objects to minimize the number of times that an allocation will need to go down to the lower layers. This means that we can satisfy the majority of allocation requests without having to hold any global locks, thus dramatically improving the scalability of the allocator.

Continuing the military analogy, the CPU layer keeps three magazines of objects on hand to satisfy allocation and deallocation requests: a full, a half-allocated, and an empty magazine. Objects are allocated from the half-allocated magazine, and until that magazine is empty, all allocations are simply satisfied from it. When the magazine empties, an empty magazine is returned to the magazine layer, and objects are allocated from the full magazine that was already available at the CPU layer. The CPU layer keeps the empty and full magazines on hand to prevent the magazine layer from having to construct and deconstruct magazines when on a full or empty magazine boundary. If a client rapidly allocates and deallocates objects when the magazine is on a boundary, then the CPU layer can simply use its full and empty magazines to service the requests, rather than having the magazine layer deconstruct and reconstruct new magazines at each request. The magazine model allows the allocator to guarantee that it can satisfy at least a magazine size of rounds without having to go to the depot layer.

11.2.3.6. The Depot Layer

The depot layer assembles groups of objects into magazines. Unlike a slab, a magazine's objects are not necessarily allocated from contiguous memory; rather, a magazine contains a series of pointers to objects within slabs.
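The CPU-layer logic can be sketched in C. For simplicity, this sketch keeps two magazines per CPU, the one in use plus a spare that is always either completely full or completely empty, rather than the three described above; this resembles the loaded and previous magazines of Bonwick and Adams' magazine design, but treat it as an illustration rather than the Solaris code. MAG_ROUNDS, cpu_cache_alloc(), and cpu_cache_free() are hypothetical, and the per-CPU synchronization a kernel needs is omitted.

```c
#include <assert.h>
#include <stddef.h>

#define MAG_ROUNDS 3   /* hypothetical fixed magazine size */

typedef struct {
    int nrounds;                  /* objects currently in the magazine */
    void *rounds[MAG_ROUNDS];
} magazine_t;

/* Per-CPU state: the magazine in use plus one spare that is kept either
   completely full or completely empty. */
typedef struct {
    magazine_t *loaded;
    magazine_t *spare;
} cpu_cache_t;

static void swap_mags(cpu_cache_t *cc) {
    magazine_t *t = cc->loaded;
    cc->loaded = cc->spare;
    cc->spare = t;
}

/* Allocate a round. Returns NULL when both magazines are empty, which is
   the only case where the caller would have to visit the depot. */
void *cpu_cache_alloc(cpu_cache_t *cc) {
    if (cc->loaded->nrounds == 0 && cc->spare->nrounds > 0)
        swap_mags(cc);                  /* spare is full: switch to it */
    if (cc->loaded->nrounds == 0)
        return NULL;
    return cc->loaded->rounds[--cc->loaded->nrounds];
}

/* Free a round. Returns 0 when both magazines are full, which is the
   only case where the caller would have to visit the depot. */
int cpu_cache_free(cpu_cache_t *cc, void *buf) {
    assert(buf != NULL);
    if (cc->loaded->nrounds == MAG_ROUNDS && cc->spare->nrounds == 0)
        swap_mags(cc);                  /* spare is empty: switch to it */
    if (cc->loaded->nrounds == MAG_ROUNDS)
        return 0;
    cc->loaded->rounds[cc->loaded->nrounds++] = buf;
    return 1;
}
```

A client that alternately allocates and frees at a magazine boundary is served entirely by swapping the two magazines; the depot is needed only when both are empty (on allocation) or both are full (on free).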
The number of rounds per magazine for each cache changes dynamically, depending on the amount of contention that occurs at the depot layer. The more rounds per magazine, the lower the depot contention, but more memory is consumed. Each range of object sizes has an upper and lower magazine size. Table 11.10 shows the magazine size range for each object size.

A slab allocator maintenance thread is scheduled every 15 seconds (controlled by the tunable kmem_reap_interval) to recalculate the magazine sizes. If significant contention has occurred at the depot level, then the magazine size is bumped up. Refer to Table 11.11 for the parameters that control magazine resizing.
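The resizing policy can be sketched as a periodic check of how much depot contention has accumulated since the previous check. The magazine sizes below are taken from the kmem_magazine_n cache names in the ::kmastat output later in this section; their buffer sizes (16 bytes for 1 round up to 1,152 bytes for 143 rounds) appear consistent with one 8-byte pointer per round plus one header word. The contention limit, the structure, and the names mag_tuning_t, mag_update(), and mag_rounds() are assumptions, not the parameters listed in Table 11.11.

```c
#include <assert.h>

/* Magazine round counts implied by the kmem_magazine_n cache names. */
static const int mag_sizes[] = { 1, 3, 7, 15, 31, 47, 63, 95, 143 };
#define NMAGSIZES ((int)(sizeof (mag_sizes) / sizeof (mag_sizes[0])))

#define CONTENTION_LIMIT 3   /* hypothetical: contention events tolerated per interval */

typedef struct {
    int mag_index;          /* current size is mag_sizes[mag_index] */
    int max_index;          /* upper bound for this object size (cf. Table 11.10) */
    long depot_contention;  /* running count of contended depot accesses */
    long last_contention;   /* count seen at the previous update */
} mag_tuning_t;

/* Run once per maintenance interval: grow the magazine if the depot was
   contended more than CONTENTION_LIMIT times since the last run. */
void mag_update(mag_tuning_t *t) {
    assert(t->max_index < NMAGSIZES && t->mag_index <= t->max_index);
    long delta = t->depot_contention - t->last_contention;
    t->last_contention = t->depot_contention;
    if (delta > CONTENTION_LIMIT && t->mag_index < t->max_index)
        t->mag_index++;
}

int mag_rounds(const mag_tuning_t *t) {
    return mag_sizes[t->mag_index];
}
```

Growing one step at a time means a brief burst of contention costs little extra memory, while sustained contention steadily pushes the magazine toward the upper bound for that object size.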

11.2.3.7. The Global (Slab) Layer

The global slab layer allocates slabs of objects from contiguous pages of physical memory and hands them up to the magazine layer for allocation. The global slab layer is used only when the upper layers need to allocate or deallocate entire slabs of objects to refill their magazines.

The slab is the primary unit of allocation in the slab layer. When the allocator needs to grow a cache, it acquires an entire slab of objects. When the allocator wants to shrink a cache, it returns unused memory to the back end by deallocating a complete slab. A slab consists of one or more pages of virtually contiguous memory carved up into equal-sized chunks, with a reference count indicating how many of those chunks have been allocated. The contents of each slab are managed by a kmem_slab data structure that maintains the slab's linkage in the cache, its reference count, and its list of free buffers. In turn, each buffer in the slab is managed by a kmem_bufctl structure that holds the free list linkage, the buffer address, and a back-pointer to the controlling slab.

For objects smaller than 1/8th of a page, the slab allocator builds a slab by allocating a page, placing the slab data at the end, and dividing the rest into equal-sized buffers. Each buffer serves as its own kmem_bufctl while on the free list. Only the linkage is actually needed, since everything else is computable. These are essential optimizations for small buffers; otherwise, we would end up allocating almost as much memory for kmem_bufctl as for the buffers themselves.

The free list linkage resides at the end of the buffer, rather than the beginning, to facilitate debugging. This location is driven by the empirical observation that the beginning of a data structure is typically more active than the end. If a buffer is modified after being freed, the problem is easier to diagnose if the heap structure (free list linkage) is still intact.
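The small-object layout can be checked numerically. This sketch assumes a 56-byte embedded kmem_slab, the buffer size reported for kmem_slab_cache in the ::kmastat listing later in this section (taken from a different system, so treat it as an assumption). With a 4,096-byte slab and 368-byte chunks, the values in the ufs_inode_cache kstat output, it yields 10 buffers per slab, consistent with buf_total divided by slab_create (7,360 / 736) in that output. The names small_slab_layout() and freelist_link() are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* A one-page slab for small objects: equal-sized buffers from the start of
   the page, the slab control structure in the last slab_hdr bytes. */
typedef struct {
    size_t nbufs;   /* buffers that fit */
    size_t slack;   /* unused bytes, available for coloring */
} small_slab_t;

small_slab_t small_slab_layout(size_t page_size, size_t chunk_size, size_t slab_hdr) {
    assert(chunk_size > 0 && slab_hdr < page_size);
    small_slab_t s;
    size_t usable = page_size - slab_hdr;
    s.nbufs = usable / chunk_size;
    s.slack = usable - s.nbufs * chunk_size;
    return s;
}

/* While a buffer is free, its last pointer-sized word holds the free-list
   link, so a stray write to the start of a freed buffer leaves the list intact. */
void **freelist_link(void *buf, size_t chunk_size) {
    return (void **)((char *)buf + chunk_size - sizeof (void *));
}
```

The 360 leftover bytes in this example are the room the allocator has for slab coloring.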
The allocator reserves an additional word for constructed objects so that the linkage does not overwrite any constructed state.

For objects greater than 1/8th of a page, a different scheme is used. Allocating objects from within a page-sized slab is efficient for small objects but not for large ones. The reason for the inefficiency of large-object allocation is that we could fit only one 4-Kbyte buffer on an 8-Kbyte page: the embedded slab control data takes up a few bytes, and two 4-Kbyte buffers would need just over 8 Kbytes. For large objects, we allocate a separate slab management structure from a separate pool of memory (another slab allocator cache, the kmem_slab_cache). We also allocate a buffer control structure for each buffer in the cache from another cache, the kmem_bufctl_cache. The slab/bufctl/buffer structures are shown in the slab layer in Figure 11.5.

The slab layer solves another common memory allocation problem by implementing slab coloring. If memory objects all start at a common offset (e.g., at 512-byte boundaries), then accessing data at the start of each object could result in the same cache line being used for all of the objects. The issues are similar to those discussed in Section 10.2.7. To overcome the cache line problem, the allocator applies an offset to the start of each slab so that buffers in different slabs start at different offsets. This approach is also shown in Figure 11.5 by the color offset segment that resides at the start of each memory allocation unit before the actual buffer. Slab coloring results in much better cache utilization and more evenly balanced memory loading.

11.2.3.8. Slab Cache Parameters

The slab allocator parameters are shown in Table 11.11 for reference only. We recommend that none of these values be changed.

11.2.3.9. Slab Allocator Statistics

Two forms of slab allocator statistics are available: global statistics and per-cache statistics.
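Coloring can be sketched as a per-cache cursor that advances each time a new slab is created. The step size, the wraparound rule, and the names color_cursor_t and next_slab_color() are assumptions for illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Per-cache coloring cursor: each new slab places its first buffer at the
   next offset, stepping by `align` and wrapping once the slack is used up. */
typedef struct {
    size_t align;      /* step between colors, e.g. 64 for 64-byte cache lines */
    size_t maxcolor;   /* largest offset that still fits: the slab's slack */
    size_t color;      /* offset for the next slab */
} color_cursor_t;

size_t next_slab_color(color_cursor_t *cc) {
    assert(cc->align > 0);
    size_t c = cc->color;
    cc->color += cc->align;
    if (cc->color > cc->maxcolor)
        cc->color = 0;
    return c;
}
```

With a 64-byte step and 200 bytes of slack, successive slabs start their buffers at offsets 0, 64, 128, and 192 before the cycle repeats, so the first buffers of four consecutive slabs map to different cache-line offsets instead of competing for the same lines.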
The global statistics are available through the mdb debugger and display a summary of the entire cache list managed by the allocator.

```
# mdb -k
Loading modules: [ unix krtld genunix specfs dtrace ufs ip sctp usba s1394 fcp fctl nca lofs nfs audiosup sppp random crypto logindmux ptm fcip md cpc zpool ]
> ::memstat
^C
> ::kmastat
cache                        buf    buf    buf     memory      alloc alloc
name                        size in use  total     in use    succeed  fail
------------------------- ------ ------ ------ ---------- ---------- -----
kmem_magazine_1               16  10410  21672     352256    1170957     0
kmem_magazine_3               32   5712   7560     245760     512141     0
kmem_magazine_7               64   5677  11214     729088     682328     0
kmem_magazine_15             128   7098  13237    1748992     666480     0
kmem_magazine_31             256    607    660     180224      27736     0
kmem_magazine_47             384     65    110      45056      21842     0
kmem_magazine_63             512    143    217     126976       8941     0
kmem_magazine_95             768      0     25      20480       4595     0
kmem_magazine_143           1152     15     33      45056       2829     0
kmem_slab_cache               56  45443 146880    8355840     723032     0
kmem_bufctl_cache             24 308453 787416   19197952    2575391     0
kmem_bufctl_audit_cache      192      0      0          0          0     0
kmem_va_4096                4096  48978 174080  713031680     973680     0
kmem_va_8192                8192   1500   3568   29229056     232825     0
.
.
zio_buf_131072            131072   9210   9605 1258946560    2333601     0
dmu_buf_impl_t               432  83995 105003   47788032    4994346     0
dnode_t                      768   5742  12255   10039296    1361264     0
zfs_znode_cache              168   4592  13488    2301952    1453000     0
zil_dobj_cache                40      0    101       4096   11692545     0
zil_itx_cache                136      7   9309    1314816   10550304     0
zil_lwb_cache                120      1     66       8192        188     0
zfs_acl_cache                 40      0      0          0          0     0
------------------------- ------ ------ ------ ---------- ---------- -----
Total [hat_memload]                               1687552    1364579     0
Total [kmem_msb]                                 31047680    6396644     0
Total [kmem_va]                                 873070592    1361767     0
Total [kmem_default]                           1753763840 1290871216     0
Total [kmem_io_2G]                                8429568       2103     0
Total [kmem_io_16M]                                 12288      30454     0
Total [bp_map]                                    1310720   10327220     0
Total [id32]                                         4096         21     0
Total [segkp]                                      393216       1613     0
Total [ip_minor_arena]                                256      10768     0
Total [spdsock]                                        64          1     0
Total [namefs_inodes]                                  64         44     0
------------------------- ------ ------ ------ ---------- ---------- -----
```

The ::kmastat dcmd shows summary information for each cache and a systemwide summary at the end. The columns are shown in Table 11.12.

A more detailed version of the per-cache statistics is exported by the kstat mechanism. You can use the kstat command to display the cache statistics, which are described in Table 11.13.

```
sol8# kstat -n ufs_inode_cache
module: unix                            instance: 0
name:   ufs_inode_cache                 class:    kmem_cache
        align                           8
        alloc                           7357
        alloc_fail                      0
        buf_avail                       8
        buf_constructed                 0
        buf_inuse                       7352
        buf_max                         7360
        buf_size                        368
        buf_total                       7360
        chunk_size                      368
        crtime                          64.555291515
        depot_alloc                     0
        depot_contention                0
        depot_free                      2
        empty_magazines                 0
        free                            7
        full_magazines                  0
        hash_lookup_depth               0
        hash_rescale                    0
        hash_size                       0
        magazine_size                   3
        slab_alloc                      7352
        slab_create                     736
        slab_destroy                    0
        slab_free                       0
        slab_size                       4096
        snaptime                        21911.755149204
        vmem_source                     20
```
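The raw counters can be combined into a few derived figures. The sketch below rests on our own reading of the counters: that slab_create minus slab_destroy is the number of slabs currently held, and that each slab is slab_size bytes and holds the same number of buffers. Check these interpretations against Table 11.13 before relying on them. The type cache_kstats_t and the function names are hypothetical.

```c
#include <assert.h>

/* A subset of the per-cache kstats shown above. */
typedef struct {
    unsigned long buf_size, buf_inuse, buf_total;
    unsigned long slab_create, slab_destroy, slab_size;
} cache_kstats_t;

/* Assumption: slabs currently held = slabs created - slabs destroyed. */
static unsigned long slabs_held(const cache_kstats_t *k) {
    assert(k->slab_create > k->slab_destroy);
    return k->slab_create - k->slab_destroy;
}

/* Assumption: every slab in the cache holds the same number of buffers. */
unsigned long bufs_per_slab(const cache_kstats_t *k) {
    return k->buf_total / slabs_held(k);
}

/* Bytes of slab memory currently held by the cache. */
unsigned long slab_bytes(const cache_kstats_t *k) {
    return slabs_held(k) * k->slab_size;
}

/* Percentage of the held slab memory occupied by allocated buffers. */
double pct_in_use(const cache_kstats_t *k) {
    return 100.0 * (double)(k->buf_inuse * k->buf_size) / (double)slab_bytes(k);
}
```

For the ufs_inode_cache output above, this gives 10 buffers per slab and 3,014,656 bytes (736 × 4,096) of slab memory, of which about 89.7 percent holds allocated inodes.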
