Design Issues of Intelligent Agents as Facilitators

In this section, we discuss design issues of facilitator agents in distributed collaborative-learning environments, including problems that often occur in the collaboration process, awareness, and how to present the awareness information and advice effectively and nonintrusively.

Software Agents and Pedagogical Agents

The term “agent” has been used in a variety of fields of computer science and artificial intelligence, in different ways, in different situations, and for different purposes. However, there is no commonly accepted notion of what constitutes an agent. As Shoham (1993) pointed out, the term “agent” has so many diverse uses that it is almost meaningless without reference to a particular concept of agent.

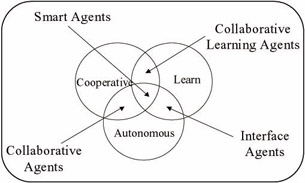

Many researchers have attempted to address this problem by characterizing agents along certain dimensions. For example, Franklin and Graesser (1997) constructed an agent taxonomy aimed at identifying the key features of agent systems in relation to different branches of the field. They then classified existing notions of agents within a taxonomic hierarchy. Nwana (1996) classified agents according to three ideal and primary attributes that agents should exhibit: autonomy, cooperation, and learning. Autonomy refers to the principle that agents can operate on their own without the need for human guidance. They “take initiative” instead of acting simply in response to their environments (Wooldridge & Jennings, 1998). Cooperation refers to the ability to interact with other agents and possibly humans via some communication language, which means they should possess a social ability. Learning refers to agents’ capability of improving their performance over time. Using the three characteristics, Nwana derived four types of agents in the agent typology: collaborative agents, collaborative learning agents, interface agents, and smart agents (Figure 1).

Figure 1: Agent typology. Source: Nwana (1996).

Although the facilitator agents described in this paper have the ability to learn and to act autonomously, their ability to communicate with users is simple. In this sense, the facilitator agents fall into the interface agent category.
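The classification above can be illustrated with a minimal sketch. The mapping of attribute pairs to agent types follows a common reading of Nwana's diagram and is an assumption here, as are the names `AgentProfile` and `classify`. Treating "simple" communication as the absence of the cooperation attribute is also a deliberate simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentProfile:
    """Which of the three primary attributes an agent exhibits (hypothetical type)."""
    autonomous: bool
    cooperative: bool
    learning: bool


def classify(profile: AgentProfile) -> str:
    """Map an attribute combination to one of the four derived agent types.

    The pairings below are an assumed reading of the typology figure.
    """
    a, c, l = profile.autonomous, profile.cooperative, profile.learning
    if a and c and l:
        return "smart agent"
    if a and c:
        return "collaborative agent"
    if c and l:
        return "collaborative learning agent"
    if a and l:
        return "interface agent"
    return "outside the four derived types"


# A facilitator agent: learns and acts autonomously, communicates only simply.
facilitator = AgentProfile(autonomous=True, cooperative=False, learning=True)
print(classify(facilitator))  # prints "interface agent"
```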

Malone, Grant, and Lai (1997) reviewed their experience in designing agents to support humans working together (sharing information and coordination). From the experience, they found two design principles:

- Semiformal systems: Do not build computational agents that try to solve complex problems all by themselves. Instead, build systems where the boundary between what the agents do and what the humans do is flexible.

- Radical tailorability: Do not build agents that try to figure out for themselves things that humans could easily tell them. Instead, try to build systems that make it as easy as possible for humans to see and modify the same information and reasoning processes their agents are using.

The design of our facilitator agents follows these two principles. On one hand, the agents are designed not to replace instructors but to work together with them to support the collaboration. On the other hand, the agents can be started, stopped, and turned off at the will of the users (students or instructors). We will also allow the users to customize the services provided by agents.

There are two more concerns when agents are built: competence and trust (Maes, 1997). Competence refers to how an agent acquires the knowledge it needs to decide when, what, and how to perform the task. In our case, will the agent depend only on the rules written by the instructor? Should it be able to improve its performance by learning? For agent systems to be truly “smart,” we believe that they would have to learn as they react and interact with their external environments. The ability to learn is a key attribute for intelligent agents. Trust refers to how we can guarantee that the user, in our case the instructor, feels comfortable in following the advice of the agent or delegating tasks to the agent, for example, letting the agents send e-mails to students directly without the instructor’s confirmation. It is probably not a good idea to give a user an interface agent that is sophisticated, qualified, and autonomous from the start (Maes, 1997). That would leave the user with a feeling of loss of control and understanding. We have tried different methods. One of our solutions is that at the beginning, the agents work together with the instructor, providing advice and explaining their reasoning process. Gradually the agents learn from the instructor’s feedback on their advice, improve their performance over time, and build a trust relationship, until a point is reached where the agents are allowed to perform actions without confirmation from the instructor.
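The gradual handover of control described above could be sketched as follows. This is an illustrative assumption, not the mechanism used in our agents: the class name `TrustTracker`, the acceptance-rate criterion, and both threshold values are hypothetical, and the sketch models only the trust gate, not the learning itself.

```python
class TrustTracker:
    """Tracks instructor feedback on advice and decides when the agent may act alone.

    Hypothetical sketch: thresholds and the acceptance-rate criterion are assumptions.
    """

    def __init__(self, min_feedback: int = 20, min_acceptance: float = 0.9):
        self.min_feedback = min_feedback      # feedback items required before autonomy
        self.min_acceptance = min_acceptance  # required share of accepted advice
        self.accepted = 0
        self.rejected = 0

    def record_feedback(self, accepted: bool) -> None:
        """Store whether the instructor accepted one piece of advice."""
        if accepted:
            self.accepted += 1
        else:
            self.rejected += 1

    @property
    def acceptance_rate(self) -> float:
        total = self.accepted + self.rejected
        return self.accepted / total if total else 0.0

    def may_act_without_confirmation(self) -> bool:
        """True once enough feedback has been seen and enough of it was positive."""
        total = self.accepted + self.rejected
        return total >= self.min_feedback and self.acceptance_rate >= self.min_acceptance


tracker = TrustTracker(min_feedback=5, min_acceptance=0.8)
for _ in range(4):
    tracker.record_feedback(accepted=True)
print(tracker.may_act_without_confirmation())  # still too little feedback
tracker.record_feedback(accepted=True)
print(tracker.may_act_without_confirmation())  # threshold reached
```

Until the gate opens, the agent would present its advice together with its reasoning and wait for the instructor to confirm or reject it.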

Pedagogical agents are defined, according to Johnson et al. (2000), as “autonomous and/or interface agents that support human learning in the context of an interactive learning environment.” They are built upon previous research on intelligent tutoring systems (ITSs) (Wenger, 1987). Many researchers have designed and developed pedagogical agents for ITSs (Johnson & Rickel, 1997; Lester et al., 1999; Cassell, 2000), where the agents play the role of a guide or tutor. They tell the students what to do and lead them through the process of performing a task. Most of them tend to dominate the interface and constantly require the student’s attention. Unlike the agents of many ITSs, the facilitator agents of distributed collaborative-learning environments work in the background. They monitor the collaboration, collect data, compute statistics, and provide students and instructors with awareness information and advice, which can be ignored if it is considered of low priority. This makes the agents less intrusive so that the students can concentrate on their collaboration without feeling disturbed.
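As a rough illustration of the background monitoring described above, the following sketch computes each group member's share of logged actions. The event format, the action names, and the function `participation_shares` are hypothetical; a real environment would log far richer data.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class Event(NamedTuple):
    """One logged user action (hypothetical log format)."""
    student: str
    action: str  # e.g. "post_message" or "edit_document" (assumed names)


def participation_shares(events: Iterable[Event], members: Iterable[str]) -> dict[str, float]:
    """Return each member's fraction of all logged actions by the group."""
    counts = Counter(e.student for e in events)
    members = list(members)
    total = sum(counts[m] for m in members)
    if total == 0:
        # No activity yet: report zero for everyone instead of dividing by zero.
        return {m: 0.0 for m in members}
    return {m: counts[m] / total for m in members}


log = [
    Event("ann", "post_message"),
    Event("ann", "edit_document"),
    Event("bob", "post_message"),
    Event("ann", "post_message"),
]
print(participation_shares(log, ["ann", "bob", "cho"]))
```

Statistics of this kind can be shown passively, which leaves students free to ignore them when they are busy.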

Awareness

Awareness of individual and group activities is critical to successful collaboration. Dourish and Bellotti (1992) defined awareness as “an understanding of the activities of others, which provides a context for your own activity.” They further explained that the context is used to ensure that individual contributions are relevant to the group’s activity as a whole and to evaluate individual actions with respect to group goals and progress. The information, then, allows groups to manage the process of collaborative working. Awareness information is always required to coordinate group activities.

Gutwin and Greenberg (1995) identified four types of student awareness: social awareness, task awareness, concept awareness, and workspace awareness. Social awareness is the awareness that students have about the social connections within the group. Task awareness is the awareness of how the task will be completed. Concept awareness is the awareness of how a particular activity or piece of knowledge fits into the student’s existing knowledge. Workspace awareness is the up-to-the-moment understanding of another person’s interaction with a shared workspace. It involves knowledge about such things as who is in the workspace, where they are working, and what they are doing. They further point out that “Workspace awareness reduces the effort needed to coordinate tasks and resources, helps people move between individual and shared activities, provides a context in which to interpret utterances, and allows anticipation of others’ actions.” They demonstrated that workspace awareness widgets facilitate coordination for synchronous groupware (Gutwin et al., 1996; Gutwin & Greenberg, 1998). Other proposals facilitate coordination for asynchronous groupware (Roseman & Greenberg, 1996; Tam et al., 2000).

Awareness is taken for granted in everyday face-to-face environments, but when the setting changes to distributed environments, many of the normal cues and information sources that people use to maintain mutual awareness are lost. This awareness is important in collaborative learning for two reasons (Gutwin et al., 1995). First, it reduces the overhead of working together, allowing learners to interact more naturally and more effectively. Second, it enables learners to engage in the practices that allow collaborative learning to occur.

Various mechanisms are used to provide awareness among participants. Some provide explicit facilities through which participants inform each other of their activities. Others provide explicit role support, which gives awareness among participants of each other’s possible activities. In their research on distributed groupware systems, Gutwin and his colleagues (Gutwin et al., 1995) observed that an excess of awareness information can result in awareness overload. Often, when large amounts of information are presented, users have trouble distinguishing useful information from unimportant information. Too much awareness information will also distract users from their work. Therefore, the choice and presentation of awareness information are very important. These design considerations are discussed in the Agents as Facilitators section. In our research, the facilitator agents provide awareness information to students and instructors so that students can regulate their collaborations themselves, and instructors can use the information to gain an overview of the collaboration and detect possible problems more easily.
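One simple way to limit awareness overload is to show only the few most important items. The sketch below assumes that each item already carries a numeric priority. The priority scale, the cutoff values, and the names `AwarenessItem` and `select_for_display` are hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class AwarenessItem:
    """One piece of awareness information (hypothetical structure)."""
    text: str
    priority: int  # higher means more important; the scale is an assumption


def select_for_display(items, max_items: int = 3, min_priority: int = 1):
    """Keep at most max_items items, most important first, dropping low-priority ones."""
    relevant = [item for item in items if item.priority >= min_priority]
    return heapq.nlargest(max_items, relevant, key=lambda item: item.priority)


items = [
    AwarenessItem("Bob joined the workspace", 1),
    AwarenessItem("Ann edited the shared report", 3),
    AwarenessItem("Cho has been idle for two days", 5),
    AwarenessItem("Cursor moved", 0),
]
for item in select_for_display(items, max_items=2):
    print(item.text)
```

A fixed cap is crude. It only illustrates that selection has to happen before presentation.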

Coordination

Coordination, along with communication, is one main component of collaboration. Malone and Crowston (1994) described coordination theory as a research area focused on the interdisciplinary study of how coordination can occur in diverse kinds of systems. They also proposed an agenda for coordination research, where “designing new technologies for supporting human coordination” is considered to be one of the methodologies useful in developing coordination theory. In CSCW, understanding how computer systems can contribute to reducing the complexity of coordinating cooperative activities has been a major research issue and has been investigated by a range of eminent CSCW researchers (Carstensen & Sørensen, 1996; Divitini et al., 1996; Malone et al., 1997).

In distributed collaborative learning, the challenges of providing coordination and scaffolding effective collaboration have been intensively investigated (Bourdeau & Wasson, 1997; Wasson, 1998; Mildrad et al., 1999; Baggetun et al., 2001; Chen & Wasson, 2003). After examining the social psychological literature, Salomon (1992) identified several problems that often occur in a collaborative learning process:

- “Free rider” effect: Where one team member just leaves it to the others to complete the task (Kerr, 1983; Kerr & Bruun, 1983).

- “Sucker” effect: Where a more active or able member of a team discovers that he or she is taken for a free ride by other team members (Kerr & Bruun, 1983).

- “Status sensitivity” effect: Where able or very active members take charge and thus have an increasing impact on the team’s activities and products (Dembo & McAuliffe, 1987).

- “Ganging up on the task”: Where team members collaborate with each other to get the whole task over with as easily and as fast as possible (Salomon & Globerson, 1987).

If these problems are not solved properly, collaborative learning cannot produce effective outcomes. Salomon further recommended that collaborative-learning environments should be designed to encourage mindful engagement among participants through genuine interdependence. Following Salomon’s recommendation and Malone and Crowston’s coordination theory (Malone & Crowston, 1994), Bourdeau and Wasson (1997) extended the definition of coordination to “managing dependencies between activities and supporting (inter-)dependencies among actors.” By analyzing the dependencies between actors in three collaborative telelearning scenarios, Wasson (1998) suggested two types of coordination agents: coordination managers and coordination facilitators. Coordination managers mediate administrative aspects of collaboration for individual actors and groups or teams, while coordination facilitators support students involved in collaborative-learning activities by mediating processes. These analyses stimulated our design of facilitator agents in distributed collaborative learning.
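A coordination facilitator could draw attention to some of these problems with a simple heuristic on participation shares. The sketch below is a hypothetical illustration: the function `flag_imbalance` and both factors are assumptions, and an uneven share is only a hint for the instructor, not proof of a free rider or of a dominating member.

```python
def flag_imbalance(shares: dict[str, float],
                   low_factor: float = 0.5,
                   high_factor: float = 2.0) -> dict[str, list[str]]:
    """Flag members whose share of activity is far from an equal split.

    shares maps each member to a fraction of group activity (summing to about 1).
    The factors are assumed cutoffs relative to the equal share 1/n.
    """
    flags: dict[str, list[str]] = {"possible_free_riders": [], "possibly_dominant": []}
    if not shares:
        return flags
    fair_share = 1.0 / len(shares)
    for member, share in sorted(shares.items()):
        if share < low_factor * fair_share:
            flags["possible_free_riders"].append(member)
        elif share > high_factor * fair_share:
            flags["possibly_dominant"].append(member)
    return flags


print(flag_imbalance({"ann": 0.70, "bob": 0.25, "cho": 0.05}))
```

Flags of this kind fit the semiformal principle: the agent surfaces a pattern, and the instructor decides whether it is a problem.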

Agents as Facilitators

Many research efforts on agents in CSCL environments have implemented agents that possess characteristics of a facilitator. Such characteristics are as follows (Hmelo-Silver, 2002):

- Monitoring: Monitor progress, collaboration, participation, and time consumption; detect misunderstandings and/or disagreement

- Group dynamics: Responsible for making the users follow an outlined scenario or method, directing users into activities that happen at certain stages in the collaboration

- Clarifications: Encourage discussions when or if disagreements are detected by the monitoring responsibilities

- Direct manipulations: Initiate breakdown when or if the collaborative processes have run into a deadlock

- Change behavior: Observe the learners’ reactions as a result of the interaction, thus enabling the agent to judge and adapt its behavior

In addition to these characteristics, the design of facilitator agents also needs to take into consideration how agents should interact with users (including instructors and students). We identified the following factors in this interaction:

- Relevance: What information should facilitator agents present to users? It depends on the context of the users and their preferences. To avoid information overload (including awareness overload), facilitator agents should tailor the information.

- Schedule: When should facilitator agents present information to users? Should the agents give immediate response to the user’s action, or should the agents wait until the user has repeated the same action several times? Should the agent’s intervention repeatedly happen or just once in a while?

- Presentation: In what format should facilitator agents present information to users? Should the agents use text or speech to interact with users? If using text, should the information be presented in a pop-up window that needs the user to acknowledge it before he/she can continue, or should the information be in a fixed text area that the user can choose to ignore?
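The schedule and presentation questions above can be made concrete with a small sketch. It shows one possible policy among many, not a recommendation: wait until a user has repeated an action a given number of times, then stay silent about that action for a cooldown period. The names `InterventionScheduler` and `choose_presentation`, the default values, and the two presentation labels are hypothetical.

```python
from collections import defaultdict


class InterventionScheduler:
    """Decides when an agent should comment on a repeated user action (assumed policy)."""

    def __init__(self, repeat_threshold: int = 3, cooldown_seconds: float = 300.0):
        self.repeat_threshold = repeat_threshold
        self.cooldown_seconds = cooldown_seconds
        self._counts = defaultdict(int)  # (user, action) -> repetitions since last advice
        self._last_shown = {}            # (user, action) -> time of last advice

    def observe(self, user: str, action: str, now: float) -> bool:
        """Record one action at time now (seconds); return True if advice should be shown."""
        key = (user, action)
        self._counts[key] += 1
        if self._counts[key] < self.repeat_threshold:
            return False
        last = self._last_shown.get(key)
        if last is not None and now - last < self.cooldown_seconds:
            return False  # advice was shown recently, so do not nag
        self._last_shown[key] = now
        self._counts[key] = 0
        return True


def choose_presentation(urgent: bool) -> str:
    """Blocking pop-up for urgent advice, ignorable text area otherwise (assumed labels)."""
    return "popup" if urgent else "text_area"


scheduler = InterventionScheduler(repeat_threshold=3, cooldown_seconds=60.0)
for t in (0.0, 1.0, 2.0):
    if scheduler.observe("ann", "undo_edit", now=t):
        print("show advice via", choose_presentation(urgent=False))
```

Exposing `repeat_threshold` and `cooldown_seconds` as user settings would be one way to let users tailor how often the agent intervenes.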

Cao and Greer (2003) developed a system that allows users to specify the rules that the agent will use to present the awareness information. Alarcon and Fuller (2002) mapped users’ actions to a semantic network in order to find out what awareness information is relevant. As a principle in a distributed-learning environment, the facilitator agents should allow users to minimize the interruption of their current tasks while remaining aware of their communications and work contexts.
