The Unified Dimensional Model

With SQL Server 2005, Microsoft introduces a new technology called a Unified Dimensional Model (UDM). A UDM is designed to provide all the benefits of an OLAP system with multidimensional storage and preprocessed aggregates. A UDM, however, avoids a number of the drawbacks of more traditional OLAP systems.

Structure

A UDM is a structure that sits over the top of a data mart and looks exactly like an OLAP system to the end user. One of the major advantages of a UDM, however, is that it does not require a data mart. You can also build a UDM over one or more OLTP systems. You can even mix data mart and OLTP system data in the same UDM. The UDM can even include data from databases from other vendors and XML-formatted data.

A UDM can define measures, dimensions, hierarchies, and cubes either from star and snowflake schemas or directly from relational database tables. This latter capability enables us to provide business intelligence without first having to build a data mart. However, there are still some reasons for choosing to build a data mart, which we discuss in Chapter 6.

Data Sources

A UDM begins with one or more data sources. A data source stores the information necessary to connect to a database that provides data to the UDM. The data source includes the server name, database name, and the database logon credentials, among other things. A number of OLE DB providers are available for accessing different databases including Oracle and Microsoft Directory Services.
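As a rough illustration of what a data source holds, the following Python sketch models the connection details as a small record and assembles an OLE DB style connection string from them. The `DataSource` class, the server name `oltp01`, and the database name `OrderProcessing` are hypothetical names chosen for this example; this is not how the UDM itself stores a data source.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    """Hypothetical stand-in for the connection details a data source stores."""
    name: str
    provider: str            # OLE DB provider name
    server: str
    database: str
    integrated_security: bool = True

    def connection_string(self) -> str:
        # Assemble the usual OLE DB keywords from the stored details.
        parts = [
            f"Provider={self.provider}",
            f"Data Source={self.server}",
            f"Initial Catalog={self.database}",
        ]
        if self.integrated_security:
            parts.append("Integrated Security=SSPI")
        return ";".join(parts)


# Assumed example values; substitute the server and database in your environment.
orders = DataSource("Orders", "SQLNCLI", "oltp01", "OrderProcessing")
print(orders.connection_string())
```

Several such records, each pointing at a different database, would correspond to the several data sources a single UDM can draw from.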

Data Views

Once the data sources have been defined, data views are used to determine which tables and fields are utilized. The data view can combine tables and fields from a number of different data sources. For instance, tables from the order processing system can be combined with tables from the sales management system, so a measure of actual sales versus sales quota can be created. This multiple data source capability of data views is what puts the "Unified" in Unified Dimensional Model.

Once the tables and fields have been added to the data view, the data view can then be used to filter out unnecessary items in the database. Only those tables and fields being used to create business intelligence are included in the view. The underlying table structures in the data sources are not changed. The data view merely controls what is available as building blocks for the next step in the definition process.

This is especially helpful when a data source is a large, highly normalized OLTP system. The data view makes available only those tables that contain the data for the measures, dimensions, and hierarchies we need to define. The same is true within a table. Only those fields that contain required data are visible. All the extraneous tables and fields are filtered out by the data view.

To make the data view even easier to understand, user-friendly names and descriptions can be assigned to tables and fields. This metadata is used throughout the UDM. Any measures, dimensions, and hierarchies created from these fields will utilize the user-friendly names.

In addition, we can use the data view to make virtual additions to the table and field structures. These additions are not made in the database itself, but in the virtual view that exists only in the UDM. Therefore, we can make these virtual additions without any fear of breaking the OLTP systems that use the relational tables.

One example of these virtual additions is the creation of a relationship between two tables that is not defined in the database itself. Another example is the addition of a calculated field in a database table. For example, a calculation involving a number of different fields from different tables can be created for use as a measure. Or, a number of strings can be concatenated together for use as a dimension. These calculated fields are given names and descriptions, so they appear just like any other field included in the UDM.
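The ideas in this section (exposing only the needed fields, assigning user-friendly names, and adding a calculated field without touching the database) can be sketched in a few lines of Python. The `TableView` class and the table and column names below are hypothetical and serve only to illustrate the concept; the actual data view is defined through the design tools rather than in code like this.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict

Row = Dict[str, object]


@dataclass
class TableView:
    """Hypothetical model of one table as seen through a data view."""
    source_table: str
    friendly_name: str
    columns: Dict[str, str]  # source column name -> user-friendly name
    calculated: Dict[str, Callable[[Row], object]] = field(default_factory=dict)

    def project(self, row: Row) -> Row:
        # Expose only the listed columns, under their friendly names.
        visible = {friendly: row[col] for col, friendly in self.columns.items()}
        # Calculated fields exist only in the view, never in the source table.
        for name, compute in self.calculated.items():
            visible[name] = compute(row)
        return visible


customer = TableView(
    source_table="tblCust",
    friendly_name="Customer",
    columns={"cust_id": "Customer ID", "cust_city": "City"},
    calculated={"Full Name": lambda r: f"{r['cust_fname']} {r['cust_lname']}"},
)

oltp_row = {
    "cust_id": 7,
    "cust_fname": "Ada",
    "cust_lname": "Lovelace",
    "cust_city": "London",
    "row_version": 42,  # extraneous field, filtered out by the view
}
view_row = customer.project(oltp_row)
print(view_row)
```

The source row is left unchanged, which mirrors the point that virtual additions cannot break the OLTP systems using the underlying tables.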

Once the data view is completed, its content is used to create measures, dimensions, hierarchies, and cubes.

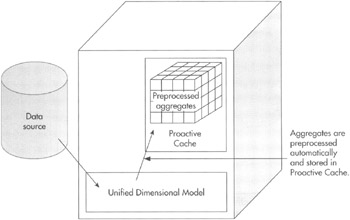

Proactive Caching

To obtain the same performance benefits as traditional OLAP systems, a UDM uses preprocessed aggregates. To facilitate high availability, these preprocessed aggregates are stored in the proactive cache.

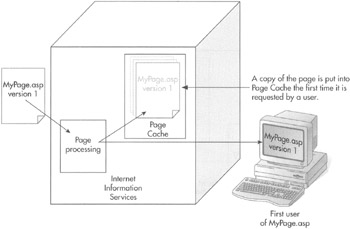

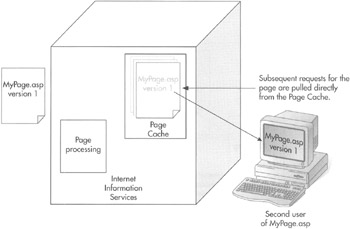

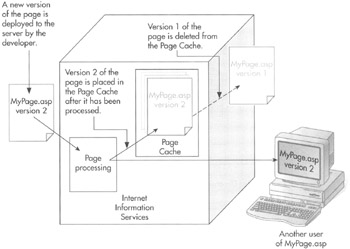

This structure is referred to as a cache because it is created when needed and is changed when the underlying data or the underlying structure changes. It works much the same way Internet Information Services (IIS) caches web pages, as shown in Figure 4-5. The results of a processed web page are stored in the IIS page cache, so subsequent accesses to that page are faster, as shown in Figure 4-6. When changes are made to the underlying page, that page is deleted and eventually re-created in the page cache, as in Figure 4-7.

Figure 4-5: Creating a cached web page in Internet Information Services

Figure 4-6: Retrieving a web page from the Internet Information Services Page Cache

Figure 4-7: Updating the Internet Information Services Page Cache when a web page is modified

The major difference between UDM caching and other caching mechanisms, such as the IIS example, is summed up in the word "proactive." With IIS, a page is put into cache only after it has been accessed the first time. The first user to request a particular page must wait until the page is read from the disk and processed before the user receives the contents of the page as you see in Figure 4-5.

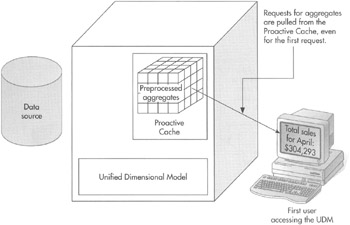

The UDM, on the other hand, uses proactive caching. Items are created in the cache before they have been requested by a user. With UDM, the preprocessed aggregates are created automatically as shown in Figure 4-8. Even the first users receive their requested aggregates from the proactive cache as in Figure 4-9.

Figure 4-8: Proactive caching with a Unified Dimensional Model

Figure 4-9: Even the first user receives the requested aggregate from the Proactive Cache.
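The difference between on-demand caching and proactive caching can be sketched as follows. This is a minimal illustration in Python, not the UDM's implementation; the class names, the `SALES` rows, and the `computed_on_request` counter are all assumptions made for the example.

```python
from typing import Callable, Dict, Iterable, List, Tuple

# Assumed sample data: (region, sales amount) rows from a source system.
SALES: List[Tuple[str, int]] = [("East", 100), ("West", 250), ("East", 50)]


def total_for(region: str) -> int:
    """Compute one aggregate directly from the source rows."""
    return sum(amount for r, amount in SALES if r == region)


class OnDemandCache:
    """Fills an entry only when it is first requested (the IIS-style approach)."""

    def __init__(self, compute: Callable[[str], int]) -> None:
        self._compute = compute
        self._store: Dict[str, int] = {}
        self.computed_on_request = 0

    def get(self, key: str) -> int:
        if key not in self._store:
            # The first requester waits while the value is computed.
            self.computed_on_request += 1
            self._store[key] = self._compute(key)
        return self._store[key]


class ProactiveCache:
    """Computes every aggregate up front, before any request arrives."""

    def __init__(self, compute: Callable[[str], int], keys: Iterable[str]) -> None:
        self._store: Dict[str, int] = {key: compute(key) for key in keys}
        self.computed_on_request = 0  # stays at zero: nothing is computed per request

    def get(self, key: str) -> int:
        return self._store[key]


on_demand = OnDemandCache(total_for)
proactive = ProactiveCache(total_for, ["East", "West"])

first_on_demand = on_demand.get("East")   # computed while the caller waits
first_proactive = proactive.get("East")   # already present in the cache
print(first_on_demand, on_demand.computed_on_request)
print(first_proactive, proactive.computed_on_request)
```

Both caches return the same total, but only the on-demand cache does work while the first requester waits.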

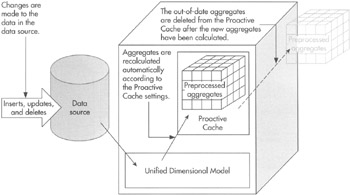

The UDM monitors the data in the data source. As this data is modified, the UDM checks the options selected for the associated proactive cache. We look at just what these caching options are in Chapter 9. At the appropriate time, as defined by the caching options, the UDM deletes the current cache and rebuilds it with up-to-date values as shown in Figure 4-10.

Figure 4-10: The Proactive Cache is deleted and re-created in response to changes in the data source.
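The delete-and-rebuild cycle can be sketched in Python as well. In this simplified, hypothetical model, the cache is rebuilt immediately when it is told that the source has changed; in the UDM, the timing of the rebuild is governed by the caching options, which this sketch does not attempt to model.

```python
from typing import Dict, List, Tuple


class AggregateCache:
    """Hypothetical cache of per-region totals over a list of source rows."""

    def __init__(self, rows: List[Tuple[str, int]]) -> None:
        self._rows = rows          # reference to the live source data
        self._totals: Dict[str, int] = {}
        self.rebuild()

    def rebuild(self) -> None:
        # Discard the old aggregates and recompute them from the source.
        totals: Dict[str, int] = {}
        for region, amount in self._rows:
            totals[region] = totals.get(region, 0) + amount
        self._totals = totals

    def on_source_changed(self) -> None:
        # Assumption: rebuild at once. A real policy might wait for a quiet period.
        self.rebuild()

    def total(self, region: str) -> int:
        return self._totals[region]


source_rows = [("East", 100), ("West", 250), ("East", 50)]
cache = AggregateCache(source_rows)

before_change = cache.total("East")
source_rows.append(("East", 25))        # the source data is modified
stale_value = cache.total("East")       # cache still holds the old aggregate
cache.on_source_changed()               # notification triggers the rebuild
after_rebuild = cache.total("East")
print(before_change, stale_value, after_rebuild)
```

The interval during which `stale_value` is served is the latency that the caching options trade against responsiveness.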

The proactive cache can be built using MOLAP, ROLAP, or HOLAP. The UDM provides an easy mechanism to assist you with the decision among these three architectures. It points out the tradeoffs these three architectures make between latency and responsiveness. This enables you to determine which architecture to use based on the business needs without getting lost in the technical details.

XML Definitions

The definitions of all the objects in the UDM are stored as XML text files. Each data source, data view, dimension, and cube definition is stored in its own text file. These XML text files do not contain any of the data for the object: the dimension text file does not contain any of the members of the dimension, and the cube text file does not contain any of the preprocessed aggregates. The XML text files simply contain the definitions. The dimension text file tells which table and field hold the members of the dimension, and the cube text file contains the information on how the preprocessed aggregates will be managed.

In short, these XML text files act as the source code for the UDM.
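To make the distinction between definition and data concrete, the following Python sketch reads a small dimension definition and extracts where the members live. The XML element and attribute names here are invented for illustration and are not the actual schema used by the UDM's definition files.

```python
import xml.etree.ElementTree as ET

# Hypothetical definition file content: it names the table and fields that
# hold the dimension members, but contains none of the members themselves.
DIMENSION_XML = """
<Dimension name="Customer">
  <Source table="dbo.Customer" keyColumn="CustomerID" nameColumn="CustomerName"/>
</Dimension>
""".strip()

root = ET.fromstring(DIMENSION_XML)
source = root.find("Source")

definition = {
    "dimension": root.get("name"),
    "table": source.get("table"),
    "key_column": source.get("keyColumn"),
    "name_column": source.get("nameColumn"),
}
print(definition)
```

Nothing in the file changes when customers are added or removed; only a change to the definition itself alters the text.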

Advantages

The UDM features a novel architecture boasting a unique set of capabilities. These capabilities provide us with a number of advantages over more traditional OLAP implementations. They go a long way toward solving the problems and frustrations that often accompany business intelligence implementation.

OLAP Built on Transactional Data

The UDM allows OLAP cubes to be built directly on top of transactional data. The UDM does not need a strict star or snowflake schema data mart as its data source. Instead, any well-structured, relational database works just fine.

This eliminates the effort necessary to copy data from the OLTP system into a data mart. With UDM, the data can be utilized directly from the OLTP system without a large extract, transform, and load process. This greatly simplifies an OLAP implementation project, providing savings of both time and money.

Extremely Low Latency

By building our OLAP system directly on top of the OLTP system, we can eliminate much of the latency inherent in data warehousing systems. In fact, we can have real-time or near real-time performance. However, we must take care to balance the need for real-time business intelligence with the load that is placed on the OLTP system. Still, if the computing power is available, real-time performance is attainable in a straightforward environment.

Ease of Creation and Maintenance

The UDM architecture removes much of the complexity that accompanies most OLAP projects. The UDM eliminates the need to create and maintain a data mart, and it does away with the extract, transform, and load routines that must accompany it. This alone makes creating business intelligence with the UDM orders of magnitude simpler than other OLAP architectures.

On top of this, the Business Intelligence Development Studio provides a user-friendly environment for creating, reviewing, and maintaining all of the items in a UDM. Dimension and Cube Wizards guide you step-by-step through the creation of these objects, making suggestions along the way. In short, it has never been easier to provide the benefits of an OLAP system to your decision makers.

Design Versioning with Source Control

Because the definition for each of the objects in the UDM is stored in its own XML text file, the objects in the UDM can be managed by a source control utility. The source control utility can track these objects as you develop your UDM. It can also provide version control in case you need to undo a change to an object's definition and roll it back to an earlier version.
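Because the definitions are plain text, two versions of an object can be compared line by line, which is what makes this kind of tracking practical. The Python sketch below uses the standard `difflib` module on two versions of a hypothetical dimension definition; the XML content and the version labels are invented for the example.

```python
import difflib

# Two assumed versions of the same definition file; only the name column differs.
version_1 = """<Dimension name="Customer">
  <Source table="dbo.Customer" nameColumn="CustomerName"/>
</Dimension>
""".splitlines(keepends=True)

version_2 = """<Dimension name="Customer">
  <Source table="dbo.Customer" nameColumn="FullName"/>
</Dimension>
""".splitlines(keepends=True)

diff = list(difflib.unified_diff(
    version_1, version_2, fromfile="Customer.v1", tofile="Customer.v2"
))

# Keep only the removed and added lines, skipping the two file header lines.
changed = [
    line for line in diff
    if line.startswith(("-", "+")) and not line.startswith(("---", "+++"))
]
print("".join(diff))
```

Rolling back a change then amounts to restoring the earlier text of the file.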
