10.13 INFRASTRUCTURE DESIGN STREAM


10.13.1 Define the Infrastructure

Imagine the situation: the metrics team have spent months sorting out the requirements, they have derived metrics and evaluated products such as cost estimation tools and they have built up an awareness in the organization about Software Metrics. What happens next? Obviously, they will write a report and perhaps they will issue "standards" explaining their metrics and how they should be used. All they have to do now is sit back and wait for it to all happen. One year later they are still waiting!

I will say it again, people generally need help to change. Even with the best will in the world they will revert to their normal way of working unless there is someone around to facilitate and support change. This does not just apply to Software Metrics programs.

If you need convincing that standards are rarely used in the full sense, take a walk around your development area and ask to see the development process manual. Assuming the person you ask even knows where it is, you may like to wonder why it is in such pristine condition. Do you really think that it is because everyone knows it inside out?

Once you accept that you need to support the process of change you have to consider how that support should be provided. There are a number of options and I will discuss some of these now.

An obvious choice is to retain the team that has developed your metrics program. They assume the responsibility for implementing the program.

The main advantage of this approach is that you have, and can continue to develop, a center of expertise within the organization. A centralized group can also act as a focus for the initiative, providing a single point in the organization that is responsible for implementation and can ensure coordination across different groups. After all, it makes little sense for one group to measure reliability by defects per thousand lines of code while another uses mean time to failure!

However, there are two major disadvantages with this scenario. First, the group may well be seen as being external to the software development process. Ownership of the metrics initiative by those responsible for software development is very important and this approach can generate a response along the lines of "not invented here so it's not for us." Secondly, if your organization is large in terms of the number of development teams a centralized group can find it difficult to provide adequate support to all those involved. Phasing obviously helps but this can slow down the implementation process to an unacceptable level.

A second option is to use a centralized group to quickly develop expertise within a small number of development teams and to then let these new experts spread the gospel to the others. This approach is very attractive to management as it costs very little. In fact, it would seem that you get the implementation for free. Believe me, there is no such thing as a free lunch.

This approach seldom works without the continued support of a central group. Unless that support is carefully planned, it often degenerates into firefighting, which demoralizes the central team because they know they should be out of it by now, and demoralizes the development teams because they never get the level of support they believe they need.

The reasons why this approach has so many problems are complex. You must remember that any metrics initiative has many customer groups within the organization, not just the development teams. This means that a large number of groups and individuals with differing requirements need to be supported. This requires some form of central coordination or you get anarchy or, worse, apathy. Also, if one area does develop a level of expertise, how can you ensure that expertise is made available to other areas? Remember, there will be a great deal of pressure on individuals with expertise to get on with their real work because metrics will almost certainly, in this scenario, be seen as an extra, lower-priority task.

So how can you put in an infrastructure that will really work? Let me outline an approach I believe will work for most large organizations, perhaps with some modification to suit your own specific structure. I will use the model organization described previously as the basis for this discussion.

Your starting position is this: you have a metrics program development team in place, even if this is only one person; you have a development customer authority; and hopefully you have a Metrics Coordination Group or the equivalent. I suggest that you should establish a centralized support group and the obvious choice for this is to staff it from within the development team. After all, these people have spent time developing exactly the kind of metrics-related technical knowledge that will be needed. How large a group will be needed depends on the size of the organization it will service, the scope of the program it will help implement and the speed at which the organization wishes to implement the program. This group can also take on responsibility for the ongoing development and extension of the metrics program. Remember that you may need to do some centralized analysis and feedback of results. More junior staff can be used for this provided they operate with guidance from the central group.

You will also need a customer authority for the implementation. This could be the same individual who has filled the role of development customer authority or it may make sense to target another person in the organization. The customer authority should be as senior a manager as possible provided that you can still ensure involvement. Remember you are only designing the infrastructure at this point so you need only target such an individual. Getting his or her commitment comes later.

The implementation customer authority should act as the chair for the Metrics Coordination Group and this group may well undergo some changes as you move towards implementation. These changes will relate to composition and terms of reference but I will discuss these specifically later.

In terms of our model organization, I see the support group reporting to either the Systems Development Director (the ideal case), or to the R&D manager. This may seem strange given what I have said about the "not-invented-here" syndrome but it does mean that the support group can be seen as impartial. We get over the NIH syndrome another way. If you are really lucky, the Systems Development Director will take on the role of implementation customer authority.

Within each functional grouping you should identify a local metrics coordinator. This person takes on the responsibility of implementing the metrics initiative for their group under the guidance and direction of the centralized group. The intention is to transfer expertise from the central group to these local coordinators as quickly as possible. In terms of our model organization I would expect to see local coordinators appointed to cover Support Development, possibly Operational Support, and Planning. You may choose to appoint a single coordinator for Product Development or you may appoint different individuals to cover Software Development and Test and Integration.

You have to decide whether to appoint these local coordinators on a full-time or a part-time basis. Which option you choose depends very much on the size of the function they are going to support. If you have only a couple of Product Development teams it would be wasteful to appoint full-time staff, but you do need to be realistic. Do not fall into the trap of expecting change to happen automatically. No one can argue, for example, against the proposition that quality is the responsibility of everyone within the organization, but generating a quality culture does demand the dedicated resources of support staff; otherwise you end up paying lip service to the ideals of quality without seeing any visible improvements. The same is true of Software Metrics.

Of course, even with local coordinators appointed to support all of your major functional groups within the organization you are still failing to cover the needs of one very important group: senior management. Given the fact that this group is diverse in both its requirements and position within the organization, the central support group is ideally placed to supply the local coordination function for senior management. This is especially true when you realize that senior managers are the recipients rather than the suppliers of information.

Your local metrics coordinators form the foundation of the Metrics Coordination Group for the implementation stage of the program. During the implementation stage, the Metrics Coordination Group should also adopt a more proactive role than it has during development of the initiative. Its terms of reference need to be very clear and should demonstrate that proactive role. A possible set of bullet points for the terms of reference is listed below.

The Metrics Coordination Group should:

- Own all metrics standards used within the organization
- Supply the requirements for additional work elements within the metrics program
- Prioritize those work elements
- Act as custodian of the metrics program plans
- Determine the strategy of the metrics program
- Act as a forum for the sharing of results
- Act as a liaison point with other organizational initiatives
- Champion the implementation of metrics.

This set of terms of reference implies that you may need a wider membership within the MCG. As well as the local metrics coordinators you may consider it worth having representatives of various customer groups.

Ownership of the Software Metrics program by the engineering function is vital to its success. To generate this ownership you may like to consider an additional level of implementation support within the metrics initiative. The concept of local facilitators is a very useful one in this context. Each development and support team will be expected to supply some information or data to the central support group, possibly through their local coordinators, but supporting and policing this activity can become extremely onerous if you attempt to do it from the outside. It is much better to have one individual within each team charged with this responsibility. This person can also act as a focal point for metrics within the team.

The role of local facilitator is, for all but the largest teams, a part-time one. Initially, the local facilitator will need to spend some time helping to set up the necessary collection procedures and can perhaps assist with local process definition but, as the program beds down, this role becomes less costly. You may well have realized that the local facilitators can also form a local Metrics Coordination Group within each functional area.

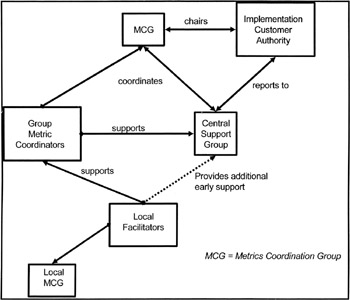

The infrastructure that supports the implementation of the Software Metrics program within the organization is shown in Figure 10.2.

Figure 10.2: Example Organization of Staff Support Infrastructure

There are two comments that need to be made regarding this infrastructure model. First, such a structure may not be possible during the early implementation stage. At this time it may be a target or ideal that you move towards. How you actually support the early implementation of a metrics initiative depends very much on how you phase that implementation. If your initial implementation is more akin to a pilot, say within two or three product development teams only, then centralized support may be sufficient. Alternatively, if you are only using a small set of management statistics based on raw data taken from existing systems within your first-phase implementation then a central support group working with local coordinators will probably be enough. However, if your program is wide-ranging both in terms of what it implements and who it affects, and many successful metrics initiatives are large in both these dimensions, then be sure that you address the question of support structure early.

The other point that needs to be borne in mind is that you should not expect your local coordinators and facilitators to spring fully armed from the organization on the day that implementation starts. They will need time to develop their roles and expertise. While this development continues they will need a relatively high degree of support from somewhere. A central support group staffed by individuals who have gained experience of Software Metrics theory and practice, even if only from pilot work, is ideally placed to provide this. This is shown by the dotted line in Figure 10.2 and you, as the manager of the program, should budget accordingly.

10.13.2 Define Support Training

Which brings us nicely to the initial training that should be given to the individuals that make up the support infrastructure for the metrics initiative.

If you decide to adopt the infrastructure model described above then you will have two specific training requirements that will need to be addressed.

The local coordinators require a significant amount of training if they are to fill their roles effectively. The best training that anyone can receive comes from experience, but these individuals will need something to quickly bring them up to a level where they can, at least, operate with support. Such training needs to address the following areas:

- Motivation of the local coordinators
- Background addressing the theory of those areas within the domain of Software Metrics that are included within your own initiative
- General background covering other areas within Software Metrics. After all, your program, if successful, will grow and develop
- Practical advice regarding the analysis and representation of data
- Training in the procedures developed within your own metrics initiative and this can include training in the provision of training.

This is a tall order, made worse when you realize how little material there is around that you can make use of directly. There are some training courses available from external sources that you can use. These do, however, tend to address the theory of metrication. For example, you will find courses that teach the use of specific techniques such as Function Point Analysis or McCabe metrics. You will also find some courses that cover the majority of accepted metrics techniques as a single package. There are also some courses available that can help motivate individuals as to the benefits of using Software Metrics. These courses tend to be given by individuals with experience in the field and can be very useful in providing that initial practical view of the discipline but, being given by individuals, they tend to be biased towards that individual's favored techniques.

Unless some other organization starts to offer training directly geared to the needs of those people responsible for the implementation of metrics initiatives, covering the areas outlined above, then you may well have to devise your own training program that blends external offerings with internal material. I believe that it takes at least six months to turn an individual into an effective metrics implementor just through on-the-job training and you need to reduce this once you start implementation.

A mix of external courses to teach specific techniques that form part of your own initiative, with reading material and a tailored course designed and built by yourselves, is probably the best option at this stage. The internal course will probably need a duration of about four days to adequately cover what needs to be addressed. If you have doubts about your ability to prepare and present such a course then you should consider making use of any internal training function that exists or you may wish to bring in some external consultancy. If starting from scratch, such a course will probably take you about five weeks to develop but it can be a worthwhile investment.

You also need to train the local facilitators. This is less of a problem because you will be supporting these individuals directly. In fact, the main aim of the initial training is to provide the necessary motivation. It is very possible that an external course will be sufficient.

10.13.3 Drawing the Streams Together, or Consolidation

Having designed the many facets of your Software Metrics program you now need to bring them together to form an integrated and complete picture. This will be taken forward to management for approval to proceed. It is possible that you already have authority to build the program or you may need full management approval for the next stage. If the former is the case, you still need to review your proposals with your customer authority. If the latter is the case, you will probably need to add in a cost/benefit analysis.

Bearing in mind that your requirements analysis will probably have identified a number of requirements now addressed through sub-projects or component designs within your overall metrics initiative, two elements form the basis of your design. These are the sub-project or program component specific elements and the elements related to the overall initiative.

As far as the sub-projects are concerned I would suggest that you view the results of each of these as a "product." The reasoning behind this goes back to the idea of linking each requirement to a customer within the Software Metrics program. If you have an identified customer for each requirement then the way in which you satisfy each requirement can be viewed by the customer as a product that is being delivered to him or her. Of course, the product will not be traditional in the sense that it is a black box but, if you think about it, most products are not "traditional" in this sense anyway.

Assume that you have a requirement for a washing machine. More correctly, you have a requirement for some system that will clean your washable clothes. Traditionally you satisfy this requirement by going out and purchasing a washing machine, in this case a "white box." However, these days you are likely to purchase a white box with an associated service package. For example, the deal you negotiate may include delivery, installation, training through documentation together with a maintenance contract or service guarantee. The "product" is much more than just a white box.

In the same way, you can satisfy a requirement within a Software Metrics program with a package of deliverables which the customer can view as a product. For example, there may be a requirement within your initiative for some way to monitor, control and reduce the complexity of generic software systems, that is, systems that have existed for some time and undergo continual enhancement. An obvious way to satisfy this requirement is for the development team to purchase a static analysis tool that will, perhaps, reverse-engineer code to produce semiformal documentation such as structure charts and flowgraphs, and that will perhaps analyze these in terms of specific metrics; the McCabe Cyclomatic and Essential complexity metrics are obvious choices.

Fine — off you go and pay the money and you ship the tool out to your customers, the development teams. I believe that very little will happen as a result of this initiative. In this case, the tool is the white box but to get the most out of your solution you need to do more than just make this available to users. You will need to document how the output of the tools should be analyzed and used within your environment. You will also need to provide some background on the theory behind the metrics. This is especially true in a technical environment where people do not accept things just because someone "says" they work. You may also need to provide some training for the engineers to support your documentation. You will almost certainly have to provide some form of support or hand-holding to get development teams started in the use of these new techniques.

As you see, your solution now contains a number of distinct elements. You have documentation, perhaps in the form of in-house guidelines, training, on-going support and, lest we forget, you also have the tool. This will be true for most of your requirements and is certainly true for cost estimation, Applied Design Metrics, project control and management statistics. So, the design you take forward for review should include, against each requirement, information about the core solution, which may be a tool or a set of techniques or even a set of metrics, in-house documentation, the provision of training and on-going support.

These are the sub-project or program component-specific elements of your design.

You will also have prepared another set of proposals relating to the overall metrics program and how that will be implemented. These include your marketing plan, covering internal publicity together with high-level education, and your business plan, covering the implementation strategy. You also have proposals for an infrastructure to be established to support the implementation strategy. You may also have some pilot results to add credence to your claims.

These elements of your design relate to the metrics program overall rather than to specific sub-projects within it, but please remember that they are a vital part of your proposals — at least as important as your specific "metrics" solutions.

How you actually present your design for approval will depend upon the culture of your own organization and its normal operating practices. You will almost certainly be expected to prepare your proposals as some form of document. Remember to link your solutions to requirements and business needs associated with specific customers or customer groupings within your organization. One approach that seems to be acceptable is to structure your design document as an interim report in the following way:

- Introduction: A high-level description of why the Software Metrics program was initiated. Keep it short!
- Requirement Identification: What specific requirements have been identified, which are addressed by this phase of the program; whose needs are addressed (the link to customers).
- Product Description: For each requirement, the core solution, in-house documentation, the provision of training and ongoing support along with the results of any pilot work that supports your solution. Remember that you are seeking approval to build so do not go into great detail about the pilots, simply use results to support your design solution.
- Implementation Strategy: Covering the marketing and business plan together with your proposals for the supporting infrastructure. Do not forget to describe the training requirements for individuals involved in this infrastructure.

If you have to seek senior management approval and your organizational culture requires it, you may have to carry out a cost/benefit analysis. This is very difficult to do for a Software Metrics program because there is very little material available from external sources that describes the costs and benefits of a wide-ranging metrics initiative. Why is this? I believe that there are a number of answers to this question.

One reason must be the difficulty in quantifying the benefits that accrue from some of the traditional areas of applied Software Metrics. For example, what is the financial benefit of supplying external customers with accurate cost and duration estimates for the development of software systems? You may be able to construct a model based on assumptions of lost business that results from over-optimistic estimates or you may operate under fixed-price contracts which certainly makes the cost of inaccurate estimates more accessible. Even in this case, you may also be operating under "lowest tender" conditions of bidding which can distort results. The reality is that we do not know the true benefits of accurate estimation in financial terms but we do know the cost of inaccuracy in qualitative terms. Basically, if we can demonstrate to end customers that our estimates are accurate, what we say it will cost is what it will cost, then that gives us a competitive edge over others in the industry. It makes us more professional, but this is difficult to model in terms of a cost/benefit analysis.

Another problem is the differences between metrics programs. It is very unlikely that your initiative will be sufficiently close to that of other organizations for you to be able to use their data. While you will almost certainly find similar elements between programs that can enable you to justify parts of your own program in terms of costs and benefits, you will find problems when you try to carry out a cost/benefit analysis for the whole thing.

Finally, we must realize that the use of Software Metrics within the industry is still in its infancy. Most organizations have only been using metrics as a practical solution to problems for a short period of time. Many of them are only beginning to realize the benefits now.

It may be that by looking at what organizations that are perceived to be successful are doing we can also get ahead of the game. These organizations are realizing benefits from the practical use of Software Metrics and they demonstrate this by their continued investment in metrics initiatives. Perhaps one of the most forceful arguments for the use of Software Metrics is not to answer the question "can we afford to do this?" but instead to ask the question "can we afford NOT to do this?"

In many cases your cost/benefit analysis can be replaced by ensuring that you have the commitment of the potential customers of metrics within your own organization. You get this by directing solutions towards their own specific requirements and by making sure that they are aware of this. You talk to them!

All of which is no help if your organization demands that you still supply a traditional cost/benefit analysis. If this is the case then the following may be of some help but I will be honest and say that this is not an easy problem to solve.

The budget necessary for the implementation of a Software Metrics program can be considered from a number of viewpoints. First, the work to define and build the program can be considered separately to the actual implementation.

Whether budgets are being considered for a particular stage or for the full program, the person preparing the budget should examine the likely staff and ancillary costs.

Within staff costs there will be two distinct elements. First, there will be the staff costs of those people directly involved in defining, building and implementing the program. We can call these people the metrics team. Second, there will be staff costs associated with the additional effort required from the software production function within the organization. These costs can be consumed by pilots, reviews of the program deliverables such as the design document, data collection and data analysis.

Ancillary costs cover many items including additional hardware such as PCs, software for data storage and analysis, proprietary packages such as cost estimation packages, consultancy for training and other purposes such as "buying in" statistical analysis skills and membership of external organizations such as metrics user groups.

Most organizations have standards covering the presentation of budgets for management approval and these should be used whenever they apply.

A checklist is provided below, Table 10.1, to assist with the identification of costs.

| Cost item |
| --- |
| Metrics team effort |
| Engineering effort |
| Hardware |
| Software |
| Proprietary packages |
| Training |
| Consultancy |
| Conferences |
| Special Interest Group membership |
| Travel |

It is always advisable to build a contingency into budgets to cover additional work that may be identified as the program progresses.
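As a minimal sketch of how the checklist and a contingency allowance combine into a single budget figure, the following Python fragment totals one amount per Table 10.1 item and adds a percentage on top. Every amount, the 10 percent contingency and the function name `budget_with_contingency` are illustrative assumptions, not figures taken from any real program.

```python
# Line items mirror the checklist in Table 10.1.
# Every amount below is a made-up placeholder for illustration only.
BUDGET_ITEMS = {
    "Metrics team effort": 90_000,
    "Engineering effort": 30_000,
    "Hardware": 6_000,
    "Software": 4_000,
    "Proprietary packages": 10_000,
    "Training": 8_000,
    "Consultancy": 12_000,
    "Conferences": 3_000,
    "Special Interest Group membership": 1_000,
    "Travel": 6_000,
}

# Assumed contingency percentage; use whatever your organization's standards require.
CONTINGENCY_PCT = 10


def budget_with_contingency(items, contingency_pct):
    """Return (base total, contingency amount, total including contingency)."""
    base = sum(items.values())
    contingency = base * contingency_pct / 100
    return base, contingency, base + contingency


base, contingency, total = budget_with_contingency(BUDGET_ITEMS, CONTINGENCY_PCT)
print(f"Base budget:      {base:>10,.0f}")
print(f"Contingency:      {contingency:>10,.0f}")
print(f"Budget requested: {total:>10,.0f}")
```

Keeping the contingency as an explicit, separate line makes it visible to whoever approves the budget, rather than hiding it inside individual items.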

Beware of giving "off-the-cuff" budget estimates. While it is not always possible to avoid this, it is common for such estimates to become the budget for the program. Generally, such figures are underestimates of the true cost and can quickly become a serious constraint on the work being done.

It is tempting to think that pilot activities will provide enough evidence of the benefits of Software Metrics to enable extrapolation to model the benefits of wider implementation. Unfortunately this is rarely the case. The reason for this is that many of the financial benefits depend on improved quality, and this may not be evident until a system has been in the field for six or even twelve months. Another reason is that pilot activities tend to address one aspect of Software Metrics and this may only show limited benefits.

Consider a development project that pilots the use of McCabe metrics to control quality. Assume that the project lasts six months from initiation to first live use. It may take a further six months to demonstrate a lower defect level than the norm for the organization, assuming that this norm is actually known. It may take twelve months of field use, and enhancement, to demonstrate financial benefits obtained from greater maintainability. This implies that it will take eighteen months to quantify the financial benefits arising from the use of McCabe metrics. It is almost certain that a cost/benefit analysis will have to be produced long before this point.

If we cannot use pilot results to build our cost/benefit analysis then the only route left open to us is the use of assumption.

It should be possible to estimate the cost of the program once the scope of the work has been defined. This is a good case for considering implementation separately as the earlier stages contribute to the scope definition for that stage.

When considering the benefit side it is now generally accepted, among metrics practitioners, that "the act of measurement will improve the process or product being measured." This is sometimes cited as the Hawthorne effect and this can be present. However, it is more often due to the results of the measurement activity being used to alter the process or product rather than to any psychological phenomenon. Beware that an alternative argument may be used against you, that "measurement itself has no intrinsic value," and be prepared to counter this.

If the basic assumption above is accepted it is possible to define financial benefits of a Software Metrics program as follows.

You should establish an average fault rate for the organization. You will also need to derive the average cost per fault, perhaps by identifying the average effort involved to fix such a fault and the charge-out rate used by the organization's accountants. This type of information may well be available within the organization.

Make an assumption that the implementation of Software Metrics will reduce the fault rate by some amount and calculate what this will save the organization based on the amount of software produced during a given period.

Because the Software Metrics program will probably require a relatively high investment during the first year or two, including the purchase of software packages and perhaps a higher level of consultancy support, you may find that the program "runs at a loss" for the first year or two. Because of this it is wise to supply a cumulative cost/benefit figure to show when returns can be expected. Senior managers tend not to query the detail of a cost/benefit analysis very closely provided any assumptions are made clear. They are much more interested in the bottom line that provides evidence that the investment is justified and has been considered in "business" terms. Be prepared to support your assumptions possibly using evidence from other organizations.
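The calculation outlined above can be sketched in a few lines of Python. All of the figures here (faults per year, effort per fault, charge-out rate, assumed reduction percentages and yearly program costs) are hypothetical placeholders, as are the function names; substitute your own organization's data and state your assumptions explicitly.

```python
from itertools import accumulate

# Hypothetical inputs; none of these figures come from a real organization.
FAULTS_PER_YEAR = 1000                     # average fault rate x software produced per year
EFFORT_HOURS_PER_FAULT = 16                # average effort to fix one fault
CHARGE_OUT_RATE = 50                       # cost per hour used by the accountants
ASSUMED_REDUCTION_PCT = [5, 10, 15, 15]    # assumed fault-rate reduction, years 1 to 4
PROGRAM_COSTS = [120_000, 80_000, 40_000, 40_000]  # metrics program cost, years 1 to 4


def cost_per_fault(effort_hours, charge_out_rate):
    """Average cost of one fault: effort to fix it times the charge-out rate."""
    return effort_hours * charge_out_rate


def annual_saving(faults_per_year, reduction_pct, fault_cost):
    """Saving in one year if the fault rate falls by reduction_pct percent."""
    return faults_per_year * reduction_pct * fault_cost / 100


def cumulative_net_benefit(costs, savings):
    """Running total of (saving - cost), one entry per year."""
    return list(accumulate(s - c for c, s in zip(costs, savings)))


def break_even_year(cumulative):
    """First year (1-based) whose cumulative figure is not negative, or None."""
    for year, total in enumerate(cumulative, start=1):
        if total >= 0:
            return year
    return None


fault_cost = cost_per_fault(EFFORT_HOURS_PER_FAULT, CHARGE_OUT_RATE)
savings = [annual_saving(FAULTS_PER_YEAR, pct, fault_cost)
           for pct in ASSUMED_REDUCTION_PCT]
cumulative = cumulative_net_benefit(PROGRAM_COSTS, savings)

for year, total in enumerate(cumulative, start=1):
    print(f"Year {year}: cumulative net benefit {total:,.0f}")
print("Break-even year:", break_even_year(cumulative))
```

With these made-up inputs the cumulative figure is negative for the first two years and reaches break-even in the third, which is the shape of result the cumulative presentation is meant to make visible.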

It is also worth describing the additional benefits of the program. The cost/benefit analysis as described above does not quantify the value of improved management information or more accurate cost estimates, other than to assume that these contribute to a reduced defect rate. These enabling aspects of a Software Metrics program often carry as much weight as the cost/benefit analysis, if not more but, as discussed earlier, this can depend on the culture of the organization.

