Final Thoughts and Philosophies on Staffing for the Testing Discipline

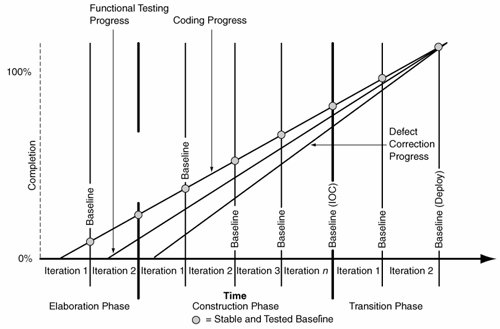

When I entered the fascinating world of software development years ago, I observed certain attitudes toward testing that I found puzzling. Testing as a discipline was considered to have less status and importance than development. It was considered a stepping-stone to "more important" activities, such as coding, and it was where junior personnel aspiring to be developers began their careers. This attitude must change.

I suspect that this attitude originated in earlier years, when the software testing discipline was in its infancy and few tools or formal techniques were available. Most testing consisted of running the software and checking the output for correct results. Furthermore, software systems as a whole were far less complex than they are now. Back then, testers simply did not need much specialized knowledge or skill. This is no longer the case. Some systems support tens of thousands of users. Other systems are safety-critical, or their downtime can cost thousands of dollars per minute. The consequences of failure are much higher. At the same time, the complexity of software systems has grown exponentially. We have certainly moved far beyond the point where testers can merely observe the system's behavior for correct results.

Today's testing tools are very sophisticated and require considerable knowledge, skill, and training to deploy and use properly. Some tools mentioned earlier in this chapter, such as code profiling tools, require a working knowledge of the underlying code to interpret the results correctly. Some projects compensate for this by having the developers test their own code. Although this may make sense in some cases, the workload on most developers and the constant pressure to be productive run counter to thorough and complete testing by the developers themselves. In addition to dedicated testing tools, a number of scripting languages, such as Perl, VBScript, and JavaScript, aid in the testing effort.
These are requisite skills for modern testers.

I consider the best testing groups to be staffed by people who have the talent and skill to be developers, yet choose to be testers. They do not view testing as a stepping-stone to other pursuits. Instead, these professionals recognize the importance of the testing discipline and the value a strong testing effort can bring to a project. Such testers not only find the less obvious problems in a product but can also suggest solutions and work closely with the development team to help prevent and solve these problems. They can also add value proactively, working with the requirements team to define requirements that are easily testable and verifiable.

Fortunately, testing is increasingly being recognized as a key discipline on projects. As a result, testing is beginning to attract highly talented engineers. Enlightened companies seek out these individuals and compensate them accordingly.

Some companies separate testing and quality assurance from product development, so that the testing and quality assurance groups report to a different person in the organization's management chain. I consider this a wise strategy, because it reduces the chance of the testing and quality assurance group being forced to approve a release simply to meet a deadline. It also ensures that testing does not take a backseat to development.

What if a Separate Team, Perhaps Offshore, Performs the Testing?

On some large projects, particularly government projects, a different team performs testing, possibly under a separate contract. Other projects, such as some in the commercial domain, may outsource testing to an offshore company. The tactics for managing these two situations are quite similar. The first tactic is to determine how the test team will become fluent and comfortable with the concept of the system.
Much as with requirements elicitation, this cannot be done by simply supplying manuals and documents. The test team must understand the problem domain itself and then understand how the system under test solves a specific issue in that domain. This process is similar to the one undertaken by the development team. If possible, arrange for members of the test team to meet with selected end users of the new system, as well as with members of the development team. After they understand the customer and the problem domain (and have had time to digest this information), they should view demonstrations of any proof of concept.

The second tactic is to adopt a technique in which each iteration is tested after it is delivered. On small- to medium-sized projects where the test team is colocated with the development team, an iteration should be tested during the iteration. This requires close collaboration between the test team and the development team. When testing is outsourced to a team separated by distance, this may not be practical. Instead, a technique sometimes called pipelining can be used. With pipelining, the release produced by an iteration is delivered to the testing team at the iteration's conclusion. The test team then performs functional and performance testing while the development team begins developing the next iteration. The time allocated for the test team to complete its testing should be the same as the length of the development team's iterations. Thus, when the development team completes an iteration and delivers its release, the testing team should have completed its testing of the previous iteration and reported all of its results. This process is illustrated in Figure 11-5.

Figure 11-5. Testing progress in a "pipelined" process

For this to work efficiently, consider the following:

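As a rough illustration of the pipelined schedule described above, the sketch below computes development and test windows under two simplifying assumptions. First, every iteration has the same fixed length. Second, each test window is given exactly that length and opens the moment the corresponding release is delivered. The names `Window` and `pipelined_schedule` and the use of weeks as the time unit are hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Window:
    """A half-open time window [start, end), measured in weeks (hypothetical unit)."""
    start: int
    end: int


def pipelined_schedule(num_iterations: int, iteration_length: int) -> Tuple[List[Window], List[Window]]:
    """Return (development windows, test windows) for a pipelined process.

    Assumes fixed-length iterations. The test window for iteration k opens when
    iteration k's release is delivered and lasts one iteration length, so it runs
    alongside development of iteration k + 1.
    """
    dev = [Window(k * iteration_length, (k + 1) * iteration_length)
           for k in range(num_iterations)]
    test = [Window(d.end, d.end + iteration_length) for d in dev]
    return dev, test


if __name__ == "__main__":
    dev, test = pipelined_schedule(num_iterations=3, iteration_length=4)
    for k, (d, t) in enumerate(zip(dev, test), start=1):
        print(f"Iteration {k}: developed in weeks {d.start}-{d.end}, tested in weeks {t.start}-{t.end}")
    # Testing of each release finishes exactly when the next release is delivered.
    assert all(test[k].end == dev[k + 1].end for k in range(len(dev) - 1))
```

With three four-week iterations, testing of iteration 1 occupies weeks 4 to 8, exactly while iteration 2 is being developed, so its results are in hand when release 2 arrives. The model also makes visible that the final release needs one more iteration-length window of testing after development ends, which the overall schedule has to allow for.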
Provided that the test team is not left to fend for itself in a vacuum and the practices described here are employed, testing by a separate team can work effectively.

Testing Efforts Gone Awry

Unfortunately, I have witnessed many ineffective testing regimes. Worse, I have seen some testing efforts that were a complete waste of time and resources. Consider the following scenarios. Your organization will not do these things, right?

Little or No Performance/Load/Stress Testing

For any project with a requirement to support a community of users, you should have some method of proving that the system can meet that requirement. As mentioned earlier, customers should insist on seeing this proof. I actually witnessed a group that provided this "proof" by simply having two users access the system manually at the same time and comparing the response times with those measured when only one user was on the system. They then extrapolated the results to hundreds of users, claiming this proved that the system could handle the required load. This is at best naive and at worst just plain wrong. Response time plotted against the number of users is seldom a straight line. It may be roughly linear over part of the range and then climb steeply once the number of users passes a certain point, typically when some resource, such as a CPU, a disk, or a database, approaches saturation.

Succumbing to Pressure

Some organizations, when pressured by deadlines, deliver a system regardless of the test results. This is the "throw it over the wall" strategy. It may satisfy a short-term deadline, but in the long run it seldom works. This often happens when the testers and quality assurance personnel report to the same managers as the development organization. Instead, tell the customer that you have completed development (assuming you have) but that your testing and quality assurance organization has identified issues that need to be corrected before delivery.
Most reasonable customers, although perhaps initially upset, will ultimately respond positively. If you must deliver a system with known issues, at least document the issues and indicate that they are already known. This is better than simply hoping the customer will not find them.

Another form of succumbing to pressure is for the development organization to release a product with little or no testing. This is usually associated with a Waterfall lifecycle model, because nearly all testing is performed at the tail end of the development lifecycle. When development and integration fall behind schedule, testing is severely curtailed or eliminated to meet the final deadline. Adopting an iterative lifecycle process can help prevent this, because testing is spread across every iteration rather than concentrated at the end.

Relying on Developers for All Testing

This is seldom a wise strategy. Most developers do not like to perform testing; that's why many of them became developers in the first place. Furthermore, developers' natural response to schedule pressure is to skimp on testing and get the development done first. You also lose the independent perspective that formal testing provides. Without a second set of eyes looking at the problem, bugs can slip through the cracks.

Ineffective Third-Party Testing

Some customers believe they can obtain independent, unbiased results by having a third party handle testing. In fact, some contractors specialize in these and related activities, known as Independent Verification and Validation (IV&V). Although this may give the customer an added level of assurance, the customer must remember that these third parties will require a complete and extensive set of documentation to be delivered to them long before testing begins. If the third-party contractor is offshore, this documentation is even more important. Here are some examples of the documentation that would be needed:

The contractor also needs to replicate the production environment and therefore needs instances of the production hardware and software to build its test environment. Make sure the IV&V contractor has these and any other resources it needs to be successful.
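To make the earlier load-testing point concrete, the following sketch contrasts the two-user extrapolation with a standard closed queueing model solved by exact Mean Value Analysis (MVA). The model is an illustrative assumption, not a method prescribed in the text. It assumes a single bottleneck resource, a fixed service demand `S` per request, and a fixed think time `Z` between a user's requests. The parameter values (0.2 s of service, 10 s of think time) and the function names are hypothetical.

```python
from __future__ import annotations


def mva_response_times(max_users: int, service_demand: float, think_time: float) -> list[float]:
    """Exact Mean Value Analysis for one queueing resource plus user think time.

    Returns a list whose index n holds the mean response time (seconds) with
    n concurrent users; index 0 is unused and left at 0.0.
    """
    response = [0.0] * (max_users + 1)
    queue_len = 0.0  # mean number of requests at the resource with n - 1 users
    for n in range(1, max_users + 1):
        # Arrival theorem: an arriving request sees the queue of the (n - 1)-user system.
        r = service_demand * (1.0 + queue_len)
        # Interactive response-time law: each user cycles through r + think_time.
        throughput = n / (r + think_time)
        # Little's law applied at the resource.
        queue_len = throughput * r
        response[n] = r
    return response


def linear_extrapolation(r1: float, r2: float, n: int) -> float:
    """Extend the straight line through the 1-user and 2-user results to n users."""
    return r1 + (n - 1) * (r2 - r1)


if __name__ == "__main__":
    # Hypothetical parameters: 0.2 s of work per request, 10 s think time per user.
    S, Z = 0.2, 10.0
    modeled = mva_response_times(200, S, Z)
    for n in (1, 2, 25, 50, 75, 100, 200):
        naive = linear_extrapolation(modeled[1], modeled[2], n)
        print(f"{n:4d} users: model {modeled[n]:6.2f} s, straight-line guess {naive:5.2f} s")
```

Under these assumptions, the 1-user and 2-user response times differ by only about 4 milliseconds. The straight line therefore predicts just under one second even at 200 users. The model, by contrast, bends sharply once the user population passes roughly (S + Z) / S = 51, where the bottleneck saturates, and at 200 users it gives at least 200 × 0.2 − 10 = 30 seconds. Real systems have many interacting resources, so a model like this is no substitute for an actual load test. It only shows why two data points cannot reveal the knee in the curve.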
