3. New Trends in Image and Video Compression

Before going any further, the following question has to be raised: if digital storage is becoming so cheap and so widespread, and the available transmission bandwidth is increasing due to the deployment of cable, fiber optics, and ADSL modems, why is there a need for more powerful compression schemes? The answer lies, without doubt, in mobile video transmission channels and Internet streaming. For a discussion of this topic see [33], [34].

3.1 Image and Video Coding Classification

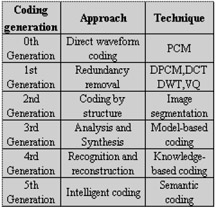

In order to have a broad perspective, it is important to understand the sequence of image and video coding developments expressed in terms of "generation-based" coding approaches. Table 39.3 shows this classification according to [35]. It can be seen from this classification that the coding community has reached third generation image and video coding techniques. MPEG-4 provides segmentation-based approaches as well as model-based video coding in the facial animation part of the standard.

3.2 Coding Through Recognition and Reconstruction

Which techniques fall within the "recognition and reconstruction" fourth generation approaches? The answer is coding through the understanding of the content. In particular, if we know that an image contains a face, a house, and a car, we could develop recognition techniques to identify the content as a preliminary step. Once the content is recognized, content-based coding techniques can be applied to encode each specific object. MPEG-4 provides a partial answer to this approach by using specific techniques to encode faces and to animate them [5]. Some researchers have already addressed this problem. For instance, in [36] a face detection algorithm is presented which helps to locate the face in a videoconference application. Bits are then assigned in such a way that the face is encoded at higher quality than the background.
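The bit assignment idea can be illustrated with a minimal sketch. It is not the method of [36]; it simply assumes the frame is divided into blocks, that a detector has already labeled each block as face or background, and that a hypothetical parameter `face_weight` states how many times more bits a face block should receive than a background block.

```python
def allocate_bits(total_bits, face_blocks, background_blocks, face_weight=4.0):
    """Split a frame's bit budget between face and background blocks.

    Each face block receives `face_weight` times the bits given to a
    background block. `face_weight` is an illustrative assumption, not a
    value taken from any standard or from [36].
    Returns (bits_per_face_block, bits_per_background_block).
    """
    if face_blocks < 0 or background_blocks < 0:
        raise ValueError("block counts must be non-negative")
    weighted_blocks = face_weight * face_blocks + background_blocks
    if weighted_blocks <= 0:
        raise ValueError("need at least one block with positive weight")
    per_background = total_bits / weighted_blocks
    per_face = face_weight * per_background
    return per_face, per_background


if __name__ == "__main__":
    # 10 face blocks and 60 background blocks sharing 1000 bits.
    per_face, per_background = allocate_bits(1000, 10, 60, face_weight=4.0)
    print(per_face, per_background)
```

In a real encoder the per-block budget would typically be enforced indirectly, for example through the quantizer step size, rather than assigned directly as here.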

3.3 Coding Through Metadata

If it is clear that understanding the visual content helps provide advanced image and video coding techniques, then the efforts of MPEG-7 may also help in this context. MPEG-7 aims to specify a standard way of describing various types of audio-visual information. Figure 39.1 gives a very simplified picture of the elements that define the standard. The elements that specify the description of the audio-visual content are known as metadata.

Figure 39.1: MPEG-7 standard.

Once the audio-visual content is described in terms of the metadata, the image is ready to be coded. Notice that what is coded is not the image itself but the description of the image (the metadata). An example will provide further insight.

Let us assume that automatic tools to detect a face in a video sequence are available. Let us further simplify the visual content by assuming that we are interested in high quality coding of a videoconference session. Prior to coding, the face is detected and represented using metadata. In the case of faces, some core experiments in MPEG-7 show that a face can be well represented by a few coefficients, for instance by projecting the face onto a previously defined eigenspace. The face image can then be reconstructed, up to a certain quality, by coding only a very few coefficients. In the next section, we will provide some very preliminary results using this approach.
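The projection step can be sketched as follows. This is a toy illustration under stated assumptions: the mean face and an orthonormal eigenspace basis are taken as already known to both encoder and decoder, and the function names `project` and `reconstruct` are hypothetical. Only the coefficients would need to be transmitted.

```python
def project(face, mean, basis):
    """Return the coefficients of `face` in an orthonormal eigenspace.

    `face` and `mean` are flat lists of pixel values; `basis` is a list of
    orthonormal vectors of the same length (assumed to be precomputed).
    """
    centered = [f - m for f, m in zip(face, mean)]
    return [sum(b * c for b, c in zip(vec, centered)) for vec in basis]


def reconstruct(coeffs, mean, basis):
    """Rebuild an approximate face from its eigenspace coefficients."""
    out = [float(m) for m in mean]
    for coeff, vec in zip(coeffs, basis):
        for i, b in enumerate(vec):
            out[i] += coeff * b
    return out


if __name__ == "__main__":
    # Toy 4-pixel "face" and a 2-vector orthonormal basis (illustrative only).
    mean = [100, 100, 100, 100]
    basis = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, -0.5, -0.5]]
    face = [110, 110, 94, 94]
    coeffs = project(face, mean, basis)          # two numbers instead of four pixels
    print(coeffs, reconstruct(coeffs, mean, basis))
```

The toy face lies exactly in the span of the basis, so the reconstruction is exact; a real face image would generally be recovered only approximately, with quality depending on how many basis vectors are kept.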

Once the face has been detected and coded, the background remains to be coded. This can be done in many different ways. The simplest case is when the background is roughly coded using conventional schemes (1st generation coding). If the background is not important, it need not be transmitted at all, and the decoder adds some previously stored background to the transmitted face image.

For more complicated video sequences, we need to recognize and to describe the visual content. If this is available, then coding is "only" a matter of assigning bits to the description of each visual object.

MPEG-7 will provide mechanisms to fully describe a video sequence (in this section, a still image is considered a particular case of a video sequence). This means that the color and texture of objects, shot boundaries, shot dissolves, shot fading, and even a scene-level understanding of the video sequence will be known prior to encoding. All this information will be very useful to the encoding process. Hybrid schemes could be made much more efficient, in the motion compensation stage, if all this information is known in advance. This approach to video coding is quite new. For further information see [37], [33].

It is also clear that these advances in video coding will be possible only if sophisticated image analysis tools (not part of the MPEG-7 standard) are developed. The deployment of new and very advanced image analysis tools is one of the new trends in video coding. The final stage will be intelligent coding implemented through semantic coding. Once a complete understanding of the scene is achieved, we will be able to say (and simultaneously encode): this is a scene that contains a car, a man, a road, and children playing in the background. However, we have to accept that we are still very far from this 5th generation scheme.

3.4 Coding Through Merging of Natural and Synthetic Content

In addition to the use of metadata, future video coding schemes will merge natural and synthetic content. This will allow an explosion of new applications combining these two types of content. MPEG-4 has provided a first step towards this combination by providing efficient ways of face encoding and animation. However, more complex structures are needed to model, code, and animate any kind of object. The needs identified in [38] are still valid today. No major step has been made concerning the modeling of arbitrarily shaped objects. For some related work see [39].

Video coding will become multi-modal and cross-modal. Speech and audio will come to the rescue of video (or vice versa) by combining both fields in an intelligent way. To the best of our knowledge, the combination of speech and video for video coding purposes has not yet been reported. Some work has been done with respect to video indexing [40], [41], [42].

3.5 Other Trends in Video Compression: Streaming and Mobile Environments

The two most important applications in the future will be wireless or mobile multimedia systems and streaming content over the Internet. While both MPEG-4 and H.263+ have been proposed for these applications, more work needs to be done.

In both mobile and Internet streaming, one major problem needs to be addressed: how should errors due to packet loss be handled, and should the compression scheme adapt to these types of errors? H.263+ [43] and MPEG-4 [44] both have excellent error resilience and error concealment functionalities.

The issue of how the compression scheme should adapt is one of both scalability and network transport design. At a panel on the "Future of Video Compression" at the Picture Coding Symposium held in April 1999, it was agreed that rate scalability and temporal scalability were important for media streaming applications. It also appears that one may want to design a compression scheme that is tuned to the channel over which the video will be transmitted. We are now seeing work done in this area with techniques such as multiple description coding [45], [46].
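The idea behind multiple description coding can be shown with a deliberately simple sketch; it is not the scheme of [45] or [46]. It assumes a one-dimensional signal split into two descriptions (even and odd samples) sent over separate paths, and a decoder that conceals a lost description by averaging the received neighbors. The function names are hypothetical.

```python
def split_descriptions(samples):
    """Split a signal into two descriptions: even-indexed and odd-indexed samples."""
    return samples[0::2], samples[1::2]


def merge_descriptions(even, odd, n):
    """Rebuild `n` samples from two descriptions; a lost one is passed as None.

    Samples from a lost description are concealed by averaging the
    neighboring samples that were received.
    """
    if even is None and odd is None:
        raise ValueError("both descriptions lost")
    out = [None] * n
    if even is not None:
        out[0::2] = even
    if odd is not None:
        out[1::2] = odd
    for i in range(n):
        if out[i] is None:
            neighbors = [out[j] for j in (i - 1, i + 1)
                         if 0 <= j < n and out[j] is not None]
            if not neighbors:
                raise ValueError("no received neighbor to conceal from")
            out[i] = sum(neighbors) / len(neighbors)
    return out


if __name__ == "__main__":
    signal = [10, 20, 30, 40, 50]
    even, odd = split_descriptions(signal)
    print(merge_descriptions(even, odd, len(signal)))   # both paths arrive
    print(merge_descriptions(None, odd, len(signal)))   # even description lost
```

Either description alone yields a usable, lower-quality reconstruction, and both together restore the original; the price is that the two descriptions compress less efficiently than a single stream would.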

MPEG-4 is proposing a new "fine grain scalability" mode and H.263+ is also examining how multiple description approaches can be integrated into the standards. We are also seeing more work in how the compression techniques should be "matched" to the network transport [47], [48], [49].
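One common way to obtain fine-grained rate scalability is to send coefficient values bit-plane by bit-plane, most significant plane first, so that the stream can be cut at any plane. The sketch below illustrates only that general principle for non-negative integers; it is an assumption made for illustration and not a description of the MPEG-4 syntax. The function names are hypothetical.

```python
def to_bit_planes(values, num_planes):
    """Return bit-planes of non-negative integers, most significant first."""
    return [[(v >> p) & 1 for v in values]
            for p in range(num_planes - 1, -1, -1)]


def from_bit_planes(planes, num_planes, count):
    """Rebuild `count` values from the first len(planes) bit-planes.

    Planes that were not received are treated as zero, so fewer planes
    give a coarser approximation of the original values.
    """
    values = [0] * count
    for index, plane in enumerate(planes):
        shift = num_planes - 1 - index
        for i, bit in enumerate(plane):
            values[i] |= bit << shift
    return values


if __name__ == "__main__":
    coefficients = [13, 6, 1]
    planes = to_bit_planes(coefficients, num_planes=4)
    # Truncating the stream after two planes gives a coarse reconstruction.
    print(from_bit_planes(planes[:2], 4, len(coefficients)))
    print(from_bit_planes(planes, 4, len(coefficients)))
```

Each additional plane received halves the maximum reconstruction error, which is what allows the bit rate to be adjusted in small steps after encoding.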

3.6 Protection of Intellectual Property Rights

While the protection of intellectual property rights is not a compression problem, it will have an impact on the standards. Content providers are demanding methods for both conditional access and copy protection. MPEG-4 is studying watermarking and other techniques. The newly announced MPEG-21 will address this in more detail [50].
