6. Quality-of-Service Monitoring at Client

In the previous sections we described how media data can be delivered efficiently and robustly over distributed networks. However, for many end users (clients), the only thing that matters is the quality-of-service (QoS) of the received video. QoS can take the form of the perceived quality of the received content, the response time, the latency/delay, and the playback experience. Received content quality, for example, can vary widely depending on a number of factors, including encoder sophistication, server load, intermediate transformations (re-encoding and transcoding), and channel characteristics (packet loss, jitter, etc.).

The optimized delivery and robust wireless streaming mechanisms proposed in Sections 5.1 and 5.2 aim to enhance QoS from a system resource optimization perspective, taking into account practical constraints such as unreliable, error-prone channels. However, to address QoS end to end, we also need to evaluate QoS at the client side to validate the results. This is especially important for paid delivery services, where QoS must be guaranteed at the receiving end.

6.1 Quality Metrics for Video Delivery

For QoS investigations, many in the networking research community have relied on metrics such as bit and/or packet erasure characteristics. If we focus on video delivery over the common TCP/IP infrastructure, it is more realistic to model errors as packet drops. Unlike ASCII data, encoded (compressed) multimedia data poses special challenges when packets are dropped: the degradation in quality is not uniform. Image/video quality can vary significantly depending on which packets are lost. Losing some packets can prevent a significant portion of the image from being displayed, while losing others causes only slight degradation confined to small, local pixel areas. Quantitative measurements such as bit/packet erasure rates and their variances are therefore not representative of the visual quality perceived by end users, nor, of course, of QoS in general. While scalable encoding/compression aims to reduce the quality fluctuations caused by packet losses, it does not lessen the need for client-side quality monitoring.
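The non-uniform effect of packet loss can be reproduced with a toy model. The Python sketch below is not a JPEG decoder: it assumes a one-dimensional signal coded with a simple previous-sample (DPCM) predictor, split into fixed-size packets, and decoded with lost residuals treated as zero. The names `dpcm_encode`, `dpcm_decode`, and `sad_after_losing_packet` are hypothetical. Every scenario loses exactly one packet, so the packet erasure rate is identical, yet the resulting sum of absolute differences depends strongly on which packet is lost, because an early loss propagates into every later sample.

```python
def dpcm_encode(samples):
    """Residuals of a first-order predictor (previous sample, starting from 0)."""
    prev = 0
    residuals = []
    for s in samples:
        residuals.append(s - prev)
        prev = s
    return residuals


def dpcm_decode(residuals, lost):
    """Rebuild samples by accumulating residuals; lost residuals count as zero (no concealment)."""
    out, acc = [], 0
    for e, is_lost in zip(residuals, lost):
        acc += 0 if is_lost else e
        out.append(acc)
    return out


def sad_after_losing_packet(samples, packet_size, packet_index):
    """Sum of absolute differences when exactly one packet of residuals is dropped."""
    residuals = dpcm_encode(samples)
    lost = [i // packet_size == packet_index for i in range(len(residuals))]
    decoded = dpcm_decode(residuals, lost)
    return sum(abs(a - b) for a, b in zip(samples, decoded))
```

For `ramp = [10 + 2 * i for i in range(16)]` and 4-sample packets, `[sad_after_losing_packet(ramp, 4, k) for k in range(4)]` gives `[244, 84, 52, 20]`: the same one-packet erasure produces errors that differ by more than a factor of ten.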

While QoS can embrace many different metrics describing the user-perceived level of service, in this section we focus on image/video visual quality.

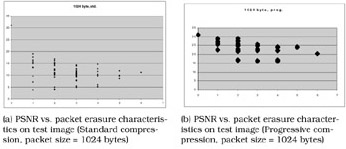

Figure 38.13 shows the extent of quality fluctuations versus packet erasure measurements for standard encoding and for scalable (progressive) encoding of one example image. We use PSNR (peak signal-to-noise ratio) as the objective quality metric, although other metrics can be used for perceptual quality measurement. Here we simulate the characteristics of packet erasure channels (Section 5.2). With an average of one packet erasure (1024 bytes) per JPEG-encoded image, the PSNR of the received image frame can vary from under 10 dB to close to 20 dB, a 10 dB differential! Scalable encoding improves quality despite the packet drops, but the PSNR can still show a 5 to 10 dB differential at most erasure levels. (Similar characteristics have been observed for other images in our experiments.) This example demonstrates the need for good quality metrics and tools for assessing and monitoring the perceived (in this case, visual) quality of the received content.

Figure 38.13: PSNR vs. packet erasure characteristics.
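For reference, PSNR for 8-bit content is $10 \log_{10}(255^2/\mathrm{MSE})$, where MSE is the mean squared error between the original and received pixel values. A minimal Python sketch follows, assuming flattened lists of pixel values and a peak value of 255; the function name `psnr` is our own.

```python
import math


def psnr(original, received, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    if not original or len(original) != len(received):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(original, received)) / len(original)
    if mse == 0:
        return math.inf  # identical content: no noise at all
    return 10.0 * math.log10(peak ** 2 / mse)
```

For example, `psnr([0] * 4, [10] * 4)` (MSE 100) is about 28.13 dB, while `psnr([0] * 4, [60, 20, 0, 0])` (MSE 1000) is about 18.13 dB. Because PSNR is logarithmic in MSE, a tenfold increase in MSE costs exactly 10 dB, which is the size of the differential noted above.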

To verify the results of delivery (through a combination of packet loss, transcoding, or re-encoding, among other operations), we first need a way to reliably assess the quality of the content a client receives. Distortion assessment tools could, for example, be applied to quality-based real-time adaptation of streaming services. A streaming server could increase or decrease the bandwidth/error correction assigned to a stream based on a client's estimated perceptual quality, while a streaming client, given a corrupted frame, could decide whether to render that frame or instead repeat a previous uncorrupted frame. Similarly, encoders or transcoders could use automated quality monitoring to ensure that certain quality bounds are maintained during content transformations such as those of Section 5.1.
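The two adaptation decisions described above can be sketched as simple threshold rules driven by an estimated quality value in dB. Everything in this sketch is an illustrative assumption rather than a method from this chapter: the class `AdaptationPolicy`, the functions `client_choose_frame` and `server_adjust_fec`, the threshold values, and the idea of expressing error protection as a fraction of the stream's bandwidth (the "FEC share").

```python
from dataclasses import dataclass


@dataclass
class AdaptationPolicy:
    # All values are hypothetical placeholders, not recommendations.
    render_threshold_db: float = 25.0  # below this, conceal instead of rendering
    target_db: float = 32.0            # quality the server tries to maintain
    fec_step: float = 0.05             # change in FEC share per adjustment
    fec_min: float = 0.0
    fec_max: float = 0.5               # never spend more than half the bandwidth on FEC


def client_choose_frame(estimated_db: float, policy: AdaptationPolicy) -> str:
    """Client side: render a corrupted frame only if its estimated quality is acceptable."""
    if estimated_db >= policy.render_threshold_db:
        return "render"
    return "repeat_previous"


def server_adjust_fec(current_fec: float, estimated_db: float,
                      policy: AdaptationPolicy) -> float:
    """Server side: raise protection when reported quality is below target, lower it when above."""
    if estimated_db < policy.target_db:
        current_fec += policy.fec_step
    elif estimated_db > policy.target_db:
        current_fec -= policy.fec_step
    # Clamp the share to the allowed range.
    return min(policy.fec_max, max(policy.fec_min, current_fec))
```

In practice, a deadband around the target, or averaging the quality estimate over several frames, would keep the server from oscillating; the sketch omits this for brevity.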

6.2 Watermarking for Automatic Quality Monitoring

For client-side applications, it is often not feasible for client devices to access the original content for quality comparison or assessment. A general quality assessment scheme should therefore not require the original, uncorrupted signal. To tackle this challenge, we propose using digital watermarks as a means of quality assessment and monitoring [10].

Digital watermarking has previously been proposed as a key technology for copyright protection and content authentication. Watermarking considers the problem of embedding an imperceptible signal (the watermark) in a cover signal (most commonly image, video, or audio content) such that the embedded mark can later be recovered, generally to make some assertion about the content, e.g., to verify copyright ownership, content authenticity, or authorization of use [7][5]. Typically, a watermark is embedded by perturbing the host content in some key-dependent way, e.g., by additive noise, quantization, or a similar process.
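As a concrete example of key-dependent additive embedding, the Python sketch below adds a pseudo-random Gaussian pattern, generated from a secret integer key, to the host samples, and detects it by correlating the mean-removed received signal with the same pattern. This is a generic spread-spectrum scheme chosen for illustration, not the specific algorithm of [7], [5], or [10]; the names `key_pattern`, `embed`, and `detect` and the strength parameter `alpha` are our own. The detector output estimates the embedding strength: close to `alpha` with the correct key, close to zero otherwise.

```python
import random


def key_pattern(key, n):
    """Key-dependent zero-mean Gaussian pattern of length n."""
    rng = random.Random(key)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def embed(host, key, alpha):
    """Additive embedding: perturb each host sample by alpha times the pattern."""
    w = key_pattern(key, len(host))
    return [x + alpha * wi for x, wi in zip(host, w)]


def detect(signal, key):
    """Correlation detector; returns an estimate of the embedding strength alpha."""
    w = key_pattern(key, len(signal))
    mean = sum(signal) / len(signal)
    num = sum((s - mean) * wi for s, wi in zip(signal, w))
    den = sum(wi * wi for wi in w)
    return num / den
```

With 40,000 host samples drawn uniformly from 0 to 255 and `alpha = 4`, the host's own contribution to the detector output has a standard deviation of roughly 0.37, so a threshold of 2 separates marked from unmarked content comfortably; shorter signals or weaker marks would need a lower-variance detector.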

The approach is motivated by the intuition that if a host signal is distorted or perturbed, a watermark embedded within it can be designed to degrade as well, so that its degradation reflects the distortion. By embedding known information (the watermark) in the content itself, a detector without access to the original material may be able to deduce the distortions to which the host content has been subjected, based on the distortions the embedded data appears to have encountered.

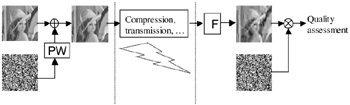

Figure 38.14 shows an overview of the general approach. With a well-designed watermark, it is possible to make a variety of assertions about the distortion to which the host signal has been subjected. In fact, the watermark can be designed to correlate, quantitatively and qualitatively, with perceived quality and other QoS measurements. One approach is to embed into the host signal (for example, an image) a perceptually weighted watermark that reflects human perception of the signal.

Figure 38.14: The system overview (PW: perceptual weighting; F: filtering)
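One common way to realize perceptual weighting is to scale the watermark by how well the local image content masks it: busy, textured regions tolerate a stronger mark than flat ones. The Python sketch below uses the standard deviation of each pixel's 3x3 neighbourhood as a simple activity measure. This masking rule, its `base` and `gain` parameters, and the names `local_std`, `perceptual_weights`, and `embed_weighted` are hypothetical illustrations, not the PW block of Figure 38.14.

```python
import math
import random


def local_std(img, i, j):
    """Standard deviation of the 3x3 neighbourhood of (i, j), truncated at the borders."""
    h, w = len(img), len(img[0])
    vals = [img[y][x]
            for y in range(max(0, i - 1), min(h, i + 2))
            for x in range(max(0, j - 1), min(w, j + 2))]
    mean = sum(vals) / len(vals)
    return math.sqrt(sum((v - mean) ** 2 for v in vals) / len(vals))


def perceptual_weights(img, base=1.0, gain=0.1):
    """Hypothetical masking rule: flat areas get `base`, textured areas proportionally more."""
    return [[base + gain * local_std(img, i, j) for j in range(len(img[0]))]
            for i in range(len(img))]


def embed_weighted(img, key, alpha=1.0):
    """Add a key-dependent Gaussian pattern whose local strength follows the weights."""
    rng = random.Random(key)
    weights = perceptual_weights(img)
    return [[img[i][j] + alpha * weights[i][j] * rng.gauss(0.0, 1.0)
             for j in range(len(img[0]))]
            for i in range(len(img))]
```

On an image whose left half is flat and whose right half is a 0/200 checkerboard, the weights stay at `base` in the flat half and rise to roughly 11 inside the checkerboard, so most of the watermark energy lands where it is least visible.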

There is, however, a tradeoff. The embedded watermark has to be sufficiently robust that small distortions do not cause it to disappear or become undetectable, yet sufficiently "fragile" to reflect quality degradations. To achieve these goals, we propose using the class of robust detectors with bounded distortions, whose response degrades with distortion magnitude in a predictable manner. The detection results can then be correlated with quantitative and/or qualitative quality metrics. In addition, the distortions can be localized and their perceptual impact quantified. We call this "distortion-dependent watermarking."

There have been few studies in this area, and there are many interesting questions and opportunities for further research. [10] provides some basic problem formulations and investigation results. To illustrate, as a basic formulation, we define the quality watermark signal w as

$$w_n = g_n\big(Q(X_n + r_n)\big),$$

where $Q$ is a scalar quantizer with step size $L$, $X_n$ is the $n$th host signal element, $r_n$ is chosen pseudo-randomly and uniformly over the range $[0, L)$, and $g_n(\cdot)$ is the output of the $n$th pseudo-random number generator seeded with the specified argument, i.e., the quantizer output. The quality watermark signal $w$ and a Gaussian-distributed reference watermark $w_{\text{ref}}$ are summed, normalized, and embedded into the content using one of the many watermarking algorithms reported in the literature. This essentially constitutes a pre-processing step applied before the content is manipulated and delivered. In [10], a simple spatial-domain white-noise watermark was used as the underlying embedding/detection mechanism. Several related and widely used objective quality metrics, for example the sum of absolute differences (SAD), can subsequently be estimated from the distorted content. In our investigations, the predicted distortion closely tracked the actual distortion measured in the experiments. Similar approaches can also be used to construct other perceptually weighted distortion metrics.
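The following Python sketch shows why this construction can act as a distortion meter. For simplicity it assumes the receiver already has the embedded values $w_n$ (in practice they would be recovered from the content by the watermark detector, with $w_{\text{ref}}$ providing the normalization) alongside the values $w'_n$ it recomputes from the received content; it also ignores the small perturbation that embedding itself introduces. Because $r_n$ is uniform over $[0, L)$, the position of $X_n + r_n$ within its quantizer cell is uniform, so a distortion $d_n$ with $|d_n| \le L$ changes the quantizer output with probability $|d_n|/L$. The fraction of mismatched elements, multiplied by $L$, therefore estimates the mean absolute distortion, and multiplying further by the number of elements $N$ gives an estimate of the SAD. This estimator is our own illustration under these assumptions, not necessarily the one used in [10]; the names `dither`, `g`, `quality_watermark`, and `estimate_mean_abs_distortion`, and the choice of a Gaussian output for $g_n$, are hypothetical.

```python
import math
import random


def dither(key, n, step):
    """Key-dependent r_n, drawn uniformly from [0, step)."""
    rng = random.Random(key)
    return [rng.random() * step for _ in range(n)]


def g(key, n, q):
    """g_n(q): first output of a PRNG seeded with the key, the index n and the quantizer output q."""
    return random.Random(f"{key}:{n}:{q}").gauss(0.0, 1.0)


def quality_watermark(signal, key, step):
    """w_n = g_n(Q(X_n + r_n)), with Q returning the quantization index floor(x / step)."""
    r = dither(key, len(signal), step)
    return [g(key, n, math.floor((x + rn) / step))
            for n, (x, rn) in enumerate(zip(signal, r))]


def estimate_mean_abs_distortion(w_embedded, w_recomputed, step):
    """Under uniform dither, P(mismatch) = |d_n| / step for |d_n| <= step,
    so step times the mismatch rate estimates the mean of |d_n|."""
    matches = sum(a == b for a, b in zip(w_embedded, w_recomputed))
    return step * (1.0 - matches / len(w_embedded))
```

Distortions larger than $L$ saturate the estimate, since every element then changes cell; the step size $L$ thus sets the range of distortions the watermark can measure, which is one concrete form of the robustness/fragility tradeoff discussed above.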

To our knowledge, the problem of blind signal quality assessment has not been studied extensively, and many more sophisticated solutions are no doubt possible. As digital delivery of content becomes more prevalent in the coming years, we believe such solutions will collectively become important enabling components of the media delivery infrastructure.
