Hardware Components

The following general guidelines are no substitute for your own experience with your application, but they can help you at the outset. For simplicity, the following sections assume that your server is basically dedicated to running SQL Server, with little else going on. You can certainly combine SQL Server with other applications on the same machine, and you can administer SQL Server locally using SQL Enterprise Manager. You can support multiple databases from one SQL Server installation, and in SQL Server 2000, you can have multiple installations of SQL Server on the same machine.

Table 4-2 (adapted from the SQL Server Books Online) shows the hardware requirements for installing SQL Server 2000.

Table 4-2. SQL Server 2000 hardware requirements.

| Hardware | Minimum requirements |

|---|---|

| Computer | Intel or compatible. Pentium 166 MHz or higher. |

| Memory (RAM)1 | Enterprise Edition: 64 MB minimum; 128 MB or more recommended. Standard Edition: 64 MB minimum. Personal Edition: 64 MB minimum on Windows 2000; 32 MB minimum on all other operating systems. Developer Edition: 64 MB minimum. Desktop Engine: 64 MB minimum on Windows 2000; 32 MB minimum on all other operating systems. |

| Hard disk space2 | SQL Server database components: 95 to 270 MB; 250 MB typical. Analysis Services: 50 MB minimum; 130 MB typical. English Query: 80 MB. Desktop Engine only: 44 MB. |

| Monitor | VGA or higher resolution. 800 x 600 or higher resolution required for the SQL Server graphical tools. |

| Pointing device | Microsoft Mouse or compatible. |

| CD-ROM drive | Required. |

1 Additional memory might be required, depending on operating system requirements.

2 Actual requirements will vary based on your system configuration and the applications and features you choose to install.

The Processor

SQL Server is CPU-intensive—more so than you might think if you assume that it is mostly I/O-constrained. Plenty of processing capacity is a good investment. Your system uses a lot of processing cycles to manage many connections, manage the data cache effectively, optimize and execute queries, check constraints, execute stored procedures, lock tables and indexes, and enforce security. Because SQL Server typically performs few floating-point operations, a processor that is fabulous with such operations but mediocre with integer operations is not the ideal choice. Before you choose a processor, you should check benchmarks such as SPECint (integer)—not SPECfp (floating-point) or any benchmark that's a combination of floating-point and integer performance. Performance on SPECint correlates to the relative performance of SQL Server among different processor architectures, given sufficient memory and I/O capacity. The correlation is a bit loose, of course, because other factors (such as the size of the processor cache, the system bus efficiency, and the efficiency of the optimizing compiler used to build the system when comparing processor architectures) also play an important role. But integer-processing performance is a key metric for SQL Server.

SQL Server is built for SMP, but if your system is dedicated to SQL Server and supports fewer than 100 simultaneous users, a single CPU system will likely meet your needs. If you work with large data-warehousing applications, you can use an SMP system effectively with a small number of users. SQL Server 2000 can run complex queries in parallel, which means that the same query is processed simultaneously by multiple processors, each doing a subset of the computations. SQL Server executes queries on multiple processors if sufficient processors are available at the time of execution. (You'll see more details about how to choose the number of processors in Chapter 15.) If you're uncertain about your needs or you expect them to grow, get a single-processor system that can be upgraded to a dual (or even a quad) system.

For your server platform, you should buy the fastest single-processor system in its class. Buy tomorrow's technology, not yesterday's. With many other applications, the extra processing power doesn't make much difference, but SQL Server is CPU-intensive.

TIP

Remember that the processor's cache affects its performance. A big L2 (level 2, or secondary) cache is a good investment. You might see a difference of 20 percent or more simply by using a 512 KB L2 cache instead of a 256 KB L2 cache in a given system. The cost difference is perhaps $100, so this is money well spent.

If your application is already available or you can conduct a fairly realistic system test, you can be much more exacting in evaluating your hardware needs. Using the Windows Performance Monitor, simply profile the CPU usage of your system during a specified period of time. (Use the Processor object and the % Processor Time counter.) Watch all processors if you're using an SMP system. If your system is consistently above 70 percent usage or can be expected to grow to that level soon, or if it frequently spikes to greater than 90 percent for 10 seconds or more, you should consider getting a faster processor or an additional processor. You can use the SPECint95 guidelines to determine the relative additional processing power that would be available by upgrading to a different or an additional processor.

Memory

Memory is used for many purposes in SQL Server 2000; I mentioned some of those purposes in Chapter 3, and I'll discuss memory usage further in Chapter 17. By default, SQL Server 2000 automatically configures the amount of memory it uses based on its needs and the needs of the operating system and other running applications.

If the SQL Server buffer manager can find data in the cache (memory), it can avoid physical I/Os. As discussed earlier, retrieving data from memory is tens of thousands of times faster than the mechanical operation of performing a physical I/O. (Physical I/O operations also consume thousands more CPU clock cycles than simply addressing real memory.) As a rule, you should add physical memory to the system until you stop getting significant increases in cache hits—or until you run out of money. You can easily monitor cache hits by using Performance Monitor. (For more information, see Chapter 17.) Using the SQLServer:Buffer Manager object, watch the Buffer Cache Hit Ratio counter. If adding physical memory makes the buffer cache-hit ratio increase significantly, the memory is a good investment.

As mentioned earlier, by default SQL Server automatically adjusts its memory use to meet its needs and the needs of the rest of the system. However, an administrator can override this and configure SQL Server to use a fixed amount of memory. You should be extremely cautious if you decide to do this. Configuring memory appropriately is crucial to good performance; configuring too little or too much memory can drastically impair performance. Too little memory gives you a poor buffer cache-hit ratio. Too much memory means that the combined working sets of the operating system and all applications (including SQL Server) will exceed the amount of real memory, requiring memory to be paged to and from disk. You can monitor paging in Performance Monitor using statistics such as the Memory object's counter for Pages/Sec.

Fortunately, adding physical memory to systems is usually quite simple (you pop in some SIMMs) and economical. You should start conservatively and add memory based on empirical evidence as you test your application. SQL Server needs no single correct amount of memory, so it's pointless to try to establish a uniform memory configuration for all SQL Server applications that you might ever deploy.

SQL Server can run with a minimum 32 MB system (64 MB for SQL Server Enterprise Edition), but as is typically the case with a minimum requirement, this is not ideal. If your budget isn't constrained, you should consider starting with at least 128 MB of RAM. (If your budget is flexible or you believe that your application will be more demanding, you might want to bump up memory to 256 MB. But it's probably best not to go higher than this initially without some evidence that your SQL Server application will use it.)

When you buy your initial system, give some consideration to the configuration of the memory SIMMs, not only to the total number of megabytes, so that you can later expand in the most effective way. If you're not careful, you can get a memory configuration that makes it difficult to upgrade. For example, suppose your machine allows four memory banks for SIMMs and you want 64 MB of memory that you might upgrade in the future. If your initial configuration of 64 MB was done with four 16MB SIMMs, you're in trouble. If you want to upgrade, you have to remove some or all of the 16-MB SIMMs and buy new memory. But if you configure your 64 MB as two 32-MB SIMMs, you can add another one or two such SIMMs to get to 96 MB or 128 MB without replacing any of the initial memory. Starting with one 64-MB SIMM gives you even more flexibility in upgrading.

When deciding on the initial amount of memory, you should also consider whether your system will be more query-intensive or more transaction-intensive. Although more memory can help either way, it typically benefits query-intensive systems most, especially if part of the data is "hot" and often queried. That data tends to remain cached, and more memory allows more of the data to be accessed from the cache instead of necessitating physical I/Os. If you have a query-intensive system, you might want to start with more memory than 128 MB. You can use the memory not only to keep more of the needed data in cache, but also for some of the SQL Server 2000 query processing techniques, such as hash joins, that work best with large amounts of memory. In contrast, a transaction-intensive system might get relatively few cache hits, especially if the transactions are randomly dispersed or don't rely much on preexisting data. In addition, the query processing techniques used for transaction processing are typically much simpler and can't take advantage of extra memory for the actual processing. In such a system, the amount of memory needed might be low and adding a faster I/O subsystem might make more sense than adding more memory.

Disk Drives, Controllers, and Disk Arrays

Obviously, you need to acquire enough disk space to store all your data, plus more for working space and system files. But you shouldn't simply buy storage capacity—you should buy I/O throughput and fault tolerance as well. A single 8 GB drive stores as much as eight 1 GB drives, but its maximum I/O throughput is likely to be only about one-eighth as much as the combined power of eight smaller drives (assuming that the drives have the same speed). Even relatively small SQL Server installations should have at least two or three physical disk drives rather than a single large drive. Microsoft has benchmarked systems that can do, say, 1000 transactions per second (tps) (in a Debit-Credit scenario) using more than 40 physical disk drives and multiple controllers to achieve the I/O rates necessary to sustain that level of throughput.

If you know your transaction level or can perform a system test to determine it, you might be able to produce a good estimate of the number of I/Os per second you need to support during peak use. Then you should procure a combination of drives and controllers that can deliver that number.

Typically, you should buy a system with SCSI drives, not IDE or EIDE drives. An IDE channel supports only two devices. An EIDE setup consists of two IDE channels and can support four (2 × 2) devices. But more important, an IDE channel can work with only a single device at a time—and even relatively small SQL Server installations usually have at least two or three disk drives. If multiple disks are connected on an IDE channel, only one disk at a time can do I/O. This is especially problematic if the channel also supports a slow device such as a CD-ROM drive. Since the average access time for data on CDs is many times slower than for data on disk, your disk I/O would be delayed while the CD is accessed.

SCSI is also a lot smarter than IDE or EIDE. A SCSI controller hands off commands to a SCSI drive and then logically disconnects and issues commands to other drives. If multiple commands are outstanding, the SCSI controller queues the commands inside the associated device (if the device supports command queuing) so that the device can start on the next request as soon as the current request finishes. When a request finishes, the device notifies the controller. A SCSI channel can support multiple fast drives and allow them to work at full speed. Slow devices such as CD-ROM drives and tape drives do not hog the SCSI channel because the controller logically disconnects. A single SCSI channel can support many drives, but from a practical standpoint, you shouldn't go beyond a 5:1 ratio if you need to push the drives close to capacity. (That is, you should add an additional channel for every five drives or so.) If you're not pushing the drives to capacity, you might be able to use as many as eight to ten drives per channel—even more with some high-end controllers or random I/O patterns.

In TPC benchmarks (which are I/O-intensive, and in which the I/O is deliberately random), Microsoft generally follows a 6:1 ratio of drives per SCSI channel. (Since most of the standard benchmarks measure both performance and cost, Microsoft must balance the cost and benefits, just as they would for a real application.) Most real applications don't have such perfectly random I/O characteristics, however. When the I/O is perfectly random, a significant amount of the I/O time is spent with the disk drives seeking data rather than transferring data, which consumes the SCSI channel. Seeking is taxing only on the drive itself, not on the SCSI channel, so the ratio of drives per channel can be higher in the benchmark.

Note that this discussion is in terms of channels, not controllers. Most controllers have a single SCSI channel, so you can think of the two terms as synonymous. Some high-end cards (typically RAID controllers, not just standard SCSI) are dual-channel cards.

Some History

The original acronym RAID apparently stood for "redundant array of inexpensive disks," as evidenced in the paper presented to the 1988 ACM SIGMOD by Patterson, Gibson, and Katz called "A Case for Redundant Arrays of Inexpensive Disks (RAID)." However, the current RAIDbook, published by the RAID Advisory Board, uses independent, which seems like a better description because the key function of RAID is to make separate, physically independent drives function logically as one drive.

RAID Solutions

Simply put, RAID solutions are usually the best choice for SQL Server for these reasons:

- RAID makes multiple physical disk drives appear logically as one drive.

- Different levels of RAID provide different performance and redundancy features.

- RAID provides different ways of distributing physical data across multiple disk drives.

- RAID can be provided as a hardware solution (with a special RAID disk controller card), but it doesn't necessarily imply special hardware. RAID can also be implemented via software.

- Windows NT and Windows 2000 Server provides RAID levels 0 (striping), 1 (mirroring), and 5 (striping with parity) for implementation with SQL Server.

Some RAID levels provide increased I/O bandwidth (striping), and others provide fault-tolerant storage (mirroring or parity). With a hardware RAID controller, you can employ a combination of striping and mirroring, often referred to as RAID-10 (and sometimes as RAID-1+0 or RAID-1&0). RAID solutions vary in how much additional disk space is required to protect the data and how long it takes to recover from a system outage. The type and level of RAID you choose depends on your I/O throughput and fault tolerance needs.

RAID-0

RAID-0, or striping, offers pure performance but no fault tolerance. I/O is done in "stripes" and is distributed among all drives in the array. Instead of the I/O capacity of one drive, you get the benefit of all the drives. A table's hot spots are dissipated, and the data transfer rates go up cumulatively with the number of drives in the array. So although an 18-GB disk might do 100 random I/Os per second, an array of eight 2.1-GB disks striped with Windows NT Server or Windows 2000 Server's RAID-0 might perform more than 400 I/Os per second.

Choosing an Appropriate Backup Strategy

Although you can use RAID for fault tolerance, it's no substitute for regular backups. RAID solutions can protect against a pure disk-drive failure, such as a head crash, but that's far from the only case in which you would need a backup. If the controller fails, garbage data might appear on both your primary and redundant disks. Even more likely, an administrator could accidentally clobber a table, a disaster (such as a fire, flood, or earthquake) could occur at the site, or a simple software failure (in a device driver, the operating system, or SQL Server) could threaten your data. RAID doesn't protect your data against any of those problems, but backups do. I'll talk about SQL Server's options for backing up your data in Chapter 5.

NOTE

You could argue that RAID-0 shouldn't be considered RAID at all because it provides no redundancy. That might be why it's classified as level 0.

Windows NT and Windows 2000 provides RAID-0; a hardware RAID controller can also provide it. RAID-0 requires little processing overhead, so a hardware implementation of RAID-0 offers at best a marginal performance advantage over the built-in Windows NT and Windows 2000 capability. One possible advantage is that RAID-0 in hardware often lets you adjust the stripe size, while the size is fixed at 64 KB in Windows NT and Windows 2000 and cannot be changed.

RAID-1

RAID-1, or mirroring, is conceptually simple: a mirror copy exists for every disk drive. Writes are made to the primary disk drive and to the mirrored copy. Because writes can be made concurrently, the elapsed time for a write is usually not much greater than it would be for a single, unmirrored device. The write I/O performance to a given logical drive is only as fast as that of a single physical drive.

Therefore, you can at best get I/O rates of perhaps 100 I/Os per second to a given logical RAID-1 drive, as opposed to the much higher I/O rates to a logical drive that can be achieved with RAID-0. You can, of course, use multiple RAID-1 drives. But if a single table is the hot spot in your database, even multiple RAID-1 drives won't be of much help from a performance perspective. (SQL Server 2000 lets you create filegroups within a database for, among other purposes, placing a table on a specific drive or drives. But no range partitioning capability is available for data within a table, so this option doesn't really give you the control you need to eliminate hot spots.) If you need fault tolerance, RAID-1 is normally the best choice for the transaction log. Because transaction log writes are synchronous and sequential, unlike writes to the data pages, they're ideally suited to RAID-1.

Hardware-Based vs. Software-Based RAID

If price and performance are your only criteria, you might find that software-based RAID is perfectly adequate. If you have a fixed budget, the money you save on hardware RAID controllers might be better spent elsewhere, such as on a faster processor, more disks, more memory, or an uninterruptible power supply.Purely in terms of performance, it might not be wise to spend extra money on low-end hardware RAID (if you have sufficient CPU capacity in the server), but mid-level to high-end RAID offers many other benefits, including hot swappability of drives and intelligent controllers that are well worth the extra money—especially for systems that need to run 24 hours a day, 7 days a week.

You might also combine hardware-based and software-based RAID to great advantage. For example, if you have three hardware-based RAID units, each with seven 9 GB disks, with RAID-5 you'd have 6 x 9 = 54 GB in each, and Windows NT and Windows 2000 would see three 54 GB disks. You can then use software-based RAID on Windows NT and Windows 2000 to define a RAID-0 striped volume to get a single 162 GB partition on which to create SQL Server databases.

RAID-1 can significantly increase read performance because a read can be obtained from either the primary device or the mirror device. (A single read isn't performed faster, but multiple reads will have a faster overall time because they can be performed simultaneously.) If one of the drives fails, the other continues and SQL Server uses the surviving drive. Until the failed drive is replaced, fault tolerance is not available unless multiple mirror copies were used.

NOTE

The Windows NT and Windows 2000 RAID software doesn't allow you to keep multiple mirror copies; it allows only one copy. But some RAID hardware controllers offer this feature. You can keep one or more mirror copies of a drive to ensure that even if a drive fails, a mirror still exists. If a crucial system is difficult to access in a timely way to replace a failed drive, this option might make sense.

Windows NT and Windows 2000 Server provides RAID-1, and some hardware RAID controllers also provide it. RAID-1 requires little processing overhead, so a hardware implementation of RAID-1 offers at best a marginal performance advantage over the built-in Windows NT and Windows 2000 capability (although the hardware solution might provide the ability to mirror more than one copy of the drive).

RAID-5

RAID-5, or striping with parity, is a common choice for SQL Server use. RAID-5 not only logically combines multiple disks to act like one disk, but it also records extra parity information on every drive in the array (thereby requiring only one extra drive). If any drive fails, the others can reconstruct the data and continue without any data loss or immediate down time. By doing an Exclusive OR (XOR) between the surviving drives and the parity information, the bit patterns for a failed drive can be derived on the fly.

RAID-5 is less costly to implement than mirroring all the drives because only one additional drive is needed rather than the double drives that mirroring requires. Although RAID-5 is commonly used, it's often not the best choice for SQL Server because it imposes a significant I/O hit on write performance. RAID-5 turns one write into two reads and two writes to keep the parity information updated. This doesn't mean that a single write takes four or five times as long, because the operations are done in parallel. It does mean, however, that many more I/Os occur in the system, so many more drives and controllers are required to reach the I/O levels that RAID0 can achieve. So although RAID-5 certainly is less costly than mirroring all the drives, the performance overhead for writes is significant. Read performance, on the other hand, is excellent—it's almost equivalent to that of RAID-0.

RAID-5 makes sense, then, for the data portion of a database that needs fault tolerance, that is heavily read, and that does not demand high write performance. Typically, you shouldn't place the transaction log on a RAID-5 device unless the system has a low rate of changes to the data. (As mentioned before, RAID-1 is a better choice for the transaction log because of the sequential nature of I/O to the log.)

RAID-5 requires more overhead for writes than RAID-0 and RAID-1 do, so the incremental advantage provided by a hardware RAID-5 solution over the Windows NT and Windows 2000 software solution can be somewhat higher because more work must be offloaded to the hardware. However, such a difference would be noticeable only if the system were nearly at I/O capacity. If this is the case in your system, you probably shouldn't use RAID-5 in the first place because of the write performance penalty. You're probably better served by the combination of RAID-0 and RAID-1, known as RAID-10.

RAID-10

RAID-10, or mirroring and striping, is the ultimate choice for performance and recoverability. This capability is really not a separate type of RAID, but rather a combination of RAID-1 and RAID-0. It is sometimes also referred to as RAID-1+0 or RAID-1&0. A set of disks is striped to provide the performance advantages of RAID-0, and the stripe is mirrored to provide the fault-tolerance features and increased read performance of RAID-1. Performance for both writes and reads is excellent.

The most serious drawback to RAID-10 is cost. Like RAID-1, it demands duplicate drives. In addition, the built-in RAID software in the operating system doesn't provide this solution, so RAID-10 requires a hardware RAID controller. Some hardware RAID controllers explicitly support RAID-10, but you can achieve this support using virtually any RAID controller by combining its capabilities with those of Windows NT and Windows 2000. For example, you can set up two stripe sets of the same size and number of disks using hardware RAID. Then you can use Windows NT and Windows 2000 mirroring on the two stripe sets, which Windows NT and Windows 2000 sees as two drives of equal size. If you need high read and write performance and fault tolerance and you cannot afford an outage or decreased performance if a drive fails, RAID-10 is the best choice.

A separate RAID-10 array is usually not an appropriate choice for the transaction log. Because the write activity tends to be sequential and is synchronous, the benefits of multiple disks are not realized. A RAID-1 mirroring of the transaction log is preferable and cheaper. With internal Debit-Credit benchmarks, Microsoft has shown that the transaction log on a simple RAID-1 device is sufficient to sustain thousands of transactions per second—which is likely more than you need. Log records are packed, and a single log write can commit multiple transactions (known as group commit). In the Debit-Credit benchmarks, 40 transactions can be packed into a log record. At a rate of 100 writes per second (writes/second), the log on a RAID-1 mirror would not become a bottleneck until around 4000 tps.

In fact, this threshold is probably even higher than 4000 tps. For pure sequential I/O, 100 writes/second is a conservative estimate. Although a typical disk drive is capable of doing 100 random I/Os per second, the rate for pure sequential I/O—largely eliminating the seek time of the I/Os—is probably better than 200 writes/second.

In a few cases, a physically separate RAID-10 array for the log might make sense—for example, if your system requires high online transaction processing (OLTP) performance but you also use replication of transactions. Besides performing sequential writes to the log, the system also does many simultaneous reads of the log for replication and might benefit from having the multiple disks available. (In most cases, however, the log pages are in cache, so RAID-10 probably won't provide a significant benefit. However, if replication is in a "catch-up" mode because the distribution database isn't available or for some other reason, some significant benefit might exist.)

RAID-01

Most of the discussion in the SQL Server documentation that concerns combining RAID0 and RAID-1 assumes that two stripes will be created first, and then one of the stripes will be defined as the mirror of the other. However, there is another possibility, which is to create multiple mirrored sets of disks first and then create a stripe from the mirrors. The first solution is referred to as mirrored stripes, and is the RAID 10 solution I discussed in the previous section. The second solution is referred to as striped mirrors and can be called RAID-01.

NOTE

Both RAID-10 and RAID-01 refer to combining the capabilities of RAID-0 (striping) with RAID-1 (mirroring) so that speed and fault tolerance are achieved, without the overhead of computing a parity value. However, the terminology is not standard. As mentioned previously, some sources will refer to RAID-1&0, or RAID-1+0. Some use the term RAID-10 to refer to striping a set of mirrored volumes, and use RAID-01 to refer to mirroring a striped volume, which is the opposite of the terminology I've been using. In many cases it might not matter which is done first, striping or mirroring, at least for the discussion at hand. I hope that in situations where it does matter, the authors of whatever sources you read will define the terms they use.

Choosing the Best RAID Solution

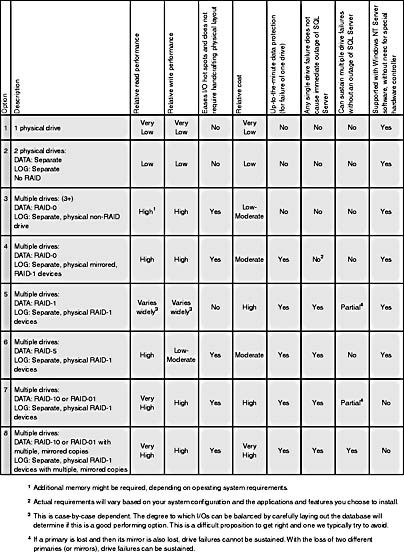

Table 4-3 summarizes the characteristics of various RAID configurations commonly used with SQL Server. Obviously, innumerable configurations are possible, but this chart will help you predict the characteristics for additional configurations as well as typical ones. You should reject Option 1 for all but the smallest environments because with this option the entire system runs with one disk drive. This is not efficient even for a low-end system, unless you have little write performance and enough memory to get a high cache-hit ratio. Unless you're running SQL Server on a laptop, there's little excuse for using only one drive.

Option 2, two physical drives and no use of RAID, represents an entry-level or development system. It offers far-from-ideal performance and no fault tolerance. But it is significantly better than having only a single drive. Because you should separate your data from your log before you even think about RAID, putting your data on one drive and your log on the other is the best you can do.

If you don't need fault tolerance and limited recovery ability is sufficient, Option 3—using RAID-0 for the data and placing the transaction log on a separate physical device (without mirroring)—is an excellent configuration. It offers peak performance and low cost. One significant downside, however, is that if the drive with the log fails, you can recover the data only to the point of your last transaction log or database backup. Because of this, Option 3 is not appropriate for many environments. On the other hand, if you lose the drive with the data, you can back up the log to the point of failure using the NO_TRUNCATE option and then restore that last log backup as the last step in restoring the whole database.

Option 4 is the entry level for environments in which it is essential that no data be lost if a drive fails. This option uses RAID-0 (striping, without parity) for the devices holding the data and uses RAID-1 (mirroring) for the transaction log, so data can always be recovered from the log. However, any failed drive in the RAID-0 array renders SQL Server unable to continue operation on that database until the drive is replaced. You can recover all the data from the transaction logs, but you experience an outage while you replace the drive and load your transaction logs. If a drive fails on either a transaction log or its mirrored copy, the system continues unaffected, using the surviving drive for the log. However, until the failed log drive is replaced, fault tolerance is disabled and you're back to the equivalent of Option 3. If that surviving drive also fails, you must revert to your last backup and you'll lose changes that occurred since the last transaction log or database backup.

Table 4-3. Characteristics of RAID configurations used with SQL Server.

Option 4 offers excellent performance—almost as good as Option 3. This might come as a surprise to those concerned with the mirroring of the log. RAID-1, of course, turns a single write into two. However, since the writes are done in parallel, the elapsed time for the I/O is about the same as it would be for a single drive, assuming that both the primary and mirrored drives are physically separate and are used only for log operations. If you need to protect all data but recovery time is not a major concern and an outage while you recover is acceptable (that is, if loading transaction logs or database backups is acceptable), Option 4 is ideal.

If you cannot tolerate an interruption of operations because of a failed drive, you should consider some form of RAID-1 or RAID-5 for your database (as well as for the master database and tempdb, the internal workspace area), as described in the following discussion of Options 5 through 8.

Option 5 uses basic RAID-1, a one-for-one mirroring of drives for the data as well as the log. I don't recommend this option because RAID-1 doesn't provide the performance characteristics of RAID-0. Recall that RAID-0 enables multiple drives to operate logically as one, with additive I/O performance capabilities. Although RAID1 can theoretically work well if you handcraft your data layout, in practice this approach has the same drawbacks as using filegroups instead of RAID-0. (See the following sidebar regarding filegroups.) This high-cost option is usually not a high-performing option, at least if the database has significant hot spots. It's appropriate only for specialized purposes in which I/O patterns can be carefully balanced.

Data Placement Using Filegroups

Are you better off simply putting all your data on one big RAID-0 striped set, or should you use filegroups to carefully place your data on specific disk drives? Unless you're an expert at performance tuning and really understand your data access patterns, I don't recommend using filegroups as an alternative to RAID. To use filegroups to handcraft your physical layout, you must understand and identify the hot spots in the database. And although filegroups do allow you to place tables on certain drives, doing so is tricky. It's currently not possible to partition certain ranges of a table to different disks, so hot spots often occur no matter how cleverly you designed your data distribution. If you have three or more physical disk drives, you should probably use some level of RAID, not filegroups. I'll discuss filegroups in detail in Chapter 5.

Option 6, using RAID-5 for the data and RAID-1 for the log, is appropriate if the write activity for the data is moderate at most and the update activity can be sustained. Remember that RAID-5 imposes a significant penalty on writes because it must maintain parity information so that the data of a failed drive can be reconstructed on the fly. Although the system does remain usable while operating with a failed drive, I/O performance is terrible: each read of the failed disk must read each of the other disks in the array and reconstruct the value from the failed drives, and each write becomes a similar read from each of the other disks, plus a write, so throughput drops precipitously when a drive fails. With RAID-5, if you lose another drive in the system before you replace the first failed drive, you can't recover because the parity information can't continue to be maintained after the first drive is lost. Until the failed drive is replaced and regenerated, continued fault-tolerance capability is lost. RAID5 uses fewer bytes for storage overhead than are used by mirroring all drives, but because disk drives are inexpensive and disks are rarely full, the performance tradeoff is often simply not worth it. Finally, RAID-5 has more exposure to operational error than mirroring. If the person replacing the failed drive in a RAID-5 set makes a mistake and pulls a good drive instead of the failed drive, the failed drive might be unrecoverable.

If you need high read and write performance, cannot lose any data, and cannot suffer an unplanned system outage when a drive fails, Option 7—RAID-10 or RAID-01 and a simple RAID-1 mirror for your log—is the way to go. As mentioned earlier, RAID-10 offers the ultimate combination of performance and fault tolerance. Its obvious disadvantage is cost. Option 7 requires not only a doubling of disk drives but also special hardware array controllers, which command a premium price.

Options 7 and 8 differ in whether additional mirrored copies are kept. Option 7 presents the typical case using either RAID-10 or RAID-01. Although both RAID-10 and RAID-01 exhibit the same behavior when all the disks are functional, there is a significant difference when things start to break, both in terms of the fault tolerance provided and in terms of performance.

Fault tolerance comparison of RAID-10 and RAID-01 I can best describe the difference in fault tolerance between RAID-10 and RAID-01 with an example. Suppose we have 20 disk drives. For RAID-10, we could first form two stripes of ten disks each and then mirror one stripe on the other. For RAID-01, we could first define ten mirrored volumes and then create a stripe from those ten volumes. Either system can survive a failure of a single disk. But what about a second disk failure? What are the chances that a second drive failure will bring down the whole system?

Using RAID-10, if one disk in each stripe fails, the entire system will fail. You can compare this to having ten red balls and ten black balls in a bucket, and asking what the chances are of pulling out two balls with different colors in the first two pulls. After you pull out the first ball, statistically you have a 10/19 chance to pull out a ball with the opposite color in the second pull. In other words, there's a 52.6 percent chance that a second failure will bring the system down.

Using RAID-01 and a stripe of ten mirrors, the system would break down only if two disks from the same mirror crash. In our bucket of balls analogy, we have ten pairs of balls, each pair with a different color. What are the chances of pulling out two balls with the same color in our first two pulls? After pulling out the first ball, statistically, we have a 1/19 chance to pull out a ball with the same color in our second pull, or a 5.3 percent chance that a second failure will bring the system down. So we can conclude that the latter configuration, using a stripe of mirrors, is much safer in terms of fault tolerance—about ten times safer.

Performance comparison of RAID-10 and RAID-01 If one of the disks in a mirrored stripe (RAID 10) fails, the whole failed stripe is unusable, leaving ten operational disks; therefore, we potentially lose 50 percent read performance when performing asynchronous I/O. On the other hand, if one of the disks in a striped mirror (RAID 01) fails, only one disk is unusable, leaving 19 operational disks; therefore, we potentially lose only 5 percent read performance when performing asynchronous I/O.

Option 7, using the combined capabilities of striping and mirroring (RAID-10 or RAID-01), is probably a better choice for many sites that currently use RAID-5. The performance and fault-tolerance capabilities are significantly better, and with today's low-priced hardware, the cost isn't prohibitive.

If you need the utmost in fault tolerance of drive failures and your application is extremely mission critical, you can consider more than a single mirrored copy of the data and log. Option 8 is overkill for most environments, but hard drive prices are low enough that this option isn't necessarily prohibitively expensive. If you have a server in a remote offsite location and it's difficult to get a technician to the site in a timely manner, building in expensive fault-tolerance capabilities might make sense.

The bottom line: buy the right system

By now, you should know there's no one correct answer to the RAID-level question. The appropriate decision depends on your performance characteristics and fault tolerance needs. A complete discussion of RAID and storage systems is beyond the scope of this book. But the most important point is that you shouldn't simply buy storage space—you should buy the system that gives you the appropriate level of redundancy and fault tolerance for your data.

SEE ALSO

For a detailed, authoritative discussion of RAID, consult the RAIDbook produced by the RAID Advisory Board. For more information about the RAID Advisory Board, see its home page at http://www.raid-advisory.com.

Hardware or software RAID?

After choosing a RAID level, you must decide whether to use the RAID capabilities of your operating system or those of a hardware controller. If you decide to use RAID10, the choice is simple. Windows NT Server RAID does not currently allow you to keep more than one mirror copy of a drive, so you must use hardware array controllers. Windows 98 doesn't support software-based RAID at all, and Windows NT Workstation and Windows 2000 Professional provide only RAID-0 support.

As for solutions using RAID-0 and RAID-1, the performance advantage of a hardware array controller over the capabilities of the operating system might be minimal. If price and performance are your main criteria, you can use standard fast-wide SCSI controllers and use the money you save to buy more drives or more memory. Several of the formal TPC benchmarks submitted using SQL Server used only the Windows NT versions of RAID-0 and RAID-1 solutions (Option 4) and standard SCSI controllers and drives, with no special hardware RAID support. Hardware RAID solutions provide the largest relative performance difference over the equivalent Windows NT and Windows 2000 software solution if you use RAID-5, but even in this case, the difference is usually marginal at best. Each hardware RAID controller can cost thousands of dollars, and a big system still needs multiple controllers. Using hardware controllers makes the most sense when you need the additional capabilities they provide, such as hot swappability (discussed in the following section).

More About Drives and Controllers

No matter what RAID level you use, you should replace a failed drive as soon as possible. For this reason, you should consider a system that offers hot swappable drives. These drives let you quickly replace the faulty drive without shutting down the system. If the drive is part of a RAID-1 or RAID-5 set, SQL Server can continue to run without error or interruption when the drive fails and even while it is being physically replaced. If you use the Windows NT or Windows 2000 version of RAID1 or RAID-5, you must shut down SQL Server only briefly to reestablish the fault tolerance for the drive because that process requires an exclusive lock on the disk drive by the operating system. (For RAID-1 you'll need to break and re-establish the mirror, and for RAID-5 you'll need to regenerate the striped set with parity.) When you restart SQL Server, the server computer won't even need to be rebooted. (Some hardware RAID solutions might also be able to regenerate or remirror the drive "below" the level of the operating system and hence not require SQL Server to be shut down.) Some systems also offer hot-standby drives, which are simply extra drives that are already installed, waiting to take over. Either of these approaches can get you back in business quickly.

Your disk controller must guarantee that any write operation reported to the operating system as successful will actually be completed. You should never use a write-back caching controller that can "lie" (report a write as completed without guaranteeing that it will actually perform the write) because your databases can become corrupted. In a write-back cache scheme, performance is increased because the bits are simply written to the cache (memory) and the I/O completion is immediately acknowledged. The controller writes the bits to the actual media a moment later. This introduces a timing window that makes the system vulnerable unless the controller designer has protected the cache with a battery backup and has provided correct recovery logic.

Let's say, for example, that a power failure occurs immediately after the controller reports that a write operation has completed but before the cache is actually written to disk. With write-ahead logging, SQL Server assumes that any change is physically written to its transaction log before it acknowledges the commit to the client. If the controller has just cached the write and then fails and never completes it, the system's integrity is broken. If a controller provides write-back caching as a feature, it must also guarantee that the writes will be completed and that they will be properly sequenced if they are reported to the operating system as successful. To be reliable, the caching controller also must guarantee that once it reports that an I/O has completed, the I/O will actually be carried out, no matter what. Such a controller typically employs a built-in battery backup and has solutions that guarantee that any write reported as completed will in fact be completed. If the system fails before a write operation has been completed, the operation is maintained in the controller's memory and is performed immediately after the system restarts, before any other I/O operations occur.

If a controller cannot guarantee that the write will ultimately be completed, you should disable the write-back caching feature of the controller or use a different controller. Disabling write-back caching in an I/O-intensive environment (more than 250 I/Os per second) might result in a SQL Server performance penalty of less than 5 percent. (After all, those writes must be performed eventually. The caching reduces the latency, but ultimately just as much I/O must be carried out.) In a less I/O-intensive test, the effect would likely be negligible. Realistically, the amount of the penalty doesn't matter much if the write isn't guaranteed to complete. Write-back caching must be disabled, or you run the risk of corrupting your data.

Uninterruptible Power Supply

If you're not using a write-back caching controller, you don't need UPS hardware to protect the integrity of your data from a power outage. SQL Server recovers your system to a consistent state after any sudden loss of power. However, you should definitely consider using a UPS; support for UPS hardware is built into Windows NT and Windows 2000 Server. Most hardware failures, such as memory failures and hardware crashes, result from power spikes that a UPS would prevent. Adding a UPS is probably the single most important thing you can do from a hardware perspective to maximize the availability and reliability of your system.

Without a UPS, the machine reboots and perhaps does a CHKDSK of the file system after a power flicker or power spike. This can take several minutes. During this time, your SQL Server is unavailable. A UPS can prevent these interruptions. Even if a power outage lasts longer than the life of your UPS, you have time to do an orderly shutdown of SQL Server and checkpoint all the databases. The subsequent restart will be much faster because no transactions will need to be rolled back or rolled forward.

Battery Backup, UPS, and Caching

A UPS is not the same as the battery backup of the on-board controller RAM. The job of a UPS is to bridge power failures and to give you time to do an orderly shutdown of the system. The computer continues to run for a while from the power supplied by the UPS, and it can be shut down in an orderly way (which the UPS software will do) if power isn't restored within the expected battery life.The battery backup on a caching controller card ensures that the contents of the controller's volatile memory survive; the memory is not lost, so when the system restarts, the write completes. But it doesn't provide the power to actually ensure that a write succeeds. Some cards even allow the battery backed-up RAM to be moved to a different controller if the initial controller fails. Some provide two sets of batteries so that one can be replaced while the other maintains the RAM's contents, theoretically allowing you to keep adding new batteries indefinitely.

You might expect that using a caching controller that doesn't have a battery backup in combination with a UPS is generally OK if you do an orderly shutdown of SQL Server during the life of the UPS's power. However, this isn't always the case. SQL Server support engineers who get customers' servers back up and running can tell horror stories about customers who use write-caching controllers that were not designed around transactional integrity. The caching controller must take into account issues besides power. For example, how does the controller respond to the user pressing the Reset button on the computer? A couple of years ago, a well-known caching controller would have dumped its cache if you hit the Reset button—those writes were never carried out even though the calling application (SQL Server) was notified that they had been carried out. This situation often led to corrupt databases.

The Disk Subsystem

The reliability of the disk subsystem (including device drivers) is vital to the integrity of your SQL Server data. To help you uncover faulty disk systems, the SQL Server support team created a stand-alone, I/O-intensive, multithreaded file system stress test application. This application exercises the Win32 overlapped (asynchronous) I/O services, opens its files with the same write-through cache flags used by SQL Server, and performs similar types of I/O patterns. But the test is totally distinct from SQL Server. If this non-SQL Server I/O test cannot run without I/O errors, you do not have a reliable platform on which to run SQL Server, period. Of course, like any diagnostic program, the test might miss some subtle errors. But your confidence should certainly be higher than it would be without testing. You can download the test program for free from the Microsoft Support site at http://support.microsoft.com. On the Knowledge Base Search page, select All Microsoft Products in Step 1. In Step 2, select Specific Article ID Number. In Step 3, enter Q231619. Click Go. Open the Knowledge Base article that's returned ("INF: SQL70IOStress Utility to Stress Disk Subsystem" and download the file called SQL70IOStress.exe. SQL70IOStress.exe is also included on the companion CD.

Fallback Server Capability

SQL Server Enterprise Edition can take advantage of Microsoft Cluster Server (MSCS), which is available on Windows NT 4 Server, Enterprise Edition, Windows 2000 Advanced Server, and Windows 2000 Datacenter Server. You can install SQL Server 2000 on multiple nodes in a cluster, and all nodes are seen as having a single name on the network. Applications do not have to be aware that SQL Server is installed on a cluster, and MSCS handles the failover completely invisibly to users of SQL Server. For more details, see the operating system documentation.

MSCS requires specialized hardware. By using various RAID options, you can protect your data without clustering. But without clustering, you are not protected against an application outage if the machine fails. MSCS can automatically shift the control of a "switchable" hard-drive array from a damaged node in the cluster to another node. SQL Server 2000 supports two-node clustering on Windows NT Server 4, Enterprise Edition and Windows 2000 Advanced Server, and up to four-node clustering on Windows 2000 Datacenter Server. Only one of the connections to the hard-drive array is active at any time. One of the other hard-drive connections becomes active only if the system detects that the computer currently in control of the hard drive has shut down because of a hardware failure.

The SQL Server installation on the other nodes in the cluster can perform useful work while the first installation of SQL Server operates normally and accesses the data on the shared disk. The other servers can run SQL Server (using different databases than those used by the primary server), they can act as file and print servers, or they can run some other application. If another server needs to take over the work of the first server, that second server must have enough resources to add the primary server's workload to its existing workload.

NOTE

Keep in mind that even with clustering, you need RAID to protect against media failure. The log, at the very least, must be mirrored to protect against a disk failure. The second server can run the disk drives of the failed first server, but those drives must also be protected against media failure.

Most applications probably do not need clustering because today's hardware is highly reliable. The most common hardware failure occurs in a disk drive, which can be protected without clustering by using RAID. As with RAID, clustering does not reduce the need for comprehensive and rigorous backup procedures. You'll probably need backups to recover from an occasional administrator mistake (human error), a catastrophe such as a fire or flood at the site, or a software anomaly. But some applications are so critical that the redundancy of clustering is necessary.

Other Hardware Considerations

If the server is mostly a "lights-out" operation and you won't be administering it locally or running other applications from the actual server machine, it doesn't make much sense to buy a first-class monitor or video card. Consider using an electronic switch box and sharing a monitor, keyboard, and mouse among multiple servers. If you don't use multiple servers, use a basic VGA monitor and video card.

From a performance perspective, a single high-quality network card is sufficient to handle virtually every SQL Server installation. Windows NT and Windows 2000 Server can support multiple network cards simultaneously, and sometimes your network topology will compel you to use this configuration with SQL Server as well. (Perhaps you're supporting both Ethernet and token ring clients on the same server.) However, one network card usually is more than enough—some sites support hundreds or thousands of users with a single 10-Mbit Ethernet card.

When you plan your hardware configuration, be sure to plan for your backup needs. You can back up to either tape or disk devices. As with regular I/O, you'll frequently need multiple backup devices to sustain the I/O rates required for backup. SQL Server can stripe its backup to multiple devices, including tape devices, for higher performance. For the same reasons mentioned earlier when I discussed disk devices, a SCSI tape device is typically preferable to an IDE/EIDE device.

NOTE

Not long ago, backup meant tape. Today, with disk space being the least expensive component of the entire system, many sites configure their hardware with additional disk devices purely for backup use because they prefer the extra speed and ease that backup to disk offers over tape backup. Some sites even use hot swappable drives for their backup; they rotate between a few sets of such drives, keeping a set off site in case of disaster.

EAN: 2147483647

Pages: 179