Measuring Performance

To improve the performance of your game, you must be able to measure its performance. Benchmarking is a technique used to measure the performance of specific routines or even the overall performance of an application. In contrast, instead of computing a performance score for a specific task or a performance score for the game, profiling is a technique that tries to gather a lot of information about different tasks so that their performances can be compared with one another. By doing so, a profiler can help identify performance bottlenecks of a game. In the following sections, we will discuss benchmarking and profiling.

Benchmarking

There are two types of benchmarks. Micro-benchmarks are used to measure the performance of a specific part of the application, whereas macro-benchmarks are used to measure the overall performance of an application.

The idea behind micro-benchmarks is straightforward. If you want to measure the amount of time required to run a specific segment of code, you need a time stamp immediately before the segment is executed and another time stamp immediately after it completes executing. Subtracting the former time stamp from the latter one gives you the execution time of the segment. Once you know how long it took to execute the segment, you can modify its implementation and see whether the modified version performs better. Even though writing a micro-benchmark is conceptually straightforward, writing a micro-benchmark that benchmarks what you really want to benchmark is not a trivial task. Compilers such as Javac.exe and dynamic compilers such as HotSpot can perform optimizations that heavily affect the code you are trying to benchmark. The modifications can be significant enough to give you entirely misleading conclusions when you look at the results of the benchmark.

For example, if you want to measure the cost of invoking a method by measuring the time it takes to call an empty method, you may reach the conclusion that it is practically free. This is because the HotSpot may inline the method. When inlining occurs, the invocation will be replaced with the body of the method. In the case of an empty method, the invocation will be removed and nothing will be inserted. This means the only thing your micro-benchmark will measure is the time it takes to execute the method that retrieves the time stamps. This exact example can happen with a loop. If the runtime compiler decides to unroll a loop that does not have any code in its body, the loop may be entirely removed. Keep in mind that as newer VMs become available, more sophisticated optimizations may be performed on your code. In fact, an exact benchmark that you have used in the past may become useless. A runtime optimizing compiler may be sophisticated enough to realize that the result of some expensive computation is not used in the program and decide to remove an entire section of code.

If it were possible to disable specific optimizations when benchmarking an application, then writing benchmarks would be easier. Unfortunately, even though some compilers do provide some general options for controlling the optimizations, not all of them give total control over every single optimization. To write effective micro-benchmarks you should use the result of your computations. Even if you simply print a value computed by a method to the console, a compiler would not be able to remove the method. If you do not want a method to be inlined, pass a parameter to the method and add it to a public local variable in the object.

It is important to note that optimizing compilers love constant values. If it is guaranteed that a value will not be changed, compilers can use the value to perform optimizations such as pre-computing other values, loop unrolling, and dead-code elimination. You should try to use variables instead of constants when appropriate. For example, if you want to benchmark a loop that runs 10 times, you can store the value 10 in a variable and use the variable in the loop condition. The only problem with this approach is that a compiler can detect that a variable was initialized to a constant value but never modified. Therefore, it can still unroll the loop. A better approach is to use Integer.parseInt("10") as the terminating condition of the loop. Even the most sophisticated compilers cannot guarantee that the result of the computation is always the same value.

Keep in mind that an aggressive compiler may still be able to, say, unroll a loop if it really wants to. Recall from a previous section that a compiler can make opportunistic assumptions to perform additional optimization. As long as it keeps track of the assumptions that it makes and is capable of undoing an optimization, it can revert to the original code if an assumption is violated. In other words, the compiler can choose to use an arbitrary value as a constant so that it can perform some optimizations. However, it must be able to detect any changes to the variable so that it can deoptimize or undo the optimizations it made.

Executing a small segment of code may take fractions of a second, so you must make sure your measurements are useful. Note that the System.currentMillies() can have a very low resolution, depending on the underlying platform. If you use it to benchmark a segment that takes fewer than 10 milliseconds to execute, the time may not even be measurable. There are two ways of dealing with this issue. One is to use a timer that has higher resolution. This can work, but a better approach is to repeat the test numerous times and divide the overall time by the number of times the test was run. Repeating a test many times in a single run of the VM is also important because the VM will have the chance to start up and perform appropriate optimizations.

You should always check the latest VM options for interesting features. Options such as -XX:CompileThreshold and -XX:MaxInlineSize can give you a little control over what the HotSpot does. For example, setting CompileThreshold to a very low value ensures that HotSpot does its optimizations almost immediately. MaxInlineSize can be set to a low value to prevent the VM from inlining methods. -XX:+PrintCompilation can be used to find out which parts of the code are dynamically compiled. Note that the X and XX options change often, and you should read through the options of corresponding VM.

Unlike micro-benchmarks, macro-benchmarks try to measure the overall performance of an application with real-world data. They try to see how well an application will run in actual scenarios that the user may encounter. For example, an automated program can fly though the world and collect data to generate an overall score for how well the game ran. Some games test the capability of a machine by running the game with different settings. After performing a few tests, the score computed by the macro-benchmark can determine the highest settings that still allow the game to run well. Macro-benchmarks take much more effort to create, but a good macro-benchmark can be used throughout the development process to see how changes to the game affect the overall performance.

Profiling

The most fundamental step before optimizing a game is finding the performance bottlenecks. Many programmers try to optimize computations that are just not important enough. One can spend days trying to gain fractions of a percent when rather straightforward changes can result in substantial performance increase. Profilers are effective in locating the bottlenecks. Profilers are more accessible and easier to use than you may think. Many commercial and free profilers are available. If you have not used one already, you should definitely try them. Even JDK comes with a powerful profiler called hprof. It collects profiling information during runtime and writes them to an output file. You can open the output file in a text editor and look for specific tags. There are also free tools that read the outputted file to generate visual representation of the data. HPJmeter is one example of such a tool that is both free and well maintained. Even working with the text output is not too difficult. Commercial profilers such as Jprofiler, JProbe, and Optimizeit are valuable tools that are in many ways similar to hprof. They all use the JVM profiler interface (JVMPI) to collect information while a program executes. They also have additional powerful features that make them worth their price tag.

Execution

Here we will look at some general concepts and terminologies important to profiling. These concepts and terms are common to different profilers, even those written for a specific hardware and platform such as the Intel VTune™. hprof enables you to collect information about CPU utilization as well as the state of the Java heap. hprof is a simple profiler agent that provides the base functionality provided by many commercial profilers. In addition, as mentioned earlier, tools such as HPJmeter use the data file outputted by hprof as their input and produce visual representation.

You can get the different possible options of hprof by executing the following command:

Java -Xrunhprof:help

The following lines are the more important option:

Option Name and Value Description Default ---- ---- ---- heap=dump|sites|all heap profiling all cpu=samples|times|old CPU usage off depth=<size> stack trace depth 4 cutoff=<value> output cutoff point 0.0001

The usage of the command-line parameter is as follows:

Java ... -Xrunhprof[:help]|[:<option>=<value>, ...] ApplicationClassName

The following line is a usage example:

Java -Xrunhprof:cpu=samples,depth=10,cutoff=0 MyGame

By default, the output of hprof is written to java.hprof.txt. The output file contains different tags such as TRACE, HEAP DUMP, SITES, CPU SAMPLES, and CPU TIME. Traces are call stack dumps that specify the locations of interest such as the location of where objects are allocated or the location of frequently called methods.

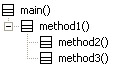

A call graph shows the relationship between different methods. It can be constructed from the traces outputted by hprof. For example, the following traces can be used to construct the call graph shown in Figure 6.1.

TRACE 1: ClassA.method1 ClassA.main TRACE 2: ClassA.method2 ClassA.method1 ClassA.main TRACE 3: ClassA.method3 ClassA.method1 ClassA.main

Figure 6.1: A simple call graph.

The main method is the root of the call graph because method1, method2, and method3 were called indirectly through the main method. Tools such as HPJmeter can easily construct the call graph from these traces. The depth option can be used to specify the depth of the traces saved by hprof. The larger the value, the deeper the traces can be. Note that if the depth is not deep enough, a complete call graph cannot be built. In addition, the cutoff option is used by the profiler to decide whether a trace should be excluded because it is insignificant. It is generally better to set the cutoff=0 so that no traces are left out. By doing so, a complete call graph can be constructed.

There are two common approaches to collecting information about the execution duration of different methods. One way is to actually time each method, and the other is to sample the running program every so often and take a brief peek at which methods are active. Even though measuring the exact execution time of different methods is useful, doing so can result in significant performance problems. To obtain exact times, every single method call has to be intercepted. The overhead can cause a program to perform disappointingly, which can in turn significantly skew the timing information. In contrast, sampling is a nonintrusive and statistical approach to estimating the execution time of different methods. The idea is to sample the running program at specific intervals and record the active methods. An active method is one that corresponds to the topmost frame in a call stack. The longer a method takes to execute and the more often a method is called, the more likely it is to be active when a sample is collected. Note that by doing so, we are not counting the number of times a method is invoked but simply estimating how much time is spent in a method. If enough samples are collected, a relatively accurate model of the program can be created.

hprof supports both the sampling and the timing approach. If you need to know the number of times a method is actually called, you cannot run the profiler in sampling mode. In addition, because the sampling approach finds out only about methods that happened to be active when the samples were taken, the call graph generated from its traces is usually incomplete.

The following lines are the profiling data collected by running the same application using cpu=times and then cpu=samples. Both tests were run for the same amount of time.

CPU TIME (ms) BEGIN (total = 210) rank self accum count trace method 1 23.81% 23.81% 108 2 ClassA.method2 2 14.29% 38.10% 24 1 ClassA.method1 ... CPU SAMPLES BEGIN (total = 4) rank self accum count trace method 1 50.00% 50.00% 2 2 java.util.zip.ZipFile.open 2 25.00% 75.00% 1 5 ClassA.method2 ...

The output tagged with CPU TIME indicates that method2 ranked number one and constituted 23.81 percent of the execution time of the program. It also indicates that method2 was called 108 times. method2 showed up as significant in the sampling approach as well, but of course, the data from the two approaches is not identical because the latter is just an estimation.

As a side note, the time does not have to be actual time units and any abstract ticks can be sufficient to generate relative data. In addition, the runtime of a method is the accumulation of all the ticks spent in the method during all the calls to it.

When it comes to interpreting the timing information, there are two views of timing data, namely exclusive and inclusive times. Exclusive time is the time spent in the actual method without taking into account the amount of time spent in the methods it called. Inclusive time is the time spent in a method along with all the methods it called. Inclusive times are a practical and useful way of associating times with tasks. For example, they can help you realize that updating the AI of a character is taking a considerable amount of time, even though the task consists of many small computations that are not significant by themselves. Inclusive times of the methods, along with a decent call graph, can be used to compute their exclusive times. On the other hand, computing inclusive results from exclusive times is problematic and can yield only estimations. For example, if multiple traces lead to method1, simply accumulating the exclusive time of method1 will cause problems when the traces have the same root. On the other hand, inclusive times are misleading when dealing with recursive methods. Using cpu=times or cpu=samples implicitly uses exclusive times. If you wish to look at inclusive times, you can run the profiler with cpu=old.

JDK comes with another tool that can give you an alternative way of looking at your application. By using the -Xprof, as opposed to -Xrunhprof, you can find out how much time is spent in interpreted versus compiled code. When providing information about the amount of time spent in interpreted code, it also notes how much of the time was spent in native code that was called from interpreted code. Xprof has a section labeled “Stub,” which lists methods that were called through JNI. The interpreted column under that section specifies the amount of work it took before the native method was called, and the native column shows how much time was spent in the native function.

Memory

To solve memory-related problems and bottlenecks, you need to obtain information about the state and activities of the GC. The standard option -verbose:gc can be used to view the activities of the GC. Using -verbose:gc will print information such as the following to the console window. You can use -Xloggc:log.txt to have the GC activities written to a log file.

[GC 16952K->16916K(19640K), 0.0009694 secs] [Full GC 17268K->100K(19640K), 0.0173905 secs]

Each line indicates whether the collection was performed on the young generation. The first line shows that a minor collection was performed, which dropped the amount of memory used from 16952K to 16916K. The number in parentheses shows that the total available memory in heap is 19640K, which is about 2MB. The time at the end of each line indicates how long it took to complete the collection. The second line indicates that a major collection was performed that was able to reclaim reasonable amount of memory and, of course, took longer than the minor collection.

The nonstandard option -XX:+PrintGCDetails provides more detailed information. For example, the following output indicates that both the young and tenured generations were collected:

0.299: [GC 0.299: [DefNew: 145K->5K(384K), 0.0016798 secs]0.300: [Tenured: 366K->221K(1600K), 0.0273660 secs] 511K ->221K(1984K),0.0312235 secs]

Note that the numbers in parentheses indicate the available heap for each generation. As mentioned earlier, the size of each generation may change when a collection occurs. If you want to fix the size of the generations, you can use Xms, Xmx, NewSize, MaxNewSize, PermSize, and MaxPermSize.

Programs such as HPjtune can read the activities of the GC and generate diagrams. They can also compute useful statistics such as heap usage, duration, cumulative allocation, and creation rate diagrams. In addition, they can point out the times at which the entire heap was collected. When minor and major collections have the same time stamps, it is an indication that the entire heap was collected.

To collect information about the content of the heap, you can use hprof and specify a value for its heap option. The heap=sites option allows you to collect information about the total count and type of allocated objects, live objects, and their site. This option can help you find out if a suspicious amount of objects of certain types are being created.

The parameter heap=dump can be used to obtain a full dump of the heap. Pressing Ctrl + Break in the console window will signal hprof to write a memory dump. By default, a heap dump is performed before the VM exits. A heap dump can be used to find out what objects are in the heap at specific times. For example, after your game has loaded, you can perform a heap dump to find out whether temporary load objects are kept around by mistake. In such scenarios, you must make sure that references to unwanted objects are set to null, or the references fall out of scope.

EAN: 2147483647

Pages: 171