Practice 6. Leverage Test Automation Appropriately

| Ted Rivera and Scott Will

Investing in test automation that is appropriate for your organization and project can bring significant quality improvements and time savings.

Problem

Pressure to get a product out the door quickly and never-ending staffing shortfalls are twin demons that torment the production of much software today.[20] These two problems have led many teams to consider automating aspects of their testing to help increase productivity and improve quality. The problem, though, is that test automation, if not approached thoughtfully, can actually be detrimental to both productivity and quality. Teams can wind up investing too much time in automation, or cobble something together that doesn't really help find the types of defects that test automation is good at finding. In short, too much or too little test automation can have potentially dramatic and negative implications. Furthermore, the initial investment is not always well understood, ongoing maintenance of an automation test suite is often not considered, and the long-term effectiveness of automated tests can wane over time as the code becomes "inoculated" against the specific tests being run.

This practice addresses some of the different types of test automation available to independent testers and test teams, suggests ways to think through an approach to test automation, and discusses some of the potential pitfalls to be avoided.

Background

In his landmark work, The Mythical Man-Month, Fred Brooks long ago opined that there was no such thing as a silver bullet in software development, and it would be difficult to counter this assertion.[21] Although there have been numerous advances and refinements in software engineering, none of these has produced new standards of efficiency or quality, although many cherish this expectation, or at least hope, for test automation. And while some still search for that elusive silver bullet, others have sought more modest incremental improvements in order to reduce the effects of the ubiquitous schedule and staffing pressures while still increasing the overall quality of their products. The people with these more measured expectations are most likely to recognize that they can make real progress by deploying appropriate test automation.

The test profession is older, and testers are more experienced now, than when the concept of test automation first emerged.[22] Initially, it was quite common to hear that test cycles would soon be reduced from months to a matter of days, or even that test teams could be eliminated altogether, all on account of the exaggerated predictions regarding test automation.

Test automation in its various forms (for example, code unit tests, user interface tests, acceptance tests, and so on) does hold out many promises to the teams that choose to pursue it; except in the simplest environments, however, automation is not a quick fix. Nevertheless, like urban legends, test automation myths die hard. Let's consider a few:

On the other hand, test automation will allow you to achieve ends that would otherwise be impossible. For example:

With these comments in mind, it is our aim to provide you with a perspective that will help you succeed in implementing effective test automation and integrating automation into your larger test efforts. You can think of effective test automation as similar to regular maintenance on your car. Regular oil changes, tire rotations, belt adjustments, and the like ensure your vehicle's consistent long-term performance, so that it will run smoothly on your most important days, such as when you're driving to that critical job interview or to your daughter's wedding. In the same way, test automation is not glamorous, but it can ensure that the worst problems you might otherwise encounter are averted in the mad rush to get your product out the door (see Figure 3.2).

Figure 3.2. Appropriate Test Automation, like Car Maintenance, Is Not Glamorous, But Essential.

Appropriate test automation will keep things running smoothly and help you find and prevent problems before they result in a disaster.

Applying the Practice

Note the key word in the title of this chapter: our aim is not simply test automation but test automation done appropriately. And just what constitutes appropriate test automation? Every team is going to answer that question differently.

Understand What Is Appropriate for You

Appropriate test automation is not a one-size-fits-all effort. What is ideal for one team is rarely ideal for another. To begin with, what is appropriate for you depends on the goals established for your project.

What is appropriate also depends on the skill level of your team:

Finally, what is appropriate depends on items that are unique to your organization:

These lists are by no means exhaustive; the best approach in formulating a plan for test automation for your team or project is to consider not only these questions but others as well. The following sections are intended to provide a useful starting point: gather your team together and brainstorm where you need to get to with automation, relative to where you are now. If you can understand, agree on, and prioritize the automation you need, you will stand a fighting chance of obtaining the support you need to accomplish your goal.

What Should You Automate?

The areas in which test automation is most beneficial fall into four broad categories:

Note: Generally, "environmental" elements and "regression" are the most important aspects of test automation, because of the relative time savings a test team can realize. There may be exceptions (for example, in instances where extensive performance testing is required), but this is a fair heuristic to keep in mind.

Environmental Automation

Surprisingly, environmental automation is often overlooked, and yet it can be one of the most helpful forms of automation to test teams. By environmental we mean the ability to provision machines quickly for use by the test team. For example:

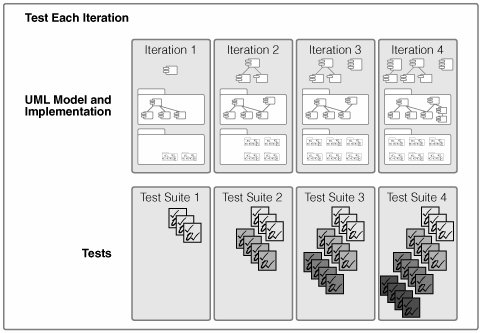

Think about how many times you need to "scrape a machine down to bare metal" in your testing and reinstall everything from the ground up just to make sure that someone else didn't leave anything hanging around that may adversely affect your testing. In some instances it could easily take an entire day (or longer!) for one person to get one machine ready to be used for testing. You can easily regain the significant amount of time wasted in such an effort by automating the provisioning of the test machines. The ability to lay down a specific version of an OS quickly, with all fix-packs and security fixes, as well as all product prerequisites, should be one of the first items you look at when automating. Additionally, when such a capability is created, others can potentially pick it up, thus multiplying its benefit to the organization. Still further, the benefits of environmental automation are greatest when you want to test a product in many different environments.

Automating Regression Testing

Regression testing is no more complicated a notion than running tests more than once. Automating regression testing means building a suite of tests that can be used, and reused, as needed. This becomes especially important when doing iterative development. Figure 3.3 shows how automation is incorporated when using an iterative approach.

Figure 3.3. Test Automation and Iterative Development.

Note that the test suite created to test the first iteration is still being used as part of the "Iteration 2" test suite. Hopefully, you can see why automating the first test suite would be beneficial: it will be run again during testing of the second, third, and fourth iterations. And the reason it is being run during each of these iterations is to ensure that code added during later iterations does not break anything that was previously working; hence the term regression. Note that the same holds true for those tests created specifically for code added during the second iteration: they will be executed again during the third and fourth iterations, and the tests will be run the same way each time. Consistency is key when dealing with regression testing, and it is also one of the strengths of automated testing. If tests are not run the same way each time, how do you know that a defect has not been introduced between the last run and now?

The implications for testing are rarely considered as an organization begins to (rightly) adopt an iterative approach to development: they are too often an afterthought, or a worry. The reality of testing in an organization that employs true iterative development is that automation is less an option than a necessity: it is often physically impossible to test each build at each iteration manually with any degree of confidence, and test teams are consequently forced to "do the best they can." With an appropriate focus on automating a regression test suite, genuine forward progress can be maintained.
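The cumulative suite described above can be sketched in a few lines. This is only an illustration using Python's standard `unittest` module; the functions `add` and `multiply`, the test classes, and the `TESTS_BY_ITERATION` table are hypothetical stand-ins for real product code and real tests, not anything prescribed by this practice.

```python
import unittest

# Hypothetical product code, standing in for features delivered
# in successive iterations.
def add(a, b):
    return a + b

def multiply(a, b):
    return a * b

class Iteration1Tests(unittest.TestCase):
    """Tests written for functionality delivered in iteration 1."""
    def test_add(self):
        self.assertEqual(add(2, 3), 5)

class Iteration2Tests(unittest.TestCase):
    """Tests written for functionality added in iteration 2."""
    def test_multiply(self):
        self.assertEqual(multiply(2, 3), 6)

# Tests grouped by the iteration that introduced them (assumed layout).
TESTS_BY_ITERATION = {1: [Iteration1Tests], 2: [Iteration2Tests]}

def regression_suite(current_iteration):
    """Build the suite for an iteration: its own tests plus all earlier ones."""
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for iteration in sorted(TESTS_BY_ITERATION):
        if iteration <= current_iteration:
            for case in TESTS_BY_ITERATION[iteration]:
                suite.addTests(loader.loadTestsFromTestCase(case))
    return suite

def run_regression(current_iteration):
    """Run the cumulative suite the same way every time."""
    runner = unittest.TextTestRunner(verbosity=0)
    return runner.run(regression_suite(current_iteration))
```

Calling `run_regression(2)` reruns the iteration 1 tests alongside the iteration 2 tests, which is the consistency the text asks for: earlier tests are never dropped, and they are executed identically on every run.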

One way to not only maintain, but also accelerate, forward progress is to work closely with the development team in creating test automation. Often, developers will produce "test stubs" in order to do some testing on their code. With some forethought and planning, these test stubs can be designed to become the "seeds" from which testers can grow automated test suites. For those teams venturing into "test-driven development," this scenario is practically guaranteed.[24]
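As a rough illustration of a developer's stub becoming a seed, consider the sketch below. Everything in it is hypothetical: `normalize_username` stands in for code under development, `developer_stub` for the developer's quick check, and the `CASES` table for what a tester might grow from it.

```python
import unittest

# Hypothetical function under development.
def normalize_username(raw):
    """Trim surrounding whitespace and lowercase a user name."""
    return raw.strip().lower()

# The developer's original "test stub": one quick, throwaway check.
def developer_stub():
    assert normalize_username("  Alice ") == "alice"

# The tester keeps the developer's case as the seed and grows a
# table of inputs and expected outputs around it.
CASES = [
    ("  Alice ", "alice"),  # the developer's original case
    ("BOB", "bob"),
    ("carol", "carol"),
    ("\tDave\n", "dave"),
]

class NormalizeUsernameTests(unittest.TestCase):
    def test_cases(self):
        for raw, expected in CASES:
            # subTest reports each failing row separately.
            with self.subTest(raw=raw):
                self.assertEqual(normalize_username(raw), expected)
```

The design point is that the stub is written so it can be reused: once the check lives in a table, adding a regression case for a newly found defect is a one-line change.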

In sum, regression testing provides an assurance that regression errors have not been introduced, or at least helps ensure that any errors introduced will be caught. As such, it is especially important to the overall success of an iterative development approach. Additionally, regression testing is useful during ongoing product maintenance, and for the same reasons: should a customer find a defect that requires a fix to be coded and then sent to that customer, the ability to execute a regression test suite prior to releasing the fix can help ensure that the fix will not break something else, thus providing greater assurance that the customer will not be further inconvenienced. Therefore, despite the real danger that your automation suite's apparent effectiveness will wane after the initial inoculation it provides, isn't this a better scenario than falling victim to the "disease" of defects? Still better, though, by maintaining your test automation suite you will continually find new defects and improve overall quality.

Note: To reiterate, environmental and regression test automation are generally the most important areas of automation to address, because they typically provide the most significant time and labor savings and the greatest overall likelihood of improving product quality.

Automating User-Interface Testing

The next area that you should consider a good candidate for automation is the testing of the GUI code. It used to be that automated GUI code testing was nothing more than simple "record and play," which proved to be quite cumbersome in spite of the really cool demonstrations that accompanied the product "glossies." As soon as a programmer changed the GUI in any way (and this is the reality of the software development process), any previously recorded session became useless, and automation had to be started all over again. Add to this the fact that not much forethought was given to providing a structured approach to what was first "recorded" and then "played back," and test teams often found themselves with a mess on their hands.

Newer record-and-play tools, however, have overcome many of the initial shortcomings of this approach. While these tools are certainly the easiest way to start using automated GUI testing, tools that provide a programmatic interface allow for much more complex GUI testing. Being able to test each and every menu option, click on every button, and fill in every text entry field, all in much less time than it would take to do manually, is a great boon to independent testers and test teams. However, since you need a different set of skills to use programmatic interface tools than to operate the simple record-and-play tools, you should remember that appropriate GUI testing for your team depends extensively on the project goals, the type of product being developed, and the skill set of your test team.

The upside to user-interface testing is that it will help catch those defects that a customer will see, too often prominently displayed in living color. One of the potential downsides is that, because most of the code written for your project is likely not user-interface code, automating user-interface tests may not be the highest priority; unit test tools are arguably easier to use and potentially provide a more easily demonstrable payback. As such, you should resist the urge to jump into automated GUI testing because it appears to be the easiest or most visible way to get started; if you choose to do so, make sure that it is part of an overall, well-conceived test automation plan.

Automating Stress and Performance Testing

One final area to consider is automating the stress and performance testing of the product.[25] In the traditional waterfall approach to software development, such testing usually cannot be accomplished until fairly late in the product development lifecycle. With many newer, iterative development approaches, however, it is easier to perform stress and performance testing earlier in the lifecycle. For example, RUP suggests carrying out the first stress and performance testing during the elaboration phase, which focuses on getting the product architecture right. The ability to accomplish early testing here allows the project team to validate that the architecture is capable of supporting the specific performance requirements, thus mitigating risk. In later phases these automated tests are rerun to ensure that product performance and capabilities have not regressed in any way.
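To make this concrete, here is a minimal sketch of what automated stress and performance checks might look like. It assumes a hypothetical `sample_test` function that returns `True` on success; the helper names and the 20 percent tolerance are illustrative choices, not values taken from RUP or from this practice. Threads are used only as a simple stand-in for simultaneous users; a real load test would normally drive the product from separate processes or machines.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def sample_test():
    """Hypothetical automated test; returns True when it passes."""
    return sum(range(1000)) == 499500

def run_repeatedly(test, times):
    """Run one test over and over; return the number of failures."""
    return sum(1 for _ in range(times) if not test())

def run_concurrently(test, instances):
    """Start many instances of a test at once; return the number of failures."""
    with ThreadPoolExecutor(max_workers=instances) as pool:
        futures = [pool.submit(test) for _ in range(instances)]
        return sum(1 for future in futures if not future.result())

def timed(test):
    """Instrument a test: return (passed, elapsed_seconds)."""
    start = time.perf_counter()
    passed = test()
    return passed, time.perf_counter() - start

def has_regressed(elapsed, baseline, tolerance=0.20):
    """True if a run is more than `tolerance` slower than the recorded baseline."""
    return elapsed > baseline * (1 + tolerance)
```

A later phase could rerun `timed(sample_test)` and pass the elapsed time, together with the figure recorded in an earlier phase, to `has_regressed` to flag a slowdown automatically.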

Stress testing is typically accomplished in two primary ways: (1) by running one test repeatedly and (2) by executing numerous instances of a given test at the same time. In the first situation, wrapping a single automated test in a script that loops is quite simple, but make sure that if you do so, it is because that is the way the product is going to be used; do not waste cycles automating this just because it is easy to do. In the second situation, you're simulating something like a number of users all logging onto a server at the same time, for example, when a bank branch office opens and all the bank tellers log on to the bank's main computer.

In performance testing, test cases can be "instrumented" to measure how long a given test takes to complete. Results can be recorded and then compared with previous results to see if the product performance is improving or declining. The ability to run performance tests repeatedly also provides the basis for creating capacity planning models, which can then be used to help customers understand the hardware required to achieve their desired performance levels.

Now that you are (hopefully) excited about the different possibilities that exist for test automation, it's time to look at one last issue you need to think through thoroughly before jumping in with both feet.

Understanding the Costs of Test Automation

Many highly technical, intelligent engineers, and even many managers of technical engineers, fail to grasp the importance of finances in relation to the work they do. This problem is sometimes less critical in small shops, where there is a more obvious and immediate correlation between how efficiently the work is done and whether or not the shop remains in business. But whenever we as engineers or technical managers fail to connect the work we do with the bottom line, we run the very real risk of seeing all our hard work come to nothing. This is one reason why, for example, agile modeling emphasizes the principle "maximize shareholder investment." Automation projects are often scrutinized more closely than other projects for their return on investment, in large measure because of the inherent expectation that they will lead to demonstrable financial savings.

In any moderate to large automation effort, automation must be conceived of as similar to a development project. An automation blueprint (or design) must be developed. Prioritization of feature content, sizings, planning, staffing, and all the normal elements of software development should be considered. While it may be true that software used for internal purposes need not have the rigor of software produced for clients, an appropriate level of forethought and planning is still required if the results of test automation are to be effective. If, as a tester, you complain about the approach your partners take to development, it may be that you can use your test automation project to show a better way.

Perhaps the biggest cost in test automation, and one that is habitually overlooked, concerns the upkeep of automated tests. Any product design change will have a ripple effect on the corresponding automation test suite. To ensure continued usage of the test suites after product changes have taken place, someone has to update the automated tests that have been written. Sometimes the updates will be trivial and require only minimal effort to complete, whereas other times a significant design change can demand an equally significant change to the automated test suite. The extra work involved in the upkeep of the automated test suite must be recognized and planned for throughout the history of a product. When you build a schedule for testing that does not include time for automation and maintenance, you are accountable for your automation suite's decline.[26]
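One simple way to reason about these costs is a break-even estimate: how many test runs it takes before the hours saved per run repay the hours spent building the suite, once upkeep is counted. The sketch below is an assumption-laden simplification, not a model from this practice; the parameter names and the example figures are hypothetical and should be replaced with your own team's measurements.

```python
import math

def break_even_runs(build_hours, manual_hours_per_run,
                    automated_hours_per_run, upkeep_hours_per_run):
    """Return the number of runs needed to repay the automation investment.

    Returns None when automation never pays back, that is, when running
    and maintaining the automated suite costs at least as much per run
    as testing manually.
    """
    saving_per_run = (manual_hours_per_run
                      - automated_hours_per_run
                      - upkeep_hours_per_run)
    if saving_per_run <= 0:
        return None
    return math.ceil(build_hours / saving_per_run)

# Hypothetical figures: 120 hours to build the suite, 8 hours per manual
# pass, 0.5 hours per automated pass, 1.5 hours of upkeep per pass.
print(break_even_runs(120, 8, 0.5, 1.5))  # 20
```

Note how sensitive the result is to upkeep: with the same hypothetical figures but 7.5 hours of maintenance per run, the saving per run drops to zero and the function returns `None`, which is the suite decline the text warns about expressed as arithmetic.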

In spite of the costs, investing in test automation that is appropriate for your organization and project can be very beneficial, in terms of both time savings and quality improvements. Begin by acquiring experience, skills, and some initial "success stories" and then build on those from there; you'll be glad you did. To return to our car maintenance analogy, it is often easier to understand the cost of neglected car maintenance that results in tangible repair bills than to grasp the return on investment from routine maintenance that prevents problems in the first place. So also, with test automation, the return on investment is often undervalued. Actively communicate the value you are realizing from your investment in test automation.

Other Methods

Aside from simply not testing at all, fundamentally, the only other option is to do testing manually. Even though there are significant benefits to appropriate levels of test automation, manual testing is still quite widespread. Manual testing does allow for certain levels of creativity and can be quite effective when the tester has a good intuitive feel for the product being tested, and that intuitive feel is not something that can be replicated by an automated test suite. However, adding test automation does overcome some of the many possible pitfalls of manual testing, notably:

With these potential manual testing shortcomings in mind, it is easy to see why automated testing should be a regular part of your overall software testing strategy. In XP, automated testing is mandatory. You simply can't perform test-driven development without automated tests, because rerunning the tests would just not be feasible. Automated testing is also a cornerstone of the Unified Process, since you cannot effectively grow a system iteratively without some form of automated regression testing. The Unified Process includes test-driven development as one recommended development approach, and goes beyond automated testing to include guidance on other forms of testing, such as exploratory testing and usability testing.

Levels of Adoption

This practice can be adopted at three different levels:

Related Practices

Additional Information

Information in the Unified Process

OpenUP/Basic assumes that the basic development environment and tools already exist. Some level of test automation is assumed, in particular to support smoke tests and regression tests to ensure that builds remain stable. RUP adds guidance on how to set up a test automation environment, from basic environmental automation to regression test suites. RUP also adds guidance on how to test larger systems of systems, which may include many levels and types of testing. Detailed guidance for specialized testing, such as globalization testing, performance testing, database testing, user interface testing, and so on, is covered by RUP and RUP plug-ins. RUP also includes guidance for using specific testing tools to perform automated testing.

Additional Reading

For books, articles, and online sources referred to in this practice or related to it, consider the following:

|
