Environment Mapping

In the real world, the appearance of many objects is affected not only by the lights around them but also by other objects. Mirrors are an obvious example, and metal and other highly reflective surfaces also fall into this category. One way to generate this effect in Direct3D is with environment maps. These are special texture maps that are applied to primitives in such a way as to indicate a reflection of the surrounding scene.

Spherical Environment Maps

With a spherical environment map, a single texture is used to represent the world surrounding an object projected onto a sphere. (Think of the rendered object as being contained in a bubble.) You can also think of each texel as a representation of what you see when looking out from the object in a particular direction. Before the object is rendered, the texture coordinates of the object's vertices need to be adjusted such that the direction in which the vertex normal is pointing maps to the appropriate part of the environment map.

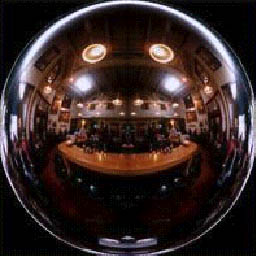

The DirectX SDK has a sample named Sphere Map that demonstrates this technique. It uses the image shown in Figure 11-6 as its sphere map.

Figure 11-6 Sphere map

Before the shiny object is rendered (a teapot in the Sphere Map sample), the texture coordinates of each vertex are computed as a function of the vertex's normal. Note that the normals first need to be transformed from local space into camera space.

    //-------------------------------------------------------------------
    // Name: ApplySphereMapToObject
    // Desc: Uses the current orientation of the vertices to calculate
    //       the object's sphere-mapped texture coordinates
    //-------------------------------------------------------------------
    HRESULT ApplySphereMapToObject( LPDIRECT3DDEVICE3 pd3dDevice,
                                    D3DVERTEX* pvVertices,
                                    DWORD dwNumVertices )
    {
        // Get the current world-view matrix.
        D3DMATRIX matWorld, matView, matWV;
        pd3dDevice->GetTransform( D3DTRANSFORMSTATE_VIEW, &matView );
        pd3dDevice->GetTransform( D3DTRANSFORMSTATE_WORLD, &matWorld );
        D3DMath_MatrixMultiply( matWV, matView, matWorld );

        // Extract world-view matrix elements for speed.
        FLOAT m11 = matWV._11, m21 = matWV._21, m31 = matWV._31;
        FLOAT m12 = matWV._12, m22 = matWV._22, m32 = matWV._32;
        FLOAT m13 = matWV._13, m23 = matWV._23, m33 = matWV._33;

        // Loop through the vertices, transforming each one and
        // calculating the correct texture coordinates. The counter is a
        // DWORD so that it cannot wrap around when dwNumVertices
        // exceeds 65,535.
        for( DWORD i = 0; i < dwNumVertices; i++ )
        {
            FLOAT nx = pvVertices[i].nx;
            FLOAT ny = pvVertices[i].ny;
            FLOAT nz = pvVertices[i].nz;

            // Check the z-component, to skip any vertices that face
            // backward.
            if( nx*m13 + ny*m23 + nz*m33 > 0.0f )
                continue;

            // Assign the sphere map's texture coordinates.
            pvVertices[i].tu = 0.5f * ( 1.0f + ( nx*m11 + ny*m21 + nz*m31 ) );
            pvVertices[i].tv = 0.5f * ( 1.0f - ( nx*m12 + ny*m22 + nz*m32 ) );
        }

        return S_OK;
    }
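The texture-coordinate formula used in ApplySphereMapToObject can be looked at in isolation. The following is a minimal sketch, not code from the SDK sample: the `Vec3` and `SphereUV` types and the `SphereMapUV` function are hypothetical names introduced here, and the sketch assumes the normal has already been transformed into camera space and has unit length.

```cpp
// Hypothetical helper types for illustration; not part of the Direct3D SDK.
struct Vec3     { float x, y, z; };
struct SphereUV { float u, v; };

// Maps a unit-length, camera-space normal to sphere-map texture
// coordinates with the same arithmetic as ApplySphereMapToObject:
// x in [-1, 1] is remapped to u in [0, 1], and y is negated before
// remapping because v increases toward the bottom of the texture.
SphereUV SphereMapUV( const Vec3& n )
{
    SphereUV uv;
    uv.u = 0.5f * ( 1.0f + n.x );
    uv.v = 0.5f * ( 1.0f - n.y );
    return uv;
}
```

With this mapping, a normal that points straight back at the camera, (0, 0, -1), lands in the center of the map at (0.5, 0.5), and a normal that points straight up, (0, 1, 0), lands on the top edge at (0.5, 0.0).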

Because the sphere map texture is a highly distorted view of the world, Direct3D can't easily generate it in real time. You'll need to generate this texture before running the program. This means that with spherical environment mapping, the view being reflected is completely static. Whether the user notices this depends on how discernible the reflected scene is.

Cubic Environment Maps

Sphere maps can create a believable reflection effect in many cases, but they aren't perfect. As mentioned earlier, one problem is that they aren't easy to generate in real time. They also have limited resolution, especially near the edges of the map. And if the camera looks in a different direction, the sphere map might not have all the reflection information that is needed. To remedy some of these problems, Direct3D also supports cube maps. With cube maps, the reflection is encoded in a conceptual cube around the object rather than a sphere. Whereas a sphere map compresses its conceptual sphere onto a single texture, a cube map is represented as a group of textures—one per face of the cube.

The EnvCube sample in the DirectX SDK illustrates how to use cube maps. This sample loads a cube containing some textures, along with some other objects that animate and will show up in the cube maps. It updates the cube map every frame in the RenderEnvMap function by rendering the scene from multiple camera orientations and storing the results in the appropriate faces of the cube map.

    //-------------------------------------------------------------------
    // Name: RenderEnvMap
    // Desc: Renders to the device-dependent environment map
    //-------------------------------------------------------------------
    HRESULT CMyD3DApplication::RenderEnvMap( EnvMapContainer* pEnvMap )
    {
        // Check parameters.
        if( NULL==pEnvMap )
            return E_INVALIDARG;

        D3DVIEWPORT7 ViewDataSave;
        D3DMATRIX matWorldSave, matViewSave, matProjSave;
        DWORD dwRSAntiAlias;

        // Save render states of the device.
        m_pd3dDevice->GetViewport( &ViewDataSave );
        m_pd3dDevice->GetTransform( D3DTRANSFORMSTATE_WORLD, &matWorldSave );
        m_pd3dDevice->GetTransform( D3DTRANSFORMSTATE_VIEW, &matViewSave );
        m_pd3dDevice->GetTransform( D3DTRANSFORMSTATE_PROJECTION, &matProjSave );
        m_pd3dDevice->GetRenderState( D3DRENDERSTATE_ANTIALIAS, &dwRSAntiAlias );

        // Set up a viewport for rendering into the envmap.
        D3DVIEWPORT7 ViewData;
        m_pd3dDevice->GetViewport( &ViewData );
        ViewData.dwWidth  = pEnvMap->dwWidth;
        ViewData.dwHeight = pEnvMap->dwHeight;

        // Because the environment maps are small, antialias when
        // rendering to them.
        m_pd3dDevice->SetRenderState( D3DRENDERSTATE_ANTIALIAS,
                                      D3DANTIALIAS_SORTINDEPENDENT );

        // Render to the six faces of the cube map.
        for( DWORD i=0; i<6; i++ )
        {
            ChangeRenderTarget( pEnvMap->pddsSurface[i] );
            m_pd3dDevice->SetViewport( &ViewData );

            // Standard view that will be overridden below
            D3DVECTOR vEnvEyePt = D3DVECTOR( 0.0f, 0.0f, 0.0f );
            D3DVECTOR vLookatPt, vUpVec;

            switch( i )
            {
                case 0: // pos X
                    vLookatPt = D3DVECTOR( 1.0f, 0.0f, 0.0f );
                    vUpVec    = D3DVECTOR( 0.0f, 1.0f, 0.0f );
                    break;
                case 1: // neg X
                    vLookatPt = D3DVECTOR(-1.0f, 0.0f, 0.0f );
                    vUpVec    = D3DVECTOR( 0.0f, 1.0f, 0.0f );
                    break;
                case 2: // pos Y
                    vLookatPt = D3DVECTOR( 0.0f, 1.0f, 0.0f );
                    vUpVec    = D3DVECTOR( 0.0f, 0.0f,-1.0f );
                    break;
                case 3: // neg Y
                    vLookatPt = D3DVECTOR( 0.0f,-1.0f, 0.0f );
                    vUpVec    = D3DVECTOR( 0.0f, 0.0f, 1.0f );
                    break;
                case 4: // pos Z
                    vLookatPt = D3DVECTOR( 0.0f, 0.0f, 1.0f );
                    vUpVec    = D3DVECTOR( 0.0f, 1.0f, 0.0f );
                    break;
                case 5: // neg Z
                    vLookatPt = D3DVECTOR( 0.0f, 0.0f,-1.0f );
                    vUpVec    = D3DVECTOR( 0.0f, 1.0f, 0.0f );
                    break;
            }

            D3DMATRIX matWorld, matView, matProj;
            D3DUtil_SetIdentityMatrix( matWorld );
            D3DUtil_SetViewMatrix( matView, vEnvEyePt, vLookatPt, vUpVec );
            D3DUtil_SetProjectionMatrix( matProj, g_PI/2, 1.0f, 0.5f, 1000.0f );

            // Set the transforms for this view.
            m_pd3dDevice->SetTransform( D3DTRANSFORMSTATE_WORLD, &matWorld );
            m_pd3dDevice->SetTransform( D3DTRANSFORMSTATE_VIEW, &matView );
            m_pd3dDevice->SetTransform( D3DTRANSFORMSTATE_PROJECTION, &matProj );

            // Clear the z-buffer.
            m_pd3dDevice->Clear( 0, NULL, D3DCLEAR_ZBUFFER, 0x000000ff, 1.0f, 0L );

            // Begin the scene.
            if( SUCCEEDED( m_pd3dDevice->BeginScene() ) )
            {
                if( m_pFileObject1 )
                    m_pFileObject1->Render( m_pd3dDevice );
                if( m_pFileObject2 )
                    m_pFileObject2->Render( m_pd3dDevice );
                if( m_pEnvCubeObject )
                    m_pEnvCubeObject->Render( m_pd3dDevice );

                // End the scene.
                m_pd3dDevice->EndScene();
            }
        }

        // Restore the original render target and device state.
        ChangeRenderTarget( m_pddsRenderTarget );
        m_pd3dDevice->SetViewport( &ViewDataSave );
        m_pd3dDevice->SetTransform( D3DTRANSFORMSTATE_WORLD, &matWorldSave );
        m_pd3dDevice->SetTransform( D3DTRANSFORMSTATE_VIEW, &matViewSave );
        m_pd3dDevice->SetTransform( D3DTRANSFORMSTATE_PROJECTION, &matProjSave );
        m_pd3dDevice->SetRenderState( D3DRENDERSTATE_ANTIALIAS, dwRSAntiAlias );

        return S_OK;
    }

The shiny object to be rendered, a sphere, is created using special texture coordinates that have three dimensions instead of the usual two. The ReflectNormals function reflects the eye's vector off the surface's normal, and the resulting reflection vector is stored in the texture coordinates.

    //-------------------------------------------------------------------
    // Name: ReflectNormals
    //-------------------------------------------------------------------
    VOID CMyD3DApplication::ReflectNormals( SPHVERTEX* pVIn, LONG cV )
    {
        for( LONG i = 0; i < cV; i++ )
        {
            // Eye vector (doesn't need to be normalized)
            FLOAT fENX = m_vEyePt.x - pVIn->v.x;
            FLOAT fENY = m_vEyePt.y - pVIn->v.y;
            FLOAT fENZ = m_vEyePt.z - pVIn->v.z;

            FLOAT fNDotE = pVIn->v.nx*fENX + pVIn->v.ny*fENY + pVIn->v.nz*fENZ;
            FLOAT fNDotN = pVIn->v.nx*pVIn->v.nx + pVIn->v.ny*pVIn->v.ny +
                           pVIn->v.nz*pVIn->v.nz;
            fNDotE *= 2.0F;

            // Reflected vector, stored as the three texture coordinates.
            // The third component goes into the nz member of SPHVERTEX.
            pVIn->v.tu = pVIn->v.nx*fNDotE - fENX*fNDotN;
            pVIn->v.tv = pVIn->v.ny*fNDotE - fENY*fNDotN;
            pVIn->nz   = pVIn->v.nz*fNDotE - fENZ*fNDotN;

            pVIn++;
        }
    }
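The arithmetic in ReflectNormals amounts to the mirror-reflection formula R = 2(N·E)N − (N·N)E, where N is the vertex normal and E is the vector from the vertex to the eye. Scaling E by N·N is what lets the code skip normalizing N; the result points in the reflected direction but is not unit length. The following standalone sketch restates that formula outside the sample; the `Vec3` type and the `Dot` and `Reflect` functions are hypothetical names introduced only for illustration.

```cpp
// Hypothetical helper type for illustration; not part of the Direct3D SDK.
struct Vec3 { float x, y, z; };

float Dot( const Vec3& a, const Vec3& b )
{
    return a.x*b.x + a.y*b.y + a.z*b.z;
}

// Reflects the vertex-to-eye vector e about the normal n using
// R = 2(N.E)N - (N.N)E. Neither input needs to be normalized; the
// result has the reflected direction but an arbitrary length.
Vec3 Reflect( const Vec3& n, const Vec3& e )
{
    float fTwoNDotE = 2.0f * Dot( n, e );
    float fNDotN    = Dot( n, n );
    return Vec3{ n.x*fTwoNDotE - e.x*fNDotN,
                 n.y*fTwoNDotE - e.y*fNDotN,
                 n.z*fTwoNDotE - e.z*fNDotN };
}
```

For example, with a normal of (0, 1, 0) and an eye vector of (1, 1, 0), the result is (-1, 1, 0): the component along the normal is kept and the component in the surface plane is flipped.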

When the scene is rendered, the nonshiny objects are rendered normally. Then before the sphere is rendered, the cube-map texture is selected. When the sphere is rendered, the flag D3DFVF_TEXCOORDSIZE3(0) is passed to DrawPrimitive, indicating that the texture coordinates have three components. Direct3D does the work of translating the reflection vector into the appropriate texture coordinates on the cube map. The resulting sphere is nice and shiny!
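To give a feel for how a three-component coordinate can address a cube map, a lookup of this kind generally starts by picking the face whose axis has the largest absolute component in the reflection vector. The sketch below shows only that face-selection step and is an illustration of the general idea, not Direct3D's actual implementation: the `CubeFace` enumeration and `SelectCubeFace` function are hypothetical names, the face order simply mirrors the order used in RenderEnvMap, and the handling of ties and the coordinates within the chosen face are left out.

```cpp
#include <cmath>

// Hypothetical face indices, in the same order RenderEnvMap renders them.
enum CubeFace
{
    FACE_POS_X = 0, FACE_NEG_X = 1,
    FACE_POS_Y = 2, FACE_NEG_Y = 3,
    FACE_POS_Z = 4, FACE_NEG_Z = 5
};

// Picks the cube face that a direction vector points through by finding
// the component with the largest magnitude and checking its sign.
// Ties are resolved in favor of X, then Y (an arbitrary choice here).
CubeFace SelectCubeFace( float rx, float ry, float rz )
{
    float ax = std::fabs( rx );
    float ay = std::fabs( ry );
    float az = std::fabs( rz );

    if( ax >= ay && ax >= az )
        return ( rx >= 0.0f ) ? FACE_POS_X : FACE_NEG_X;
    if( ay >= az )
        return ( ry >= 0.0f ) ? FACE_POS_Y : FACE_NEG_Y;
    return ( rz >= 0.0f ) ? FACE_POS_Z : FACE_NEG_Z;
}
```

Because only the direction matters for this step, the reflection vector does not have to be normalized first, which is consistent with ReflectNormals storing an unnormalized vector.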

Unlike sphere maps, cube maps require support from the Direct3D device to work. Hardware support for cube maps is growing but is not yet universal. So if the Direct3D device doesn't report the D3DPTEXTURECAPS_CUBEMAP flag, you should use sphere mapping or no environment mapping at all. Keep in mind that in many cases, the accuracy of the environment mapping is lost in the fast-moving action of the game. So take advantage of high-quality environment mapping when you can, but note that even mathematically inaccurate reflections can often fool the eye.
