No Single Point of Failure

We can further describe the architecture of the enterprise cluster by discussing a basic requirement of any mission-critical system: it must have no single point of failure.

An enterprise cluster should always have the following characteristic: Any computer within the cluster, or any computer the cluster depends upon for normal operation, can be rebooted without rebooting the entire cluster.

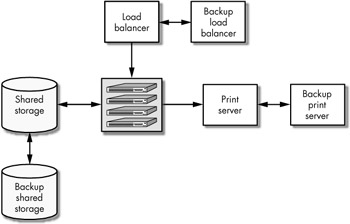

One way to make every server the cluster depends upon safe to reboot is to build a high-availability server pair for each of them. Our simplified cluster drawing could then be redrawn as shown in Figure 3.

Figure 3: Enterprise cluster with no single point of failure

This figure shows two load balancers, two print servers, and two shared storage devices servicing four cluster nodes.
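
As a rough illustration of the rule, the layout in the figure can be written down as a mapping from each role to the machines that fill it, and then checked for roles that only one machine provides. This is a minimal sketch, not a tool from this book: the host names and the function `single_points_of_failure` are hypothetical, and counting machines is only a necessary condition, since the paired servers still need failover software to take over from one another.

```python
from typing import Dict, List


def single_points_of_failure(roles: Dict[str, List[str]]) -> List[str]:
    """Return the roles that are filled by fewer than two machines."""
    return sorted(role for role, hosts in roles.items() if len(hosts) < 2)


# Hypothetical host names for the layout described in the figure.
redundant_cluster = {
    "load balancer": ["lb1", "lb2"],
    "print server": ["print1", "print2"],
    "shared storage": ["store1", "store2"],
    "cluster node": ["node1", "node2", "node3", "node4"],
}

# The same cluster with only one load balancer (an assumed counterexample).
single_lb_cluster = {**redundant_cluster, "load balancer": ["lb1"]}

print(single_points_of_failure(redundant_cluster))
print(single_points_of_failure(single_lb_cluster))
```

The first call reports no unpaired roles, while the second flags the load balancer, because rebooting its only machine would take the whole cluster offline.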

In Part II of this book, we will learn how to build high-availability server pairs using the Heartbeat package. Part III describes how to build a highly available load balancer and a highly available cluster node manager. (Recall from the previous discussion that the cluster node manager can be a print server for the cluster.)

| Note | Of course, one of the cluster nodes may also go down unexpectedly or may no longer be able to perform its job. If this happens, the load balancer should be smart enough to remove the failed cluster node from the cluster and alert the system administrator. The cluster nodes should be independent of each other, so that aside from needing to do more work, they will not be affected by the failure of a node. |
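
The behavior described in the note can be sketched as a small pool object that drops any node failing a health check and notifies the administrator. This is only an illustrative sketch under stated assumptions: the class `NodePool`, the `is_healthy` probe, and the `alert` callback are hypothetical names, and a real load balancer would use its own monitoring and notification mechanisms.

```python
from typing import Callable, List


class NodePool:
    """Tracks which cluster nodes currently receive work (hypothetical helper)."""

    def __init__(
        self,
        nodes: List[str],
        is_healthy: Callable[[str], bool],
        alert: Callable[[str], None],
    ) -> None:
        self.active = list(nodes)
        self.is_healthy = is_healthy  # assumed probe, e.g. a service check
        self.alert = alert            # assumed notifier, e.g. email or pager

    def check(self) -> List[str]:
        """Remove every node that fails its probe and alert the administrator."""
        # Iterate over a copy, because failed nodes are removed from self.active.
        for node in list(self.active):
            if not self.is_healthy(node):
                self.active.remove(node)
                self.alert(f"node {node} removed from cluster")
        return self.active


# Usage with a stubbed probe in which node3 has failed.
alerts: List[str] = []
pool = NodePool(
    ["node1", "node2", "node3", "node4"],
    is_healthy=lambda node: node != "node3",
    alert=alerts.append,
)
pool.check()
print(pool.active)
print(alerts)
```

Because the surviving nodes are independent of one another, the only effect on them in this sketch is that the same workload is now shared among three nodes instead of four.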
