LVS, Heartbeat, and ldirectord Recipe

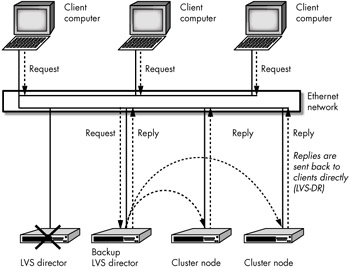

In this recipe, we'll build the highly available LVS-DR cluster shown in Figure 15-1.

| Note | If you haven't already built an LVS-DR according to the instructions in Chapter 13, you should do so before you attempt to follow this recipe. |

List of ingredients:

- Kernel with LVS support and the ability to suppress ARP replies (2.4.26 or greater in the 2.4 series, 2.6.4 or greater in the 2.6 series)
- Copy of the Heartbeat RPM package
- Copy of the ldirectord Perl program

Hide the Loopback Interface

We need to tell the real servers to ignore ARP broadcasts from client computers that are searching for the owner (the MAC address) of the VIP (see the section "ARP Broadcasts and the LVS-DR Cluster" in Chapter 13 for an explanation of why this is required in an LVS-DR cluster).

There are two commonly used methods to accomplish this, and you can use either one: create a script that is launched by the init program when the system boots (see Chapter 1), or modify the /etc/sysctl.conf file and issue the sysctl -p command each time the system boots.[7] The chapter15 subdirectory on the CD-ROM contains a copy of the following sample init script that will let you use the first method. The script adds the VIP address to the hidden loopback device, adds a routing table entry[8] for the VIP, enables packet forwarding, and tells the kernel to hide the VIP.

| Note | This script can be used on real servers and on the primary and backup Director because the Heartbeat program[9] knows how to remove a VIP address on the hidden loopback device when it conflicts with an IP address specified in the /etc/ha.d/haresources file. In other words, the Heartbeat program will remove the VIP from the hidden loopback device when it first starts up[10] on the primary Director, and it will remove the VIP from the hidden loopback device on the backup Director when the backup Director needs to acquire the VIP (because the primary Director has crashed). |

    #!/bin/bash
    #
    # lvsdrrs init script to hide loopback interfaces on LVS-DR
    # Real servers. Modify this script to suit
    # your needs. You at least need to set the correct VIP address(es).
    #
    # Script to start LVS DR real server.
    #
    # chkconfig: 2345 20 80
    # description: LVS DR real server
    #
    # You must set the VIP address to use here:
    VIP=209.100.100.2
    host=`/bin/hostname`
    case "$1" in
    start)
        # Start LVS-DR real server on this machine.
        /sbin/ifconfig lo down
        /sbin/ifconfig lo up
        echo 1 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/lo/arp_announce
        echo 1 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 2 > /proc/sys/net/ipv4/conf/all/arp_announce
        /sbin/ifconfig lo:0 $VIP netmask 255.255.255.255 up   # See footnote [11]
        /sbin/route add -host $VIP dev lo:0
        ;;
    stop)
        # Stop LVS-DR real server loopback device(s).
        /sbin/ifconfig lo:0 down
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/lo/arp_announce
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_ignore
        echo 0 > /proc/sys/net/ipv4/conf/all/arp_announce
        ;;
    status)
        # Status of LVS-DR real server.
        islothere=`/sbin/ifconfig lo:0 | grep $VIP`
        isrothere=`netstat -rn | grep "lo:0" | grep $VIP`
        if [ ! "$islothere" -o ! "$isrothere" ];then
            # Either the route or the lo:0 device
            # not found.
            echo "LVS-DR real server Stopped."
        else
            echo "LVS-DR Running."
        fi
        ;;
    *)
        # Invalid entry.
        echo "$0: Usage: $0 {start|status|stop}"
        exit 1
        ;;
    esac

Place this script in the /etc/init.d directory on all of the real servers and enable it using chkconfig, as described in Chapter 1, so that it will run each time the system boots.
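For the second method, the same four kernel settings can be written as /etc/sysctl.conf entries. The following sketch is not on the CD-ROM, and the function names are invented for illustration. It derives each dotted sysctl key from the /proc/sys path that the init script writes to and prints lines you could append to /etc/sysctl.conf. It covers only the ARP settings; the VIP would still have to be added to lo:0 by some other means.

```shell
#!/bin/bash
# Convert a /proc/sys path into the dotted key used in /etc/sysctl.conf.
proc_to_sysctl_key() {
    local path="${1#/proc/sys/}"   # strip the /proc/sys/ prefix
    echo "${path//\//.}"           # replace every "/" with "."
}

# Print sysctl.conf lines matching the echo commands in the start) branch
# of the init script (hypothetical helper, for illustration only).
print_arp_hiding_settings() {
    local setting
    for setting in lo/arp_ignore=1 lo/arp_announce=2 \
                   all/arp_ignore=1 all/arp_announce=2; do
        echo "$(proc_to_sysctl_key "/proc/sys/net/ipv4/conf/${setting%%=*}") = ${setting##*=}"
    done
}

print_arp_hiding_settings
```

After adding the printed lines to /etc/sysctl.conf, running sysctl -p applies them.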

| Note | The Linux Virtual Server website (http://www.linuxvirtualserver.org) also contains an installation script that will build an init script similar to this for you automatically. |

Install Heartbeat on a Primary and a Backup Director

We will use Heartbeat to start ldirectord and bring up the VIP IP alias or secondary IP address on the primary Director. The ldirectord program and the VIP will be one resource group (see Chapter 8) under Heartbeat's control. If the primary Director crashes, Heartbeat running on the backup Director will take over this resource group and client computers will continue to be able to access the cluster.

Install ldirectord and its Required Software Components

ldirectord is a Perl program that relies on several prewritten Perl modules that help it to connect to the real servers using HTTP, POP, telnet, and so on. The following sections discuss how to install the necessary Perl modules.

Install Perl Modules Located on the CD-ROM

The chapter15/ldirectord/dependencies directory on the CD-ROM in this book includes the current Perl module dependencies for ldirectord from the Ultra Monkey website.[12] Use the following commands to extract and build each Perl module.

    #mkdir -p /usr/local/src/perldeps
    #mount /mnt/cdrom
    #cp -r /mnt/cdrom/chapter15/ldirectord/dependencies /usr/local/src/perldeps
    #cd /usr/local/src/perldeps
    #tar xzvf libnet*
    #cd libnet-<version>
    #perl Makefile.PL
    #make
    #make install

Download Perl Modules From CPAN

If you choose not to install from the CD-ROM, you can download the necessary Perl modules for free from the Comprehensive Perl Archive Network or CPAN (http://www.cpan.org). Here is a list of the protocols and services that ldirectord can monitor, as well as the associated CPAN Perl modules required to monitor them.

| Service | Perl Module |
|---|---|
| HTTP | LWP::UserAgent (libwww-perl) |
| HTTPS | Net::SSLeay (Net_SSLeay) |
| FTP | Net::FTP (libnet) |
| LDAP | Net::LDAP (perl-ldap) |
| NNTP | IO::Socket/IO::Select (already part of Perl) |
| SMTP | Net::SMTP (libnet) |
| POP | Net::POP3 (libnet) |
| IMAP | Mail::IMAPClient (Mail-IMAPClient) |

Start the CPAN program with the commands:[13]

    #cd /
    #perl -MCPAN -e shell

| Note | If you've never run this command before, it will ask if you want to manually configure CPAN. Say no if you want to have the program use its defaults. |

The first time you use this method of downloading CPAN modules, you should install the CPAN module MD5 to perform security checksums on the downloaded files. While optional, it gives Perl the ability to verify that it has correctly downloaded a CPAN module using the MD5 checksum process:

cpan> install MD5

The MD5 download and installation should finish with a message like this:

/usr/bin/make install -- OK

Now install the CPAN modules with the following case-sensitive commands. You will need to respond to questions about your network configuration.

| Note | You do not need to install all of these modules for ldirectord to work properly. However, installing all of them allows you to monitor these types of services later by simply making a configuration change to the ldirectord configuration file. |

    cpan> install LWP::UserAgent
    cpan> install Net::SSLeay
    cpan> install Mail::IMAPClient
    cpan> install Net::LDAP
    cpan> install Net::FTP
    cpan> install Net::SMTP
    cpan> install Net::POP3

Some of these packages will ask if you want to run tests to make sure that the package installs correctly. These tests will usually involve using the protocol or service you are installing to connect to a server. Although these tests are optional, if you choose not to run them, you may need to force the installation by adding the word force at the beginning of the install command. For example:

cpan> force install Mail::IMAPClient

Each command should complete with the following message to indicate a successful installation:

/usr/bin/make install -- OK

| Note | ldirectord does not need to monitor every service your cluster will offer. If you are using a service that is not supported by ldirectord, you can write a CGI program that will run on each real server to perform health or status monitoring and then connect to each of these CGI programs using HTTP. If you want to use the LVS configure script (not described in this book) to configure your Directors, you should also install the following modules: cpan> install Net::DNS, cpan> install strict, and cpan> install Socket. |

When you are done with CPAN, enter the following command to return to your shell prompt:

cpan> quit

The Perl modules we just downloaded should have been installed under the /usr/local/lib/perl<MAJOR-VERSION-NUMBER>/site_perl/<VERSION-NUMBER> directory. Examine this directory to see where the LWP and Net directories are located, and then run the following command.

#perl -V

The last few lines of output from this command will show where Perl looks for modules on your system, in the @INC variable. If this variable does not point to the Net and LWP directories you downloaded from CPAN, you need to tell Perl where to locate these modules. (One easy way to update the directories Perl uses to locate modules is to simply upgrade to a newer version of Perl.)

| Note | In rare cases, you may want to modify Perl's @INC variable. See the description of the -I option on the perlrun man page (type man perlrun), or you can also add the -I option to the ldirectord Perl script after you have installed it. |
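Rather than reading the @INC list by eye, you can ask Perl directly whether it can load a given module. The sketch below is illustrative only: the function names are made up, and the lowercase service names are an assumption about how you would refer to the services in the table of Perl modules. NNTP is omitted because its modules are already part of Perl.

```shell
#!/bin/bash
# Map a service name to the Perl module listed for it in the CPAN table.
module_for_service() {
    case "$1" in
        http)  echo "LWP::UserAgent" ;;
        https) echo "Net::SSLeay" ;;
        ftp)   echo "Net::FTP" ;;
        ldap)  echo "Net::LDAP" ;;
        smtp)  echo "Net::SMTP" ;;
        pop)   echo "Net::POP3" ;;
        imap)  echo "Mail::IMAPClient" ;;
        *)     return 1 ;;
    esac
}

# Report whether Perl can load the module for each service named.
check_services() {
    local svc mod
    for svc in "$@"; do
        mod=$(module_for_service "$svc") || { echo "$svc: unknown service"; continue; }
        # perl -MModule -e 1 exits nonzero when the module is not in @INC.
        if perl -M"$mod" -e 1 2>/dev/null; then
            echo "$svc: $mod found"
        else
            echo "$svc: $mod NOT found in @INC"
        fi
    done
}

check_services "$@"
```

Saved under a name of your choosing, the script could be run with the services you plan to monitor as arguments, for example http and pop.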

Install ldirectord

You'll find ldirectord in the chapter15 directory on the CD-ROM. To install this version enter:

    #mount /mnt/cdrom
    #cp /mnt/cdrom/chapter15/ldirectord* /usr/sbin/ldirectord
    #chmod 755 /usr/sbin/ldirectord

You can also download and install ldirectord from the Linux HA CVS source tree (http://cvs.linux-ha.org), or the ldirectord website (http://www.vergenet.net/linux/ldirectord). Be sure to place ldirectord in the /usr/sbin directory and to give it execute permission.

| Note | Remember to install the ipvsadm utility (located in the chapter12 directory) before you attempt to run ldirectord. |

Test Your ldirectord Installation

To test the installation of ldirectord, ask it to display its help information with the -h switch.

#/usr/sbin/ldirectord -h

Your screen should show the ldirectord help page. If it does not, you will probably see a message about a problem finding the necessary modules.[14] If you receive this message, and you cannot or do not want to download the Perl modules using CPAN, you can edit the ldirectord program and comment out the Perl code that calls the methods of healthcheck monitoring that you are not interested in using.[15] You'll need at least one module, though, to monitor your real servers inside the cluster.

Create the ldirectord Configuration File

ldirectord uses a configuration file to build the IPVS table. You can call this file any legal file name you wish, but you must place it in the /etc/ha.d/conf directory. For example, the configuration file for the IPVS on VIP 209.100.100.3 can be:

    checktimeout=20
    checkinterval=5
    autoreload=yes
    quiescent=no
    logfile="info"
    virtual=209.100.100.3:80
        real=127.0.0.1:80 gate 1 ".healthcheck.html", "OKAY"
        real=209.100.100.100:80 gate 1 ".healthcheck.html", "OKAY"
        service=http
        checkport=80
        protocol=tcp
        scheduler=wrr
        checktype=negotiate
        fallback=127.0.0.1

| Note | You must indent the lines after the virtual line with at least four spaces or the tab character. |

The first five lines in this file are "global" settings; they apply to multiple virtual hosts. When used with Heartbeat, however, this file should normally contain virtual= sections for only one VIP address, as shown here. This is because when you place each VIP in the haresources file on a separate line, you run one ldirectord daemon for each VIP and use a different configuration file for each ldirectord daemon. Each VIP and its associated IPVS table thus becomes one resource that Heartbeat can manage.
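Because each VIP gets its own configuration file, it can be convenient to generate these files from a template. The following is a minimal sketch under that assumption; the function name is hypothetical, and the global values and health check strings are simply those of the sample file above.

```shell
#!/bin/bash
# Print an ldirectord configuration for a single VIP.
# Usage: make_ldirectord_conf VIP PORT RIP [RIP ...]
make_ldirectord_conf() {
    local vip="$1" port="$2" rip
    shift 2
    # Global settings, copied from the sample configuration file.
    printf 'checktimeout=20\ncheckinterval=5\nautoreload=yes\n'
    printf 'quiescent=no\nlogfile="info"\n'
    printf 'virtual=%s:%s\n' "$vip" "$port"
    # Lines that belong to the virtual= section are indented with a tab.
    for rip in "$@"; do
        printf '\treal=%s:%s gate 1 ".healthcheck.html", "OKAY"\n' "$rip" "$port"
    done
    printf '\tservice=http\n\tcheckport=%s\n\tprotocol=tcp\n' "$port"
    printf '\tscheduler=wrr\n\tchecktype=negotiate\n\tfallback=127.0.0.1\n'
}

make_ldirectord_conf 209.100.100.3 80 127.0.0.1 209.100.100.100
```

Redirecting the output to a file such as /etc/ha.d/conf/ldirectord-209.100.100.3.conf gives one file per VIP.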

Let's examine each line in this configuration file.

checktimeout=20

This sets the checktimeout value to the number of seconds ldirectord will wait for a health check (normally some type of network connection) to complete. If the check fails for any reason or does not complete within the checktimeout period, ldirectord will remove the real server from the IPVS table.[16]

checkinterval=5

This checkinterval is how long ldirectord sleeps between checks.

autoreload=yes

This enables the autoreload option, which causes the ldirectord program to periodically calculate an md5sum to check this configuration file for changes and automatically apply them when you change the file. This handy feature allows you to easily change your cluster configuration. A few seconds after you save changes to the configuration file, the running ldirectord daemon will see the change and apply the proper ipvsadm commands to implement the change, removing real servers from the pool of available servers or adding them in as needed.[17]

| Note | You can also force ldirectord to reload its configuration file by sending the ldirectord daemon a HUP signal (with the kill command) or by running ldirectord reload. |
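The idea behind autoreload can be illustrated with a few lines of shell. This is not ldirectord's own code, only a sketch of the checksum-polling technique; the function names are invented, and cksum is used as a stand-in where md5sum is not installed.

```shell
#!/bin/bash
# Print a checksum of FILE (md5sum when available, cksum otherwise).
file_checksum() {
    if command -v md5sum >/dev/null 2>&1; then
        md5sum < "$1" | cut -d' ' -f1
    else
        cksum < "$1" | cut -d' ' -f1
    fi
}

# Succeed when FILE no longer matches the checksum recorded earlier.
# Usage: config_changed FILE OLD_CHECKSUM
config_changed() {
    [ "$(file_checksum "$1")" != "$2" ]
}

# Poll FILE every INTERVAL seconds and report each change.
# This loops until interrupted, so it is defined here but not called.
watch_config() {
    local file="$1" interval="${2:-5}" last
    last=$(file_checksum "$file")
    while sleep "$interval"; do
        if config_changed "$file" "$last"; then
            echo "$file changed; the configuration would be reloaded here"
            last=$(file_checksum "$file")
        fi
    done
}
```

Calling watch_config with the path to a configuration file and an interval of 5 would mimic the polling behavior described above.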

quiescent=no

A node is "quiesced" (its weight is set to 0) when it fails to respond within its checktimeout period. When you set this option to no, ldirectord will remove the real server from the IPVS table rather than "quiesce" it. Removing the node from the IPVS table breaks all of the existing client connections and causes LVS to drop all connection tracking records and persistent connection templates for the node. If you do not set this option to no, the cluster may appear to be down to some of the client computers when a node crashes, because they were assigned to the node before it crashed and the connection tracking record or persistent connection template still remains on the Director.

When using this option, you may also want to use a command[18] like this at boot time:

echo 1 > /proc/sys/net/ipv4/vs/expire_nodest_conn

Setting this kernel variable to 1 causes the connection tracking records to expire immediately if a client with a pre-existing connection tracking entry tries to talk to the same server again but the server is no longer available.[19]

| Note | All of the sysctl variables, including the expire_nodest_conn variable, are documented at the LVS website (http://www.linuxvirtualserver.org/docs/sysctl.html). |

logfile="info"

This entry tells ldirectord to use the syslog facility for logging error messages. (See the file /etc/syslog.conf to find out where messages at the "info" level are written.) You can also enter the name of a directory and file to write log messages to (precede the entry with /). If no entry is provided, log messages are written to /var/log/ldirectord.log.

virtual=209.100.100.3:80

This line specifies the VIP address and port number that we want to install on the LVS Director. Recall that this is the IP address you will likely add to the DNS and advertise to client computers. In any case, this is the IP address client computers will use to connect to the cluster resource you are configuring.

You can also specify a Netfilter mark (or fwmark) value on this line instead of an IP address. For example, the following entry is also valid:

virtual=2

This entry indicates that you are using ipchains or iptables to mark packets as they arrive on the LVS Director[20] based on criteria that you specify with these utilities. All packets that contain this mark will be processed according to the rules on the indented lines that follow in this configuration file.

| Note | Packets are normally marked to create port affinity (between ports 443 and port 80, for example). See Chapter 14 for a discussion of packet marking and port affinity. |
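As an illustration of the marking step, the sketch below prints iptables rules that would give packets for ports 80 and 443 on the VIP the same mark, 2, matching the virtual=2 entry. It assumes iptables and the PREROUTING chain of the mangle table; the function name is hypothetical, and Chapter 14 remains the reference for how marking is actually set up in this book. The commands are only printed, not run.

```shell
#!/bin/bash
# Print an iptables rule that marks TCP packets sent to VIP:PORT.
# Usage: mark_rule VIP PORT MARK
mark_rule() {
    local vip="$1" port="$2" mark="$3"
    echo "iptables -t mangle -A PREROUTING -d $vip/32 -p tcp --dport $port -j MARK --set-mark $mark"
}

# Give both web ports the same mark so they share one virtual service.
for port in 80 443; do
    mark_rule 209.100.100.3 "$port" 2
done
```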

The next group of indented lines specifies which real servers inside the cluster will be able to offer the resource to client computers.

real=127.0.0.1:80 gate 1 ".healthcheck.html", "OKAY"

This line indicates that the Director itself (at loopback IP address 127.0.0.1), acting in LocalNode mode, will be able to respond to client requests destined for the VIP address 209.100.100.3.

| Note | Do not use LocalNode in production unless you have thoroughly tested failing over the cluster load-balancing resource. Normally, you will improve the reliability of your cluster if you avoid using LocalNode mode. |

real=209.100.100.100:80 gate 1 ".healthcheck.html", "OKAY"

This line adds the first LVS-DR real server using the RIP address 209.100.100.100.

Each real= line in this configuration file uses the following syntax:

real=RIP:port gate|masq|ipip [weight] "Request URL", "Response Expected"

This syntax description tells us that the word gate, masq, or ipip must be present on each real line in the configuration file to indicate the type of forwarding method used for the real server. (Recall from Chapter 11 that the Director can use a different LVS forwarding method for each real server inside the cluster.) This configuration file is therefore using a slightly different terminology (based on the switch passed to the ipvsadm command) to indicate the three forwarding methods. See Table 15-1 for clarification.

| ldirectord Configuration Option | Switch Used When Running ipvsadm | Output of ipvsadm -L Command | LVS Forwarding Method |
|---|---|---|---|
| gate | -g | Route | LVS-DR |
| ipip | -i | Tunnel | LVS-TUN |
| masq | -m | Masq | LVS-NAT |

Following the forwarding method on each real= line is the weight assigned to the real servers in the cluster. This is used only with one of the weighted scheduling methods. The last two parameters on the line indicate which web page or URL ldirectord should ask for when checking the health of the real server, and what response ldirectord should expect to receive from the real server. Both of these parameters need to be in quotes as shown, separated by a comma.
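To make the mapping in Table 15-1 concrete, the following sketch translates the forwarding method and weight from a real= line into the ipvsadm command that would add that real server to a TCP virtual service. The function names are made up for illustration, and the command is printed rather than executed; it is not a description of what ldirectord does internally.

```shell
#!/bin/bash
# Translate an ldirectord forwarding keyword into the ipvsadm switch.
forward_switch() {
    case "$1" in
        gate) echo "-g" ;;   # LVS-DR
        ipip) echo "-i" ;;   # LVS-TUN
        masq) echo "-m" ;;   # LVS-NAT
        *)    return 1 ;;
    esac
}

# Print the ipvsadm command that adds one real server.
# Usage: real_to_ipvsadm VIP:PORT RIP:PORT gate|masq|ipip [WEIGHT]
real_to_ipvsadm() {
    local vip="$1" rip="$2" method="$3" weight="${4:-1}" sw
    sw=$(forward_switch "$method") || {
        echo "unknown forwarding method: $method" >&2
        return 1
    }
    echo "ipvsadm -a -t $vip -r $rip $sw -w $weight"
}

real_to_ipvsadm 209.100.100.3:80 209.100.100.100:80 gate 1
```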

service=http

This line indicates which service ldirectord should use when testing the health of the real server. You must have the proper CPAN Perl module loaded for the type of service you specify here.

checkport=80

This line indicates that the health check request for the http service should be performed on port 80.

protocol=tcp

This entry specifies the protocol that will be used by this virtual service. The protocol can be set to tcp, udp, or fwm. If you use fwm to indicate marked (fwmarked) packets, then you must have already used the Netfilter mark number (or fwmark) instead of an IP address on the virtual= line that all of these indented lines are associated with.

scheduler=wrr

The scheduler line indicates that we want to use the weighted round-robin load-balancing technique (see the previous real lines for the weight assignment given to each real server in the cluster). See Chapter 11 for a description of the scheduling methods LVS supports. The ldirectord program does not check the validity of this entry; ldirectord just passes whatever you enter here on to the ipvsadm command to create the virtual service.

checktype=negotiate

This option describes which method the ldirectord daemon should use to monitor the real servers for this VIP. checktype can be set to one of the following:

- negotiate: This method connects to the real server and sends the request string you specify. If the reply string you specify is not received back from the real server within the checktimeout period, the node is considered dead. You can specify the request and reply strings on a per-node basis as we have done in this example (see the discussion of the real lines earlier in this section), or you can specify one request and one reply string that should be used for all of the real servers by adding two new lines to your ldirectord configuration file like this:

      request=".healthcheck.html"
      receive="OKAY"

- connect: This method simply connects to the real server at the specified checkport (or port specified for the real server) and assumes everything is okay on the real server if a basic TCP/IP connection is accepted by the real server. This method is not as reliable as the negotiate method for detecting problems with the resource daemons (such as HTTP) running on the real server. Connect checks are useful when there is no negotiate check available for the service you want to monitor.
- A number: If you enter a number here instead of the word negotiate or connect, the ldirectord daemon will perform the connection test the number of times specified, and then perform one negotiate test. This method reduces the processing power required of the real server when responding to health checks and reduces the amount of cluster network traffic required for health check monitoring of the services on the real servers.[21]
- off: This disables ldirectord's health check monitoring of the real servers.
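You can imitate a negotiate check by hand to see what ldirectord is looking for. The sketch below assumes that curl is installed and treats the expected reply as a plain substring of the response; both are assumptions made for illustration, and the function names are not part of ldirectord.

```shell
#!/bin/bash
# Succeed if the response body contains the expected string.
response_matches() {
    case "$1" in
        *"$2"*) return 0 ;;
        *)      return 1 ;;
    esac
}

# Fetch URL with a time limit and compare the reply with EXPECTED.
# Usage: negotiate_check URL EXPECTED [TIMEOUT_SECONDS]
negotiate_check() {
    local url="$1" expect="$2" timeout="${3:-20}" body
    # A failed or timed-out request counts as a failed check.
    body=$(curl -s --max-time "$timeout" "$url") || return 1
    response_matches "$body" "$expect"
}

# When called with a URL and an expected string, report the result.
if [ "$#" -ge 2 ]; then
    if negotiate_check "$@"; then echo "up"; else echo "down"; fi
fi
```

Run with the arguments http://209.100.100.100/.healthcheck.html and OKAY, the script would print up or down for that real server.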

fallback=127.0.0.1

The fallback address is the IP address and port number that client connections should be redirected to when there are no real servers in the IPVS table that can satisfy their requests for a service. Normally, this will always be the loopback address 127.0.0.1, in order to force client connections to a daemon running locally that is at least capable of informing users that there is a problem, and perhaps letting them know whom to contact for additional help.

You can also specify a special port number for the fallback web page:

fallback=127.0.0.1:9999

| Note | We did not use persistence to create our virtual service table. Persistence is enabled in the ldirectord.conf file using the persistent= entry to specify the number of seconds of persistence. See Chapter 14 for a discussion of LVS persistence. |

Create the Health Check Web Page

Now, on each real server and on the Director enter a command like this to create a simple health check web page:

#echo "OKAY" > /www/htdocs/.healthcheck.html

| Note | The directory used here should be whatever is specified as the DocumentRoot in your httpd.conf file. Also note the period (.) before the file name in this example.[22] |

See if the Healthcheck web page displays properly on each real server with the following command:

#lynx -dump 127.0.0.1/.healthcheck.html

Start ldirectord Manually and Test Your Configuration

In a moment we'll use Heartbeat to start our ldirectord monitoring daemon, but first you need to try starting the daemon on the Director to see if your IPVS table is created.

- Enter this command:

      #/etc/ha.d/resource.d/ldirectord -d ldirectord-209.100.100.3.conf start

  You should see the ldirectord debug output indicating that the ipvsadm commands have been run to create the virtual server, followed by messages such as the following:

      DEBUG2: check_http: http://209.100.100.100/.healthcheck.html is down

- Now if you bring the real server online at IP address 209.100.100.100, and it is running the Apache web server, the debug output message from ldirectord should change to:

      DEBUG2: check_http: http://209.100.100.100/.healthcheck.html is up

- Check to be sure that the virtual service was added to the IPVS table with the command:

      #ipvsadm -L -n

- Once you are satisfied with your ldirectord configuration and see that it is able to communicate properly with your health check URLs, press CTRL-C to break out of the debug display.

- Restart ldirectord (in normal mode) with:

      #/usr/sbin/ldirectord ldirectord-209.100.100.3.conf start

- Then start ipvsadm with the watch command to automatically update the display:

      #watch ipvsadm -L -n

  You should see an ipvsadm table like this:

      IP Virtual Server version x.x.x (size=4096)
      Prot LocalAddress:Port Scheduler Flags
        -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
      TCP  209.100.100.3:80 wrr
        -> 209.100.100.100:80           Route   1      0          0
        -> 127.0.0.1:80                 Local   1      0          0

- Test ldirectord by shutting down Apache on the real server or disconnecting its network cable. Within 20 seconds, or the checktimeout value you specified, the real server should be removed from the IPVS table (because quiescent=no is set in this configuration; with quiescent=yes its weight would be set to 0 instead) so that no future connections will be sent to it.

- Turn the real server back on or restart the Apache daemon on the real server, and you should see it return to the IPVS table with a weight of 1.
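If you would rather script this test than watch the display, the real server lines can be pulled out of the ipvsadm -L -n output with awk. The following is a sketch only: the function names are hypothetical, and the sample table stands in for live ipvsadm output so that the example can be run on any machine.

```shell
#!/bin/bash
# Read "ipvsadm -L -n" output on stdin and print "address:port weight"
# for every real server line (lines whose first field is "->").
list_real_servers() {
    awk '$1 == "->" && $2 != "RemoteAddress:Port" { print $2, $4 }'
}

# Succeed if the named real server is listed with a nonzero weight.
# Usage: ipvsadm -L -n | real_server_active 209.100.100.100:80
real_server_active() {
    list_real_servers |
        awk -v rs="$1" '$1 == rs && $2 > 0 { found = 1 } END { exit !found }'
}

# Sample table in the same layout as the one shown above.
sample_table() {
    cat <<'EOF'
IP Virtual Server version x.x.x (size=4096)
Prot LocalAddress:Port Scheduler Flags
  -> RemoteAddress:Port Forward Weight ActiveConn InActConn
TCP 209.100.100.3:80 wrr
  -> 209.100.100.100:80 Route 1 0 0
  -> 127.0.0.1:80 Local 1 0 0
EOF
}

sample_table | list_real_servers
```

On the Director, replacing sample_table with ipvsadm -L -n checks the live table. A server that has been removed or whose weight is 0 is reported as inactive either way.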

Add ldirectord to the Heartbeat Configuration

Now it's time to tell Heartbeat about our ldirectord configuration so that it will start it automatically at boot time.

- Follow the recipe in Chapter 7 to install the Heartbeat program if you have not done so already; then create the following entry in your /etc/ha.d/haresources file:

      primary.yourdomain.com 209.100.100.3 ldirectord::ldirectord-209.100.100.3.conf

  where primary.yourdomain.com is the host name (as returned by uname -n) of your primary Director.

  This haresources entry tells Heartbeat that the machine named primary.yourdomain.com should normally own the ldirectord resource for VIP 209.100.100.3. The ldirectord resource, or script, is started by Heartbeat and, based on this entry, is passed the name of the configuration file, /etc/ha.d/conf/ldirectord-209.100.100.3.conf in this example.

- Stop ldirectord with the command:

      #/etc/ha.d/resource.d/ldirectord ldirectord-209.100.100.3.conf stop

- Make sure ldirectord is no longer running with:

      #ps -elf | grep ldirectord

  This command should not return any output if ldirectord is not running. If you see a running process here, be sure to kill it before going on with the next command.

- Make sure ldirectord is not started as a normal boot script with the command:

      #chkconfig --del ldirectord

- Stop Heartbeat with the command:

      #/etc/rc.d/init.d/heartbeat stop

- Clean out the ipvsadm table so that it does not contain any test configuration information with:

      #ipvsadm -C

- Now start the heartbeat daemon with:

      #/etc/rc.d/init.d/heartbeat start

  and watch the output of the heartbeat daemon using the following command:

      #tail -f /var/log/messages

| Note | For the moment, we do not need to start Heartbeat on the backup Heartbeat server. |

The last line of output from Heartbeat should look like this:

heartbeat: info: Running /etc/ha.d/resource.d/ldirectord ldirectord-209.100.100.3.conf start

You should now see the IPVS table constructed again, according to your configuration in the /etc/ha.d/conf/ldirectord-209.100.100.3.conf file. (Use the ipvsadm -L -n command to view this table.)

To finish this recipe, install the ldirectord program on the backup LVS Director, and then configure the Heartbeat configuration files exactly the same way on both the primary LVS Director and the backup LVS Director. (You can do this manually or use the SystemImager package described in Chapter 5.)
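Since the haresources entry follows a fixed pattern (host name, VIP, then the ldirectord resource with a configuration file named after the VIP), it can be generated rather than typed. The sketch below assumes the ldirectord-VIP.conf naming convention used in this recipe; the function name is made up for illustration.

```shell
#!/bin/bash
# Print a haresources line for one VIP managed by ldirectord.
# Usage: haresources_entry PRIMARY_HOSTNAME VIP
haresources_entry() {
    local host="$1" vip="$2"
    printf '%s %s ldirectord::ldirectord-%s.conf\n' "$host" "$vip" "$vip"
}

haresources_entry primary.yourdomain.com 209.100.100.3
```

Because the haresources file must be the same on both Directors, generating the line once and copying the result to the backup Director helps keep the two files identical.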

Stateful Failover of the IPVS Table

As we've just seen, when the primary Director crashes and ldirectord needs to rebuild the IPVS table on the backup Director it can do so because you have placed all of the ipvsadm configuration rules into an ldirectord configuration file. However, the active client connections (the connection tracking records) are not re-created by ldirectord on the backup Director at failover time. All client computer connections to the cluster nodes are lost during a failover using the recipe we have just built.

These connections (entries in the connection tracking table) change rapidly on a heavily loaded cluster as client connections come and go, so we need a method of sending connection tracking records from a primary Director to a backup Director as they change. The LVS programmers developed a technique to do this (replicate the connection tracking table to the backup Director) using multicast packets. This technique was originally called the server sync state daemon, and even though it was implemented inside the kernel (the server sync state daemon does not run in userland) the name stuck. To turn on the server sync state daemon, as it is called, inside the kernel run the following command on the primary Director:

/sbin/ipvsadm --start-daemon master

Then, on the backup Director, run the command:

/sbin/ipvsadm --start-daemon backup

The primary and backup Directors must be able to communicate with each other using multicast packets on multicast address 224.0.0.81 for the master server sync state daemon to announce changes to the connection tracking records, and for the backup server sync state daemon to hear about these changes and insert them into its idle connection tracking table. To find out if your cluster nodes support multicast, see the output of the ifconfig command and look for the word MULTICAST. It should be present for each interface that will be using multicast; if it isn't you'll need to recompile your kernel and provide support for multicast (see Chapter 3).[23]

| Note | To stop the sync state daemon (either the master or the backup) you can issue the command ipvsadm --stop-daemon. |

Once you have issued these commands on the primary and backup Director (you'll need to add these commands to an init script so the system will issue the command each time it boots; see Chapter 1), your primary Director can crash, and then when ldirectord rebuilds the IPVS table on the backup Director all active connections[24] to cluster nodes will survive the failure of the primary Director.

| Note | The method just described will fail over active connections from the primary Director to the backup Director, but it does not fail the active connections back when the primary Director has been restored to normal operation. To fail over and fail back active IPVS connections you will need (as of this writing) to apply a patch to the kernel. See the LVS HOWTO (http://www.linuxvirtualserver.org) for details. |
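The sync daemon commands lend themselves to a small init-style wrapper. The following sketch only prints the command for a given action and role so that it can be tried safely; the function name is hypothetical, and the commands themselves are the ones given in this section.

```shell
#!/bin/bash
# Print the ipvsadm command for the requested action and role.
# Usage: sync_daemon_command start master|backup
#        sync_daemon_command stop
sync_daemon_command() {
    local action="$1" role="$2"
    case "$action" in
        start)
            case "$role" in
                master|backup) echo "/sbin/ipvsadm --start-daemon $role" ;;
                *) echo "role must be master or backup" >&2; return 1 ;;
            esac
            ;;
        stop)
            echo "/sbin/ipvsadm --stop-daemon"
            ;;
        *)
            echo "Usage: {start|stop} [master|backup]" >&2
            return 1
            ;;
    esac
}

# Show what would run on each Director at boot time.
sync_daemon_command start master
sync_daemon_command start backup
```

An init script on the primary Director would run the first printed command in its start) branch, and the backup Director the second.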

Modifications to Allow Failover to a Real Server Inside the Cluster

We have finished building our pair of highly available load balancers (with no single point of failure). However, before we leave the topic of failing over the Director as a resource under Heartbeat's control, let's look at how the Director failover works in conjunction with the LVS LocalNode mode.

Because a Linux Enterprise Cluster will have a relatively small number of incoming requests for cluster services (perhaps only one or two for each employee[25]), dedicating a server to the job of load balancing the cluster may be a waste of resources. We can fully utilize the CPU capacity on the Director by making the Director a node inside the cluster; however, this may reduce the reliability of the cluster and should be avoided, if possible.[26] When we tell one of the nodes inside the cluster to be the Director, we will also need to tell one of the nodes inside the cluster to be a backup Director if the primary Director fails.

Heartbeat running on the backup Director will start the ldirectord daemon, move all of the VIP addresses, and re-create the IPVS table in order to properly route client requests for cluster resources if the primary Director fails, as shown in Figure 15-4.

Figure 15-4: Failure of primary Director

Notice in Figure 15-4 that the backup Director is also replying to client computer requests for cluster services—before the failover the backup Director was just another real server inside the cluster that was running Heartbeat. (In fact, before the failover occurred the primary Director was also replying to its share of the client computer requests that it was processing locally using the LocalNode feature of LVS.)

Although no changes are required on the real servers to inform them of the failover, we will need to tell the backup LVS Director that it should no longer hide the VIP address on its loopback device. Fortunately, Heartbeat does this for us automatically, using the IPaddr2 script[27] to see if there are hidden loopback devices configured on the backup Director with the same IP address as the VIP address being added. If so, Heartbeat will automatically remove the hidden, conflicting IP address on the loopback device and its associated route in the routing table. It will then add the VIP address on one of the real network interface cards so that the LVS-DR real server can become an LVS-DR Director and service incoming requests for cluster services on this VIP address.

| Note | Heartbeat will remember that it has done this and will restore the loopback addresses to their original values when the primary server is operational again (the VIP address fails back to the primary Heartbeat server). |

[7]This method is also discussed on the Ultra Monkey website.

[8]See Chapter 2 for a discussion of the routing table.

[9]The IPaddr and IPaddr2 resource scripts.

[10]This assumes that the Heartbeat program is started after this script runs.

[11]Use netmask 255.255.255.255 here no matter what netmask you normally use for your network.

[12]They originally came from CPAN.

[13]You can also use Webmin as a web interface or GUI to download and install CPAN modules.

[14]Older versions of ldirectord would only display the help page if you ran the program as a non-root user. If you see a security error message when you run this command, you should upgrade to at least version 1.46.

[15]Making custom changes to get ldirectord to run in a test environment such as the one being described in this chapter is fine, but for production environments you are better off installing the CPAN modules and using the stock version of ldirectord to avoid problems in the future when you need to upgrade.

[16]The checktimeout and checkinterval values for ldirectord have nothing to do with the keepalive, deadtime, and initdead variables used by Heartbeat.

[17]If you simply want to be able to remove real servers from the cluster for maintenance and you do not want to enable this feature, you can disconnect the real server from the network, and the real server will be removed from the IPVS table automatically when you have configured ldirectord properly.

[18]Or set this value in the /etc/sysctl.conf file on Red Hat systems.

[19]The author has not used this kernel setting in production, but found that setting quiescent to "no" provided an adequate node removal mechanism.

[20]The machine running ldirectord will also be the LVS Director. Recall that packet marks only apply to packets while they are on the Linux machine that inserted the mark. The packet mark created by iptables or ipchains is forgotten as soon as the packet is sent out the network interface.

[21]How much it reduces network traffic is based on the type of service and the size of the response packet that the real server must send back to verify that it is operating normally.

[22]This is not required, but is a carryover from the Unix security concept that file names starting with a period (.) are not listed by the ls command (unless the -a option is used) and are therefore hidden from normal users.

[23]You may also be able to specify the interface that the server sync state daemon should use to send or listen for multicast packets using the ipvsadm command (see the ipvsadm man page); however, early reports of this feature indicate that a bug prevents you from explicitly specifying the interface when you issue these commands.

[24]All connections that have not been idle for longer than the connection tracking timeout period on the backup Director (default is three minutes, as specified in the LVS code).

[25]Compare this to the thousands of requests that are load balanced across large LVS web clusters.

[26]It is easier to reboot real servers than the Director. Real servers can be removed from the cluster one at a time without affecting service availability, but rebooting the Director may impact all client computer sessions.

[27]The IPaddr script, if you are using ip aliases.
