Term Extraction

If you have done some word and phrase analysis on Web sites for better search engine placement, you will be familiar with the job that this transformation task performs. The Term Extraction transformation is a tool to mine free-flowing text for word and phrase frequency. You can feed any text-based input stream into the transformation and it will output two columns: a text phrase and a statistical value for the phrase relative to the total input stream. The statistical values or scores that can be calculated can be as simple as a count of the frequency of the words and phrases, or they can be a little more complicated as the result of a formula named TFIDF score. The TFIDF acronym stands for Term Frequency and Inverse Document Frequency, and it is a formula designed to balance the frequency of the distinct words and phrases relative to the total text sampled. If you're interested, here's the formula:

TDIDF (of a term or phrase) = (frequency of term) * log((# rows in sample)/(# rows with term or phrase))

The results generated by the Term Extraction transformation are based on internal algorithms and statistical models that are encapsulated in the component. You can't alter or gain any insight into this logic by examining the code. However, some of the core rules for how the logic breaks apart the text to determine word and phrase boundaries are documented in Books Online. What you can do is tweak some external settings and make adjustments to the extraction behavior by examining the resulting output. Since text extraction is domain-specific, the transform also provides the ability to store terms and phrases that you have predetermined are noisy or insignificant in your final results. You can then automatically remove these items from future extractions. Within just a few testing iterations, you can have the transform producing meaningful results.

Before you write this transformation off as a cool utility that you'll never use, consider this: How useful would it be to query into something like a customer service memo field stored in your data warehouse and generate some statistics about the comments that are being made? This is the type of use for which the Term Extraction transform is perfectly suited. The trick to understanding how to use the component is to remember that it has one input. That input must be either a NULL-terminated ANSI (DT_WSTR) or Unicode (DT_NTEXT) string. If your input stream is not one of these two types, you can use the Data Conversion transform to convert it. Since this transformation can best be learned by playing around with all the settings, put this transform to work on exactly what was proposed before — mining some customer service memo fields.

You have a set of comment fields from a customer service database for an appliance manufacture. In this field, the customer service representative will record a note that summarizes the contact with the customer. For simplicity sake, you'll create these comment fields in a text file and analyze them in the Term Extraction transformation.

-

Create the customer service text file using the following text (cut and paste into Notepad). Save as "c:\custsvc.txt."

Ice maker in freezer stopped working model XX-YY3 Door to refrigerator is coming off model XX-1 Ice maker is making a funny noise XX-YY3 Handle on fridge falling off model XX-Z1 Freezer is not getting cold enough XX-1 Ice maker grinding sound fridge XX-YY3 Customer asking how to get the ice maker to work model XX-YY3 Customer complaining about dent in side panel model XX-Z1 Dent in model XX-Z1 Customer wants to exchange model XX-Z1 because of dent in door Handle is wiggling model XX-Z1

-

Create a SSIS project named Term Extraction. Add a Data Flow task to the Control Flow design surface.

-

Create a Flat File connection to "c:\custsvc.txt." Change the output column named in the Advanced tab to "CustSvcNote." Change OutputColumnWidth to 100 to account for the length of the field.

-

Add a Flat File Source to the Data Flow design surface. Configure the source to use the Flat File connection.

-

Since the Flat File Source output is string (DT_STR), you'll need to convert the string to either the DT_WSTR or DT_NTEXT data type. Add a Data Conversion Transform to the Data Flow design surface and connect the output of the Flat File Source. Set the Input Column to CustSvcNote, the Output Alias to ConvCustSvcNote, and the Data Type to Unicode string [DT_WSTR]. Click OK to save.

-

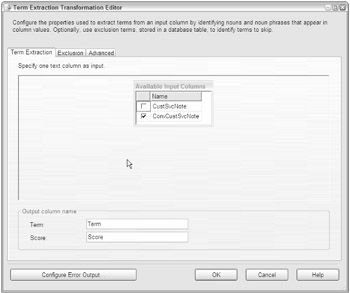

Add a Term Extraction task to the Data Flow design surface. Connect the output of the Data Conversion transform to its input. Open the Term Extraction Editor. Figure 6-17 shows the available input columns from the input stream and the two default-named output columns. You can change the named output columns if you wish. Only one input column can be chosen. Click the column ConvCustSvcNote, since this is the column that is converted to a Unicode string. If you click the unconverted column, you'll see a validation error like the following:

The input column can only have DT_WSTR or DT_NTEXT as its data type.

-

Close the Term Extraction Editor. Ignore the cautionary warnings about rows sent to error outputs. You didn't configure an error location for bad rows to be saved, but it's not necessary for this example.

-

Add an OLE DB Destination to the Data Flow. Connect the output of the Term Extraction task to the OLE DB Destination. Configure the OLE DB Destination to use your AdventureWorks connection.

-

Click on the New button to configure the Name of Table or View property. A window will come up with a CREATE TABLE DDL statement. Notice that the data types are a Unicode text field and a double. Alter the statement to read:

CREATE TABLE [TermResults] ( [Term] NVARCHAR(128), [Score] DOUBLE PRECISION )

-

When you click OK, the new table TermResults will be created in the AdventureWorks database. Click the Mappings tab to confirm the mapping between the Term Extract outputs of Term and Score to the table [TermResults].

-

Add a Data Viewer by right-clicking the Data Flow between the Term Extract transform and the OLE DB destination. Set the type to grid and accept defaults.

-

Run the package.

Figure 6-17

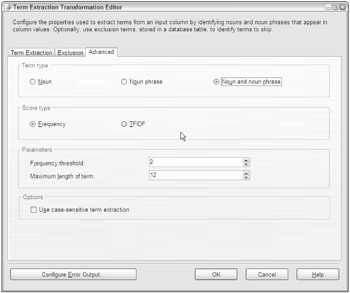

The package will stop on the Data Viewer to allow you to view the results of the Term Extract transform. You should see a list of terms and an associated score for each word. Since you just accepted all of the Term Extraction settings, the default score is a simple count of frequency. Stop the package, open the Term Extraction Transformation Editor, and view the Advanced tab. See Figure 6-18 for an example of the properties on this tab.

Figure 6-18

The Advanced tab allows for some configuration of the task and can be divided into four categories:

-

Term Type: Settings that control how the input stream should be broken into bits called tokens. The Noun Term Type will focus the transform on nouns only, Noun Phrases will extract noun phrases, and Noun and Noun Phrases will extract both.

-

Score Type: Choose between analyzing words by frequency or by a weighted frequency.

-

Parameters: Frequency threshold is the minimum number of times a word or phrase must appear in tokens. Maximum length of term is the maximum number of words that should be combined together for evaluation.

-

Options: Check this option to consider case-sensitivity or leave unchecked to disregard.

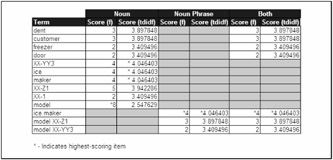

This is where the work really starts. How you set the transform up really affects the results you'll see. Figure 6-19 shows an example of the results using each of the different Term Type settings combined with the different score types.

Figure 6-19

One of the unusual things to notice is that the term "model XX-Z1" shows a frequency score of 3 when the Term Type option is set to "Both," even though you can physically count five instances of this phrase in the customer service data. However, the term "XX-Z1" is counted with the correct frequency when you break the source text into nouns only. This demonstrates that the statistical models are sensitive to where and how noun phrases are used. As a consequence, the tagging of noun phrases may not be completely accurate.

At the moment, using a combination of these statistics, you can report that customer service is logging a high percentage of calls concerning the terms "model," "model XX-Z1," "model XX-YY3," "ice maker," "dent," and "customer." An assumption can be made that there may be some issues with models XX-Z1 and XX-YY3 that your client needs to look into.

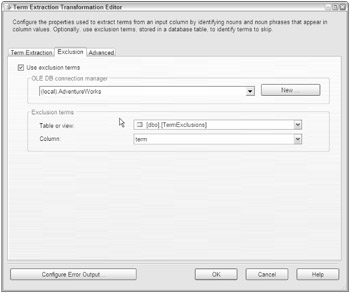

In evaluating this data, you may determine that some words over time are just not of interest to the analysis. In this example, the words "model" and "customer" really serve no purpose but to dampen the scores for other words. To remove these words from your analysis, take advantage of the exclusion features in the Term Extraction transform by adding these words to a table with a single Unicode NULL-terminated string column. Figure 6-20 shows a properly configured exclusion table in the Exclusion tab of the Term Extraction Editor. Rerun the package and review the new results.

Figure 6-20

Notice that the results of your noun-term extraction have suffered a setback. No longer do the phrases "model XX-Z1" or "model XX-YY3" even appear in the results. Huh? Well, you did tell the transform to exclude the term "model." You meant the word. You didn't intend for the phase including the word to also be removed. You'll need to remove the word "model" from your exclusion table because it is too restrictive. A different way to look at the Term Extraction transform is that it is a utility that will build a word list. To really make sense of that word list, you need to add some human intervention and the next transform — Term Lookup.

EAN: 2147483647

Pages: 182

- ERP Systems Impact on Organizations

- ERP System Acquisition: A Process Model and Results From an Austrian Survey

- The Effects of an Enterprise Resource Planning System (ERP) Implementation on Job Characteristics – A Study using the Hackman and Oldham Job Characteristics Model

- Distributed Data Warehouse for Geo-spatial Services

- A Hybrid Clustering Technique to Improve Patient Data Quality