Raw Files

Raw files are proprietary sources and destinations in the SSIS data flow that are only accessible through SSIS. The files are binary files that contain all the metadata information for the file (data types and column names) in the file header. Because this file is proprietary to SSIS, it’s the fastest source and destination. When you must stage data, it’s an excellent choice because of its speed. It’s also great from a reliability perspective, since you may use these sources and destinations in a complex data flow to capture images of your data at any point in time. For example, if you have a four-hour data flow process, you may want to stage the load at different points in time for recovery reasons; breaking up the extract and transformation from the load. This is common in a dimension load, where you may have to massage the data to be loaded into the dimension before applying the slowly changing dimension logic.

Another use for raw files is to break up a mainframe extract into more practical files. Oftentimes, you will receive files from a vendor or mainframe group as one unified file, when it should have been separated into individual files. For example, imagine the extract file shown in the following table.

| RecordType | OrderID | Item | Quantity | Price |

|---|---|---|---|---|

| 1 | 1 | |||

| 1 | 2 | |||

| 2 | 1 | Soap | 1 | 2.24 |

| 2 | 1 | Firewood | 2 | 4.5 |

| 1 | 3 | |||

| 2 | 2 | Pepper | 1 | 1.58 |

| 2 | 3 | Soap | 1 | 2.24 |

| 2 | 3 | Cola | 5 | 4.5 |

In this file, you can see there is an order entry for RecordType 1 and the details are in RecordType 2. This file would generally be a fixed-width file or a ragged-right file, but to keep it simple, this file is delimited by commas. It can be downloaded from www.wiley.com (Chapter7SalesExtract.csv).

In this example, you would have two options for processing the file into the two tables based on the RecordType column. You could process the entire file in a single data flow, but keep in mind the file would generally be much more complex than the one shown here. You may also have a timing problem if the tables are related, as these are. The timing problem is going to exist with the foreign key relationship between the Order and OrderDetails tables. You want to ensure that you never process a detail record without the parent order record being processed first.

Your second processing option is to process the file as three data flows. The first Data Flow Task would break the file into multiple files and the other Data Flow Tasks would load each table.

For reliability, let’s take the second option where you break the file into more usable files prior to load. You may also decide to add more logic into that data flow as well while you have the data in memory (such as data conversions and standardization). For this simple example, you’ll want to have the Chapter7SalesExtract.csv file in your C:\ExpertSSIS folder and you’ll want to create a new package called RawFile.dtsx.

Create two connection managers: one that points to the AdventureWorks database and another that points to your Chapter7SalesExtract.csv. The first row in the Chapter7SalesExtract.csv contains the column names, and the file is comma-delimited. Before exiting the connection manager for the Chapter7SalesExtract.csv file, go to the Advanced page and click Suggest Types. Accept the defaults and click OK. This option will read the file and perform its best guess on what the data types should be for the file.

With all the initial steps now done, drag over a Data Flow Task and name it Break Up File. In the data flow, drag over the Flat File source and point the source to the Chapter7SalesExtract.csv connection manager. Name the extract file Initial Extract.

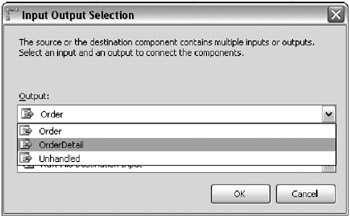

Next, drag over a Conditional Split and connect the Initial Extract source to the Conditional Split. Name the transform Read Record Type. As you already know, the Conditional Split is going to take data from a single input and break it up conditionally into multiple outputs much like a CASE statement in TSQL would do. The first case you will need to create should be called Order and the expression should be set to RecordType == 1. The second should be called Order Detail and the expression should be set to RecordType == 2. The Default output name option should just be called Unhandled. Your final configuration should resemble Figure 7-13.

Figure 7-13: Final configuration of Conditional Split

Finally, drag over two Raw File destinations. Rename one of the destinations to Order Extract and the other to Order Details Extract. Connect one output from the Conditional Split to the Order Extract destination and the other to Order Details Extract. Each time you drag the green arrow from the Conditional Split down to the destination, you will be prompted to define which output you want to send to the destination, as shown in Figure 7-14. Choose the output that corresponds with the destination that you are sending the data. The unhandled output won’t have anywhere to send the data to at this time, but in reality, you may send this data to an error queue.

Figure 7-14: Prompt to define which output you want to send to the destination

Raw files are a little unusual to configure, since they break a few of the standard rules for SSIS. For example, they don’t use connection managers. Inside the Order Extract destination, change the WriteOption property to Create Always. Change the FileName property to C:\ExpertSSIS\OrderData.raw, as shown in Figure 7-15. Even though you can’t use connection managers from within this destination, you could optionally set the destination to a variable that is dynamically set somewhere else. Next, go to the Input Columns tab and check only the OrderID column to send to the file.

Figure 7-15: Changing the FileName property

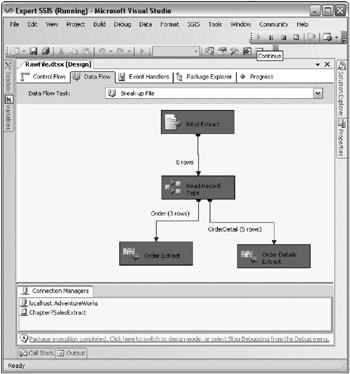

For the Order Details Extract raw file destination, change the FileName property to C:\ExpertSSIS\ OrderDetailsData.raw and the WriteOption to Create Always. The in the Input Columns tab, check every column except the RecordType column. Exit the editor and execute the package. The package execution should look like Figure 7-16 with three records being written to the Orders file and five being written to the Order Details file.

Figure 7-16: Package execution with files written to Orders and Order Details files

The hard work of the package is now complete. You can download the rest of the package at www.wiley.com to see the complete example. The script to create the target tables is called Chapter7ExampleOrderTables.sql. There are two more Data Flow Tasks to add to complete the example. One Data Flow Task would load the parent table (ExampleOrder) and the other would load the child table (ExampleOrderDetails); both Data Flows would load their respective tables using the newly created raw files as sources.

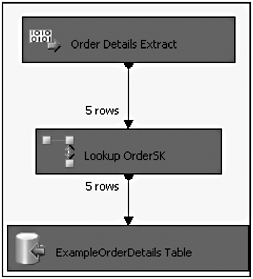

Note that for the ExampleOrderDetails table, you will need to add a Lookup transform, as shown in Figure 7-17, to look up the primary key from the Orders table to populate the child table. This OrderSK column is an identity column that represents a unique order.

Figure 7-17: Adding a Lookup transform

As you can see, raw files provide a fast way to provide reliability in your data flow. In this example’s case, raw files helped to separate the extract, transform, and load steps into distinct steps in the package.

EAN: 2147483647

Pages: 111