CPU

SQL Server 2005 operates in a very different environment than previous versions of SQL Server. When SQL Server 2000 was launched, a large server used for SQL might have two, or maybe even four, processors. Now SQL Server 2005 is able to run on the largest servers, with 64 processors, and up to 1TB of RAM running Windows Server 2003 Data Center Edition. Currently, there is a bewildering array of processor options to consider when thinking about a new system. SQL Server 2005 can run on a wide variety of processors:

-

32-bit processors: X86

-

32-bit with 64-bit extension processors: X64

-

64-bit processors: IA64

What follows is a short introduction to these different processor families, along with a short discussion of some of the factors that influence making a decision about which processor you should use.

32-bit X86 Processors

32-bit systems are fast becoming replaced by 64-bit systems. The only reason to purchase a 32-bit system today is cost, and a certainty that the system will never need more than 2 to 4GB of physical memory.

64-bit

If you're considering purchasing new servers today, you should seriously be considering 64-bit processors. Even when the server is only going to run 32-bit applications, the capability to increase memory beyond 4GB without resorting to PAE, and to greater than 64GB at all, means that a 64-bit system should be your first choice.

X64 or IA64

The key factors when deciding between X64 and IA64 are cost, availability, scalability, and processor speed. It's always dangerous to generalize, but today most X64 processors have faster clock speeds than IA64 systems. The current state of processor clock speed is such that X64 processors are running at up to 3GHz, whereas IA64 processors have been stuck at 1.6GHz for some time now, with no sight of an increase in the foreseeable future.

However, processor speed alone can be misleading, as most IA64 processors have larger caches than X64 processors. The larger cache can help minimize the disadvantage of slower processor speeds. X64 processors are typically available in a wider range of servers than IA64 systems, which are increasingly only found in specialized machines, either high-performance workstations or very large highly scalable systems from a few specialist vendors.

Hyperthreading

You want to answer two questions about hyperthreading: First, should you purchase processors with hyperthreading capability? If you do, then the second question is whether you should run with hyperthreading enabled or disabled. This is a very difficult question to answer, and a one-size-fits-all approach will not work. Any answer must involve each customer testing for themselves.

One of the most important factors when considering hyperthreading is to understand the maximum theoretical performance benefit that you might get from hyperthreading. Intel's documentation on hyperthreading reveals that the maximum theoretical performance gain from hyperthreading is 30 percent. Many people running with hyperthreading enabled for the first time expect to see double the performance, because they see two processors. You should understand that hyperthreading is only ever going to give you a maximum performance increase of 1.3 times non-hyperthreaded performance, and in practice it will be closer to 1.1 to 1.15 times. This knowledge helps put any decision about hyperthreading back into perspective.

In some cases hyperthreading, at least theoretically, won't provide any benefit. For example, in any workload where the code is running a tight loop entirely from cache, hyperthreading won't provide any benefit because there is only a single execution engine. In some cases this scenario will result in degraded performance because the OS will try to schedule activity on a CPU that isn't physically there.

Another scenario in which hyperthreading can directly affect SQL Server performance is when a parallel plan might be chosen. One of the things a parallel plan does is split the work across the available processors with the assumption that each processor will be able to complete the same amount of work in the given time. In a hyperthreading-enabled scenario, any thread that's not currently executing will be stalled until the other thread on that processor completes.

No one can yet tell you whether hyperthreading will help or hurt your performance when running your workload. Plenty of theories abound about how it might impact different theoretical workloads, but no one has yet been able to come up with prescriptive guidance that definitely says turn it on here and off over there. Unfortunately, that leaves the customer with the burden of figuring it out for themselves.

That said, however, there are a few things to consider that can help you make your decision. First, the operating system can make a big difference. Windows 2000 wasn't hyperthreading-aware, whereas Windows Server 2003 is, so expect to see better performance on Windows Server 2003 compared to Windows 2000. In addition, Windows Server 2003 Service Pack 1 added additional hyperthreading awareness, so make sure you are running SP1.

Something else to consider is that hyperthreading itself has been evolving, and although we haven't found any concrete evidence of changes in how hyperthreading is implemented on different Intel processors, feedback from the field seems to indicate that it's getting better with each generation of processors that use it. The point here is that if the customer is trying hyperthreading on an older server, then they may not see as much benefit as they would if they used it on a server with the very latest generation of processors.

Microsoft's licensing policy is the opposite of its competitors in that they license by the socket, not by the core or thread. This means that in some configurations it's possible for the operating system (and SQL Server) to recognize up to eight processors (threads) and still run on a low-end system SKU such as Windows Server 2003 Standard Edition.

Because it's so important to our discussion of hyperthreading, we close this section by repeating the following: The maximum theoretical performance improvement with hyperthreading is only 30 percent compared to non-hyperthreaded applications. In practice, this will actually be a maximum of maybe just 10–15 percent. Moreover, for a lot of customers this is going to be very difficult to measure, especially if they are running testing with a workload that doesn't produce consistent results in the first place.

Cache

The reason modern processors need cache is because the processor runs at 2 to 3GHz, and main memory simply cannot keep up with the processor's appetite for memory. To try to alleviate this, processor designers added several layers of cache to keep recently used memory in small, fast memory caches so that if you need to reuse memory it might already be in cache. In addition, because of the way cache works, it doesn't just load the byte requested, but the subsequent range of addresses as well. The amount of memory loaded on each request is determined by the cache line size, and the processor's caching algorithm pre-fetch parameters.

The cache on modern processors is typically implemented as multiple layers (L1, L2, L3). Each subsequent layer is physically farther from the processor core, and becomes larger and slower until we are back at main memory. Some caches are general purpose and hold copies of any memory (L2 and L3). Other caches are very specific and hold only address lookups (TLB), data (L1 data), or instructions (instruction cache). Typically, L1 is smaller and faster than L2, which is smaller and faster than L3. L3 cache is often physically located on a separate chip, and so is farther from the processor core than L1 or L2, but still closer than main memory.

Processor cache is implemented as a transparent look-through cache. This means that controlling functions on the chip manage the process of filling the cache and managing cache entries. At this point, we should consider some SQL Server specifics.

SQL Server 2005 is a considerably more complex product than SQL Server 2000. Building in all this additional complexity is achieved at the cost of additional lines of code, which results in additional bytes in the size of the final exe. Sqlservr.exe. The exe for SQL Server 2000 is 8.9MB; the exe for SQL Server 2005 has grown considerably and is now weighing in at 28MB. Moreover, simply starting the service requires more system resources. SQL Server 2000 runs in about 29MB of memory. (You can easily see this by using the Task Manager and looking at the Mem Usage column for SQL Server. The Mem Usage column provides an approximation of the working set for the process.) Connecting with SQLCMD and issuing a simple query, such as selecting a name from master..sysdatabases, adds another 0.5MB to that number. SQL Server 2005 uses around 50MB of memory just to start. On my test server, the Task Manager Mem Usage column reports 49,604KB. Connecting with SQLCMD and issuing the same command causes that to grow to over 53MB (53,456 KB).

All this increased complexity results in a lot more code to do the same operations in SQL Server 2005 than in SQL Server 2000. This manifests itself as an increase in sensitivity to cache size, so the smaller the cache, the slower you might run, and vice versa. In other words, if you have a choice between two processors running at the same speed, go for the one with the largest cache. Unfortunately, the increased cache usually comes at a price. Whether that additional cost is worthwhile is very difficult to quantify. If you have the capability to run a test on both processors, this is the best way to determine the potential improvement. Try to come up with a test that delivers a specific metric that you can factor against the cost to deliver a clear indication of the cost/benefit of larger cache.

Note one qualification to the information just presented in the preceding paragraph. SQL Server 2005 adds a lot more complexity to how it determines the best plan for executing your query. You will see this in an increase in the time it takes to compile a query, and in most cases a reduction in the query execution time. This means that the first time a new query is run, there is a chance that it may actually take longer to run than in SQL 2000, but future executions of the plan from cache will execute considerably faster.

This is an excellent reason to make use of any feature that enables you to reuse a plan. As long as SQL Server has taken all that time to figure out a fast way to get you your data, reuse that plan as much as you can before it's thrown away. This enables you to optimize your time. One sure way to fail to take advantage of this, and to set yourself up for worse performance, is to issue only ad hoc statements, which ensures there is no plan reuse. In addition, you will spend a lot of time compiling statements that are never reused.

Multi-Core

One of the biggest challenges facing multi-processor system designers is how to reduce the latency caused by the physical limitations of the speed of light and the distance between processors and memory. One solution is to put multiple processors on a single chip. This is what a multi-core system does, and it provides more potential for better performance than a single-core system because of this reduced latency between processors and memory. The big question is, do you need a multi-core system?

The answer to this depends on how many processors you need and how much you are willing to pay. If you need an eight-processor system, you will get a potentially faster system for less money by purchasing a quad-socket dual-core system, rather than an eight-socket single-core system.

In most cases, a multi-core system will deliver 1.6 to 1.8 times the performance of a single-core system. This is pretty much in line with the performance of a dual-socket system, making it a very good option. For the latest information on this issue, check out what the hardware vendors are doing with their Transaction Processing Council numbers (see www.tpc.org). The TPC results are a great way to compare hardware, although some hardware vendors don't publish results for the systems you want to compare.

Another factor to consider is scalability. Rather than purchase a straightforward dual-socket, single-core system, you can purchase a dual-socket system with a single-core processor that is capable of being upgraded to dual-core processors in the future. This way, you can defer the expense of adding dual-core processors when you need to add more processing power.

Before continuing, it's worth defining some clear terminology here to avoid confusion when discussing multi-core systems:

-

The socket is the physical socket into which you plug the processor. Before multi-core systems arrived, there used to be a direct one-to-one relationship between sockets and execution units.

-

A core is equivalent to an execution unit, or what you would previously have considered to be a processor. With a multi-core processor there will be two or more of these per socket.

-

A thread in this context is not the same as the thread you might create in your program, or the operating system threads; it is only relevant in the context of hyperthreading. A hyperthreading thread is not a new execution unit, but a new pipeline on the front of an existing execution unit. See the section "Hyperthreading" for more details on how this is implemented.

Now let's look at a specific example to illustrate what this actually means.

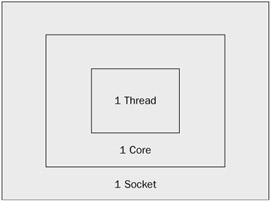

Figure 11-4 shows a single-socket, single-core processor with no hyperthreading, which results in one thread.

Figure 11-4

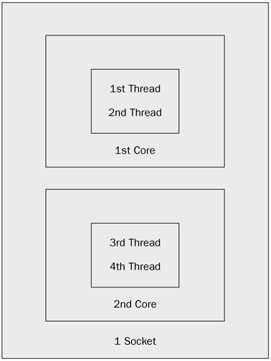

Figure 11-5 shows a single-socket, multi-core processor with hyperthreading, which results in four threads, licensed as one processor.

Figure 11-5

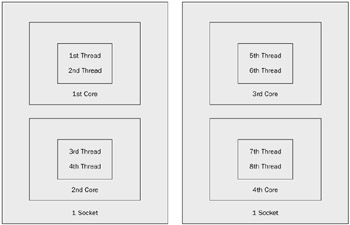

Figure 11-6 shows a dual-socket, dual-core processor with hyperthreading, which results in eight threads, licensed as two processors.

Figure 11-6

System Architecture

Another key purchasing decision has to be made regarding the machine architecture. For systems up to eight processors there really isn't much choice. Single-socket, dual-socket, quad-socket, and even most eight-way systems are only available in a symmetric multi-processor (SMP) configuration. It's only when you move to 16-, 32-, or 64-way systems that you need to consider the options of SMP versus NUMA. This isn't something you actually need to configure, but rather something you should understand as an option when considering the purchase of one type of system versus another.

Symmetric Multi-Processing

A symmetric multi-processor (SMP) system is a system in which all the processors are connected to a system bus in a symmetric manner. All processors have to share access to system resources over a common system bus. This architecture works great for smaller systems in which the physical distance between resources is short, the number of resources is small, and the demand for those resources is small.

NUMA

NUMA stands for non-uniform memory access; this architecture is often also referred to as ccNUMA, meaning a cache-coherent version of NUMA. The main difference between an SMP system and a NUMA system is where the memory is connected to, and how processors are arranged on the system bus.

Whereas on an SMP system the memory is connected to all the processors symmetrically via a shared bus, on a NUMA system each group of four processors has its own pool of "local" memory. This has the advantage that each processor doesn't pay a cost of going to a bus past more than four processors to access memory, provided the data it wants is in the local memory pool. If the data it wants is in the memory pool from another node, then the cost of accessing it is a little higher than on an SMP system. Therefore, one of the objectives with a NUMA system is to try to maximize the amount of data you get from local memory, as opposed to accessing memory on another node.

NUMA systems typically have a four-sockets-per-node configuration, and implement multiple nodes up to the current system maximum of sixteen nodes. This fully populated configuration delivers a full 64 processors to the operating system.

Smaller configurations that are any multiple of a four-socket node can usually be accommodated, allowing for highly configurable servers, and highly scalable servers. For example, a company could start with a single four-socket node and scale up all the way to 16 four-socket nodes, for 64 sockets.

One of the problems with NUMA systems is that as they grow, the issues of maintaining cache coherency also grow, introducing management overhead. Another problem is that as the number of nodes increases, the chance of the data you want being local is reduced.

The operating system must also be NUMA aware to schedule threads and allocate memory local to the node, which is more efficient than allocating nonlocal memory or scheduling a thread to run on a different node. Either of these actions incurs an overhead of node memory access, either to fetch data or to fetch the thread's data from the old node and transfer it to the new node.

EAN: 2147483647

Pages: 193