CPU Considerations

The challenge in building a large SMP is that, because CPUs have increased performance through technology upgrades and through the use of increasingly larger caches, the performance gains achieved through leveraging these caches are significant. Consequently, you need to cache relevant data whenever possible, to allow processors to have relevant data available and resident in their caches. Chip and hardware vendors have attempted to capitalize on this phenomenon through expansion of the processor and system cache.

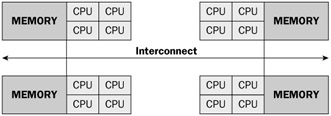

As a result of this phenomenon, new system architectures such as Cellular Multiprocessing (CMP), CC-NUMA, as seen in Figure 12-11, and NUMA (noncache coherent) have been introduced to the market.

Figure 12-11

Although this has been successful, it has produced two new challenges: the need to manage data locality and cache coherency.

Data Locality

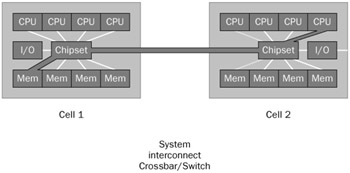

Data locality is the concept of having all relevant data locally available to the processor while it's processing a request. All memory within a system is available to any processor on any cell. This introduces the concept of near and far memory. Near memory is the preferred method, since it is accessed by a processor on the same cell. As shown in Figure 12-12, accessing far memory is expensive because the request has to leave the cell and traverse the system interconnect crossbar to get the cell that holds the required information in its memory.

Figure 12-12

The cost of accessing objects in far memory versus near memory is often threefold or more. If data locality is left unmanaged in a large SMP system, it can prevent an application from scaling due to data locality issues. SQL Server 2000 SP4 (Post SP3 QFE available) introduced innovations to better scale on this platform.

Cache Coherency

For reasons of data integrity, only one processor can update any piece of data at a time; other processors that have copies in their caches will find out that their local copy is "invalidated" and thus must be reloaded. This mechanism is referred to as cache coherency. Cache coherency requires that all the caches are in agreement as to the location of all copies of the data and which CPU currently has permission to perform the update. Supporting coherency protocols is one of the major scaling problems in designing big SMPs, particularly if there is a lot of update traffic. This was also addressed with the introduction of NUMA and CMP architectures.

SQL Server 2005 has been optimized to take advantage of NUMA advancements exposed by both Windows and the hardware itself. As discussed in the "Memory Consideration" section, SQL OS is the technology the SQL Server leverages to exploit these advances.

Affinity mask

The affinity mask configuration option restricts SQL Server (or an instance) to run on a subset of the processors. If SQL Server 2005 is running on a dedicated server (and nothing else), allowing SQL Server to use all processors will ensure best performance. In a server consolidation or multiple-instance environment, SQL Server should always be configured to dedicated hardware resources (affinitizing processors by instances).

The benefits of Hyper-Threading in SQL Server workloads have been a matter of heated discussion. To ensure predictability of the environment, I recommend that this feature be disabled. When configuring SQL Server 2005, processor affinity should coincide with the number of processors or cores available to SQL Server. Hyperthreading is discussed in greater detail in Chapter 11.

| Note | If Hyperthreading is enabled, be sure to set affinity on CPUs, always setting the affinity on both logical processors on a physical processor to the same SQL Server instance. |

SQL Server Processor Affinity Mask

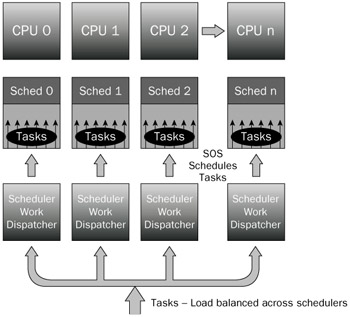

SQL Server's mechanism for scheduling work requests is handled through a data structure concept called a scheduler. There scheduler is created for each processor assigned to SQL Server through the affinity mask configuration setting at startup time. "Worker" threads (a subset of the max worker threads configuration setting) are dynamically created during a batch request and are evenly distributed between each CPU node and load-balanced across its schedulers (refer to Figure 12-13).

Figure 12-13

Incoming connections are assigned to the CPU node. SQL Server assigns the batch request to a task or tasks, and the tasks are managed across schedulers. Only one task at a time can be scheduled for execution by a scheduler on a CPU. A task is a unit of work scheduled by the SQL Server. This architecture guarantees an even distribution of the hundreds of connections or, in many cases, thousands of connections that can result from a large deployment.

Default SQL Server Work Scheduling

The default setting for affinity mask is 0, which enables the Windows scheduler to schedule and move schedulers across any available CPU within a CPU node to execute its worker threads. SQL 2005 in this configuration has its processes controlled and scheduled by the Windows scheduler. For example, a client requests a connection, and the connection is accepted. If no threads are available, one is dynamically created and associated with a task. The work assignments from the scheduler to the CPUs are managed through the Windows scheduler, which has its work distributed among all processors within a CPU node. This is the preferred method of execution, since Windows load-balances the schedulers evenly to all processors.

SQL Server Work Scheduling using Affinity Mask

The affinity mask configuration setting can be used to assign a subset of the available CPUs to the SQL Server process. SQL Server worker threads are scheduled preemptively by the scheduler. A worker thread will continue to execute on its CPU until it is forced to wait for a resource, such as locks or I/O, to become available. If the time slice expires, the thread will voluntarily yield, at which time the scheduler will select another worker thread to begin execution. If it cannot proceed without access to a resource such as disk I/O, it sleeps until the resource is available. Once access to that resource is available, the process is placed on the run queue before being put on the processor. When the kernel transfers control of the CPU from an executing process to another that is ready to run, this is referred to as a context switch.

Context switching

Context switching is expensive because of the associated housekeeping required to move from one running thread to another. Housekeeping is the maintenance of keeping the context or the set of CPU register values and other data that describes the process state. The kernel then loads the context of the new process, which then starts to execute. When the process taken off the CPU next runs, it resumes from the point at which it was taken off the CPU. This is possible because the saved context includes the instruction pointer. Also, context switching takes place in kernel or privileged mode.

The total CPU processing time is equal to the privileged mode time plus the user mode time.

Privileged mode

Privileged mode is a processing mode designed for operating system components and hardware-manipulating drivers. It allows direct access to hardware and all memory. Privileged time includes time-servicing interrupts and deferred process calls (DPC).

User mode

User mode is a restricted processing mode designed for applications such as SQL Server, Exchange, and other application and integral subsystems. The goal of performance tuning is to maximize user mode processing by reducing privilege mode processing. This can be monitored with the Processor: % Privileged Time counter, which displays the average busy time as a percentage of the sample time. A value above 15 percent may indicate that a disk array is being heavily utilized or a high level of network traffic requests. In some rare cases, a high rate of privileged time might even be attributed to a large number of interrupts generated by a failing device.

Priority Boost

By enabling Priority boost, SQL Server runs at a priority base of 13 in the Windows 2003 scheduler rather than its default of 7. On a dedicated server, this might improve performance, although it can also cause priority imbalances between SQL Server functions and operating system functions, leading to instability. Improvements in SQL Server 2005 and Windows Server 2003 make the use of this option unnecessary.

| Note | Priority boost should not be used when implementing failover clustering. |

SQL Server Lightweight Pooling

Typically, context switching does not become problematic until it reaches about 40,000 switches per second. The SQL Server Lightweight Pooling option provides relief for this by enabling tasks to use NT "fibers" rather than threads as workers.

A fiber is an executable unit that is lighter than a thread and operates in the context of user mode. When light-weight pooling is selected, each scheduler uses a single thread to control the scheduling of work requests by multiple fibers. A fiber can be viewed as a "lightweight thread," which under certain circumstances takes less overhead than standard worker threads to context switch. The number of fibers is controlled by the max worker threads configuration setting.

Max degree of parallelism (MAXDOP)

By default, this setting is set to 0, which enables the SQL Server to consider all processors when creating an execution plan. In some systems, based on application-workload profiles, it is recommended to set this setting to 1 (recommended for SAP & Siebel); this prevents the query optimizer from choosing parallel query plans. Using multiple processors to run a query is not always desirable in an OLTP environment, although desirable in a data warehousing environment.

| Note | In SQL Server 2005, you can now assign query hints to individual queries to control the degree of parallelism. |

Partitioned table parallelism is also affected by the MAXDOP setting. Returning to the example used in the "Storage Alignment" section, a thread would have been leveraged across each partition also. Had the query been limited to a single partition, multiple threads would be spawned (up to MAX DOP setting) within that partition.

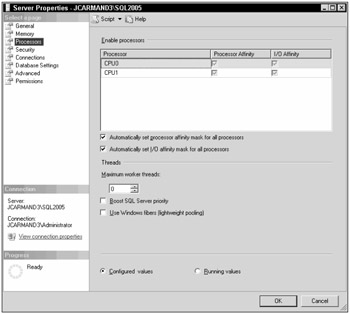

Affinity I/O mask

The affinity I/O mask feature, shown in Figure 12-14, was introduced with SP1 of SQL Server 2000. This option defines the specific processors on which SQL Server I/O threads can execute. The affinity I/O mask option has a default setting of 0, indicating that SQL Server threads are allowed to execute on all processors. The performance gains associated with enabling the affinity I/O mask feature are achieved by grouping the SQL threads that perform all I/O tasks (Nondata Cache retrievals, specifically physical I/O requests) on dedicated resources. This keeps I/O processing and related data in the same cache systems, maximizing data locality and minimizing unnecessary bus traffic.

Figure 12-14

When using affinity masks to assign processor resources to the operating system, either SQL Server processes (non-I/O) or SQL Server I/O processes, care must be exercised not to assign multiple functions to any individual processor.

You should consider SQL I/O affinity when there is high privileged time on the processors that aren't affinitized to SQL Server. For example, consider a 32-processor system running under load with 30 of the 32 processors affinitized to SQL Server (leaving two processors to the Windows O/S and other non-SQL activities). If the Processor: % privileged time is high (greater than 15%), SQL I/O affinity can be incorporated to help reduce the privileged-time overhead in processors associated with SQL Sever.

The following is the process for determining what values to set for SQL I/O affinity.

-

Add I/O capability until there's no I/O bottleneck (Disk Queue length has been eliminated) and all unnecessary processes have been stopped.

-

Add a processor designated to SQL I/O Affinity.

-

Measure the CPU utilization of these processors under heavy load.

-

Increase the number of processors until the designated processors are no longer peaked. Make sure you select processors that are in the same cell.

Max Server Memory

When max server memory is kept at the default setting, SQL Server acquires and frees memory in response to internal pressure and external pressure. Dynamic AWE is only supported on Windows Server 2003. For SQL Server implementations based on Windows Server 2003, a max server memory setting is optional but recommended. For Windows 2000 implementations, the setting max memory value is strongly recommended.

Index Creation Memory Option

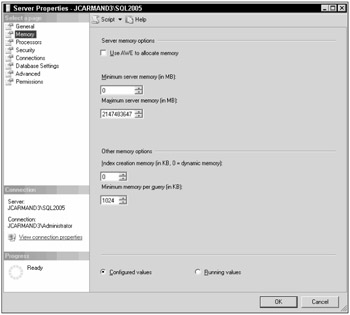

The index creation memory setting, shown in Figure 12-15, determines how much memory can be used by SQL Server for sort operations during the index-creation process. The default value of "0" allows the SQL Server to automatically determine the ideal value as seen in the figure below. In conditions where index creation is performed on very large tables, preallocating space in memory will allow for a faster index-creation process.

Figure 12-15

Remember that once memory is allocated it is reserved exclusively for the index-creation process, and values are set in KB of memory.

Min Memory per Query

You can use the 'min memory per query' option to improve the performance of queries that use hashing or sorting operations. The SQL Server automatically allocates the minimum amount of memory set in this configuration setting. The default 'min memory per query' option setting is equal to 1024 KB, which can be seen in Figure 12-15. It is important to ensure that SQL Server environment has plenty of memory available. In an environment with high query-execution concurrency, if the setting is set too high, the server will wait for a memory allocation to meet the minimum memory level before executing a query.

EAN: 2147483647

Pages: 193