1.3 What Is Concurrent Programming?

|

| < Day Day Up > |

|

1.3 What Is Concurrent Programming?

The purpose of this book is to help programmers understand how to create concurrent programs. Specifically, it is intended to help programmers understand and program special concurrent objects, called concurrent components. [1] Because these components are used only in concurrent programs, a good definition of a concurrent program is needed before components can be defined and methods given for their implementation. This section provides a good working definition of a concurrent program after first explaining why concurrent programming is an important concept for a programmer to know. The working definition of a concurrent program provided here will serve as a basis for understanding concurrent programming throughout the rest of the book.

1.3.1 Why Do Concurrent Programming?

The first issue in understanding concurrent programming is to provide a justification for studying concurrent programming. Most students and, indeed, many professional programmers have never written a program that explicitly creates Java threads, and it is possible to have a career in programming without ever creating a thread. Therefore, many programmers believe that concurrency in programming is not used in most real systems, and so it is a sidebar that can be safely ignored. However, that the use of concurrent programming is hidden from programmers is itself a problem, as the effects of a concurrent program can seldom be safely ignored.

When asked in class, most students would say they that have never implemented a concurrent program, but then they can be shown Exhibit 1 (Program1.1). This program puts a button in a JFrame and then calculates Fibonacci numbers in a loop. The fact that there is no way to set the value of stopProgram to false within the loop implies that the loop is infinite, and so it can never stop; however, when the button is pressed the loop eventually stops. When confronted with this behavior, most students correctly point out that when the Stop Calculation button is pressed the value of stopProgram is set to true and the loop can exit; however, at no place in the loop is the button checked to see if it has been pressed. So, some mechanism must be present that is external to the loop that allows the value of stopProgram to be changed. The mechanism that allows this value to be changed is concurrency.

Exhibit 1: Program1.1: A Program To Calculate Fibonacci Numbers

import java.awt.*; import java.awt.event.*; /** * Purpose: This program illustrates the presence of threads in * a Java program that uses a GUI. A button is created * that simply toggles the variable "stopProgram" to * false, which should stop the program. Once the * button is created, the main method enters an * infinite loop. Because the loop does not explicitly * call the button, there appears to be no way for the * program to exit. However, when the button is pushed, * the program sets the stopProgram to false, and * the program exits, illustrating that the button is * running in a different thread from the main method. */ public class Fibonacci { private static boolean stopProgram = false; public static void main(String argv[]) { Frame myFrame = new Frame("Calculate Fibonacci Numbers"); List myList = new List(4); myFrame.add(myList, BorderLayout.CENTER); Button b1 = new Button("Stop Calculation"); b1.addActionListener(new ActionListener() { public void actionPerformed(ActionEvent e) { stopProgram = true; } }); Button b2 = new Button("Exit"); b2.addActionListener(new ActionListener() { public void actionPerformed(ActionEvent e) { System.exit(0); } }); Panel p1 = new Panel(); p1.add(b1); p1.add(b2); myFrame.add(p1, BorderLayout.SOUTH); myFrame.setSize(200, 300); myFrame.show(); int counter = 2; while(true) { if (stopProgram) break; counter + = 1; myList.add("Num = "+ counter + "Fib = "+ fibonacci(counter)); myFrame.show(); } //Note: stopProgram cannot change value to true in the above //loop. How does the program get to this point? myList.add("Program Done"); } public static int fibonacci(int NI) { if (NI < = 1) return 1; return fibonacci(NI - 1) + fibonacci(NI - 2); } } What is happening in Exhibit 1 (Program1.1) is that, behind the scenes and hidden from the programmer, a separate thread, the Graphical User Interface (GUI) thread, was started. This thread is a thread started by Java that is running all the time, waiting for the Stop Calculation button to be pressed. When this button is pressed, the GUI thread runs for a short period of time concurrently with the main thread (the thread doing the calculation of Fibonacci numbers) and sets the value of stopProgram to true. Thus, Exhibit 1 (Program1.1) is a very simple example of a concurrent program. Because nearly every Java programmer at some point has written a program that uses buttons or other Abstract Window Tool Kit (AWT) or Swing components, nearly every Java programmer has written a concurrent program.

This brings up the first reason to study concurrent programming. Regardless of what a programmer might think, concurrent programming is ubiquitous; it is everywhere. Programmers using visual components in nearly any language are probably using some form of concurrency to implement those components. Programmers programming distributed systems, such as programs that run on Web servers that produce Web pages, are doing concurrent programming. Programmers who write UNIX ".so" (shared object) files or Windows ".com" or ".ddl" files are writing concurrent programs. Concurrency in programs is present, if hidden, in nearly every major software project, and it is unlikely that a programmer with more than a few years left in a career could get by without encountering it at some point. And, as will be seen in the rest of the book, while the fact that a program is concurrent can be hidden, the effects of failing to account for concurrency can result in catastrophic consequences.

The second reason to study concurrent programming is that breaking programs into parts using concurrency can significantly reduce the complexity of a program. For example, there was a time when implementing buttons, as in Exhibit 1 (Program1.1), involved requiring the loop to check whether or not a button had been pressed. This meant that a programmer had to consistently put code throughout a program to make sure that events were properly handled. Using threads has allowed this checking to be handled in a separate thread, thus relieving the program of the responsibility. The use of such threads allows programmers to write code to solve their problem, not to perform maintenance checks for other objects.

The third reason to study concurrent programming is that its use is growing rapidly, particularly in the area of distributed systems. Every system that runs part of the program on separate computers is by nearly every definition (including the one used in this book) concurrent. This means every browser access to a Web site involves some level of concurrency. This chain of concurrency does not stop at the Web server but normally extends to the resources that the Web server program uses. How to properly implement these resources requires the programmer to at least understand the problems involved in concurrent access or the program will have problems, such as occasionally giving the wrong answer or running very slowly.

The rest of this text is devoted to illustrating how to properly implement and control concurrency in a program and how to use concurrency with objects in order to simplify and organize a program. However, before the use of concurrency can be described, a working definition of concurrency, particularly in relationship to objects, must be given. Developing that working definition is the purpose of the rest of this chapter.

1.3.2 A Definition of Concurrent Programming

Properly defining a concurrent program is not an easy task. For example, the simplest definition would be when two or more programs are running at the same time, but this definition is far from satisfactory. For example, consider Exhibit 1 (Program1.1). This program has been described as concurrent, in that the GUI thread is running separately from the main thread and can thus set the value of the stopProgram variable outside of the calculation loop in the main thread. However, if this program is run on a computer with one Central Processing Unit (CPU), as most Windows computers are, it is impossible for more than one instruction to be run at a time; thus, by the simple definition given above, this program is not concurrent.

Another program with this simple definition can be illustrated by the example of two computers, one running a word processor in San Francisco and another running a spreadsheet in Washington, D.C. By the definition of a concurrent program above, these are concurrent. However, because the two programs are in no way related, the fact that they are concurrent is really meaningless.

It seems obvious that a good definition of concurrent programming would define the first example as concurrent and the second as not concurrent; therefore, something is fundamentally wrong with this simple definition of concurrent programming. In fact, the simple-minded notion of concurrency involving two activities occurring at the same time is a poor foundation on which to attempt to build a better definition of the term concurrency. To create a definition of concurrency that can be used to describe concurrent programming, a completely new foundation needs to be built. A better, workable definition is supplied in the rest of Section 1.3.2.

1.3.2.1 Asynchronous Activities

Defining a concurrent program begins by defining the basic building block of a program which will be called an activity. An activity could be formally defined as anything that could be done by an abstract Turing machine or as an algorithm. However, what is of interest here is a working definition, and it is sufficient to define an activity as simply a series of steps implemented to perform a task. Examples of an activity would be baking a pie or calculating a Fibonacci number on a computer. The steps required to perform a task will be called an ordering.

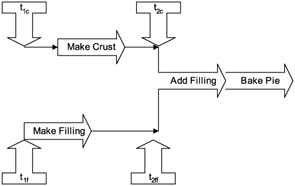

Activities can be broken down into subactivities, each an activity itself. For example, baking a pie could consist of making the crust, making the filling, filling the crust with the filling, and baking the pie. For example, Exhibit 2 shows the steps in baking a pie, where the crust must first be made, then the filling made, the filling added to the crust, and the pie baked. If the order of these activities is completely fixed, then the ordering is called a total ordering, as all steps in all activities are ordered. In the case of a total orderings of events, the next step to be taken can always be determined within a single activity. An activity for which the order of the steps is determined by the activity is called a synchronous activity. Note that partial orderings are also controlled by synchronous activities; these are implemented by the programming equivalent of "if" and "while" statements.

Exhibit 2: Synchronous Activity to Make a Pie

![]()

In the case of making a pie it is not necessary to first make the crust and then make the filling. The filling could be made the night before, and the crust could then be made in the morning before combining the two to make a pie. If the order in which the crust and the filling are made can be changed, then the ordering is called a partial ordering (the order of steps to make the crust and the order of steps to make the filling remain fixed, but either can be done first). However, if one activity must always finish before the other begins, it is possible to implement this behavior with a synchronous activity.

A special case occurs when, for a partial ordering, the next step is not determined by a single activity. To show this, several values of time must be defined. The time after which preparing the crust can be started is t1c, and the time that it must be completed is t2c. The time after which preparing the filling can be started is t1f, and the time that it must be completed is t2f. Now, if [(t1c < = t1f < t2c)║(t1f < = t1c < t2f)], then the activities of making the crust and the filling can (but do not necessarily have to) overlap. If the steps overlap, then the overall ordering of the steps cannot be determined within any one task or, thus, any one activity. One example of this situation for baking a pie is illustrated in the Gant chart in Exhibit 3. Note that many other timelines are possible, as the crust does not have to start at t1c, nor does it have to end at t1f; it simply has to occur between those two times. The same is true of making the filling. The two activities might not actually overlap; it is sufficient that they can overlap.

Exhibit 3: One Possible Example of Asynchronous Activities in Making a Pie

The only way that these two activities can overlap in this manner is if the lists of steps for the activities are being executed independently. For example, it is possible that two bakers are responsible for the pie, one making the filling and the other making the crust. It is also possible that one baker is responsible for both the crust and filling, but they are switching back and forth from doing steps from one part of the recipe (making the crust) to another part of the recipe (making the filling). However they are accomplished, by the definition given here the steps involved in the two subtasks are being executed independently, or asynchronously, of each other. This type of activity is called an asynchronous activity.

The definition of an asynchronous activity leads to a very simple definition of concurrency: Concurrency is defined as the presence of two or more asynchronous activities.

When asynchronous activities are present in a program, it is possible (but not necessary) for the steps for the two activities to interleave. As we will see in Chapter 2, the number of different ways they can interleave can be quite large, and the results can be quite unexpected. However, note that from the definition of asynchronous activities the two activities do not have to run at the same time; they simply have to be able to run at the same time. This is a useful distinction, because the problems that will be encountered in concurrency occur not because the activities execute at the same time but because they can interleave their executions. It is also useful because if a program allows activities to interleave, it must protect against the ill effects of that interleaving whether it occurs or not. As will be seen, this means that methods that might be used concurrently must be synchronized even if the vast majority of the time the use of the synchronized statement provides no benefit.

The importance of the improvement of this definition of concurrency over the definition of concurrency as multiple activities happening at the same time cannot be overemphasized. This definition implies the types of problems that can occur and the way to solve those problems. If a concurrent program does not actually run two activities at the same time, but it can do so, then action must be taken to make sure problems do not occur. Any argument as to whether these two activities are actually running at the same time, or if they generally run one after the other, is a moot point. Arguments about how the activities are actually implemented (for example, are priorities present in the system?) and how the implementation might affect the interactions (does the higher priority process always have to run first?) also do not matter. If the asynchronous activities are present, then the program must account for this behavior.

It should be noted that the definition of asynchronous activities solves the first problem with the definition of concurrency. The two threads running in Exhibit 1 (Program1.1) are asynchronous activities, thus they are concurrent. However, the two computers running in different cities are also asynchronous activities, so the definition of concurrent programming must be further tightened.

1.3.2.2 Synchronization of Asynchronous Activities

That two or more asynchronous activities are concurrent is a good definition of concurrency, but it is not a useful definition. As was mentioned before, two asynchronous activities that are unrelated are concurrent, but that does not mean that any particular action must be considered when reasoning about them. A useful definition requires that some interaction between the activities is needed. This interaction between activities requires that the activities must coordinate (or synchronize), so this section will define how synchronization affects the activities.

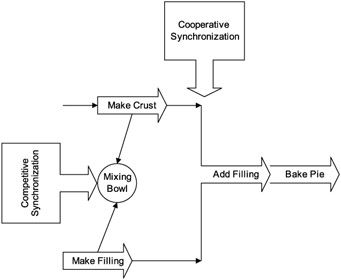

Sebesta [SEB99] says that, "Synchronization is a mechanism that controls the order in which tasks execute." In terms of activities, this definition suggests that, while the asynchronous activities represent separate control over the execution of steps in the activity, at times the asynchronous activities agree to come together and cooperate in order to create valid partial orderings within the activities. Sebesta defines two types of synchronization, competitive synchronization and cooperative synchronization. To see how synchronization affects the partial orderings within an asynchronous activity, examples of these two types of synchronization are given.

Exhibit 4 gives examples of both types of synchronization. The figure illustrates two asynchronous activities: making a pie crust and making a filling. These two activities will synchronize, first showing synchronization of a shared resource for competitive synchronization and, second, showing synchronization around an event for cooperative synchronization. To understand competitive synchronization, consider what would happen if both recipes called for mixing the ingredients in a large bowl but only one large bowl is available so both activities must use the same bowl. If both activities used the bowl without considering the actions of the other activity it would be possible to mix the filling and the crust at the same time, which would result in an incorrect solution to the problem of making the pie. Therefore, the two activities must compete for the use of the resource and synchronize on it with the rule that when one activity is using it the other cannot continue until the resource becomes free. In this example, the bowl is a shared resource that the two activities must synchronize on in order to correctly solve this problem, in this case referred to as competitive synchronization.

Exhibit 4: Competitive and Cooperative Synchronization

The second type of synchronization occurs when asynchronous activities must wait on an event to occur before continuing. In Exhibit 4, this occurs when the making of the pie crust and the filling must both be completed before the filling can be added to the crust and the pie baked. Because the two activities must cooperate in waiting for this event, this type of synchronization is called cooperative synchronization. Note that, while the synchronization does impose a partial ordering on the asynchronous activities, it does not make them synchronous. Except for when the activities must synchronize for some reason, they are still asynchronous.

1.3.2.3 Concurrent Programming

With the introduction of asynchronous activities and synchronization, the background is now in place to define concurrency. Concurrency is the presence of asynchronous activities that interact and thus must at some point in their execution implement either competitive or cooperative synchronization. This is a workable definition of concurrency as it requires activities that do not actually run at the same time but which behave as if they do. It also requires that they must synchronize to be considered concurrent.

Now that a workable definition of concurrency has been built, it is relatively easy to build a definition of a concurrent program:

Concurrent Program: A program that contains asynchronous activities which synchronize at one or more points or on one or more resources during execution.

By design, this definition does not specify how asynchronous activities are implemented in the program. These activities might be Ada tasks, UNIX processes, Pthreads, or Java threads. It also does not say how the synchronization is achieved, which once again could be through Ada select statements, UNIX operating system calls, or use of a Java synchronized statement. Further, it does not say how the activities communicate, whether by method calls in a single process, interprocess communications such as UNIX pipes, or Remote Method Invocation (RMI), to processes on completely different computers. These are all just details of individual concurrent programs, but the basic principals of concurrency will always be the same.

[1]The term component is poorly defined and is used in object-oriented programming. Because some readers might use the term in non-concurrent contexts, the concept is introduced as concurrent components here; however, all components in this book are concurrent components, so the concurrent part of the term will be dropped, and the term component will represent a concurrent component.

|

| < Day Day Up > |

|

EAN: 2147483647

Pages: 162