Web-Tier Performance Issues

We've already discussed the implications of web-tier session management for performance and scalability. Let's consider a few web-tier-specific performance issues we haven't covered so far.

View Performance

In Chapter 13 we looked at using several different view technologies to generate a single example view. We considered authoring implications and maintainability, but didn't consider performance, beyond general considerations.

Let's look at the results of running the Microsoft Web Application Stress Tool against some of the view technologies we discussed.

| Note | Note that I slightly modified the controller code for the purpose of the test to remove the requirement for a pre-existing user session and to use a single Reservation object to provide the model for all requests, thus avoiding the need to access the RDBMS. This largely makes this a test of view performance, eliminating the need to contact business objects, and avoids the otherwise tricky issue of ensuring that enough free seats were available in the database to fulfill hundreds of concurrent requests. |

The five view technologies tested were:

- JSP, using JSTL
- Velocity 1.3
- XMLC 2.1
- XSLT using on-the-fly "domification" of the shared Reservation object
- XSLT using the same stylesheet but a cached org.w3c.dom.Document object (an artificial test, but one that enables the cost of domification using reflection to be compared to that of performing XSLT transforms)

Each view generated the same HTML content. Both WAS and the application server ran on the same machine. However, operating system load monitoring showed that WAS did not consume enough resources to affect the test results. The XSLT engine was Xalan.

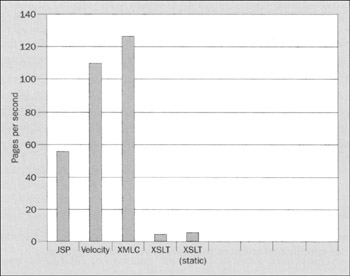

The following graph shows the results of running 100 concurrent clients continually requesting pages:

While the actual numbers would vary with different hardware and shouldn't be taken as an indication of likely performance in a production environment, the relative differences between them are more meaningful.

I was surprised by the very large spread of results, and reran the tests several times before I was convinced they weren't anomalous. JSP achieved around 54 pages per second; Velocity 112; XMLC emerged a clear winner at 128; while both XSLT approaches were far slower, at 6 and 7 pages per second respectively.

The conclusions are:

- View technology can dictate overall application performance, although the effect would be smaller if business objects had to do more work to satisfy each request. Interestingly, the difference between the performance of XSLT and that of the other technologies was significantly greater than the cost of including a round trip to the database in the same use case. While I had expected XSLT to prove the slowest view technology, like many developers I tend to assume that accessing a database will almost always be the slowest operation in any use case. Thus this test demonstrates the importance of basing performance decisions on empirical evidence.

- JSP shouldn't be an automatic choice on the assumption that, because JSP pages are compiled into servlets by the web container, it will outperform other view technologies. XMLC proved well over twice as fast, but it does involve commitment to a very different authoring model. To my mind, Velocity emerged the real winner: JSP's perceived performance advantage is one of the main arguments for preferring it to a simpler technology such as Velocity, and these results undermine that argument.

- Generating entire pages of dynamic content using XSLT is very slow, too slow to be an option in many cases. The cost of XSLT transforms is far greater than the cost of using reflection to "domify" JavaBean data on the fly. The efficiency of the stylesheet seems to make more difference than whether "domification" is used. Switching from a "rule-based" to a "fill-in-the-blanks" stylesheet boosted performance by about 30%, although eliminating the use of Java extension functions didn't seem to produce much improvement.
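To make the notion of "domification" concrete, here is a minimal sketch of reflection-based conversion of a JavaBean into a DOM tree using only the JDK's reflection and JAXP APIs. It is not the code used in the test above: the `Domifier` and `SeatSummary` names are hypothetical, and it handles only simple getter properties, with no nesting or collections.

```java
import java.lang.reflect.Method;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;

// Hypothetical bean standing in for the shared Reservation model object.
class SeatSummary {
    private final String performance;
    private final int seats;

    SeatSummary(String performance, int seats) {
        this.performance = performance;
        this.seats = seats;
    }

    public String getPerformance() { return performance; }
    public int getSeats() { return seats; }
}

class Domifier {
    /** Builds a DOM tree with one child element per public no-argument getter. */
    static Document domify(String rootName, Object bean) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().newDocument();
            Element root = doc.createElement(rootName);
            doc.appendChild(root);
            for (Method m : bean.getClass().getMethods()) {
                String name = m.getName();
                // Skip non-getters and getClass(), which is declared by Object.
                if (!name.startsWith("get") || name.length() == 3
                        || m.getParameterCount() != 0 || m.getDeclaringClass() == Object.class) {
                    continue;
                }
                String property = Character.toLowerCase(name.charAt(3)) + name.substring(4);
                Object value = m.invoke(bean); // a reflective call for every property, on every request
                Element child = doc.createElement(property);
                child.setTextContent(value == null ? "" : String.valueOf(value));
                root.appendChild(child);
            }
            return doc;
        } catch (ParserConfigurationException | ReflectiveOperationException e) {
            throw new IllegalStateException("Could not domify " + bean, e);
        }
    }
}
```

The test results suggest that this per-request reflection and DOM construction is cheap compared to the XSLT transform that then consumes the tree.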

| Important | The figures I've quoted here may vary between application servers: I would hesitate to draw the conclusion that XMLC and Velocity will always be nearly twice as fast as JSP. The Jasper JSP engine used by Jetty may be relatively slow. I've seen results showing comparable performance for all three technologies in Orion 1.5.3, admittedly with older versions of Velocity and XMLC. The fact that the JSP results were very similar on comparable hardware with Orion, while the new Velocity and XMLC results are much faster, suggests that Velocity and XMLC have been optimized significantly in versions 1.3 and 2.1 respectively. However, these results clearly indicate that we can't simply assume that JSP is the fastest option. Note also that a different XSLT implementation might produce better results, although there are good reasons why XSLT performance is unlikely to approach that of the other technologies. While it's important to understand the possible impact of using different products, we shouldn't fall into the trap of assuming that because there are so many variables in J2EE applications - application server, API implementations, third-party products, etc. - benchmarks are meaningless. Early in any project we should select an infrastructure to work with and make decisions based on its performance and other characteristics. Deferring such a decision, trusting to J2EE portability, may prove expensive. Thus performance on JBoss/Jetty with Xalan is the only meaningful metric for the sample application at this point. |

Web Caching Using HTTP Capabilities

We've discussed the various options for caching data within a J2EE application. There is another important caching option, which enables content to be cached by the client browser or between the client browser and the J2EE application server, based on the capabilities of HTTP.

There are two kinds of web caches that may prevent client requests going to the server, or may reduce the impact on the server of subsequent requests: browser caches, and proxy caches.

Browser caches are individual caches, held by client browsers on the user's hard disk. For example, looking in the C:\Documents and Settings\Rod Johnson\Local Settings\Temporary Internet Files directory on my Windows XP machine reveals megabytes of temporary documents and cookies. Some of the documents include GET parameters.

Proxy caches are shared caches, held by ISPs or organizations on behalf of hundreds or thousands of users.

Whether or not subsequent requests can be satisfied by one of these caches depends on the presence of standard HTTP header values, which we'll discuss below. If a request can be satisfied by the user's browser cache, the response will be almost instant. The user may also experience a much faster response if the content can be returned by a proxy cache inside an organization's firewall or maintained by an ISP, especially if the servers hosting the web site are a long way from the user. Both forms of caching are likely to greatly reduce load on the web application in question, and on Internet infrastructure as a whole. Imagine, for example, what would happen if content from sites such as an Olympic Games or football World Cup site were not proxied!

HTTP caching is often neglected in Java web applications. This is a pity, as the Servlet API provides all the support we need to make efficient use of it.

Cache Control HTTP Headers

The HTTP 1.0 and 1.1 protocols define several cache control header options: most importantly the HTTP 1.0 Expires header and the HTTP 1.1 Cache-Control header. Request and response headers precede document content. Response headers must be set before content is output to the response.

Without cache control headers, most caches will not store an object, meaning that successive requests will go directly to the origin server (in the present context, the J2EE web container that generated the page). However, this is not guaranteed by the HTTP protocols; if we don't specify caching behavior explicitly, we're at the mercy of the browser or proxy cache between origin server and client browser. The default behavior of each of these may vary.

The HTTP 1.0 Expires header sets a date before which a cached response should be considered fresh. This date is always expressed in GMT, not in the time zone of the origin server's or client's locale. Fortunately the Servlet API spares us the details of setting this information. The header will look like this:

Expires: Mon, 29 Jul 2002 17:35:42 GMT

Until that date, requests received by the cache for the given URL (including any GET parameters) can simply be served from cached data. The origin server need not be contacted again, guaranteeing the quickest response to the client and minimizing load on the origin server. Conversely, to ensure that the content isn't cached, the Expires date can be set to a date in the past.
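The Servlet API's setDateHeader() hides the formatting of these dates. For readers curious about what it produces, the following minimal sketch formats HTTP dates with java.time from a modern JDK; the `HttpDates` class and its methods are hypothetical helpers, not part of the Servlet API. It shows both a fresh-for-N-seconds Expires value and a past date that marks content as already stale.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;
import java.util.Locale;

class HttpDates {
    // RFC 1123 date as used in HTTP headers: English names, two-digit day, always GMT.
    private static final DateTimeFormatter HTTP_DATE =
            DateTimeFormatter.ofPattern("EEE, dd MMM yyyy HH:mm:ss 'GMT'", Locale.US)
                    .withZone(ZoneOffset.UTC);

    static String format(long epochMillis) {
        return HTTP_DATE.format(Instant.ofEpochMilli(epochMillis));
    }

    /** Expires value for content that stays fresh for the given number of seconds. */
    static String expiresAfter(long nowMillis, int seconds) {
        // Multiply as long: seconds * 1000 in int arithmetic overflows after about 24 days.
        return format(nowMillis + seconds * 1000L);
    }

    /** A date in the past (the epoch), telling caches the content is already stale. */
    static String alreadyExpired() {
        return format(0L);
    }
}
```

For a response generated at 17:30:42 GMT on 29 July 2002, `expiresAfter(now, 300)` yields the header value shown above, five minutes later.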

The HTTP 1.1 Cache-Control header is more sophisticated. It includes several directives that can be used individually or in combination. The most important include the following:

- The max-age directive specifies a number of seconds into the future for which the response should be considered fresh, without the need to contact the origin server. This is the same basic functionality as the HTTP 1.0 Expires header.

- The no-cache directive prevents a cache from serving cached data without successful revalidation with the origin server. We'll discuss the important concept of revalidation below.

- The must-revalidate directive forces a cache to revalidate with the server once the content has expired (otherwise caches may take liberties, such as serving stale content). This is typically combined with a max-age directive.

- The private directive indicates that the content is intended for a single user and therefore should not be cached by a shared proxy cache. The content may still be cached by a browser cache.
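Because these directives are combined as a comma-separated list in a single header value, a small builder can keep the combinations readable. This is a hypothetical helper (`CacheControlValue` is not part of the Servlet API or any framework discussed here), sketched only to show how the directives above compose.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: assembles a Cache-Control header value from directives.
class CacheControlValue {
    private final List<String> directives = new ArrayList<>();

    CacheControlValue maxAge(int seconds) {
        if (seconds < 0) {
            throw new IllegalArgumentException("max-age must not be negative");
        }
        directives.add("max-age=" + seconds);
        return this;
    }

    /** Cached copies may only be used after successful revalidation. */
    CacheControlValue noCache() {
        directives.add("no-cache");
        return this;
    }

    /** Once expired, the content must be revalidated before being served. */
    CacheControlValue mustRevalidate() {
        directives.add("must-revalidate");
        return this;
    }

    /** Browser caches may store the response; shared proxy caches should not. */
    CacheControlValue privateToUser() {
        directives.add("private");
        return this;
    }

    /** Directives are comma-separated within a single header value. */
    String headerValue() {
        return String.join(", ", directives);
    }
}
```

In a servlet, the result would be passed to `response.setHeader("Cache-Control", ...)`.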

Caches can revalidate content by issuing a new request for the content with an If-Modified-Since request header carrying the date of the version held by the cache. If the resource has changed, the server will generate a new page. If the resource hasn't changed since that date, the server can generate a response like the following, which is a successful revalidation of the cached content:

HTTP/1.1 304 Not Modified
Date: Tue, 30 Jul 2002 11:46:28 GMT
Server: Jetty/4.0.1 (Windows 2000 5.1 x86)
Servlet-Engine: Jetty/1.1 (Servlet 2.3; JSP 1.2; java 1.3.1_02)

Any efficiency gain, of course, depends on the server being able to do the necessary revalidation checks in much less time than it would take to generate the page. However, the cost of rendering views alone, as we've seen above, is so large that this is almost certain. When a resource changes rarely, using revalidation can produce a significant reduction in network traffic and load on the origin server, especially if the page weight is significant, while largely ensuring that users see up-to-date content.

Imagine, for example, the home page of a busy news web site. If content changes irregularly, but only a few times a day, using revalidation will ensure that clients see up-to-date information, but slash the load on the origin server. Of course, if content changes predictably, we can simply set cache expiry dates to the next update date, and skip revalidation. We'll discuss how the Servlet API handles If-Modified-Since requests below.
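When content is updated on a known schedule, the max-age value can be computed as the time remaining until the next update. The sketch below assumes a hypothetical fixed set of daily publishing times; `ScheduledExpiry` is an illustrative name, not an existing API.

```java
import java.time.Duration;
import java.time.LocalTime;
import java.time.ZonedDateTime;
import java.util.List;

class ScheduledExpiry {
    /** Seconds from now until the next of the given daily update times. */
    static long secondsUntilNextUpdate(ZonedDateTime now, List<LocalTime> dailyUpdates) {
        ZonedDateTime next = null;
        for (LocalTime updateTime : dailyUpdates) {
            // Today's occurrence of this update time, or tomorrow's if it has already passed.
            ZonedDateTime candidate = now.with(updateTime);
            if (!candidate.isAfter(now)) {
                candidate = candidate.plusDays(1);
            }
            if (next == null || candidate.isBefore(next)) {
                next = candidate;
            }
        }
        if (next == null) {
            throw new IllegalArgumentException("No update times given");
        }
        return Duration.between(now, next).getSeconds();
    }
}
```

With updates at 06:00, 12:00, and 18:00, a page generated at 10:00 could be cached for 7,200 seconds, and one generated at 19:00 until 06:00 the next morning.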

At least theoretically, these cache control headers should control even browser behavior on use of the Back button. However, it's important to note that proxy - and especially, browser - cache behavior may vary. The cache control headers aren't always honored in practice. However, even if they're not 100% effective, they can still produce enormous performance gains when content changes rarely.

This is a complex - but important - topic, so I've been able to provide only a basic introduction here. See http://www.mnot.net/cache_docs/ for an excellent tutorial on web caching and how to use HTTP headers. For a complete definition of HTTP 1.1 caching headers, direct from the source, see http://www.w3.org/Protocols/rfc2616/rfc2616-sec14.html.

The HTTP POST method is the enemy of web caches. POST pretty much precludes caching. It's best to use GET for pages that may be cacheable.

| Note | Sometimes HTML meta tags (tags in an HTML document's <head> section) are used to control page caching. This is less effective than using HTTP headers. Usually it will only affect the behavior of browser caches. Proxy caches are unlikely to read the HTML in the document. Similarly, a Pragma: no-cache header cannot be relied upon to prevent caching. |

Using the Servlet API to Control Caching

To try to ensure correct behavior with all browsers and proxy caches, it's a good idea to set both types of cache control headers. The following example, which prevents caching, illustrates how we can use the abstraction provided by the Servlet API to minimize the complexity of doing this. Note the use of the setDateHeader() method to conceal the work of date formatting:

response.setHeader("Pragma", "no-cache");
response.setHeader("Cache-Control", "no-cache");
response.setDateHeader("Expires", 1L); // a date in the past (January 1, 1970)

When we know how long content will remain fresh, we can use code like the following. The seconds variable is the time in seconds for which content should be considered fresh:

response.setHeader("Cache-Control", "max-age=" + seconds);
response.setDateHeader("Expires", System.currentTimeMillis() + seconds * 1000L);

To see the generated headers, it's possible to telnet to a web application. For example, I can use the following command to get telnet to talk to my JBoss/Jetty installation:

telnet localhost 8080

The following HTTP request (followed by two returns) will retrieve the content, preceded by the headers:

GET /ticket/index.html HTTP/1.0

Our second code example will result in headers like the following:

HTTP/1.1 200 OK
Date: Mon, 29 Jul 2002 17:30:42 GMT
Server: Jetty/4.0.1 (Windows 2000 5.1 x86)
Servlet-Engine: Jetty/1.1 (Servlet 2.3; JSP 1.2; java 1.3.1_02)
Content-Type: text/html;charset=ISO-8859-1
Set-Cookie: jsessionid=1027963842812;Path=/ticket
Set-Cookie2: jsessionid=1027963842812;Version=1;Path=/ticket;Discard
Cache-Control: max-age=300
Expires: Mon, 29 Jul 2002 17:35:42 GMT
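Where telnet isn't available, a few lines of Java can do the same job. This hypothetical `HeaderDump` sketch opens a socket, sends a request line with an explicit HTTP/1.0 version followed by an empty line (the "two returns"), and collects the response headers up to the blank line that separates them from the body. An explicit version matters: a request line without one may be treated as an old HTTP/0.9 request, to which servers reply without any headers.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

class HeaderDump {
    /** Request line plus the empty line that ends the (header-less) request. */
    static String buildRequest(String path) {
        return "GET " + path + " HTTP/1.0\r\n\r\n";
    }

    /** Returns the status line and headers; the body is not read. */
    static List<String> fetchHeaders(String host, int port, String path) throws IOException {
        try (Socket socket = new Socket(host, port)) {
            OutputStream out = socket.getOutputStream();
            out.write(buildRequest(path).getBytes(StandardCharsets.US_ASCII));
            out.flush();
            BufferedReader in = new BufferedReader(
                    new InputStreamReader(socket.getInputStream(), StandardCharsets.ISO_8859_1));
            List<String> headers = new ArrayList<>();
            String line;
            // Headers end at the first empty line.
            while ((line = in.readLine()) != null && !line.isEmpty()) {
                headers.add(line);
            }
            return headers;
        }
    }
}
```

Calling `HeaderDump.fetchHeaders("localhost", 8080, "/ticket/index.html")` against a running server and printing each line should produce a listing like the one above.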

The Servlet API integrates support for revalidation through the protected getLastModified() method of the javax.servlet.http.HttpServlet class, which is extended by most application servlets. The Servlet API Javadoc isn't very clear on how getLastModified() works, so the following explanation may be helpful.

When a GET request comes in (there's no revalidation support for POST requests), the service() method of javax.servlet.http.HttpServlet invokes the getLastModified() method. The default implementation of this method in HttpServlet always returns −1, a distinguished value indicating that the servlet doesn't support revalidation. However, the getLastModified() method may be overridden by a subclass. If an overridden getLastModified() method returns a meaningful value, the servlet checks whether the request contains an If-Modified-Since header. If it does, the dates are compared. If the request's If-Modified-Since date is the same as or later than the servlet's getLastModified() time, HttpServlet returns a response code of 304 (Not Modified) to the client. If the getLastModified() value is more recent, or there's no If-Modified-Since header, the content is regenerated and a Last-Modified header is set to the last modified date.
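The decision just described can be isolated as a pure function. The sketch below is modeled on the comparison performed in common HttpServlet implementations such as Tomcat's, which also truncate the last modified time to whole seconds because HTTP dates have one-second resolution; `ConditionalGet` and `decide()` are hypothetical names, not Servlet API methods.

```java
class ConditionalGet {
    enum Outcome { GENERATE, NOT_MODIFIED }

    /**
     * @param lastModified    value returned by getLastModified(), or -1 if unsupported
     * @param ifModifiedSince parsed If-Modified-Since header in milliseconds, or -1 if absent
     */
    static Outcome decide(long lastModified, long ifModifiedSince) {
        if (lastModified == -1) {
            return Outcome.GENERATE; // revalidation not supported: always regenerate
        }
        // Compare at one-second resolution, as HTTP dates carry no milliseconds.
        long lastModifiedSeconds = lastModified / 1000 * 1000;
        if (ifModifiedSince < lastModifiedSeconds) {
            return Outcome.GENERATE; // no header (-1) or cached copy is older
        }
        return Outcome.NOT_MODIFIED; // cached copy is current: send 304
    }
}
```

Without the truncation, a resource modified at 5,500 ms would never match a cache holding a copy stamped 5,000 ms, and every revalidation would regenerate the page.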

Thus to enable revalidation support, we must ensure that our servlets override getLastModified().

Implications for MVC Web Applications

Setting cache expiry information should be a matter for web-tier controllers, rather than model or view components. However, we can set headers in JSP views if there's no alternative.

One of the few disadvantages of using an MVC approach is that it makes it more difficult to use revalidation, as individual controllers (not the controller servlet) know the last modification date. Most MVC frameworks, including Struts, Maverick, and WebWork, simply ignore this problem, making it impossible to support If-Modified-Since requests. The only option when using these frameworks is to use a Servlet filter in front of the servlet to protect such resources. However, this is a clumsy approach requiring duplication of infrastructure that should be supplied by a framework. This is a real problem, as sometimes we can reap a substantial reward in performance by enabling revalidation.

The framework I discussed in Chapter 12 does allow efficient response to If-Modified-Since requests. Any controller object that supports revalidation can implement the com.interface21.web.servlet.LastModified interface, which contains a single method:

long getLastModified (HttpServletRequest request);

Thus only controllers that handle periodically updated content need worry about supporting revalidation. The contract for this method is the same as that of the HttpServlet getLastModified() method. A return value of −1 will ensure that content is always regenerated.

The com.interface21.web.servlet.ControllerServlet entry point will route revalidation requests to the appropriate controller using the same mapping as is used to route requests. If the mapped controller doesn't implement the LastModified interface, the controller servlet will always cause the request to be processed.
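The routing idea can be sketched in a few lines. This is not the framework's code: the interfaces and classes below are hypothetical, simplified stand-ins, and a String path replaces HttpServletRequest to keep the example self-contained. The point is that the same mapping serves both request handling and revalidation, and controllers that don't opt in yield −1.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, simplified stand-ins for the framework interfaces.
interface RequestController {
    String handleRequest(String path);
}

interface SupportsLastModified {
    long getLastModified(String path);
}

class WelcomeController implements RequestController, SupportsLastModified {
    private final long lastModified;

    WelcomeController(long lastModified) {
        this.lastModified = lastModified;
    }

    public String handleRequest(String path) { return "welcome page"; }

    public long getLastModified(String path) { return lastModified; }
}

class SketchDispatcher {
    private final Map<String, RequestController> mappings = new HashMap<>();

    void map(String path, RequestController controller) {
        mappings.put(path, controller);
    }

    /** Uses the request-handling mapping; -1 means "always process the request". */
    long getLastModified(String path) {
        RequestController controller = mappings.get(path);
        if (controller instanceof SupportsLastModified) {
            return ((SupportsLastModified) controller).getLastModified(path);
        }
        return -1L;
    }
}
```

A dispatcher servlet along these lines would delegate its own getLastModified() override to `SketchDispatcher.getLastModified()`, leaving HttpServlet to handle the comparison.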

The characteristics of a getLastModified() implementation must be:

- It runs very fast. If it costs anywhere near as much as generating content, unnecessary calls to getLastModified() methods may actually slow the application down. Fortunately this isn't usually a problem in practice.

- The value it returns changes not when the content is generated, but when the data behind it changes. That is, generating a fresh page for a client who didn't send an If-Modified-Since request shouldn't update the last modified value.

- There's no session or authentication involved. Authenticated pages can be specially marked as cacheable, although this is not normally desirable behavior. If you want to do this, read RFC 2616, referenced above.

- If a long last modification date is stored, in the controller or in a business object it accesses, thread safety is preserved. Reads and writes of non-volatile long values aren't guaranteed to be atomic in Java, so we may require synchronization (or a volatile field). However, the need to synchronize access to a long will be far less of a performance hit than generating unnecessary content.
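One way to meet the last two requirements for a stored timestamp is an AtomicLong, which gives atomic reads and updates of a long without explicit synchronized blocks. The `ReferenceDataTimestamp` class below is a hypothetical sketch: the timestamp is updated only when the underlying data changes, never when a page is rendered, and it is kept monotonic so an out-of-order change notification cannot move it backwards.

```java
import java.util.concurrent.atomic.AtomicLong;

// Hypothetical holder for the time the underlying reference data last changed.
class ReferenceDataTimestamp {
    // -1 until data has been loaded, so callers always regenerate content until then.
    private final AtomicLong lastModified = new AtomicLong(-1L);

    /** Cheap, thread-safe read, suitable for calling from getLastModified(). */
    long getLastModified() {
        return lastModified.get();
    }

    /**
     * Called when the data changes (for example, on receipt of a JMS message).
     * Keeps the latest value, so the timestamp never moves backwards.
     */
    void dataChanged(long changeTimeMillis) {
        lastModified.accumulateAndGet(changeTimeMillis, Math::max);
    }
}
```

A volatile long field would also make single reads and writes atomic; the AtomicLong additionally makes the compare-and-keep-the-maximum update atomic.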

With the com.interface21.web.servlet.mvc.multiaction.MultiActionController class we extended in the sample application's TicketController class, revalidation must be supported on a per-method, rather than per-controller, level (remember that extensions of this class can handle multiple request types). Thus for each request handling method, we can optionally implement a last modified method with the handler method name followed by the suffix "LastModified". This method should return a long and take an HttpServletRequest object as an argument.
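A naming convention like this is typically resolved by reflection. The following sketch shows one plausible way to look up and invoke a "<handler>LastModified" method; it is not the framework's implementation, the class names are hypothetical, and a String stands in for HttpServletRequest so the example runs on its own.

```java
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;

// Hypothetical controller; a String stands in for HttpServletRequest.
class SampleMultiActionController {
    public String displayGenresPage(String request) { return "welcome"; }

    // Handler method name + "LastModified", returning a long.
    public long displayGenresPageLastModified(String request) { return 1027963842000L; }

    // No matching LastModified method: always regenerated.
    public String displayShow(String request) { return "show"; }
}

class LastModifiedLookup {
    static final String SUFFIX = "LastModified";

    /** Returns the handler's last modified value, or -1 if it doesn't support revalidation. */
    static long lastModifiedFor(Object controller, String handlerName,
                                Class<?> requestType, Object request) {
        try {
            Method m = controller.getClass().getMethod(handlerName + SUFFIX, requestType);
            if (m.getReturnType() != long.class) {
                return -1L; // wrong signature: ignore it
            }
            return (Long) m.invoke(controller, request);
        } catch (NoSuchMethodException e) {
            return -1L; // no last modified method for this handler
        } catch (IllegalAccessException | InvocationTargetException e) {
            throw new IllegalStateException("Could not invoke " + handlerName + SUFFIX, e);
        }
    }
}
```

A production version would look up these methods once, when the controller is initialized, and cache them rather than reflecting on every request, in keeping with the requirement that last modified checks run very fast.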

The Welcome Page in the Sample Application

The sample application provides a perfect opportunity to use revalidation, with the potential to improve performance significantly. The data shown on the "Welcome" page, which displays genres and shows, changes rarely, but unpredictably. Changes are prompted by JMS messages. The date it last changed is available from querying the com.wrox.expertj2ee.ticket.referencedata.Calendar object providing the reference data it displays. Thus we have a ready-made value to implement a getLastModified() method.

The welcome page request handler method in the com.wrox.expertj2ee.ticket.web.TicketController class has the following signature:

public ModelAndView displayGenresPage( HttpServletRequest request, HttpServletResponse response);

Thus, following the rule described above for the signature of the optional revalidation method, we will need to append LastModified to this name to create a method that the MultiActionController superclass will invoke on If-Modified-Since requests. The implementation of this method is very simple:

public long displayGenresPageLastModified(HttpServletRequest request) {
    return calendar.getLastModified();
}

To ensure that caches revalidate the page once their cached copy has expired, rather than continuing to serve it, we set a must-revalidate directive. We enable caching for 60 seconds before revalidation will be necessary, as our business requirements state that this data may be up to 1 minute old:

public ModelAndView displayGenresPage(HttpServletRequest request, HttpServletResponse response) {
    List genres = this.calendar.getCurrentGenres();
    cacheForSeconds(response, 60, true);
    return new ModelAndView(WELCOME_VIEW_NAME, GENRE_KEY, genres);
}

The cacheForSeconds() method, in the com.interface21.web.servlet.mvc.WebContentGenerator superclass common to all framework controllers, is implemented as follows, providing a simple abstraction over the details of the HTTP 1.0 and HTTP 1.1 protocols:

protected final void cacheForSeconds(HttpServletResponse response, int seconds, boolean mustRevalidate) {
    String hval = "max-age=" + seconds;
    if (mustRevalidate)
        hval += ",must-revalidate";
    response.setHeader("Cache-Control", hval);
    response.setDateHeader("Expires", System.currentTimeMillis() + seconds * 1000L);
}

| Important | J2EE web developers - and MVC frameworks - often ignore support for web caching controlled by HTTP headers and, in particular, revalidation. This is a pity, as failing to leverage this standard capability unnecessarily loads application servers, forces users to wait needlessly, and wastes Internet bandwidth. Before considering using coarse-grained caching solutions in the application server such as caching filters, consider the option of using HTTP headers, which may provide a simpler and even faster alternative. |

Edge-Side Caching and ESI

Another option for caching in front of the J2EE application server is edge-side caching. Edge-side caches differ from shared proxy caches in that they're typically under the control of the application provider (or a host chosen by the application provider) and in being significantly more sophisticated. Rather than relying on HTTP headers, edge-side caches understand which parts of a document have changed, thus minimizing the need to generate dynamic content.

Edge-side caching products include Akamai and Dynamai, from Persistence Software. Such solutions can produce large performance benefits, but are outside the scope of a book on J2EE.

Such caching is becoming standardized, with the emergence of Edge-Side Includes (ESI). ESI is "a simple markup language used to define Web page components for dynamic assembly and delivery of Web applications at the edge of the Internet". ESI markup language statements embedded in generated document content control caching on "edge servers" between application server and client. The ESI open standard specification is being co-authored by Akamai, BEA Systems, IBM, Oracle, and other companies. Essentially, ESI amounts to a standard for caching tags. See http://www.esi.org for further information.

JSR 128 (http://www.jcp.org/jsr/detail/128.jsp), being developed under the Java Community Process by the same group of organizations, is concerned with Java support for ESI through JSP custom tags. The JESI tags will generate ESI tags, meaning that J2EE developers can work with familiar JSP concepts, rather than learn the new ESI syntax.

Many J2EE servers themselves implement web-tier caching (this is also a key service of Microsoft's .NET architecture). Products such as iPlanet/SunONE and WebSphere offer configurable caching of the output of servlets and other web components, without the need to modify application code.
