MITs Risk Analysis

In MITs, the process of estimating risk involves identifying "what" the risk is and "how much" is required before action will be taken to mitigate that risk. These answers are provided through performing quantitative and qualitative analysis.

We discuss these topics throughout the rest of this chapter. In the next phase, actual test selection, the risk analysis ensures that the selected test set has the highest probability of packing the biggest punch: the smallest test set with the highest yield. We discuss this in the following chapter, "Applied Risk Analysis."

Quantitative and Qualitative Analysis

Both quantitative and qualitative analysis came from chemistry. They go back before the Renaissance in Europe. You probably remember reading about efforts to turn lead into gold. Well, that's where these methods started. Chemistry is what happened to alchemy when the I-feel-lucky approach to turning lead into gold gave way to methods and metrics.

What are quantitative and qualitative analysis? Let's examine dictionary-type definitions:

- Qualitative analysis. The branch of chemistry that deals with the determination of the elements or ingredients of which a compound or mixture is composed (Webster's New World Dictionary).

- Quantitative analysis. The branch of chemistry that deals with the accurate measurement of the amounts or percentages of various components of a compound or mixture (Webster's New World Dictionary).

Risk analysis deals with both understanding the components of risk and measuring that risk. Recognizing "what bad things might happen" is not "risk analysis" until you quantify it. You could limit yourself to one or the other of these two forms of analysis, but if the object is to provide a solution, you are missing the boat.

Next, you have to quantify what you are willing to do to keep it from failing. "Try real hard" is not an engineering answer. It contains no measured quantity. But it's the one we've been hearing from software makers. Getting real answers to these questions does not require "art." It requires measurement. And it requires that management allocate budget to the test effort to do so.

For instance, if you are going to make cookies, it isn't enough to know what the ingredients are. You need to know how much of each thing to use. You also need to know the procedure for combining ingredients and baking the cookies. These things taken together create a recipe for the successful re-creation of some type of cookies. If the recipe is followed closely, the probability for a successful outcome can be estimated based on past performance.

For this risk cookie, my "ingredients" are the criteria that are meaningful in the project, such as the severity, the cost of a failure, and the impact on customers, users, or other systems. There are any number of possible criteria, and each project is different. I start my analysis in a project by identifying the ones that I want to keep track of.

One of the easiest things to quantify is cost. We usually use units of time and currency to measure cost. Even if what is lost is primarily time, time can have a currency value put on it. Management seems to understand this kind of risk statement the best. So, it's the one I look for first.

After identifying the risk criteria that will be used to evaluate the items in the inventory, I want to determine the correct amount of priority to give each one, both individually and in relation to one another. I use an index to do this.

The MITs Rank Index

The MITs Rank Index is normally a real number between 1.0 and 4.0. A rank index of 1 represents the most severe error. A Severity 1 problem usually is described using terms like shutdown, meltdown, fall down, lethal embrace, showstopper, and black hole. Next is a rank of 2; this represents a serious problem, but not one that is immediately fatal. A rank index of 3 is a moderate problem, and a rank index of 4 is usually described as a matter of opinion, a design difficulty, or an optional item.

Rank indexes are real numbers, so a rank index of 1.5 could be assigned to a risk criterion. The decimal part of the index becomes important when we use the MITs rank index to calculate test coverage. This topic is covered in detail in Chapter 10, "Applied Risk Analysis."
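As a minimal sketch of the range described above, a rank index can be checked before it is used anywhere else. The function name `validate_rank_index` and the choice to reject out-of-range values outright are assumptions made for illustration; the text only says the index is "normally" between 1.0 and 4.0.

```python
MIN_RANK = 1.0  # most severe
MAX_RANK = 4.0  # least severe


def validate_rank_index(value):
    """Return the rank index as a float if it lies in the normal 1.0-4.0 range.

    Hypothetical helper: rejecting values outside the range is an
    assumption, not something the MITs method prescribes.
    """
    rank = float(value)
    if not MIN_RANK <= rank <= MAX_RANK:
        raise ValueError(f"rank index {rank} is outside {MIN_RANK}-{MAX_RANK}")
    return rank


# A fractional index such as 1.5 is valid, because rank indexes are real numbers.
print(validate_rank_index(1.5))
```

A project that deliberately extends the scale would only need to change the two constants.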

Weighting an Index

Several types of weighting can be applied in addition to the rank index. For example, two additional factors that are often considered when assigning a rank index are the cost to fix the problem and the time it will take to fix it, or the time allowed to resolve an issue. The time allowed to resolve an issue is a normal part of a service level agreement, or SLA. This item will become increasingly important in Web applications, since hosting and Web services are both provided with SLAs. Failure to adhere to an SLA can lead to loss of contract and litigation.

Table 9.1 provides a simple index description and some of the criteria that might be used to assign a particular rank. Like everything else in MITs, you are encouraged to modify it to meet your own needs. However, the index should not become larger than 4 in most cases, because it translates into such a small percentage of test coverage that the benefits are normally negligible.

| Rank | Priority | Failure would be | Risk | SLA |
|---|---|---|---|---|
| 1 | Highest | Critical | High; item has no track record, or poor credibility | Required by SLA; has failed to meet an SLA time or performance limit |
| 2 | High | Unacceptable | Uncertain | Approaching an SLA time or performance limit; could fail to meet an SLA |
| 3 | Medium | Survivable | Moderate | Little or no danger of exceeding SLA limits |
| 4 | Low | Trivial | May not be a factor | Not applicable |

The Art of Assigning a Rank Index

In some cases, there is one overriding factor, such as the risk of loss of life, that makes this an easy task. When there is such a risk, it becomes the governing factor in the ranking, and assigning the rank index is straightforward and uncontested. The rank index is 1.

However, in most cases, assigning a rank index is not so straightforward or obvious. The normal risk associated with an inventory item is some combination of factors, or risk criteria. The eventual rank that will be assigned to it is going to be the product of one or more persuasive arguments, as we discussed in Chapter 3, "Approaches to Managing Software Testing."

The rank index assigned to a MITs ranking criterion may be a subtle blend of several considerations. How can we quantify multiple ranking criteria? It turns out that assigning a rank index is something of an art, like Grandmother's cookies. A lump of shortening the size of an egg may be the best way to describe the rank index that you should apply to a potential failure. For example, consider the following problem from my testing of the 401(k) Web Project (discussed later in the chapter):

One of the errors I encountered caused my machine to lock up completely while I was trying to print a copy of the 401(k) Plan Agreement document from a downloaded PDF file displayed in my browser. I was supposed to review the document before continuing with the enrollment process. When the machine was rebooted, the PDF file could not be recovered. I tried to download the file again, but the system, thinking I was finished with that step, would not let me return to that page. I could not complete the enrollment because the information that I needed to fill out the rest of the forms was contained in the lost PDF file.

I reported this failure as a Severity 1 problem because, in my opinion and experience, a normal consumer would not be willing to pursue this project as I did. Remember, this was not the only blockage in the enrollment process. As far as I am concerned, an error that keeps a potential customer from becoming a customer is a Severity 1 error. Developers did not see it this way. As far as they were concerned, since the file wasn't really lost and no damage was really done, this was a minor problem, a Severity 3 at most.

Fortunately, the MITs ranking process allows us to balance these factors by using multiple ranking criteria and assigning them all a rank index, as you will see in the next topic.

The MITs Ranking Criteria

Over the years, I have identified several qualities that may be significant factors in ranking a problem or the probability of a problem.

Identifying Ranking Criteria

The following are qualities I use most frequently in ranking a problem or the probability of a problem:

- Requirement

- Severity

- Probability

- Cost

- Visibility

- Tolerability

- Human factors

Almost every industry has others that are important to it. You are invited and encouraged to select the ranking criteria that work for your needs. The next step is to determine the appropriate rank index for a given ranking criterion.

Assigning a Risk Index to Risk Criteria

I determine the rank index for each ranking criterion by answering a series of questions about it. Table 9.2 shows several questions I ask myself when attempting to rank. These are presented as examples. You are encouraged to develop your own ranking criteria and their qualifying questions.

Table 9.2: MITs Risk Criteria Samples

| Criterion | Qualifying questions | Rank index |
|---|---|---|
| Required | Is this a contractual requirement such as a service level agreement? Mitigating factors: Is this an act of God? If the design requirements have been prioritized, then the priority can be transferred directly to rank. Has some level of validation and verification been promised? | Must have = 1; nice to have = 4 |
| Severity | Is this life-threatening? System-critical? Mitigating factors: Can this be prevented? | Most serious = 1; least serious = 4 |
| Probability | How likely is it that a failure will occur? Mitigating factors: Has it been previously tested or integrated? Do we know anything about it? | Most probable = 1; least probable = 4 |
| Cost | How much would it cost the company if this broke? Mitigating factors: Is there a workaround? Can we give them a fix via the Internet? | Most expensive = 1; least expensive = 4 |
| Visibility | If it failed, how many people would see it? Mitigating factors: Volume and time of day; intermittent or constant. | Most visible = 1; least visible = 4 |
| Tolerability | How tolerant will the user be of this failure? For example, a glitch shows you overdrew your checking account; you didn't really, it just looks that way. How tolerant would the user be of that? Mitigating factors: Will the user community forgive the mistake? | Most intolerable = 1; least intolerable = 4 |
| Human factors | Can/will humans succeed using this interface? Mitigating factors: Can they get instructions or help from Customer Service? | Fail = 1; succeed = 4 |

The only danger I have experienced over the years with risk ranking happens if you consistently use too few criteria. If you use only two criteria for all ranking, for example, then the risk analysis may not be granular enough to provide an accurate representation of the risk. The worst fiasco in risk-based testing I ever saw was a project that used only two criteria to rank the testable items: probability and impact. These two criteria did not account for human factors, or cost, or intersystem dependencies. It turned out that these factors were the driving qualities of the feature set. So, the risk analysis did not provide accurate information.

Combining the Risk Factors to Get a Relative Index

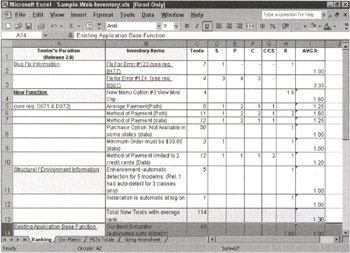

MITs does not require that you apply a rank index to all of your criteria for every item on the inventory, so you don't have to apply a rank to inappropriate qualities. The MITs ranking process uses the average of all the criteria that have been assigned a risk index. Figure 9.3 shows a sample ranking table from the Tester's Paradise inventory (discussed in Chapters 8, 10, 12, and 13).

Figure 9.3: Sample ranking index for Tester's Paradise.

Notice that the different items don't all have rank indexes assigned for every criterion. For example, the risk may be concentrated in a requirement, as in the structural item "installation is automatic at logon." In that case, there may be no need to worry about any of the other criteria. But the risk associated with an item is normally a combination of factors, as with the New Functions category, where most of the risk factors have been assigned an index value.

Note: When a ranking criterion has been assigned a rank index, I say that it has been "ranked."

In this sample, each ranking criterion that has been ranked is given equal weight with all other ranked criteria for that item. For example, the item Method of Payment (data) has four ranked criteria assigned to it; the average rank is simply (1 + 2 + 1 + 1)/4 = 1.25. It is possible to use a more complex weighting system that will make some criteria more important than others, but I have never had the need to implement one. This system is simple and obvious, and the spreadsheet handles it beautifully. The spreadsheet transfers the calculated average rank to the other worksheets that use it. If a change is made in the ranking of an item on the ranking worksheet, it is automatically updated throughout the project spreadsheet.
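The equal-weight average can be sketched in a few lines of Python instead of a spreadsheet. This is only an illustration: the function name `average_rank` and the use of `None` for an unranked criterion are assumptions, and the text gives only the four values (1, 2, 1, 1) for Method of Payment, so which criterion carries which value below is hypothetical.

```python
def average_rank(ranks):
    """Average the rank indexes of the criteria that have been ranked.

    `ranks` maps a criterion name to its rank index, or to None when the
    criterion was left unranked for this inventory item.
    """
    ranked = [r for r in ranks.values() if r is not None]
    if not ranked:
        raise ValueError("no criteria have been ranked for this item")
    return sum(ranked) / len(ranked)


# Four ranked criteria, as in the Method of Payment example; the pairing
# of values to criterion names is assumed for illustration only.
method_of_payment = {
    "requirement": 1,
    "severity": 2,
    "probability": 1,
    "cost": 1,
    "visibility": None,      # unranked criteria are skipped
    "tolerability": None,
    "human factors": None,
}

print(average_rank(method_of_payment))  # 1.25
```

Unranked criteria are left out of both the sum and the count, so they do not pull the average toward either end of the scale.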

In some projects, like the real-world shipping project, it is necessary to rank each project for its importance in the whole process, and then rank the items inside each inventory item. This double layer of ranking ensures that test resources concentrate on the most important parts of a large project first. The second, internal prioritization ensures that the most important items inside every inventory item are accounted for. So, in a less important or less "risky" project component, you are still assured of testing its most important parts, even though it will be getting less attention in the overall project. This relative importance is accomplished through the same process and serves to determine the test coverage for the item.
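One simple reading of this double layer of ranking is a two-level sort: order by the rank of the component first, then by the rank of each item inside it. The sketch below assumes that reading. The function name `prioritized_items`, the component names, and all rank values are hypothetical and are not taken from the shipping project.

```python
def prioritized_items(components):
    """Return (component, item) pairs, most important first.

    `components` maps a component name to a pair:
    (component rank index, {item name: item rank index}).
    A lower rank index means a higher priority at both levels.
    """
    ordered = []
    for name, (component_rank, items) in components.items():
        for item, item_rank in items.items():
            ordered.append((component_rank, item_rank, name, item))
    ordered.sort()  # sorts by component rank, then by item rank
    return [(name, item) for _, _, name, item in ordered]


# Hypothetical data for illustration only.
components = {
    "reporting": (3.0, {"monthly summary": 2.0, "color themes": 4.0}),
    "billing": (1.5, {"invoice totals": 1.25, "invoice layout": 3.0}),
}

for component, item in prioritized_items(components):
    print(component, "-", item)
```

Even in the lower-ranked component, its own most important item still comes ahead of its less important ones, which is the guarantee the second layer of ranking is meant to provide.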

Using Risk Ranking in Forensics

After the product ships or goes live, there is an opportunity to evaluate the risk analysis based on the actual failures that are reported in the live product or system. Problems reported to customer service are the best place to do this research. I have had the opportunity several times over the past years to perform forensic studies of various products and systems. By examining what was considered an important or serious problem on the last effort, I find much valuable information that I can use when I develop criteria for the next test effort.

Mining the customer support records gives me two types of important information. First, I can use the actual events to improve my analysis in future efforts and, second, I find the data that I need to put dollar amounts in the cost categories of my risk analysis. If I can use this real-world cost information when I rank my tests and evaluate my performance, then I can make a much better case for the test effort. This last point is well illustrated in this next case study, the 401(k) Web Project.
