Memory Management for SQL Server 2000

One of the most important administrative tasks any DBA or system administrator has to do is manage the memory that an instance of SQL Server 2000 uses. This section covers the various types of operating system memory tuning you can use with SQL Server and offers recommendations on how to use them. Several types of memory tuning are available, and each has its own advantages, disadvantages, and uses.

| Note | This is not intended as a complete guide to the operating system memory manager. Rather, this is a brief overview designed to give you enough understanding of the memory manager to comprehend the various memory-tuning techniques available to SQL Server, as well as the implications that can arise from using them. |

Before describing the various types of memory tuning, however, here is a brief tour of the operating system memory manager. This tour will help you understand the hows and the whys of employing the various types of memory tuning.

Understanding the Memory Manager

All versions of Windows up through Microsoft Windows 2000 and some versions of Microsoft Windows Server 2003 were primarily 32-bit operating systems until the release of the 64-bit versions of Windows Server 2003.

The amount of memory the operating system can address is directly tied to how many bits the operating system is. The 32-bit versions of Windows are able to address 2 32 bytes of physical memory out of the box, or 4,294,967,296 bytes. To put it more plainly, Windows can address 4 GB of physical memory. The obvious implication is that the more address bits your operating system has, the more memory you can address. So a 64-bit operating system would be able to address, at most, 2 64 bits worth of physical memory (which is 18,446,744,073,709,551,616 bytes ”an extremely large number). From this discussion, you would think that 32-bit versions of Windows are limited to supporting only 4 GB of physical memory, but that is not the case.

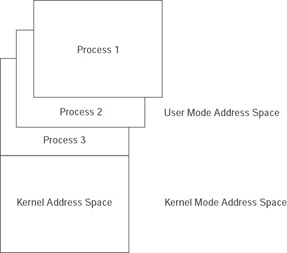

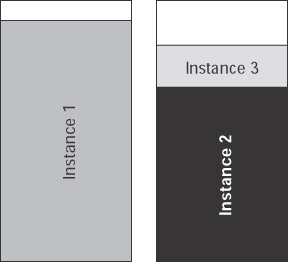

However, before you get excited and start writing or implementing applications that will require 4 GB of memory just to load, there are a few ground rules about how memory can be used. Windows utilizes something known as a memory split (see Figure 14-4). This means that by default, the kernel can utilize up to 2 GB of memory in its own separate memory space and each application can utilize up to 2 GB of memory in its own private address space.

Figure 14-4: An example of a memory split.

The memory manager works this way for two main reasons: self protection and application protection. The kernel mode gets 2 GB all to itself so that it will have enough room to do whatever it needs to do without running out of space. Plain and simple, applications will not run if the kernel space does not have enough memory. Along with this self-protection, user mode applications cannot address kernel mode space and accidentally corrupt kernel mode memory and cause an access violation (AV).

Notice that previously it was noted that each application can utilize up to 2 GB of memory. That might seem a little odd given that, natively, the operating system can recognize only 4 GB of total memory. For example, pretend that a server that has 4 GB of RAM (which we will call \\myserver for the purpose of the example), and the kernel mode functions are using 1 GB of memory, leaving 3 GB for all other applications. Now, also imagine that an instance of SQL Server 2000 is active and using its full range of 2 GB of memory, leaving only 1 GB for all other applications. Now add Web services, native user mode applications, management and monitoring processes, and we are running at a full 3.9 GB of memory used. What happens when a new process is started that needs 200 MB of memory? This single request would put the processes over the actual amount of memory that the system has in total. So now, with each application getting its own 2 GB range, the memory picture begins to look a little more like Figure 14-5.

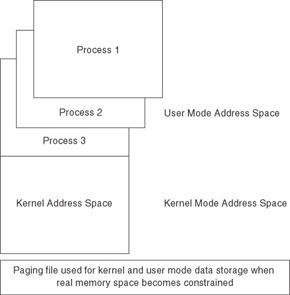

Figure 14-5: Memory split with a paging file in use.

To combat this hard limit of physical memory in the server, the memory manager uses disk space to substitute for physical memory. This disk space is called the paging file (pagefile.sys). Whenever there is not enough physical memory left to satisfy requests , memory that is currently not in use will be written to the paging file on disk, freeing physical memory to satisfy requests (actually, the process is somewhat more complicated than described here, but for this discussion, it is sufficient).

| Note | Paging is a fairly generic term that refers to the memory manager writing from memory to disk. This can be writing data out to a paging file or simply saving a file to disk. In context for this text, we will use the term paging to refer to writing to the page file for the purposes of memory management unless otherwise noted. |

So far, there can be 4 GB of memory in the operating system with 2 GB available to kernel mode functions and 2 GB available to each user mode application. If physical memory becomes constrained, items in memory that have not been recently used will be written out to a pagefile to free physical memory for more pressing needs, and the memory picture looks more like Figure 14-6. So what happens when an application needs some of its memory that has been written out to the paging file?

Figure 14-6: Freeing physical memory.

To enable applications to not have to keep track of memory in case it gets moved or worry about overwriting the memory of other applications and processes, the memory manager virtualized all the memory addresses used by user mode applications and processes. For example, imagine that we have two processes, process A and process B, and both ask for 1 MB of memory. Both processes get an answer from the memory manager that they have been allocated 1 MB of memory starting at address 0x28394532. What happens when both applications write to that address? Will one of the processes erroneously overwrite the other s data? Will one of the processes receive an error? Will one or both of the applications crash?

The answer is none of the above. Both processes will be able to write to the same address because of the virtualization that goes on behind the scenes. User mode processes are never able to directly write to physical memory and never actually know where their data resides. A user mode processes and requests a block of memory and writes to it. In the meantime, the memory can be written out to the pagefile, and when the application does something with the memory, the memory manager will go out to the pagefile and retrieve the data from the pagefile for the application. The key thing here is that the application never knew anything about the virtualization process; it simply thinks it is writing to a memory location.

How does an application get its memory back once it is in the paging file? It does not get it back. Whenever an application references memory in the paging file, the memory manager will retrieve the data out of the paging file for the application because, remember, the application is not responsible for keeping track of where the data really is.

Because of this virtualization, each application can write to the virtual locations of 0x00000000 “ 0x7ffffffff (or 2 GB) without affecting any other process. Each application thinks that its memory location 0x38344134 is the only one on the server, when, in fact, many applications are simultaneously using that virtual location. All the while, in the background, the memory manager is writing memory for those applications and keeping track of where they are in physical memory and in the pagefile.

Keeping this in mind, the previous statement that the kernel space and each application could utilize up to 2 GB of memory was somewhat incorrect. Now that we have introduced the concept of virtual memory, it is far more accurate to state that the kernel mode space, as well as each application, can utilize up to 2 GB of virtual memory. Certain portions of the memory manager, however, cannot be paged to disk.

The kernel address space can also use 2 GB of address virtual memory, but the key difference is that all processes in the kernel space share the same 2 GB. Each process does not get its own unique memory space use without affecting other kernel mode processes. Any user mode thread that enters and does its work in this space and then returns will lose access to the memory it just used. Because of this, it is very important for drivers and kernel processes to be very good at handling memory. When rogue processes corrupt or overwrite kernel address space memory, the results are disastrous indeed. Errors in the kernel mode space, where most hardware driver code exists, often lead to a dreaded system crash if the right set of conditions occurs. In Windows 2000 and later, this is less likely to happen due to protection mechanisms built into the operating system.

Most memory in the kernel mode space is virtualized. Some memory, depending on the function, can be paged out to disk as well. There is a special type of memory that cannot be paged out to disk. One of the key items that resides in that space is the portion of the memory manager that handles virtual address translation and its related items and drivers for disk access. Those must remain resident in physical memory at all times (this is addressed later).

Memory access is always slow compared to memory access from physical memory. For example, imagine that an application named memoryhog.exe references three pieces of data it has stored in memory: Mem1, Mem2, and Mem3. Mem1 is right where memoryhog.exe left it. The memory manager has not shuffled it around at all. The memory manger has not moved Mem2 either, but the virtual memory address no longer points to the data that is still in physical memory. The data is not out on the paging file yet. The memory manager, however, has moved Mem3 into the paging file. memoryhog.exe does not know this, of course ”it simply references the memory virtual addresses it wrote to in the first place. Which reference will occur faster and why? The answer is Mem1, and here is why.

When memoryhog.exe references Mem1, nothing extra happens. It is right where memoryhog.exe left it, and no extra looking and retrieving goes on. When Mem2 is referenced, it is a little slower because the memory manager has to repoint the virtual address to the physical memory, but the performance hit is negligible because the item still remained in physical memory. Mem3, however, must be retrieved from disk, and it is the slowest of all. Disk access times are measured in milliseconds and memory access time is measured in nanoseconds, so every time memory has to be retrieved from disk, it takes approximately 1 million times longer to retrieve the data than if it had still been resident in physical memory.

Whenever data is not where an application originally left it and the memory manager has to retrieve the data, a page fault ”which means that you are now accessing a physical disk ”is incurred. You must be aware of two types of page faults: soft page faults, as occurred with Mem2, and hard page faults, as occurred with Mem3.

Because hard page faults are devastating to system performance, operating system “based solutions were developed to help applications use and retain more data in physical memory.

Breaking the 2-GB Barrier Under 32-Bit

Because databases are growing so large and usage is heavy, the default 2 GB that a single user mode process can utilize is often insufficient for real-world needs. In order to combat this and increase performance (or to prevent performance degradation due to increased load), you will need to tune the amount of memory you are using for a given SQL Server 2000 instance.

The following memory tuning options are discussed in this section:

-

/3GB switch

-

Physical address extensions (PAE)

-

Address windowing extensions (AWE)

Each option does not function the same but has the same goal: to increase performance of an application by modifying the way the memory manager works to reduce disk paging.

/3GB boot.ini Switch

/3GB is a switch configured in the boot.ini file read by Windows at startup. This option allows an application such as SQL Server to utilize up to 3 GB of virtual memory in its private address space rather than the default maximum of 2 GB. The memory is virtual and can still be paged out if necessary. Here is an example of a boot.ini entry with the switch:

multi(0)disk(0)rdisk(0)partition(1)\WINDOWS='Windows Server 2003, Enterprise' /fastdetect /3GB

The benefits of using /3GB are immediately obvious. An application can map 1 more GB of memory into its private memory space. Specifically , SQL Server is able to map 3 GB of data into its memory space, a gain of 50 percent.

| Note | Applications have to be compiled specifically with the IMAGE_FILE_LARGE_ADDRESS_AWARE flag to take advantage of the /3GB switch. The 32-bit version of SQL Server 2000 is one of these applications. |

Applications such as SQL Server that can frequently refer to the same data multiple times to satisfy different requests can greatly benefit from an extra GB of memory to map.

An administrator will need to keep several considerations in mind before enabling the /3GB switch. Usage of this switch will limit the kernel memory space to the remaining 1 GB. In some cases this can cause undesirable results if the kernel mode space is not large enough for the operating system. If the right conditions do not exist, the server s performance can be degraded or possibly result in a system crash. It would never be wise to allow a single application to starve the kernel of memory because it not only could affect other applications but also could cause the kernel to not have enough memory to continue to function at all. Also, because the memory is still virtualized, SQL Server or any other application that uses a 3 GB virtual address space might not necessarily realize a performance benefit.

The /USERVA boot.ini Switch

Because of these possible problems, a new subswitch has been introduced in Windows 2003 Server (as well as Windows XP Service Pack 1 or later) that can only be used in conjunction with the /3GB switch, the /USERVA switch. The /USERVA switch allows an administrator to determine the size of the user mode virtual address space between 2 GB and 3 GB. For example, assume that an administrator determines (through testing and benchmarks) that the system will perform at its peak with a user mode virtual address space of 2.5 GB and the kernel will be able to work comfortably with a 1.5 GB virtual address space. The administrator could them modify boot.ini to the following:

multi(0)disk(0)rdisk(0)partition(1)\WINDOWS='Windows Server 2003, Enterprise' /fastdetect /3GB /userva=2560

In some cases restricting the kernel to only 1 GB of memory might not be desirable, but the kernel might not need 2 GB, either, so the /USERVA switch allows administrators a middle-ground alternative to the all-or-nothing approach of the /3GB switch.

| Important | Without the /3GB switch, the /USERVA switch will be ignored if it is put into boot.ini alone. |

Despite these concerns, using the /3GB switch is a perfectly valid approach to memory. The key to determining whether or not it will be useful in your environment is testing. Usage trends and performance changes can rarely be predicted ; before and after performance benchmarks and tests are essential to determining whether or not memory tuning is beneficial in a particular case.

Physical Address Extensions (PAE)

You might have noticed that we mentioned earlier that a condition might exist where total system memory usage is more than 4 GB. What does an administrator do then, since earlier we pointed out that a 32-bit operating system can only address 4 GB of memory? Simple, make the operating system use more than 32 bits.

PAE is a hardware modification that allows a 32-bit hardware platform to address more than 4 GB. Essentially, PAE changes the addressing from 32-bit to 36-bit addressing mode on an Intel server (37-bit on AMD). The calculation for the amount of memory is 2 n , where n is the number of bits of the operating system. So an Intel-based processor, when PAE is enabled, will allow an operating system to address up to 64 GB of memory, since 2 36 works out to 64 GB.

Implementing PAE is done through a /PAE switch configured in the startup line for the specific installation of Windows in boot.ini option. Here is an example of a boot.ini entry with the switch; PAE is also enabled by means of a switch in boot.ini. Table 14-4 shows how much memory you can access with /PAE set under the various editions of Windows.

multi(0)disk(0)rdisk(0)partition(2)\WINNT='Windows 2000 Advanced Server' /PAE /basevideo /sos

| Operating System | Maximum Memory (in GB) |

|---|---|

| Windows 2000 Advanced Server | 8 |

| Windows 2000 Datacenter Server | 32 |

| Windows 2003 Server Enterprise Edition | 32 |

| Windows 2003 Server Datacenter Edition | 64 |

PAE allows the operating system to see and utilize more physical memory than otherwise possible. The obvious benefit to this is that with more physical memory available, the operating system will have less need of a paging file to service memory requests.

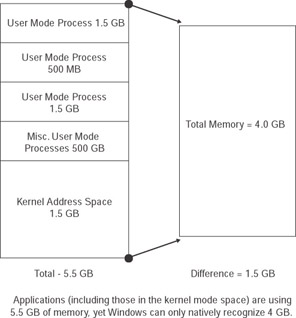

For example, a server, named MyServer, has 4 GB of memory. SQL Server is using 2 GB, the various kernel functions are using 1.5 GB, and other applications, such as virus scanners , essential services, and support applications, are using 2 GB of memory. Whoops, that adds up to 5.5 GB of memory. Since normally a server can support only up to 4 GB of memory, this means that at least 1.5 GB worth of data is paged out to disk at any given time (again, this is not strictly true, but this is a simple overview of the memory manager). Constantly swapping 1.5 GB of data in and out of the pagefile is a performance- intensive task, so it would really be beneficial if somehow the system could use more than 4 GB of physical memory to decrease, if not eliminate, the pressure on the paging file.

With PAE, a server can recognize and use more than 4 GB of physical memory. This is a great performance enhancer because, even though all memory is virtualized for applications (remember, this means they never know where it is in physical memory or if it has been pushed out to the pagefile), data does not get pushed out to the pagefile unless there is a deficiency of physical memory. Because of this, increasing the amount of physical memory available decreases the amount of pagefile usage that takes place and will therefore increase performance.

How does this help SQL Server? It does not help directly. Although applications directly take advantage of the /3GB switch, the performance gains that come from PAE are hidden from the application. In fact, applications are completely unaware of PAE or the amount of physical memory. Applications get an indirect performance boost because more of their data can remain resident in physical memory on a system with >4 GB of memory with PAE enabled. That is good news, because applications do not natively need to do anything to take advantage of PAE; it just happens.

Combining /PAE and /3GB

For some, the better news might be that you can combine the /3GB and /PAE switches to allow applications (correctly compiled, of course) to use up to 3 GB of memory and to allow more of that data to remain resident in physical memory, providing a performance boost from both ends.

Earlier, we mentioned that the memory manager virtualizes memory for applications and for portions of the kernel so they do not have to do the work of keeping track of where their data is located. Because of this, the memory manager must keep track of where data is located, in physical memory or the pagefile, so that when an application asks for the data in location 0x12345678, the memory manager can look in a translation table, find the data, and grab it from the right place, whether it is the pagefile or memory, and send it to the application. One of the structures involved in this translation and lookup process is called a page frame number (PFN).

Because PAE creates a larger range of physical addresses for the memory manager to keep track of and index, the amount of PFN entries that is required grows dramatically. On a system that is booted with both the /PAE and /3GB switches, an interesting thing happens. The amount of memory that must be indexed/translated in a lookup table is dramatically increased, more key data structures involved in that lookup process are used, and the area of memory where that structure is stored, kernel mode memory, is capped at 1 GB.

This combination will exhaust system kernel space much earlier than normal. Because of this, the memory manager imposes a hard cap of 16 GB on a system booted with both the /3GB and /PAE boot options. Even if a system has 32 GB, if it is booted with both options, only 16 GB will be recognized. So if your SQL Server instance requires more than 16 GB of memory, you cannot mix these two memory models.

| Warning | Even though the memory manager imposes a hard limit of 16 GB in this configuration, it is possible to encounter problems even with lesser amounts of memory (say, 8 GB or 12 GB), so it is always a good idea to give the kernel as much room as possible either by reducing the memory load or by using the /USERVA subswitch to increase kernel memory. |

Address Windowing Extensions (AWE)

What if 3 GB is not enough for an application? What if an application needs to use 5 GB of physical memory and cannot ever have that memory paged out to disk? There is a way to have an application use gigabytes of virtual memory and ensure that data mapped into that physical memory was never written to the pagefile. It is Address Windowing Extensions (AWE), which is enabled in SQL Server 2000. This feature was introduced in SQL Server 2000 and does not exist in earlier versions.

AWE is an API set that compliments PAE. Unlike PAE, AWE is not a boot option. Applications must directly invoke the AWE APIs in order to use them. The specifics of the AWE API are beyond the scope of this book and are not covered here.

| More Info | To learn about specific APIs and to get code samples, please see MSDN online at http://msdn.microsoft.com. |

Since there are no code samples of AWE or even a look at the API set, this section looks at the functionality AWE provides through applications that utilize it, such as SQL Server, and how it affects memory usage.

Because an application virtual address space can be extended only to 3 GB on the Intel 32-bit platform, applications (and database applications in particular, since they deal with such large datasets and do a lot of swapping of data from disk to memory) need a method to map large portions of data into memory and keep that data in physical memory at all times to increase the performance. This also allows an application to map/remap data into its virtual memory space very quickly without failures.

AWE does just that. AWE allows an application to reserve chunks of physical memory that cannot be paged out to the paging file or otherwise manipulated except by the reserving application (which will be SQL Server, since that is the topic of discussion). AWE will keep the data in physical memory at all times. Because the memory manager cannot manage this memory, it will never be swapped out to the paging file. The application is now completely responsible for handling the memory in a responsible manner.

Because this technique is only useful if an application is able to reserve large chunks of memory, it is a technology that best compliments existing technology, namely PAE, rather than being a stand-alone memory tuning solution. When you use the combination of PAE and AWE with SQL Server, the instance does not dynamically manage the size of the memory address space used. And once initialized , AWE memory holds all the memory acquired at the instance s startup until the SQL Server is shut down. To enable AWE in SQL Server, you must use the sp_configure stored procedure. Here is the syntax to run in a query window to enable AWE:

EXEC sp_configure 'awe enabled', 1 RECONFIGURE

| Important | AWE enabled is not a dynamic configuration change. You must stop and start your SQL Server instance before it takes effect. And if you need to change the settings, you must also go through another stop and start. You must plan for this downtime, which means it might affect any service level agreement (SLA) you have in place. |

You must set the Max Server Memory and Set Working Size configuration options when using AWE with SQL Server. If they are not set, SQL Server grabs the total memory available (except 128 MB to allow the base operating system to function). This potentially deprives the operating system ”and any other processes that would be running on the same server ”of memory.

Because the memory pages used by the instance of SQL Server are taken from the nonpaged pool of Windows memory, none of the memory can be exchanged. This means that if the physical memory is filled up, SQL Server cannot use the page file set up on a physical disk to account for the surplus in memory usage.

In terms of AWE and multiple instances, since the memory allocated to SQL Server is expecting to grab is a static amount to be kept, if the memory you have told SQL Server to take upon startup is greater than the amount of memory that is actually available, SQL Server 2000 automatically changes the mode form AWE to non-AWE (dynamic allocation) for that instance. This means that SQL Server will only use up to 2 GB of memory. This is the case if there are multiple instances on one server or if the node is starting up an instance after a failover in a server cluster. If more than 2 GB of physical memory is available, the AWE-enabled instance will allocate all available memory during the failover startup and will only leave 100 “120MB free for the operating system, effectively starving it. However, if other instances that are not using AWE use more memory during the failover situation or are using AWE and consume that memory, and the whole system has less than 2 GB of physical memory available, the instance that fails over and starts on the other node will be started in the non- AWE mode.

AWE presents the same dangers as the other memory-tuning techniques. Using any one, or a combination, of these methods to boost SQL Server performance through memory tuning can starve other applications ”or even the kernel itself ”of memory that they need to perform adequately. In some cases the starvation caused by weighing memory too heavily in favor of SQL Server can cause performance degradation in other areas that is severe enough to cause an instance s performance to drop.

The bottom line is that SQL Server memory tuning can be a really good thing but it also has the potential to cripple a server. As with most things, take time to understand the outcome of server-wide changes such as this before you make them.

You might have noticed that in this section we give no hard numbers , examples, or performance charts showing at which point performance drops off. Because performance is such a subjective topic and very dependent upon hardware, installed applications, services, and the configuration of these items, giving hard and fast numbers for performance is practically impossible . The key to finding the best performance for your infrastructure is testing and establishing benchmarks to gauge the effects of changes. There are literally mountains of data on this subject in MSDN, TechNet, and in the various resource kits, since a subject that broad is outside the scope of this book.

Paging File Sizing and Location

As noted above, the operating system uses the paging file, which is a physical file located on your disk subsystem, for some memory operations. The general rule of thumb is to size it at 1.5 times the amount of physical memory that you have. However, in Windows 2000, there is a maximum of 4 GB for the page file size. A paging file size of 2050 MB (just over 2 GB) is the minimum file size necessary for computers with 4 GB or more of physical memory. In Windows 2000 and Windows Server 2003 32-bit, there is a maximum file size of 4 GB, but there is no limit in 64-bit versions of the operating system. In Windows Server 2003 64-bit, you can have a paging file size much larger than the 2050 MB, and it is typically three times the physical RAM. To do this, you have two options:

Option 1: Through the Graphical User Interface (GUI)

-

Select My Computer, right-click, and select Properties (in Windows Server 2003 you might need to turn on the Classic view to see My Computer). Or, under Control Panel, select System.

-

Select the Advanced tab. In Windows Server 2003, click Settings under the Performance category. In Windows 2000, click Performance Options.

-

In Windows Server 2003, in Performance Options, select the Advanced tab, and in Virtual Memory, click Change. In Windows 2000, in Performance Options, click Change in Virtual Memory. The Virtual Memory dialog box, as shown in Figure 14-7, will be displayed.

Figure 14-7: Changing your paging file settings. -

In Virtual Memory, select the drive where you want to place your paging file and an initial and maximum size. You can allow Windows to manage it, but it is recommended that you control this. Click Set when you are finished. Click OK. You will see a message similar to the one shown in Figure 14-8. Click OK three more times.

Figure 14-8: Restart message.

Option 2: Through the Registry

-

In the location where you will create the extra paging files, create folders for each additional paging file.

-

Start the Registry Editor with Regedt32.exe, and find the following registry key:

HKEY_LOCAL_MACHINE\System\CurrentControlSet\Control\SessionMana ger\MemoryManagement

-

Under the key, find the Pagingfiles value, and double-click it. Delete any entries that exist and add entries for each of the files you will be using in the folders you created. For example:

c:\pagefile1\pagefile.sys 3000 4000 c:\pagefile2\pagefile.sys 3000 4000

-

When you are finished, click OK and exit the Registry Editor. You must restart the operating system for the new changes to take effect; this is not a dynamic change.

-

After the system is rebooted, verify the new settings using the instructions for the GUI to see the changes in the Virtual Memory dialog box.

In terms of placing the pagefile.sys, it is recommended that you do not place it on any disk that will have SQL Server databases, because if you run out of memory and start paging, you could have serious I/O contention with your SQL Server databases and have a double performance problem (memory and disk). You might choose to use a form of redundant array of independent disks (RAID) to protect your paging files, and that is absolutely required if you split your paging files among multiple disks. RAID 1 would work well.

Also, how you configure your paging file is directly related to how you set the Startup and Recovery settings in Computer Management, which you can find in the Advanced tab when you right-click Computer Management (Local) and select the Properties. If you choose either the Kernel Memory Dump or Complete Memory Dump option and select the option Overwrite Any Existing File, Windows will write over any existing dump file and use the same name . If you do not select Overwrite Any Existing File, you will have the ability to save all your memory dumps, but you will have to account for the space. See Figure 14-9 for the Startup and Recovery settings in Computer Management.

Figure 14-9: Startup And Recovery dialog box from Computer Management.

If you want to get full memory dumps after a crash with 32-bit systems larger than 4 GB, you must add the /MAXMEM switch in boot.ini like this:

multi(0)disk(0)rdisk(0)partition(1)\WINDOWS='Windows 2000 Advanced Server' /fastdetect /MAXMEM= n

where n is the amount of memory you are specifying as the maximum amount of memory that the operating system can use.

| Caution | /MAXMEM was originally designed for stress testing and debugging, so use this with extreme caution. |

For example, if you put /MAXMEM=128, you would be restricting the operating system to only 128 MB of memory, and no matter how much more memory you have in your server, that is all it would see. Do not set this lower than 128 MB for Windows 2000 versions of the server or for Windows Server 2003, Enterprise Edition. Windows Server 2003, Datacenter Edition, has a minimum requirement of 512 MB of memory. Realistically, if you use /MAXMEM, you should set it to the minimum recommended RAM, not the bare minimum needed.

| Important | Using /MAXMEM requires that one of the paging files on the system must be at least 1 MB larger than your physical memory; otherwise you will not get a full memory dump. |

| Warning | /MAXMEM is an undocumented boot.ini switch that you can find in Volume 3 of the Windows NT Resource Kit. It is meant only for specific configurations, and the memory you specify must be contiguous. This option is valid through Windows 2000 and is not guaranteed to be in future operating systems. Do not use the /MAXMEM option without careful consideration and thorough testing. |

SQL Server Memory Recommendations

The toughest part about understanding the memory models is putting it into practice. What do you do for your SQL Server instances? This becomes more glaring on a server cluster where you are trying to implement more than one instance or where you are trying to consolidate multiple SQL Servers onto one or more instances, possibly on a single server or a cluster. Use Table 14-4 as well as Table 14-5 for 64-bit versions to see how much memory you can use under your operating system of choice.

| Note | If your hardware as well as your operating system choice supports hot swap memory, you might be able to add more physical memory to your server without incurring downtime. However, to use the memory, you would need to allocate it in an application such as SQL Server, which might require a reconfiguration that necessitates downtime of some sort .

|

| Important | Although you can expand beyond 4 GB of memory in SQL Server, the 32-bit version of SQL Server 2000 will always be limited to 2.6 GB of procedure cache. If you have multiple workloads, you will need to test whether they can all coexist and one will not exhaust the procedure cache. If so, you might need multiple instances as each instance gets its own procedure cache; it is not shared between instances. |

64-bit SQL Server 2000 changes the rules a bit. All memory under 64-bit is dynamic, and you have much more available to you. In this case, whether you are on a cluster or using a stand-alone server, you can allow SQL Server to manage its own memory, and you can set the MIN SERVER MEMORY option of SQL Server to set the minimum amount of memory for each instance. To set this through Transact-SQL, here is the syntax:

EXEC sp_configure 'min server memory', 8000 GO

Conversely, you can also set your memory settings in Enterprise Manager in the Memory tab of the instance s properties, as shown in Figure 14-10.

Figure 14-10: Memory tab of an instance s properties.

Using the Memory tab, you can set the following: if you select Dynamically Configure SQL Server Memory, you can set a maximum or minimum amount of memory, or both, that the SQL Server instance can use. By default, the maximum is set to the total amount of memory SQL Server can use and the minimum is set to 0, meaning SQL Server can use up to every amount of available memory. If you are using this option, when you set Minimum (MB) on the screen, it corresponds to executing sp_configure with MIN SERVER MEMORY, and Maximum (MB) is the same as issuing sp_configure with MAX SERVER MEMORY.

If you select the option Reserve Physical Memory For SQL Server, this will physically reserve that amount of memory for SQL Server and not let it go until you reconfigure (and subsequently restart) SQL Server. You should be setting this option only when you also select the option Use A Fixed Memory Size (MB) with an appropriate number. Behind the scenes, when this combination is set, SQL Server is issuing sp_configure twice, once with MAX SERVER MEMORY, and once with SET WORKING SET SIZE (setting that to 1). Setting this option also requires a stop and a restart of SQL Server, as shown in the prompt in Figure 14-11.

Figure 14-11: Prompt after selecting Reserve Physical Memory For SQL Server.

| Tip | It is not a good idea to select Reserve Physical Memory For SQL Server along with Dynamically Configure SQL Server Memory. They are, in essence, conflicting options, and you would be telling SQL Server to dynamically manage memory but grab a fixed amount. If you set Set Working Set Size with dynamic memory, it might cause problems. The Enterprise Manager GUI does not prevent you from combining these options. |

If you are using /3GB only, you can allow SQL Server to manage memory dynamically up to 3 GB, or you can set a static amount. Nothing special is required. However, if you are using AWE memory, as mentioned earlier, you must set the option awe enabled to 1, as well as set working set size to 1 to allow SQL Server to physically reserve the amount of memory. Here is what the syntax looks like:

EXEC sp_configure 'awe enabled', 1 RECONFIGURE EXEC sp_configure 'set working set size', 1 RECONFIGURE

Then you will need to set the options Max Server Memory and Min Server Memory to the same number.

EXEC sp_configure 'min server memory', 8000 RECONFIGURE EXEC sp_configure 'max server memory', 8000 RECONFIGURE

Once all this is done and you restart your SQL Server instance, you will be able to take advantage of your memory above 4 GB with AWE.

When you are looking to tune your memory used for SQL Server, when can you combine /3GB with AWE under 32-bit? Follow Table 14-6.

| Amount of Physical Memory | Recommendation |

|---|---|

| Up to 4 GB | No switches (each instance can use only up to 2 GB of memory) or /3GB (each instance can use up to 3 GB of memory dynamically or statically, reserving 1 GB for Windows). No real benefit from setting AWE with this small amount of memory. /3GB is used for plan cache. |

| 4 GB to 16 GB | Can combine /3GB and /PAE with AWE, but you would need to test the use of both to see if any benefit is gained . /3GB can help with plan cache, and AWE for data cache. |

| More than 16 GB | /PAE with AWE only; /3GB is useless. If combining instances for consolidation or failover clustering, make sure the instances do not rely on /3GB. |

| Note | It is possible to use AWE memory without having more than 4 GB of memory, but you will get very little benefit from setting a server to use AWE and be able to grab only 2 GB of memory. You might as well just set working set size to 1 (or select Reserve from the GUI) and select the amount of memory. They will have basically the same effect. |

| Warning | Do attempt to deploy AWE, PAE, or /3GB in a production environment without testing the configuration first. You do not want any surprises awaiting you. Also, remember to take into account room for the operating system. The rule of thumb is to leave at least 1 GB for Windows just in case, as long as you can spare it. |

Memory and Multiple Instances on the Same Server or Server Cluster

One thing many people struggle with is how to set memory for a clustered instance or multiple clustered instances running as part of a server cluster or if you have multiple instances on one Windows installation. In addition to the considerations listed in the previous section, for a cluster you now need to take failover into account. If you have a single instance cluster, assuming all nodes are equal in capacity and are configured the same, you will not have to worry about anything. During a failover, the SQL Server should start up and behave exactly the same way.

Multiple instances are different. Why? In a failover, your goal should be to ensure that during the failover, when the instance is restarted on another node, it will have the correct capacity from a processor and memory standpoint (this section will deal only with memory; see Chapter 6, Microsoft SQL Server 2000 Failover Clustering, for details on processors). Here is where the version of your operating system greatly plays into how you ultimately configure your memory for each SQL Server virtual server. For example, if you are using Windows 2000 Advanced Server, you are limited to 8 GB of memory, eight processors, and two nodes. If you need two, three, four, or even more instances, this could be challenging to say the least, especially if they all need more than 4 GB of memory each! Switching to Windows Server 2003 Enterprise Edition, you can now have up to 32 GB of memory (not to mention two more nodes that would be available to you).

With multiple instances on a stand-alone server, all instances need to play well together in the proverbial sandbox. Although you do not need to worry about failover, you do need to worry about performance and about ensuring that you are not starving one instance to feed another. Your operating choice and the amount of memory it can handle will influence the design of your instances, and, specifically, the amount of memory you can allocate.

Think of your instances as glasses of liquid. If you have two glasses that are half-full, you can pour the contents of one into the other. If you have two full glasses , you cannot combine them. This is how your SQL Servers will behave in a failover or when you combine instances on one server. To prevent this, no matter what you employ (PAE/AWE or /3GB), you should set the Max Server Memory option on each instance to physically cap the amount of memory each instance will get.

Consider this example: you have a two-node cluster with three SQL Server instances. Instance 1, which currently resides on Node 1, has 7 GB of memory configured using AWE. Node 2 houses Instance 2, which has 5 GB of memory allocated with AWE, and one instance that is not using any advanced options and is currently using 1.5 GB of memory. Each node individually has a total of 8 GB of physical memory. This is shown in Figure 14-12.

Figure 14-12: Nodes prior to failover.

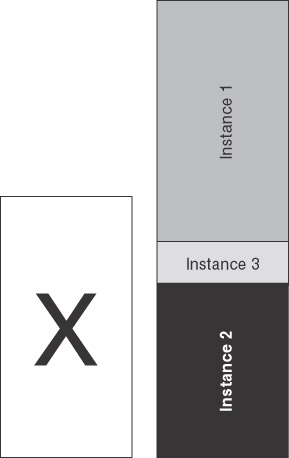

A problem occurs on Node 1, causing a failover. The instance tries to restart on Node 2, but it cannot. You now have a failed set of SQL Server resources that cannot come online, causing an availability problem. Why? Well, to use AWE, you need to guarantee that the memory you told SQL Server to use will be there. A failover is basically a stop and start on another server. In this case, you were already using 6.5 of the available 8 GB of memory. 6.5 + 7 does not equal 8, and it does not matter how big your page file is. Your instance will not grab the memory for AWE. It might, however, start up, but it will only grab the amount of memory that it can up to 2 GB (see earlier for how AWE behaves in a failover). Now you are risking memory starvation of the operating system, and this can affect all instances. This is shown in Figure 14-13.

Figure 14-13: Nodes after failover.

So how do you prevent this? By planning, these are the types of scenarios you need to play out in your head when proposing the number of instances on a cluster. Your management might see a two-node cluster and say, Hey, why is this second server doing nothing? Get something on there! , leaving you in the state of the previous example.

The way you can balance memory in this case would be to give two instances that need a fixed amount of memory 3 GB each and let the other one be dynamic (or set it to 1 GB or 1.5 GB to allow room for the operating system). If you cannot live with this, you will need another cluster or set of servers to handle this workload. The recommendation was basically to halve the memory, which might lead some to believe that you are wasting resources, but again, is performance or availability your goal? By definition, if you are reading this book and implementing things like a cluster, you are probably saying that availability is your highest priority. If you never have to failover and you left the instances at 7 GB, 5 GB, and dynamic, things would work great. But once a failover happened , all bets would be off.

64-bit does change the rules, as stated above. Because all memory is dynamic and you have much more available to you, you should set a fixed minimum amount of memory that SQL Server will need to use and let SQL Server manage anything else it needs. This way, you will always guarantee that your instance will have enough memory to do what it needs to do, but you are giving it the option of grabbing more memory if necessary.

| Important | When employing AWE, using 64-bit, or setting a fixed amount of memory, the SQL Server instance upon startup will need to physically reserve the configured amount of physical memory. This process is not instantaneous. The more memory you allocate to SQL Server, the more time it will take to start. In a failover scenario, it will definitely increase your failover time, so take that into account. It is usually measured in minutes, rather than seconds. |

EAN: 2147483647

Pages: 137