Application server virtualization


Virtualization in the application server environment

In many IT operating environments, every J2EE application has its own customized environment with dedicated server hardware. This can lead to many problems:

- Server hardware is underused. Studies show that machines in UNIX® environments are only 10% utilized. Machine use in Windows-based platforms is even worse.

- Quality of service to end-users is poor. If a Web application experiences a surge in traffic there may not be sufficient resources to maintain good response times. Adding new server hardware to the environment can be time consuming and costly.

- Response to business needs for deploying new applications is slow. Setting up a new server environment for each application is time consuming and complex.

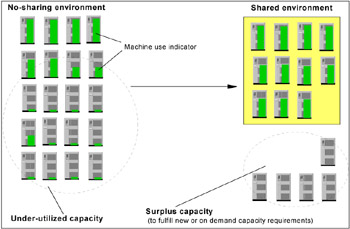

As shown in Figure 2-1, a virtualized application server environment addresses these issues. A virtualized application server environment means that the IT organization provides virtual application server resources to lines of business, as opposed to physical server resources that are dedicated to each application. In addition, the lines of business are charged for exactly the resources they consume, thereby converting a fixed cost to a variable cost.

Figure 2-1: Transformation to a virtualized server environment
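The usage-based charging described above can be illustrated with a minimal sketch. It assumes usage is metered in CPU-hours and billed at a flat rate; the `UsageRecord` type, the `charge_by_usage` function, the rate, and the line-of-business names are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    """One metered usage entry (hypothetical structure)."""
    line_of_business: str
    cpu_hours: float


def charge_by_usage(records, rate_per_cpu_hour):
    """Return the charge per line of business: consumed CPU-hours times the rate."""
    hours = {}
    for record in records:
        hours[record.line_of_business] = (
            hours.get(record.line_of_business, 0.0) + record.cpu_hours
        )
    return {lob: total * rate_per_cpu_hour for lob, total in hours.items()}


# Illustrative data only: two lines of business sharing one environment.
records = [
    UsageRecord("retail", 300.0),
    UsageRecord("insurance", 100.0),
    UsageRecord("retail", 100.0),
]
charges = charge_by_usage(records, rate_per_cpu_hour=20.0)
print(charges)
```

Each line of business pays only for what it consumed in the period, so its cost rises and falls with use rather than staying fixed at the price of dedicated hardware.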

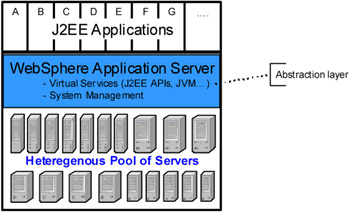

The sharing of physical resources requires an abstraction layer that decouples the consumer of the resource from the physical resource. With J2EE applications, this abstraction layer is handled by middleware software, such as the WebSphere Application Server. As depicted in Figure 2-2, WebSphere Application Server allows virtual computing services to be provided to applications through established standards, regardless of the physical implementation of the physical environment.

Figure 2-2: The role of WebSphere Application Server in a virtualized environment

Challenges

If virtualization were easy, it would be more pervasive today. There are, however, many technical challenges to attaining virtualization. For an application server environment, the three main challenges are:

- Application isolation: handling the potential negative impact of any failing application on the remaining healthy applications in a shared environment

- Failover and high-availability: managing inevitable scheduled and nonscheduled outages

- System administration: reducing operational complexity of managing shared resources

The remainder of this chapter discusses approaches to the architectural and system management techniques for solving each of these challenges.

Preparing the application server environment for virtualization

Several steps are essential to preparing an IT operating environment for server virtualization. The next sections review the importance of monitoring, prioritizing applications, and standardizing.

Monitoring and the autonomic loop

Monitoring resources and application performance is a critical element of the virtualized operating environment. The benefits provided by monitoring include:

- Historic data to assist with planning future resource needs

- Real time data to quickly react to unexpected resource needs

- Ability to measure adherence to performance service level agreements (SLAs)

- Proactive alerts and detail data to quickly detect and solve application problems

- Resource usage data by application, necessary for allocating costs appropriately

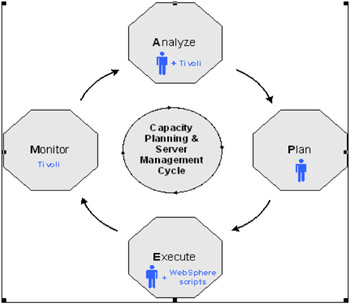

The four tasks involved in maintaining the right amount of resources in a virtualized server environment can be described using the autonomic loop, as shown in Figure 2-3:

Monitor: Monitor resource needs using tools such as those provided by Tivoli®.

Figure 2-3: Monitoring and the autonomic loop

Analyze: Analyze real time and historic data and compare to required SLAs or resource use thresholds.

Plan: Decide what actions are required, if any, to ensure applications are getting the required resources and end users are satisfied with quality of service.

Execute: Execute the actions required to maintain efficient use of resources and customer satisfaction. The execute step covers actions such as adding or removing resources to the shared server environment, both overall and for specific applications.
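The four steps can be sketched as one pass through a small control loop. This is only an illustration under stated assumptions: the in-memory `pool` dictionary stands in for real monitoring data, the utilization thresholds are arbitrary, and none of the function names correspond to a Tivoli or WebSphere API.

```python
# Assumed thresholds for illustration; real values would come from SLAs.
HIGH_WATER = 0.80
LOW_WATER = 0.30


def monitor(pool):
    """Monitor: take a snapshot of (app, cpu utilization, server count)."""
    return [(app, state["cpu"], state["servers"]) for app, state in pool.items()]


def analyze(samples):
    """Analyze: compare each sample with the resource use thresholds."""
    findings = {}
    for app, cpu, servers in samples:
        if cpu > HIGH_WATER:
            findings[app] = "overloaded"
        elif cpu < LOW_WATER and servers > 1:
            findings[app] = "underused"
    return findings


def plan(findings):
    """Plan: decide how many servers to add or remove for each flagged app."""
    deltas = {"overloaded": 1, "underused": -1}
    return {app: deltas[finding] for app, finding in findings.items()}


def execute(pool, actions):
    """Execute: apply the planned changes to the shared environment."""
    for app, delta in actions.items():
        pool[app]["servers"] += delta


def run_cycle(pool):
    """Run monitor, analyze, plan, and execute once; return the actions taken."""
    actions = plan(analyze(monitor(pool)))
    execute(pool, actions)
    return actions


# Hypothetical applications and measurements.
pool = {
    "orders": {"cpu": 0.92, "servers": 2},
    "reports": {"cpu": 0.10, "servers": 3},
    "catalog": {"cpu": 0.55, "servers": 2},
}
actions = run_cycle(pool)
print(actions)
```

Keeping each step as a separate function mirrors the loop in Figure 2-3: any one step can be performed by a person or a tool without changing the others.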

Today these tasks involve combining monitoring tools, such as those from Tivoli, with personnel involvement in several areas. For example, the execute step is one of the most tedious and costly system administration tasks in the application server environment. Later in this chapter the use of scripts is reviewed as a way to improve the consistency, precision, and speed of completing this task. Future on-demand products will automate this cycle, creating what IBM refers to as an autonomic loop.

Prioritizing applications

In a virtualized server environment it's important to differentiate applications based on an assigned priority. The main argument for assigning priority is that some applications are more important to the business than others. Prioritizing applications ultimately raises the quality of service and reduces costs because:

- When server resources are constrained, higher priority applications are allotted resources of the virtualized environment ahead of the lower priority applications, ensuring that end users remain satisfied with the performance of the higher priority applications.

- It is less costly to deliver lower levels of service to some applications. Although application owners will want the best available qualities of service, there may be no business justification for the extra cost.

In addition to higher qualities of service to end users, higher priority applications can be provided with SLAs that also provide faster response time for system support from IT operations. With a variable IT cost structure, prioritization can also provide a means for additional refinement of the cost allocation.

For the purpose of discussion, three priorities are defined: high, medium, and low. The high priority workloads are the business critical applications. These workloads are promised the highest levels of service and the owners are charged accordingly. As resources become constrained, the low priority workloads are deprived of resources before the medium priority workloads.
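A minimal sketch of this ordering follows, assuming capacity is counted in abstract resource units and each application requests a fixed number of them. The `allocate` function, the application names, and the numbers are hypothetical.

```python
# Lower number means the application is served earlier.
PRIORITY_ORDER = {"high": 0, "medium": 1, "low": 2}


def allocate(capacity, demands):
    """Grant resource units in priority order until capacity runs out.

    demands is a list of (app, priority, requested_units) tuples.
    Returns a dict mapping each app to the units it was granted.
    """
    granted = {}
    remaining = capacity
    ordered = sorted(demands, key=lambda demand: PRIORITY_ORDER[demand[1]])
    for app, _priority, requested in ordered:
        share = min(requested, remaining)
        granted[app] = share
        remaining -= share
    return granted


# Illustrative demand that exceeds the 10 units available.
demands = [
    ("batch-reports", "low", 4),
    ("order-entry", "high", 5),
    ("intranet", "medium", 3),
]
grants = allocate(10, demands)
print(grants)
```

With 12 units requested and 10 available, the shortfall falls entirely on the low priority workload, which matches the deprivation order described above.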

Standardizing

Standardizing on the abstraction layer (Figure 2-2) allows sharing. The standards become part of the contract between the consumer of the service, the applications from the lines of business, and the provider of the server resources. Any application that has to deviate from the contract requires its own standalone, or nonshared, environment. Complexity decreases the efficiency of sharing resources and increases costs. Therefore, a simpler abstraction layer increases the efficiency of resource sharing and reduces the cost to provide a service. It is important to standardize not only on a standard such as J2EE, but also on a specific version of the standard. It's possible to attain even more decoupling and simplification by using an application framework on top of the application program interfaces (APIs) for J2EE and Java 2 Platform, Standard Edition (J2SE).
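The contract idea can be sketched as a simple placement check. The sketch assumes the contract pins one exact J2EE version and one exact J2SE version. The `Contract` type, the `placement` function, and the version strings are illustrative assumptions, not values from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Contract:
    """Versions the shared environment standardizes on (illustrative)."""
    j2ee_version: str
    j2se_version: str


def placement(contract, required_j2ee, required_j2se):
    """Return "shared" if the application conforms to the contract, else "standalone"."""
    conforms = (
        required_j2ee == contract.j2ee_version
        and required_j2se == contract.j2se_version
    )
    return "shared" if conforms else "standalone"


# Hypothetical contract and two applications with different requirements.
shared_contract = Contract(j2ee_version="1.4", j2se_version="1.4")
conforming = placement(shared_contract, "1.4", "1.4")
deviating = placement(shared_contract, "1.3", "1.4")
print(conforming, deviating)
```

An application that deviates on either version falls outside the shared pool, which is why standardizing on a specific version matters as much as standardizing on J2EE itself.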

