Technical Issues in Constructing a Data Warehouse

Because of Internet commerce and intense business-to-business activity, today's enterprise data warehouses are no longer confined within the four walls of a corporation. A mobile workforce and partnerships with vendors and suppliers are forcing data warehouse builders to provide secure access to corporate data from many parts of the world.

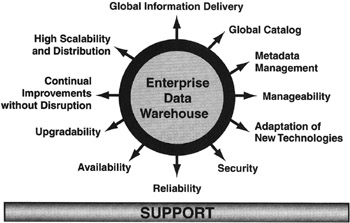

Data warehouses must therefore operate in 24-7 mode. How is this possible? Figure 1-3 shows technical issues that need attention to support such environments.

Figure 1-3: The Technical Aspects of a Data Warehouse.

Follow the figure clockwise, starting with Global Information Delivery.

Global Information Delivery

Global information delivery services are a must in today's data warehouse environment. The Internet and intranets are becoming the primary vehicles for information delivery. Though Internet technology remains very fluid, it is still the first choice for delivering information to end users. One must understand the robustness (scalability, security, reliability, and cost) of the Web services needed to deliver that information. Moreover, it is critical that such Web services integrate seamlessly with the data objects in all data warehouse layers and share similar development and management environments with them.

Global Catalog

A global catalog goes hand in hand with the information delivery services. Today, it is hard to find what you need because each catalog is based on an individual data warehouse, so end users spend a lot of time searching. Support for one global catalog is a key component of a global information delivery system.
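As a rough illustration of the idea, the sketch below merges several per-warehouse catalogs into one searchable index. The warehouse names, object names, and the `build_global_catalog` and `search` helpers are all hypothetical; a real global catalog would sit on top of each product's own metadata repository.

```python
from collections import defaultdict

# Hypothetical per-warehouse catalogs: warehouse name -> {object name: description}.
local_catalogs = {
    "sales_dw": {"revenue_by_region": "Quarterly revenue by sales region"},
    "finance_dw": {
        "cost_center_actuals": "Actual costs by cost center",
        "revenue_by_region": "Booked revenue by region (finance view)",
    },
}


def build_global_catalog(catalogs):
    """Merge per-warehouse catalogs into one index keyed by object name."""
    index = defaultdict(list)
    for warehouse, objects in catalogs.items():
        for name, description in objects.items():
            index[name].append((warehouse, description))
    return dict(index)


def search(global_catalog, keyword):
    """Return sorted (object, warehouse) pairs whose name or description mentions keyword."""
    keyword = keyword.lower()
    hits = []
    for name, entries in global_catalog.items():
        for warehouse, description in entries:
            if keyword in name.lower() or keyword in description.lower():
                hits.append((name, warehouse))
    return sorted(hits)


global_catalog = build_global_catalog(local_catalogs)
print(search(global_catalog, "revenue"))
```

With a single index, one search finds the same object in every warehouse that holds it, instead of requiring one lookup per warehouse.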

Metadata Management

Metadata management plays an integral role in the quality of information managed in a data warehouse. Metadata is information about the data, such as data source type, data types, content description and usage, transformation/derivation rules, security, and audit control attributes. Access to metadata is not limited to the data warehouse administrator but must also be made available to end users. For example, users want to know how revenue figures are calculated. Metadata also defines rules to qualify data before storing it in the database. The end result is a data warehouse that contains complete and clean data.
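A minimal sketch of what such a metadata record might look like, assuming a simple in-memory representation. The `FieldMetadata` class, its attribute names, and the sample revenue derivation are illustrative assumptions, not the structure of any particular product.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class FieldMetadata:
    """Hypothetical metadata record for one data warehouse field."""
    name: str
    source_system: str          # where the data comes from
    data_type: type             # expected Python type of each value
    description: str            # content description shown to end users
    derivation: str = ""        # human-readable transformation/derivation rule
    rules: List[Callable[[object], bool]] = field(default_factory=list)

    def qualifies(self, value):
        """Check the type first, then every qualification rule."""
        return isinstance(value, self.data_type) and all(
            rule(value) for rule in self.rules
        )


revenue = FieldMetadata(
    name="net_revenue",
    source_system="orders_oltp",
    data_type=float,
    description="Net revenue per order line",
    derivation="gross_amount - discounts - returns",
    rules=[lambda v: v >= 0.0],  # assumed rule: revenue is never negative
)

incoming = [120.5, -3.0, "n/a", 0.0]
clean = [v for v in incoming if revenue.qualifies(v)]
rejected = [v for v in incoming if not revenue.qualifies(v)]
print(clean, rejected)
```

The same record serves both audiences: the `derivation` text answers the end user's question about how a figure is calculated, while the `rules` list qualifies data before it is loaded.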

Manageability

Managing a traditional central data warehouse is a relatively simple task. However, in today's environment where data objects are distributed across the world and also reside on end-user workstations (laptops), it is a nightmare to manage such environments. This is exactly what we are facing today. One must question vendors on how they will manage such mobile data warehouse environments. As part of its Customer Relationship Management (CRM) initiative, SAP is planning a Mobile Sales Automation (MSA) server that integrates and manages data between the SAP Business Information Warehouse and the data sets on a salesperson's laptop.

Adaptation of New Technologies

Implementation of an enterprise data warehouse is usually a multi-year project. By the time you go live with a data warehouse, you will probably have found several new technologies that are more powerful and flexible for managing, accessing, and presenting information. Technically, as long as you have built your data warehouse on an architecture that uses accepted industry-standard APIs, you should be able to incorporate emerging technologies without extensive reengineering. For example, Microsoft has a generic data access API named Open Database Connectivity (ODBC) that accesses data from just about any relational or flat-file source.

Because ODBC cannot access data from multidimensional data sources, Microsoft proposed another standard, OLE DB for OLAP (ODBO), to access data from multidimensional databases. Today, if your existing data warehouse environment uses open APIs, you can easily join information from multidimensional and relational data sources by using ODBO alongside ODBC. ODBO, for example, integrates multidimensional data from SAP BW and Microsoft's OLAP server. Soon, in-memory database technology will emerge to process large volumes of data in memory for fast data access and manipulation. Can your data warehouse use new technologies without overhauling the existing design? Make sure that the data warehouse can adopt emerging technologies with the least amount of work. Look for data warehouse construction technologies that have published APIs and that automatically understand the interface definitions of new objects and the methods used to manipulate data.

Security

In the past, data warehouse security was much easier to manage because data warehouses were centrally controlled and end users remained within the walls of a company. Today this has changed. Individual roles are not defined by whom you report to but rather by what your functions are. Moreover, mobile workforces across the globe take on multiple roles. This makes security administration a big challenge. A data warehouse environment must support a very robust security administration by using roles and profiles that are information object behavior-centric rather than pure database-centric. For example, a role such as cost center auditor defined in a data warehouse allows one to view cost center information for a specific business unit for a given quarter but not to print or download cost center information to the workstation.
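The cost center auditor example can be sketched as a role that is scoped to an information object and to the actions allowed on it, rather than to database tables. The `Role` class, the `is_allowed` helper, and the business unit and quarter values below are hypothetical and only illustrate the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Role:
    """Hypothetical role scoped to an information object and its behavior."""
    name: str
    info_object: str
    business_unit: str
    quarter: str
    allowed_actions: frozenset


def is_allowed(role, action, info_object, business_unit, quarter):
    """Grant a request only if the action and the full scope match the role."""
    return (
        action in role.allowed_actions
        and info_object == role.info_object
        and business_unit == role.business_unit
        and quarter == role.quarter
    )


cost_center_auditor = Role(
    name="cost_center_auditor",
    info_object="cost_center",
    business_unit="BU-EMEA",          # assumed business unit identifier
    quarter="2000-Q1",                # assumed quarter label
    allowed_actions=frozenset({"view"}),  # no "print" or "download"
)

print(is_allowed(cost_center_auditor, "view", "cost_center", "BU-EMEA", "2000-Q1"))
print(is_allowed(cost_center_auditor, "download", "cost_center", "BU-EMEA", "2000-Q1"))
```

Because the check is expressed in terms of actions on an information object, the same role definition applies wherever the object is delivered, whether in a central warehouse or on a laptop.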

Reliability and Availability

Reliability and availability are important to the successful use of a data warehouse. Users always want to know whether the information is reliable. How is the Key Performance Indicator (KPI) calculated? Additionally, does the infrastructure (networking gear, hardware, database management systems) consist of reliable products that can handle large data volumes? Are such systems highly available? Can online backup and recovery/restoration of data objects be performed without having the user log off? If a system fails, how quickly does a standby system come online? Can it preserve end users' state information and allow them to restart their work where they left off when primary systems crash? Based on data warehouse availability needs, one can use hardware clustering and appropriate high-availability software technologies to build a 24-7 data warehouse environment.

New data warehouse construction products provide methods to make systems highly available during data refresh. Products like SAP BW refresh a copy of an existing data object for incoming new data updates while end users keep using the existing information objects. However, when a new information object is completely refreshed, all new requests are automatically pointed to the newly populated information object. Such technologies must be an integral component of enterprise data warehouses.
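The refresh-a-copy-then-repoint pattern described above can be sketched as follows. This is a generic illustration under simplifying assumptions (one process, in-memory rows), not SAP BW's actual mechanism; the `InfoObject` class and its methods are hypothetical names.

```python
import threading


class InfoObject:
    """Hypothetical information object that stays readable during refresh."""

    def __init__(self, rows):
        self._active = list(rows)
        self._lock = threading.Lock()

    def read(self):
        # Readers get a reference to the currently active version.
        # Versions are never modified in place, so the snapshot stays consistent.
        with self._lock:
            return self._active

    def refresh(self, load_new_rows):
        # Build the new version outside the lock so readers are never blocked
        # while data is loading.
        shadow = list(load_new_rows())
        # Repoint in one short step; all new requests see the refreshed data.
        with self._lock:
            self._active = shadow


sales = InfoObject([("Q1", 100), ("Q2", 120)])
in_flight = sales.read()                       # a user's report in progress
sales.refresh(lambda: [("Q1", 100), ("Q2", 120), ("Q3", 135)])

print(len(in_flight), len(sales.read()))
```

The report that was already running keeps its original rows, while any request issued after the switch sees the refreshed object.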

Upgradability and Continual Improvements

The ability to upgrade and continually improve a data warehouse environment without disruptions is necessary for a 24-7 environment. What this means is that if any component of a data warehouse (database management system, hardware, network, or software) needs upgrades, it must not lock out users, preventing them from doing their regular tasks. Moreover, any time a new functionality is added to the environment, it must not disrupt end-user activities. This may seem impossible, but it's not. For example, one can apply certain software patches or expand hardware components (storage) while users are using the data warehouse environment. During such upgrades, end users may notice some delays in retrieving information, but they are not locked out of the system.

Scalability and Data Distribution

Scalability and data distribution are major challenges facing today's data warehouses. Often, the scalability of an environment is equated with data volume or number of users. This assumption is somewhat true for OLTP applications. In a data warehouse, however, hundreds of users may access a predefined report without any performance degradation, whereas a few analysts can bring the entire system to a grinding halt by running very complicated queries or doing data mining against large data volumes. Consider how a data warehouse environment can scale to support both types of users, those performing complex and those performing light data manipulations, under one umbrella by using application servers.

Due to large data volume movement requirements, data warehouses consume enormous network resources (four- to five-fold more than a typical OLTP transaction environment). In an OLTP environment, one can predict network bandwidth requirements because the data content associated with each transaction is somewhat fixed. In data warehousing, it is very hard to estimate the network bandwidth needed to meet end-user needs because the data volume may change with each request, depending on the data selection criteria. Moreover, large data sets are distributed to remote locations across the world to build local data marts. The network must be scalable to accommodate large data movement requests.

To build dependent data marts, you need to extract large data sets from a data warehouse and copy them to a remote server. A data warehouse must support very robust and scalable services to meet data distribution and/or replication demands. These services also provide encryption and compression methods to optimize usage of network resources in a secured fashion.
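A minimal sketch of the compression side of such a distribution service, using only the Python standard library. The `pack_extract` and `unpack_extract` helpers and the sample rows are hypothetical. Encryption is deliberately left out because the standard library has no cipher module; the SHA-256 digest here only detects corruption in transit and is not a substitute for encryption.

```python
import hashlib
import json
import zlib


def pack_extract(rows):
    """Serialize and compress an extract; return the blob and a SHA-256 digest."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    compressed = zlib.compress(payload, 9)   # 9 = highest compression level
    digest = hashlib.sha256(payload).hexdigest()
    return compressed, digest


def unpack_extract(compressed, digest):
    """Decompress on the remote data mart server and verify integrity."""
    payload = zlib.decompress(compressed)
    if hashlib.sha256(payload).hexdigest() != digest:
        raise ValueError("extract corrupted in transit")
    return json.loads(payload.decode("utf-8"))


# Hypothetical extract: repetitive warehouse rows tend to compress well.
rows = [{"cost_center": "CC-100", "quarter": "Q1", "amount": 1250.0}] * 500

blob, digest = pack_extract(rows)
restored = unpack_extract(blob, digest)
print(len(json.dumps(rows)), "bytes raw ->", len(blob), "bytes compressed")
```

In practice the compressed blob would be sent over an encrypted channel or encrypted with a vetted cryptography library before leaving the warehouse.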
