NFS Server Availability

Network File System (NFS) is Sun Microsystems' technology that lets a server expose its file systems to other servers. Originally developed so that Unix systems could remotely mount the file systems of other Unix servers, it has come to be widely used by systems and application architects. NFS is also now available on Windows-based platforms and is interoperable with Unix and Windows servers and clients.

NFS has its issues. There are performance trade-offs and security implications for using it, but when tuned correctly and implemented in the right way, it can serve as a powerful solution to share data between multiple nodes (a cheaper option than using Network Attached Storage, or NAS) and, in many cases, it does the job just as effectively.

NFS is widely used to provide a shared file system mountable across many Unix (or Windows) servers. You'll now look at some implementation options where people are using NFS within WebSphere environments.

NFS Options

NFS is essentially a shared file system technology. Similar in function to NAS, Storage Area Networks (SAN), and Server Message Block (SMB), which is a Windows-based file system network protocol, NFS provides a simple and cost-effective way of sharing data between hosts.

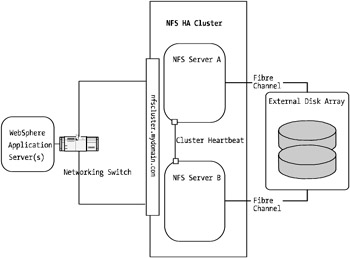

Prior to the likes of NAS and Samba (using SMB), NFS was the main network file sharing technology available. Even now, NAS has limitations in being able to share common data with multiple hosts in a read-write configuration, but NFS supports it well. Figure 8-2 shows a typical example of an NFS solution.

Figure 8-2: Basic NFS implementation

The difficulty with NFS configurations is that there's a fair amount of file locking and I/O management that takes place within the NFS server itself. Because of this, it's not easy to load balance or cluster NFS without some solid design considerations.

Consider for a moment that you have a cluster of WebSphere application servers, all accessing data files present on a remote NFS server. If you're wondering what you could use NFS for in a WebSphere environment, consider some of these options:

- WebSphere operational log directory sharing
- Commonly deployed Enterprise Archive (EAR)/Web Archive (WAR)/Enterprise JavaBean (EJB)/Java Archive (JAR) files directory
- Application data area, for example, temporary files and images created on the fly such as Portable Document Format (PDF) files
- Raw data being read into the WebSphere applications

If the NFS server went down, what would happen to your WebSphere application servers? If your WebSphere environment was using the NFS server for operational purposes, chances are things would get wobbly pretty quickly. If it was an application-related area or data store, then your applications would most likely cease functioning. You need some form of load balancing or clustered NFS solution to ensure that your data is always available.

There are several options available for highly available NFS solutions. The following are some of the products that provide solid, robust solutions for WebSphere-based applications using NFS:

- EMC Celerra HighRoad (a hybrid NFS/Fibre/SCSI solution)
- Microsoft Windows internal clustering (NT, 2000, XP, and 2003 Server editions)
- Veritas Cluster Server (most operating systems, such as Solaris, Linux, AIX, HP-UX, Windows)
- Sun Microsystems SunCluster (Sun Solaris systems only)
- High Availability Cluster Multiprocessing (HACMP) (IBM AIX systems only)
- MC/ServiceGuard (HP-UX systems only)
- HP TruCluster (Digital/Compaq/HP Tru64 Unix systems only)
- PolyServe Matrix Server

All of these, and many more on the market, provide solid NFS cluster solutions. In fact, NFS is one of the easier forms of clustering available given that it's what I term a quasi-stateless technology. That is, imagine that you have two NFS servers, both unplugged from a network yet both configured as the same host (in other words, with the same IP address, the same host name, the same local disk structure and files, and so on). If you have a third Unix server that's an NFS client and remotely mounted to one of the NFS servers, you'd be able to happily access files and data over the network to the NFS server.

If you pulled the network cable out of one NFS server and plugged it into the second NFS server, nine times out of ten, your NFS client wouldn't know the difference, apart from a possible one- to two-second delay. Of course, if you did this during a large, single file I/O, depending on how you tuned your NFS server and clients, it may result in additional latency and the file I/O being retried (retrieval would start again).

NFS is inherently a resilient technology. For this reason, it's a solid and cost-effective data sharing technology for J2EE-based applications.

Implementation and Performance

Using one of the NFS cluster offerings mentioned, you can easily set up an automated network file sharing service that's highly available. The Unix vendor cluster technologies such as SunCluster, MC/ServiceGuard, and so forth provide solid solutions for high availability.

You need to consider the following, however, when looking at your highly available NFS implementation:

- Network infrastructure performance
- Failover time
- File I/O locking and management

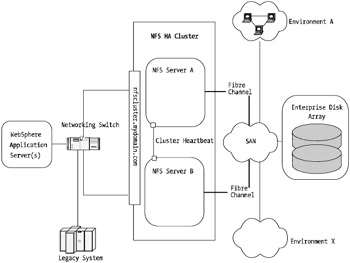

You'll now look at each of these in some more detail. Figure 8-3 shows a highly available NFS implementation.

Figure 8-3: Highly available NFS implementation

In Figure 8-3, an NFS cluster exists as well as a number of WebSphere-based hosts. This implementation is advanced given that it shares storage behind the NFS servers via a SAN. A highly available NFS environment doesn't need this. However, if you're sharing data across more than one NFS server node, you'll require this type of technology or something similar to it.

Many system vendors manufacture dual-headed disk arrays that allow two hosts to connect to the array at the same time. It's then left up to the high-availability cluster to determine which node sees those disks and controls them. In most cases, only one server can directly connect to a disk array volume group at the same time.

| Note | There are advanced technologies available from EMC and Veritas that provide high-end data clusters that can expose shared disk volumes via clustered file systems with up to eight nodes serving as active-active data bearers. This level of environment is beyond the scope of this book. |

Given that NFS communicates over a standard Transmission Control Protocol/Internet Protocol (TCP/IP) network (also one of NFS's values), it's bound by normal network-related issues such as routing, Denial of Service (DoS) attacks, poor performance, and so forth.

As you'll see in the next section, with something as critical as your WebSphere NFS-based data store, always ensure there are multiple routes between the client and servers.

Consider using a private network, internal to your WebSphere cluster and NFS cluster, that carries only internal WebSphere traffic and NFS traffic, and leave customer-facing traffic to a separate network. This will help mitigate any chance of DoS attacks that directly impact your file I/O. It'll also ensure that any large NFS I/O that takes place doesn't impact customer response times.

Furthermore, use the fastest network technology available. For example, 100 megabits per second (Mbps) Ethernet is essentially the same speed as an old 10 megabytes per second (MBps) Small Computer System Interface (SCSI) bus. This may be sufficient for many implementations, but remember the earlier discussion of disk I/O when you saw how quickly a SCSI bus of 80MBps can be fully consumed! Consider using Gigabit Ethernet, which will operate, in theory, at the same speed as Fibre Channel (100MBps). In practice, you'll see speeds closer to 50MBps. Still, it'll burst higher and is better than 5MBps to 10MBps!
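The arithmetic behind these figures can be sketched in a few lines. This is only an illustration: the function names and the 500MB file size are assumptions chosen for the example, and the results are raw line rates before any protocol overhead.

```python
def mbps_to_mbytes_per_sec(megabits_per_second: float) -> float:
    """Convert a link rate in megabits per second to megabytes per second."""
    return megabits_per_second / 8.0


def transfer_seconds(size_mbytes: float, rate_mbytes_per_sec: float) -> float:
    """Time to move size_mbytes at a sustained rate, ignoring latency."""
    return size_mbytes / rate_mbytes_per_sec


if __name__ == "__main__":
    # Raw line rates for the two Ethernet speeds discussed in the text.
    for name, mbps in [("100Mbps Ethernet", 100), ("Gigabit Ethernet", 1000)]:
        print(f"{name}: {mbps_to_mbytes_per_sec(mbps):.1f}MBps raw line rate")

    # Hypothetical 500MB file at the 50MBps practical figure quoted above,
    # and at a 10MBps rate typical of the slower link.
    print(f"500MB at 50MBps: {transfer_seconds(500, 50):.0f} seconds")
    print(f"500MB at 10MBps: {transfer_seconds(500, 10):.0f} seconds")
```

Running the numbers for your own largest files is a quick way to see whether the slower link would hold up under load.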

Failover time within your NFS cluster is another important issue. You don't want to run a cluster that takes 30 seconds to fail over. When configuring your NFS cluster, ensure that the cluster is only operating a highly available NFS agent and not servicing other components within the same cluster. Most well-configured NFS cluster solutions will fail over within a matter of seconds. Any longer than this, and some of the WebSphere timeout settings discussed in other chapters will start to kick in and cause problems for your users.
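One way to see whether a failover stays within your time budget is to time a simple metadata call against the shared mount from a client. The following is a minimal sketch, not a vendor tool: `probe_path`, the `/mnt/nfs_share` mount point, and the five-second budget are all hypothetical names and values chosen for illustration.

```python
import os
import threading
import time
from typing import Optional


def probe_path(path: str, budget_seconds: float) -> Optional[float]:
    """Return how long os.stat(path) took, in seconds.

    Returns None if the call failed or did not finish within the budget.
    """
    result = {}

    def worker() -> None:
        start = time.monotonic()
        try:
            os.stat(path)
            result["elapsed"] = time.monotonic() - start
        except OSError:
            # Missing path, stale handle, or similar: treat as unavailable.
            result["elapsed"] = None

    # A daemon thread is used because a stat on an unresponsive mount may
    # block for a long time; the caller should not block with it.
    thread = threading.Thread(target=worker, daemon=True)
    thread.start()
    thread.join(budget_seconds)
    if thread.is_alive():
        return None
    return result.get("elapsed")


if __name__ == "__main__":
    # Hypothetical mount point and budget; adjust both for your environment.
    elapsed = probe_path("/mnt/nfs_share", budget_seconds=5.0)
    if elapsed is None:
        print("shared path unavailable or slower than the budget")
    else:
        print(f"shared path answered in {elapsed:.3f} seconds")
```

Running a probe like this in a loop while you trigger a test failover gives a rough, client-side view of how long the outage actually lasts.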

File locking and I/O management is another important issue. Although NFS is versatile, it can introduce some quirky problems with environment integrity if you try to force it to perform double-file I/O locking. This situation occurs when you have two NFS clients trying to write and update the same file at the same time. Although technically this is possible, there are well-documented situations with NFS where this will cause NFS server daemons to go into an unhappy state for a short period while they try to manage the file I/O.

Avoid having your WebSphere-based NFS application clients writing to the same file at the same time. If you need to write to one particular file from many locations, consider developing a distributed file writer service within your application and have all WebSphere application clients communicate with it for file writing I/O.
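The core idea of such a writer service is that many producers hand their records to a single writer, so only one process ever has the file open for writing. The sketch below shows that pattern in-process with Python threads purely for illustration; `SingleFileWriter` and `demo` are hypothetical names, and in a real deployment the `submit` call would be a remote call from each application server rather than a local method.

```python
import os
import queue
import tempfile
import threading


class SingleFileWriter:
    """Serializes appends from many producers through one writer thread."""

    _STOP = object()

    def __init__(self, path: str) -> None:
        self._path = path
        self._queue = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def submit(self, line: str) -> None:
        """Queue one record; safe to call from any thread."""
        self._queue.put(line)

    def close(self) -> None:
        """Write everything queued so far, then stop the writer thread."""
        self._queue.put(self._STOP)
        self._thread.join()

    def _run(self) -> None:
        # Only this thread ever opens or writes the file.
        with open(self._path, "a", encoding="utf-8") as handle:
            while True:
                item = self._queue.get()
                if item is self._STOP:
                    break
                handle.write(f"{item}\n")
                handle.flush()


def demo() -> int:
    """Four producers submit 25 records each; returns the lines written."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "shared.log")
        writer = SingleFileWriter(path)

        def produce(client_id: int) -> None:
            for i in range(25):
                writer.submit(f"client-{client_id} record {i}")

        producers = [threading.Thread(target=produce, args=(n,)) for n in range(4)]
        for producer in producers:
            producer.start()
        for producer in producers:
            producer.join()
        writer.close()

        with open(path, encoding="utf-8") as handle:
            return sum(1 for _ in handle)


if __name__ == "__main__":
    print(f"lines written: {demo()}")
```

Because all writes funnel through one queue, the NFS server sees a single client updating the file, whatever the number of producers.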

If your WebSphere applications are simply writing to (or reading from) their own files on the shared NFS storage, then this is fine, and you'll avoid the locking-related latency issues described previously.
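A simple way to keep each writer on its own file is to build the file name from something unique to the writer, such as its host name and process ID. The helper below is a hypothetical illustration; `private_file_path`, the `/mnt/nfs_share/logs` directory, and the naming scheme are assumptions, not a WebSphere convention.

```python
import os
import socket


def private_file_path(shared_dir: str, prefix: str, suffix: str = ".log") -> str:
    """Build a per-host, per-process file path inside a shared directory."""
    host = socket.gethostname()
    return os.path.join(shared_dir, f"{prefix}-{host}-{os.getpid()}{suffix}")


if __name__ == "__main__":
    # Hypothetical shared directory on the NFS mount.
    print(private_file_path("/mnt/nfs_share/logs", "app"))
```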
