Executing Reports in Real Time

| | ||

| | ||

| | ||

Executing Reports in Real Time

The most common way to use SQL Server technology to access real-time data is to use Reporting Services. A report written against the transaction system will, by default, be executed on demand using live data. If your system is small, your usage is light, you have few cross-system integration requirements, and no one needs ad hoc access to the real-time data, you can serve real-time data from standard reports.

The main drawback of this approach is that it stresses the transaction system. Many companies decide to build a DW/BI system in part to move reporting off of the transaction systems. A popular report that queries a large section of the relational tables is going to be very expensive to run in real time. You shouldnt abandon Reporting Services immediately, however. It provides several caching features that will help you address this performance problem.

What if your users need to see integrated and cleaned data in real time? Dont abandon Reporting Services yet. Its really easy to source a report directly from an Integration Services package, and depend on Integration Services to perform the transformation and integration.

Later in this chapter we talk about how to populate the relational data warehouse database in real time. The same Reporting Services techniques that we discuss in this section can, of course, be used against a real-time data warehouse database.

Serving Reports from a Cache

To improve the performance of the reporting system, Reporting Services provides some features to reduce latency and use pre-stored reports. The first technique is to cache reports on a schedule. A user cant tell the difference between a cached report and a normal on-demand report, except for the date and time the report ran. The first user to run the report has to wait for the query to execute and the report to render. Subsequent users simply pull the report from the cache. You specify a schedule for each report, detailing how long the report can be cached before it expires. Once the cached report expires , the next query will result in a new, refreshed report being created and cached. Users may be surprised to see uneven query performance from one time the report is run to the next .

Reporting Services caching sounds a lot like Analysis Services proactive caching, which we discuss later in this chapter. The two features address the same problem, but the Analysis Services technique is more complex because it supports ad hoc use of the data.

The second very easy technique for improving performance of reports against live data is to create a snapshot report. A snapshot report is a feature of Reporting Services Enterprise Edition that saves the reports body, including the dataset, in the report catalog database. A snapshot report addresses the problem of cached reports uneven performance. Someonewe cant predict whomis going to pay the price of executing a cached report after the old cache has expired. With a snapshot report you could instead schedule the execution of the report to run on a schedule and store its results. Youd probably choose this approach if youre worried that the CEO would be the one who might execute the expired cached report and have to wait for the refresh.

| Reference | See the Books Online topic Report Caching in Reporting Services for more information about cached reports. See the Books Online topic Setting Report Execution Properties for more information about snapshot reports. |

Sourcing a Report from an Integration Services Package

As Table 17.1 highlighted, the main problem with connecting Reporting Services directly to the transaction database is that you cant transform or integrate the data. Although a report can be fed from multiple sources, if those sources are misaligned or contain bad data, theres not much you can do. If you cant fix the data in the query SQL used to define the reports data sources, youre out of luck.

Add Integration Services into the picture, and your options expand. The obvious solution is to run an Integration Services package that populates a table or tables, and then run a report on those tables. However, if the transformed data doesnt have permanent valueperhaps because your DW/BI system is updated daily and you plan to leave it that wayyou can run a package that generates a report as its output. Or, more accurately, you can create a report that runs an Integration Services package as its input.

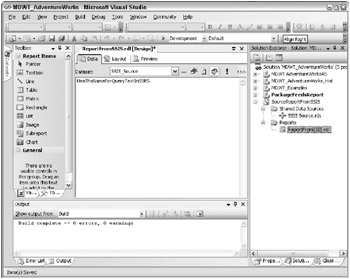

This is surprisingly easy to do. Create an Integration Services package with a Data Flow task that generates the rowset that you want as input to the report. This package can be as simple or complex as you like. In the final step of the Data Flow, where normally youd write the data to a table, send it to a Data Reader transform. The Data Reader transform doesnt have a nice user interface. You use the advanced properties editor. No worriesall you need to do is to name the transform, as illustrated in Figure 17.1, and also to choose which columns are included. Remember what you named the Data Reader transform because youll use this name in the report definition in Reporting Services. Save the packageremember where you saved itand go to a reporting project to define the report.

Figure 17.1: Setting up the DataReader destination transform

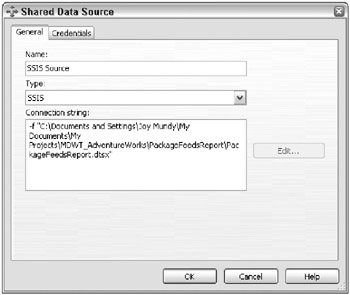

Create the data source for the report, as illustrated in Figure 17.2. The data source type is SSIS, rather than the normal OLE DB or SQL Server data source type. The connection string is simply the location of the package. In Figure 17.2 were pointing to a package in the file system.

Figure 17.2: Setting up the reports data source

The report definition is exactly the same as normal, except for the query text. The query text is simply the name of the Data Reader destination transform that you created in Integration Services, as you can see in Figure 17.3.

Figure 17.3: Setting up the reports query text

This is a very simple technique for serving up a predefined report on a constructed dataset. The biggest drawback is that theres no built-in way to parameterize the report. With a modest development effort you could use environment variables to communicate between a custom reporting portal and Integration Services, but thats hardly an out-of-the-box experience.

| | ||

| | ||

| | ||

EAN: N/A

Pages: 125

- Step 1.1 Install OpenSSH to Replace the Remote Access Protocols with Encrypted Versions

- Step 3.1 Use PuTTY as a Graphical Replacement for telnet and rlogin

- Step 4.1 Authentication with Public Keys

- Step 4.3 How to Generate a Key Pair Using OpenSSH

- Step 6.2 Using Port Forwarding Within PuTTY to Read Your E-mail Securely