Capacity Planning

Capacity planning, also called right sizing, is the process of developing a working model, or hypothesis, of the load placed on your server and the server power needed to handle that load. When you successfully determine the right size for your server(s), you will have achieved the following five goals, in order of importance:

Knowable, Unknowable, Known, and Unknown

As you might rightly suppose, it is hard to completely balance all the factors involved in capacity planning. There are known unknowns, such as the following:

There are also unknown unknowns, such as these:

Let's face it: Capacity planning is a very difficult task because no one can predict the future, and technology advances are uncertain. Let's start on some solid ground, with what we defensively call the knowable knowns. There are two basic approaches to capacity planning, and they hinge on whether you have, or can get, established historical data for your usage pattern. If you can't, you must rely on the experience of others who are using a server like the one you intend to deploy, in a situation that is similar to yours or can at least be extrapolated to it. When you can establish historical data, you have more control over the design you choose and the result you can achieve. Let's look at these two situations in a little more detail.

The "art" of capacity planning can yield to statistical analysis, although many people find it easier to determine that a certain type of server will not be able to maintain a load than to determine that it can support that load. Consider a server with a disk system capable of 250 IOPS (input/output operations per second) when you need to attain 500 IOPS. You know that this system won't give you the performance you want, but you don't, and can't, know whether doubling the number of disks will achieve the rate you want. At some point in the system's design, you are going to max out your I/O bus, and you can't know whether that point lies somewhere between 250 and 500 IOPS or beyond.

Here is one fact you can absolutely take to the bank, no matter how powerful your server: Your users and staff will find a way to consume all of its resources at some point. This is a variant of Parkinson's Law (www.heretical.com/miscella/parkinsl.html): "Work expands so as to fill the time available for its completion."
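The disk example above can be written down as a simple model in which aggregate IOPS grow linearly with the number of disks until a shared I/O bus ceiling is hit. This is a minimal sketch, not a measurement method: the per-disk rating (125 IOPS) and the bus ceilings (400 and 800 IOPS) are hypothetical values, and the bus ceiling is precisely the number the text says you cannot know in advance. The sketch only shows how the answer to "will doubling the disks work?" flips depending on where that ceiling sits.

```python
import math
from typing import Optional

# Sketch only: assumes every disk adds the same number of IOPS until a shared
# I/O bus ceiling is reached. Per-disk and bus figures are hypothetical.


def array_iops(disks: int, iops_per_disk: float, bus_limit_iops: float) -> float:
    """IOPS the array can deliver: linear in disk count, capped by the bus."""
    return min(disks * iops_per_disk, bus_limit_iops)


def disks_needed(target_iops: float, iops_per_disk: float,
                 bus_limit_iops: float) -> Optional[int]:
    """Disks required to reach target_iops, or None if the bus caps out first."""
    if target_iops > bus_limit_iops:
        return None  # adding disks cannot help; the bus is the bottleneck
    return math.ceil(target_iops / iops_per_disk)


# Hypothetical 2-disk system delivering 250 IOPS (125 IOPS per disk).
print(array_iops(2, 125, 400))      # 250
print(array_iops(4, 125, 400))      # doubling the disks on a 400-IOPS bus
print(disks_needed(500, 125, 400))  # bus ceiling below the target
print(disks_needed(500, 125, 800))  # bus ceiling above the target
```

With a 400-IOPS bus, doubling to four disks tops out at 400 IOPS and no disk count reaches 500; with an 800-IOPS bus, four disks are enough.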
Sizing Tools

When you configure a server for a particular purpose, you can assume that every operating system and application makes different demands on your server. Because software architectures vary widely, you need to characterize the operating system and the application to determine what load they are capable of supporting. A hypothetical database application might be multithreaded and able to distribute its load among a number of processors; this would argue strongly for one or a few large SMP (symmetric multiprocessing) systems. Experience with similar large databases shows that I/O often isn't the problem; raw computing power is. The system crunches a lot of data in response to a short query and returns an answer to the client that doesn't contain much data. Applications that require persistent connections, channels, or sockets are good candidates for scale-up server consolidations.

Upon closer inspection, you might find that the vendor's published data shows that a particular operating system/database combination scales linearly from one to four processors but starts to lose multithreaded efficiency above four processors. By the time you get to eight processors, those eight processors run at 60% efficiency. This is in fact a very common scenario, and it arises because the crossbar architecture that couples two quad-processor sets into an eight-way system isn't 100% efficient and doesn't scale perfectly. With that information, you have a first cut at right sizing your server: a quad or an eight-way server is the limit beyond which you do not want to go.

Now consider a webserver deployment, the traditional scale-out (rather than scale-up) application, in which very little processing is done at the server but I/O requirements are extreme.
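Before turning to the webserver case, the vendor figures for the database example can be reduced to a little arithmetic. This is a minimal sketch that multiplies processor count by per-processor efficiency; only the linear scaling up to four processors and the 60% efficiency at eight come from the scenario, and treating the one-, two-, and four-way configurations as exactly 100% efficient is a simplifying assumption.

```python
# Sketch: effective capacity = processors x per-processor efficiency.
# Efficiencies follow the vendor-data scenario in the text; treating the
# 1-, 2-, and 4-way configurations as exactly 100% efficient is an assumption.
SCALING_EFFICIENCY = {1: 1.00, 2: 1.00, 4: 1.00, 8: 0.60}


def effective_cpus(processors: int) -> float:
    """Throughput expressed in fully efficient processor-equivalents."""
    return processors * SCALING_EFFICIENCY[processors]


def marginal_gain(smaller: int, larger: int) -> float:
    """Fractional throughput gained by moving to the larger configuration."""
    return effective_cpus(larger) / effective_cpus(smaller) - 1.0


for n in sorted(SCALING_EFFICIENCY):
    print(f"{n}-way: {effective_cpus(n):.1f} processor-equivalents")
print(f"4-way -> 8-way: {marginal_gain(4, 8):.0%} more throughput for twice the CPUs")
```

Under these figures the eight-way box delivers 4.8 processor-equivalents against 4.0 for the four-way, so doubling the processor count buys only about 20% more throughput.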
Any application that doesn't require, or can't maintain, a persistent connection benefits less from a faster server than from more network connections and more available servers to connect to. That's why webservers tend to be deployed in server farms. With a webserver, much of the processing gets done on the client side. When you examine the metrics of the particular webserver/operating system combination you intend to use, you may find that the application can't make good use of more than two processors (another common scenario). This architecture therefore calls for a solution that uses many more computers, and in which the amount of I/O that can flow through the network interface is the key bottleneck.

Note: IBM has a Redbook called "IBM e-server pSeries Sizing and Capacity Planning: A Practical Guide" that you can download from its site, at www.redbooks.ibm.com/abstracts/SG247071.html?Open. This book contains a lot of conceptual material, including benchmarks, guidelines for application-specific sizing, a listing and description of available sizing tools, and IBM's Balanced System Guidelines. Although the Redbook is aimed at selecting a pSeries server, its sizing principles apply to other servers as well.

To decide how to size your server when you can't characterize the potential system loading, you need to look at similar systems deployed in the field. If you are lucky, you may know of other sites running servers and applications similar to yours, and you can piggyback on their experience. Chances are, though, that you don't, or that those companies aren't willing to share their experience with you, so the next logical place to start developing your capacity plan is with the server vendor selling you the system or the application vendor selling you the software.
IBM, for example, has a configuration tool called eConfig that is available only to IBM and IBM Business Partners. If you are purchasing a server from a large OEM such as Hewlett-Packard, IBM, or Dell, you might find that the company has developed online sizing tools that can aid your selection of one of its servers. For example, here are some sizing tools that you can use for different applications:

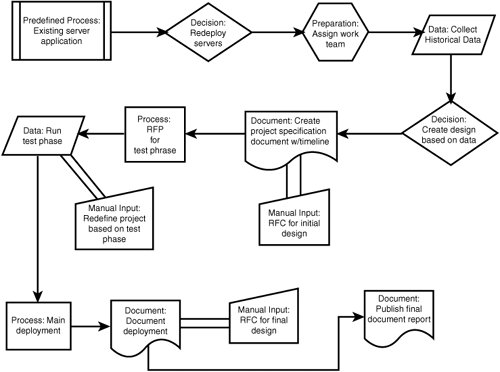

Although these tools identify only particular models from Dell and Hewlett-Packard, you can be relatively certain that if the sizer suggests a dual-processor server can handle the load, someone else's dual-processor system, or even your own home-built one, will probably be in the right ballpark.

Capacity Planning Principles

It is possible to be a lot more precise when historical data guides your selection of a server. In a real-world scenario, you can determine the parameters necessary for optimum performance by examining logs from the previous generation of server(s). With a running system, you can analyze how resources are utilized and where any bottlenecks might be, and you can determine the nature of the I/O for which you need to tune your system. Figure 20.2 shows the common steps in a capacity planning project.

Figure 20.2. A right sizing flowchart is a very useful tool when you are involved in a server resizing project.

Let's consider an example of how a capacity planning project might proceed. The principles involved in capacity planning based on historical data are as follows:

An Example of Historical Data

At Company XYZ, the decision was made to consolidate a large number of Microsoft Exchange 2000 servers at several sites into a smaller number of more powerful systems at a single site: a scale-up consolidation project. The rationale for this approach is that fewer servers offer fewer points of failure and lower management costs. Exchange is an application that requires four persistent connections (sockets or channels) to be made to clients, which makes it a good candidate for a scale-up project. The project manager was tasked with right sizing the system(s) to be purchased and developing the parameters under which the system would operate. The company had determined that instant messaging (IM) reduced its total cost of ownership (TCO) and wanted to add server resources to its Exchange operation. There was also concern that, as messages carried more and more rich content, their storage needed to be consolidated to handle the additional requirements. The first step in the analysis was to collect data on the volume and types of email in use at the company. It turned out that logging was turned on and that a set of reports summarized email traffic in 48 increments each day (one every 30 minutes). From these data the following can be determined:

Based on these characteristics, the project set the following goals:

Exchange 2000's database engine is tuned to read and write in 8KB blocks, or extents. (Other applications differ, however: SQL Server, for example, is designed to use 16KB blocks.) The idea behind disk tuning is to match the READ/WRITE characteristics of the server to the application. For Exchange, the two most important characteristics, the ones that the performance logs and literature identify as bottlenecks, are the READ-to-WRITE ratio and the I/O loading, known as the request rate. The actual ratio, as measured by utilities such as IOMeter or IOBench, was 3 to 1 READs to WRITEs (on average). From the Windows Performance Monitor and other utilities, you can measure IOPS, which is a measure of storage system loading. The two relevant disk counters were Pending I/O Disk Requests and Average Disk Queue Length. These factors define the average I/O per user: approximately 1.5 IOPS per user. Memory counters also identified server RAM as a potential bottleneck.

System Selection

With all the aforementioned design parameters and application characteristics, the people specifying the server consolidation project were able to make various system selections. The original dual-processor servers were replaced with eight-way servers in a ratio of 4 to 1. Each eight-way server was expected to support 3,500 users with the user characteristics described in the previous section. The Exchange messaging system was installed in a single domain on the same data center network. A large storage array system was chosen, and each Exchange data store was placed onto a large single volume with a 4KB cluster size specified. This cluster size was a little larger than the mean message, so a message of mean size nearly filled a cluster without wasting much additional disk space.
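The measured figures above (1.5 IOPS per user, a 3:1 READ-to-WRITE ratio, 3,500 users per server) are enough to sketch the disk calculation that precedes any vendor lookup. This is a minimal sketch under stated assumptions, not the project's actual method: the mirror write penalty of 2 assumes a mirrored layout such as the RAID 0+1 array Company XYZ chose, and the per-disk rating of 180 IOPS for a 15,000rpm disk and the 25% growth margin are hypothetical placeholders for the storage vendor's real performance data.

```python
import math

# Figures from the Company XYZ measurements described in the text.
USERS_PER_SERVER = 3_500
IOPS_PER_USER = 1.5
READS_PER_WRITE = 3             # 3:1 READ-to-WRITE ratio

# Assumptions for illustration only, not project data:
MIRROR_WRITE_PENALTY = 2        # mirroring (RAID 1 or 0+1) writes every block twice
IOPS_PER_DISK = 180             # hypothetical rating for one 15,000rpm disk
GROWTH_MARGIN = 0.25            # hypothetical 25% headroom for growth


def host_iops(users: int) -> float:
    """I/O requests per second issued by the server for this many users."""
    return users * IOPS_PER_USER


def backend_iops(users: int) -> float:
    """Physical disk operations per second after the mirror write penalty."""
    total = host_iops(users)
    writes = total / (READS_PER_WRITE + 1)
    reads = total - writes
    return reads + writes * MIRROR_WRITE_PENALTY


def disks_required(users: int) -> int:
    """Disks needed with growth headroom, rounded up to an even count for mirroring."""
    disks = math.ceil(backend_iops(users) * (1 + GROWTH_MARGIN) / IOPS_PER_DISK)
    return disks + disks % 2


print(host_iops(USERS_PER_SERVER))       # 5250.0
print(backend_iops(USERS_PER_SERVER))    # 6562.5
print(disks_required(USERS_PER_SERVER))  # 46
```

The point of the sketch is the shape of the calculation: writes cost more than reads on a mirrored array, so the READ-to-WRITE ratio changes the disk count even when the per-user request rate stays the same.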
To attain the desired I/O, the number of 15,000rpm dual-homed Fibre Channel disks required was calculated from the storage vendor's performance data; a disk cache size was also established so that the desired throughput, plus an additional margin for growth, could be achieved.

Note: In selecting system components, the general rule of thumb is to determine which component is going to cost the most money and/or which is going to be the most difficult to fix if it isn't correctly sized for the project. That component should then be selected to best meet the needs of the project. For the Exchange project described in the Company XYZ example, that evaluation could have led the project manager to pay particular attention either to the servers (the most difficult to correct) or to the large disk arrays (the most expensive). Usually the most expensive component is also the most difficult to correct.

Because WRITEs are slow and represent a large portion of the disk I/O, Company XYZ chose a RAID 0+1 array, which provides the speed of striping with the first-line redundancy of mirroring. It also decided to back up each data store with a BCV (business continuance volume) for failover in case the primary disk storage array failed. Backups were collected as snapshots, with a full backup of a BCV made every week and stored to tape. It was also established that snapshots took around two hours on average and that switching over to a BCV upon system failure could be effected within two hours, with most of that time taken up by bringing the BCV up-to-date with transaction logs. In this particular example, the system was found to have a life cycle of around 3.5 years. There were also some surprises.
It was found that virus scanning software had an unexpected impact on performance, that adjusting a user's disk quota size affected what is called the single instance ratio (a single file is stored on disk with many pointers to it from users' mailboxes), and that the system could be scaled successfully.

Although this example is based on an Exchange server consolidation project, which means its data are probably not useful to your project, the principles it exposes are useful to anyone trying to deploy a right-sized server. That is, by understanding your application's characteristics, measuring performance bottlenecks and key characteristics, and extrapolating correctly from your particular loading, you can make good equipment selections. As with all large projects, if your deployment installs multiple systems, it is prudent to create a test bed to determine whether your assumptions are borne out by fact before adding more systems.

Note: At this point, any deployment project should have produced a project specification that everyone involved has signed off on. The last thing you want is to deploy a server sized to service 3,500 users and then have a high-level manager say that he or she said "5,500 users" or, worse yet, "350 users." Having management sign off on a document ameliorates these kinds of problems, and it lays out all the assumptions you've made when you can't establish a completely factual model. Therefore, any good specification should document its caveats, include a risk analysis, and make conservative promises about performance. A project specification doesn't need to be as long as a Department of Defense fighter-jet specification; it can be a spreadsheet and an email message, but it's something you need to create whenever you are spending someone else's money.
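The single instance ratio mentioned among the Company XYZ surprises can be illustrated with a small model of a mailbox store. This is a minimal sketch, assuming the ratio is defined as the number of mailbox references divided by the number of physically stored message copies; the mailbox names, message IDs, and the `single_instance_ratio` helper are hypothetical, and the sketch does not model how quotas change the ratio.

```python
from typing import Dict, List

# Hypothetical model: each mailbox holds pointers (message IDs) to messages
# that the store keeps only once on disk.


def single_instance_ratio(mailboxes: Dict[str, List[str]]) -> float:
    """Mailbox references per physically stored message (assumed definition)."""
    references = sum(len(ids) for ids in mailboxes.values())
    stored = {msg_id for ids in mailboxes.values() for msg_id in ids}
    return references / len(stored) if stored else 0.0


# One message sent to three users is stored once but referenced three times.
store = {
    "alice": ["msg-1", "msg-2"],
    "bob": ["msg-1"],
    "carol": ["msg-1", "msg-3"],
}
print(round(single_instance_ratio(store), 2))  # 1.67
```

Under this definition a ratio of 1.0 means no message is shared, and higher values mean single-instance storage is saving more disk space.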
