Section 4.3. Page Frames

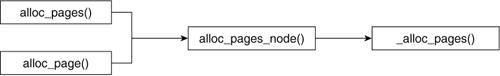

4.3. Page FramesA page frame is the unit of memory that holds a page. When a process requests memory, the kernel can request a page frame. In the same manner, when a page frame is no longer being used, the kernel releases it to make it available for another process. The following functions are called to perform those operations. 4.3.1. Functions for Requesting Page FramesA few routines can be called to request a page frame. We can split up the functions into two groups depending on the type of their return value. One group returns a pointer to the page struct (return type is void *) that corresponds to the page frame that is allocated to the request. This includes alloc_pages() and alloc_page(). The second group of functions returns the 32-bit virtual address (return type is a long) of the first allocated page. These include __get_free_page() and __get_dma_pages(). Many of these routines are simply wrappers around a lower-level interface. Figures 4.2 and 4.3 show the calling graphs of these routines. Figure 4.2. alloc_*() Calling Graph

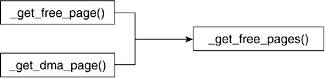

Figure 4.3. get_*_page() Calling Hierarchy

The following macros and functions refer to the number of pages being handled (requested or released) in powers of 2. Pages are requested or released in contiguous page frames in powers of 2. We can request 1, 2, 4, 8, 16, and so on groups of pages.[6]

4.3.1.1. alloc_pages() and alloc_page()alloc_page() requests a single page and thus has no order parameter. This function fills in a 0 value when calling alloc_pages_node(). Alternatively, alloc_pages() can request two order pages: ----------------------------------------------------------------------------- include/linux/gfp.h 75 #define alloc_pages(gfp_mask, order) \ 76 alloc_pages_node(numa_node_id(), gfp_mask, order) 77 #define alloc_page(gfp_mask) \ 78 alloc_pages_node(numa_node_id(), gfp_mask, 0) ----------------------------------------------------------------------------- As you can see from Figure 4.2, both macros then call __alloc_pages_node(), passing it the appropriate parameters. alloc_pages_node() is a wrapper function used for sanity checking of the order of requested page frames:

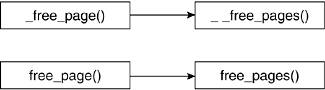

As you can see, if the order of pages requested is greater than the allowed maximum order (MAX_ORDER), the request for page allocation does not go through. In alloc_page(), this value is always set to 0 and so the call always goes through. MAX_ORDER, which is defined in linux/mmzone.h, is set to 11. Thus, we can request up to 2,048 pages. The __alloc_pages() function performs the meat of the page request. This function is defined in mm/page_alloc.c and requires knowledge of memory zones, which we discussed in the previous section. 4.3.1.2. __get_free_page() and __get_dma_pages()The __get_free_page() macro is a convenience for when only one page is requested. Like alloc_page(), it passes a 0 as the order of pages requested to __get_free_pages(), which then performs the bulk of the request. Figure 4.3 illustrates the calling hierarchy of these functions. ----------------------------------------------------------------------------- include/linux/gfp.h 83 #define __get_free_page(gfp_mask) \ 84 __get_free_pages((gfp_mask),0) ----------------------------------------------------------------------------- The __get_dma_pages() macro specifies that the pages requested be from ZONE_DMA by adding that flag onto the page flag mask. ZONE_DMA refers to a portion of memory that is reserved for DMA accesses: ----------------------------------------------------------------------------- include/linux/gfp.h 86 #define __get_dma_pages(gfp_mask, order) \ 87 __get_free_pages((gfp_mask) | GFP_DMA,(order)) ----------------------------------------------------------------------------- 4.3.2. Functions for Releasing Page FramesThere are multiple routines for releasing page frames: two macros and the two functions for which they each serve as a wrapper. Figure 4.4 shows the calling hierarchy of the page release routines. We can again split up the functions into two groups. This time, the split is based on the type of parameters they take. The first group, which includes __free_page() and __free_pages(), takes a pointer to the page descriptor that refers to the page that is to be released. The second group, free_page() and free_pages(), takes the address of the first page to be released. Figure 4.4. *free_page*() Calling Hierarchy

The __free_page() and free_page() macros release a single page. They pass a 0 as the order of pages to be released to the functions that perform the bulk of the work, __free_pages() and free_pages(), respectively: ----------------------------------------------------------------------------- include/linux/gfp.h 94 #define __free_page(page) __free_pages((page), 0) 95 #define free_page(addr) free_pages((addr),0) ----------------------------------------------------------------------------- free_pages() eventually calls __free_pages_bulk(), which is the freeing function for the Linux implementation of the buddy system. We explore the buddy system in more detail in the following section. 4.3.3. Buddy SystemWhen page frames are allocated and deallocated, the system runs into a memory fragmentation problem called external fragmentation. This occurs when the available page frames are spread out throughout memory in such a way that large amounts of contiguous page frames are not available for allocation although the total number of available page frames is sufficient. That is, the available page frames are interrupted by one or more unavailable page frames, which breaks continuity. There are various approaches to reduce external fragmentation. Linux uses an implementation of a memory management algorithm called the buddy system. Buddy systems maintain a list of available blocks of memory. Each list will point to blocks of memory of different sizes, but they are all sized in powers of two. The number of lists depends on the implementation. Page frames are allocated from the list of free blocks of the smallest possible size. This maintains larger contiguous block sizes available for the larger requests. When allocated blocks are returned, the buddy system searches the free lists for available blocks of memory that's the same size as the returned block. If any of these available blocks is contiguous to the returned block, they are merged into a block twice the size of each individual. These blocks (the returned block and the available block that is contiguous to it) are called buddies, hence the name "buddy system." This way, the kernel ensures that larger block sizes become available as soon as page frames are freed. Now, look at the functions that implement the buddy system in Linux. The page frame allocation function is __alloc_pages() (mm/page_alloc.c). The page frame deallocation functions is __free_pages_bulk(): ----------------------------------------------------------------------------- mm/page_alloc.c 585 struct page * fastcall 586 __alloc_pages(unsigned int gfp_mask, unsigned int order, 587 struct zonelist *zonelist) 588 { 589 const int wait = gfp_mask & __GFP_WAIT; 590 unsigned long min; 591 struct zone **zones; 592 struct page *page; 593 struct reclaim_state reclaim_state; 594 struct task_struct *p = current; 595 int i; 596 int alloc_type; 597 int do_retry; 598 599 might_sleep_if(wait); 600 601 zones = zonelist->zones; 602 if (zones[0] == NULL) /* no zones in the zonelist */ 603 return NULL; 604 605 alloc_type = zone_idx(zones[0]); ... 608 for (i = 0; zones[i] != NULL; i++) { 609 struct zone *z = zones[i]; 610 611 min = (1<<order) + z->protection[alloc_type]; ... 617 if (rt_task(p)) 618 min -= z->pages_low >> 1; 619 620 if (z->free_pages >= min || 621 (!wait && z->free_pages >= z->pages_high)) { 622 page = buffered_rmqueue(z, order, gfp_mask); 623 if (page) { 624 zone_statistics(zonelist, z); 625 goto got_pg; 626 } 627 } 628 } 629 630 /* we're somewhat low on memory, failed to find what we needed */ 631 for (i = 0; zones[i] != NULL; i++) 632 wakeup_kswapd(zones[i]); 633 634 /* Go through the zonelist again, taking __GFP_HIGH into account */ 635 for (i = 0; zones[i] != NULL; i++) { 636 struct zone *z = zones[i]; 637 638 min = (1<<order) + z->protection[alloc_type]; 639 640 if (gfp_mask & __GFP_HIGH) 641 min -= z->pages_low >> 2; 642 if (rt_task(p)) 643 min -= z->pages_low >> 1; 644 645 if (z->free_pages >= min || 646 (!wait && z->free_pages >= z->pages_high)) { 647 page = buffered_rmqueue(z, order, gfp_mask); 648 if (page) { 649 zone_statistics(zonelist, z); 650 goto got_pg; 651 } 652 } 653 } ... 720 nopage: 721 if (!(gfp_mask & __GFP_NOWARN) && printk_ratelimit()) { 722 printk(KERN_WARNING "%s: page allocation failure." 723 " order:%d, mode:0x%x\n", 724 p->comm, order, gfp_mask); 725 dump_stack(); 726 } 727 return NULL; 728 got_pg: 729 kernel_map_pages(page, 1 << order, 1); 730 return page; 731 } ----------------------------------------------------------------------------- The Linux buddy system is zoned, which means that lists of available page frames are maintained separate by zone. Hence, every search for available page frames has three possible zones from which to get the page frames. Line 586The gfp_mask integer value allows the caller of __alloc_pages() to specify both the manner in which to look for page frames (action modifiers). The possible values are defined in include/linux/gfp.h, and Table 4.2 lists them.

Table 4.3 provides a pointer to the zonelists that correspond to the modifiers from gfp_mask.

Line 599The function might_sleep_if() takes in the value of variable wait, which holds the logical bit AND of the gfp_mask and the value __GFP_WAIT. The value of wait is 0 if __GFP_WAIT was not set, and the value is 1 if it was. If Sleep-inside-spinlock checking is enabled (under the Kernel Hacking menu) during kernel configuration, this function allows the kernel to block the current process for a timeout value. Lines 608628In this block, we proceed to go through the list of zone descriptors once searching for a zone with enough free pages to satisfy the request. If the number of free pages satisfies the request, or if the process is allowed to wait and the number of free pages is higher than or equal to the upper threshold value for the zone, the function buffered_rmqueue() is called. The function buffered_rmqueue() takes three arguments: the zone descriptor of the zone with the available page frames, the order of the number of page frames requested, and the temperature of the page frames requested. Lines 631632If we get to this block, we have not been able to allocate a page because we are low on available page frames. The intent here is to try and reclaim page frames to satisfy the request. The function wakeup_kswapd() performs this function and replenishes the zones with the appropriate page frames. It also appropriately updates the zone descriptors. Lines 635653After we attempt to replenish the page frames in the previous block, we go through the zonelist again to search for enough free page frames. Lines 720727This block is jumped to after the function determines that no page frames can be made available. If the modifier GFP_NOWARN is not selected, the function prints a warning of the page allocation failure, which indicates the name of the command that was called for the current process, the order of page frames requested, and the gfp_mask that was applied to this request. The function then returns NULL. Lines 728730This block is jumped to after the requested pages are found. The function returns the address of a page descriptor. If more than one page frames were requested, it returns the address of the page descriptor of the first page frame allocated. When a memory block is returned, the buddy system makes sure to coalesce it into a larger memory block if a buddy of the same order is available. The function __free_pages_bulk() performs this function. We now look at how it works: ----------------------------------------------------------------------------- mm/page_alloc.c 178 static inline void __free_pages_bulk (struct page *page, struct page *base, 179 struct zone *zone, struct free_area *area, unsigned long mask, 180 unsigned int order) 181 { 182 unsigned long page_idx, index; 183 184 if (order) 185 destroy_compound_page(page, order); 186 page_idx = page - base; 187 if (page_idx & ~mask) 188 BUG(); 189 index = page_idx >> (1 + order); 190 191 zone->free_pages -= mask; 192 while (mask + (1 << (MAX_ORDER-1))) { 193 struct page *buddy1, *buddy2; 194 195 BUG_ON(area >= zone->free_area + MAX_ORDER); 196 if (!__test_and_change_bit(index, area->map)) ... 206 buddy1 = base + (page_idx ^ -mask); 207 buddy2 = base + page_idx; 208 BUG_ON(bad_range(zone, buddy1)); 209 BUG_ON(bad_range(zone, buddy2)); 210 list_del(&buddy1->lru); 211 mask <<= 1; 212 area++; 213 index >>= 1; 214 page_idx &= mask; 215 } 216 list_add(&(base + page_idx)->lru, &area->free_list); 217 } ----------------------------------------------------------------------------- Lines 184215The __free_pages_bulk() function iterates over the size of the blocks corresponding to each of the free block lists. (MAX_ORDER is the order of the largest block size.) For each order and until it reaches the maximum order or finds the smallest possible buddy, it calls __test_and_change_bit(). This function tests to see whether the buddy page to our returned block is allocated. If so, we break out of the loop. If not, it sees if it can find a higher order buddy with which to merge our freed block of page frames. Line 216The free block is inserted into the proper list of free page frames. |

EAN: N/A

Pages: 134