8.4 The Router as the Firewall

This section describes two separate options for the configuration of a router as an actual firewall. The first is configuring the router as the only firewall on your network, and the second is the configuration of a router as a second firewall on your network. In each case, the configuration would be the same; only the implementation philosophy differs.

Before explaining how a router alone could operate as a fairly robust firewall, we will spend some time re-examining why you would not want to do this if you can avoid it. Note that this entire argument is based on the conclusions of your risk analysis. We are assuming that the need for security at this point outweighs the additional cost of a separate firewall.

As has been mentioned several times in this text, effective countermeasures are layered. Defense in depth is the best approach to implementing a security policy. If one device or countermeasure should fail or be circumvented, both of which are circumstances you should count on, we need to have other countermeasures to continue providing protection. If your router is your only firewall, and if it is incorrectly configured, your defenses are not as robust as you think. In fact, unless you take the time to carefully audit your configuration, you may simply have a false sense of security.

By using a firewall in addition to a filtering router, you have provided additional protection to your network. If your router is compromised, your internal network at least remains secure. Some implementations take this philosophy to the point of placing multiple firewalls in series. This configuration ensures that if one firewall should be compromised or misconfigured, a second one is there to continue the protection. In this configuration, firewalls from different vendors are often employed to ensure that an undiscovered vulnerability affecting one device does not affect the second. In a real sense, by configuring your router as a firewall along with a separate firewall, you will have implemented just this philosophy.

Performance has often been cited as a reason to not configure a router as a complex firewall. In days of yore, that was the case. Routers were not powerful enough to rapidly forward packets and enforce access-list rules at the same time. Times have changed and the phenomenal increases we have seen in computing power and architectures apply to routers as well. No longer are routers simply a "poor man's firewall;" rather, they are robust and configurable combination routers/firewalls in their own right. There are only two situations in which this argument should be a consideration for your implementation. The first is when you are committed to making the most of existing (and somewhat dated) hardware. The second is if you are processing packets at high speeds for optical (OC) type Internet connections. Remember that, for the most part, the speed of your LAN and the firewall is not going to be the limiting element for network performance. The speed of the WAN link is going to be the bottleneck; and $50,000 for a high-speed firewall is not going to change that.

The primary argument against using a router as your single firewall device is the lack of redundancy. In security terms, this could affect all the things we are trying to ensure: confidentiality, integrity, and especially availability.

For the configuration of a router as a firewall, we will want to include all of the rules we configured when implementing a router to work as a screen for another firewall. That means dropping obviously invalid packets and controlling access to the router itself. We also need to consider the rest of the security policy.

As with the rest of security, the actual configuration of the firewall is one of the last steps to take when implementing a firewall. There are several steps that precede the implementation of rules. The first is examining the security policy and determining what rules need to be implemented. Do not give in to the temptation to skip the security policy step, survey the network to learn what applications are being used, and simply configure the firewall around them. Doing this creates a reactive configuration that only reflects the current state of the network without evaluating the security of the applications you are allowing through the firewall. In effect, if you follow this approach, you are simply making sure that insecure applications have access through the firewall.

Instead, create your firewall rules based on your security policy. While there are a number of ways to create configuration rules from policy, standards, and procedures, I prefer a decidedly low-tech approach using a piece of notepaper. I create a box on a blank sheet of paper that represents the firewall. I then make one side of the box "inside" and the other side of the box "outside." If a DMZ is part of the deployment, I will also include another section of the box that is labeled "DMZ." This serves to remind me of the firewall's view of the world. From the point of view of the firewall, there is only traffic entering its interfaces and traffic leaving its interfaces. All rules must be written to reflect this somewhat egocentric point of view.

Once I am reminded how the firewall looks at things, I then start combing the standards and procedures documents, looking for rules that the firewall must enforce. Because a security policy is a high-level document, it should not contain configuration statements such as "allow traffic from any source on the Internet to access the SMTP mail server at 200.1.1.100 on port 25 only." Instead, a security policy standards document might include statements such as:

- "Our perimeter will allow Internet access to our DMZ only, which will contain the following essential application servers: DNS, SMTP, POP, HTTP, an HTTP/FTP proxy, and an FTP server.
- Access to the Internet from the internal network will be limited to application traffic not easily proxied and will be limited to connections initiated from our clients only. Essential applications are Telnet for VT100 terminal emulation and ICMP traffic."

Of course, there are many more statements of principle that might be found in a security policy, but the above should be enough to give us a chance to practice our firewall logic. We will find that even from such simple statements, there are a number of lessons we can learn about the router/firewall and the configuration of firewalls in general.

From this point, it is simply a matter of assigning ports and addresses and diagramming their directionality on the notepaper. Once this has been completed, you may want to type up your rule set into something presentable because most management types or clients do not like to see scribbled notes as part of your final documentation.

Let us see how this would work based on our current rule set. We will assume, for the sake of simplicity, that the hosts on our network have the following IP addresses in the DMZ:

- DNS: 200.1.1.10
- SMTP: 200.1.1.20
- POP3: 200.1.1.30
- HTTP: 200.1.1.40
- HTTP/FTP Proxy: 200.1.1.50
- FTP: 200.1.1.60
- LAN network is 200.1.2.0/24

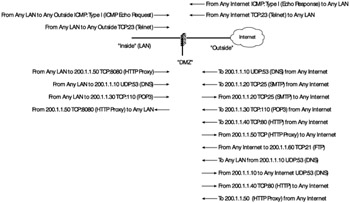

Using our notepaper, we can easily diagram the allowed traffic:

- Allow DNS, UDP destination port 53, from "outside" to "DMZ" 200.1.1.10. Outside to DMZ tells us that traffic from the Internet is allowed in to the server.
- Allow SMTP, TCP destination port 25, from "outside" to "DMZ" 200.1.1.20. This rule allows the receipt of e-mail by our mail server.
- Allow SMTP, TCP destination port 25, to "outside" from "DMZ" 200.1.1.20. This allows the sending of e-mail from our mail server.
- Allow POP3, TCP destination port 110, from "outside" to "DMZ" 200.1.1.30. This rule allows remote user clients to check their e-mail on our POP3 server.
- Allow HTTP, TCP destination port 80, from "outside" to "DMZ" 200.1.1.40. This rule allows Internet hosts to access our Web server.
- Allow HTTP traffic from the "inside" network to "DMZ" 200.1.1.50, TCP destination port 8080 (our proxy server port number). This allows clients from the LAN to connect to the proxy.
- Allow HTTP traffic from the proxy on "DMZ" 200.1.1.50 to access the Internet. Because Web sites can theoretically run on any number of ports, we will only restrict this traffic according to IP addresses instead of ports.
- Allow FTP, TCP destination port 21, from "outside" network to "DMZ" 200.1.1.60.
- Allow Telnet traffic, TCP destination port 23, from "inside" network to the "outside."
- Allow ICMP traffic, IP protocol type 1, from "inside" network to the "outside."

Just following our rule set gives us a notepaper that looks like the diagram in Exhibit 6. If we were to implement this rule set, however, we would find that our functionality is severely limited because there is much that must also be considered for even these simple rules. Consider the simple scenario where a user on the inside tries to create a Telnet session to telnet.testservers.com. First, the client must perform a domain lookup. Assuming that we also have a split DNS, the client PC will query the LAN DNS server, which will forward the packets to the DMZ DNS server when it fails to provide a local name resolution. But our current rules will not allow this. We need to allow DNS traffic from the "inside" to the DMZ and then allow the return traffic. So we add two more lines to our diagram:

- Allow DNS queries, destination port 53, from the "inside" network to the "DMZ" DNS server at 200.1.1.10.
- Allow DNS reply traffic, source port 53, from the "DMZ" DNS server 200.1.1.10 to the "inside" network.

Exhibit 6: Initial Notes for Firewall Rules

We can pause at this point and discuss several items that our rule set has also suggested.

8.4.1 Firewalls Define Themselves According to Their Interfaces

Our firewall has three interfaces, each labeled as "inside," "outside," or "DMZ." Any rule on the router simply tells the router/firewall if it is allowed to forward traffic to a specific port or drop the traffic that is not allowed. Firewall filtering rules should always be defined from the point of view of the firewall. If you have trouble visualizing packets entering and leaving the router, imagine that you are sitting at the interface of the router with a checklist that represents your rule set. In a sense, you are the door guard of the router. You are checking packets as they enter the interfaces and, from your point of view, "in" and "out" are from the interface itself.
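The notepaper view can be sketched in a few lines of Python. This is only an illustration, not a router configuration: the `Flow` type and the `permitted` function are hypothetical names, and the three entries are taken from the notes above. Each entry is written from the firewall's own position, naming the interface a packet arrives on and the interface it would leave by.

```python
from typing import NamedTuple

class Flow(NamedTuple):
    """One notepaper entry, written from the firewall's point of view."""
    in_iface: str    # interface the packet arrives on
    out_iface: str   # interface the packet would leave by
    proto: str
    dst_ip: str
    dst_port: int

# A few entries from the notes (illustrative subset only).
ALLOWED = {
    Flow("outside", "dmz", "udp", "200.1.1.10", 53),    # DNS
    Flow("outside", "dmz", "tcp", "200.1.1.40", 80),    # HTTP
    Flow("inside", "dmz", "tcp", "200.1.1.50", 8080),   # proxy
}

def permitted(flow: Flow) -> bool:
    """The door guard's checklist: forward only what is on the list."""
    return flow in ALLOWED

print(permitted(Flow("outside", "dmz", "tcp", "200.1.1.40", 80)))
print(permitted(Flow("outside", "inside", "tcp", "200.1.2.5", 80)))
```

The second lookup fails because nothing on the list describes traffic arriving on "outside" and leaving by "inside"; the firewall has no other notion of what the packet is for.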

8.4.2 All Traffic Must Be Explicitly Defined

Virtually any field that is available for reading in either a network or transport layer packet header defines traffic. Common filtering fields include source and destination IP addresses, IP protocol values, IP fragmentation, IP packet length, source ports, destination ports, and TCP option bits. To properly filter traffic, this information needs to be defined. Note that from our above rules, not all of the listed fields must be defined, only those that are relevant to the filtering process. Depending on the protocol, the most difficult element of creating firewall rules is simply ensuring that all of the protocols that work together to enable an application are properly defined. Some firewall products have sophisticated default rule sets that allow you to simply say, "Allow all traffic associated with SIP, the protocol commonly used for VoIP." [3] In this case, the firewall vendor has predefined the associated protocols and port values — a great timesaver and aid in reducing security-affecting misconfigurations. Someone with experience can easily create these rules manually; but if you do not have experienced firewall staff on hand, they are a convenient resource.

8.4.3 Return Traffic May Not Be Automatically Assumed by Firewall Rules

It only makes sense that if you want to allow users to access HTTP sites on the Internet, then the return traffic, the packets with the actual text and graphics that show up in the users' Web browser, should be allowed back into the network. Firewalls, by design, are very conservative and do not necessarily allow this return traffic. Therefore, instead of simply stating, "Allow clients to access HTTP sites on the Internet," you need to explicitly state, "Allow traffic from clients to access HTTP sites, and allow the return traffic from HTTP sites to return to the clients." Just as with Rule 8.4.2, however, there are a number of firewall products that will automatically configure these rules for you through the use of preconfigured rule sets. Regardless of the user interface, however, the implementation on the firewall needs to explicitly allow this traffic.
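A minimal sketch of why return traffic needs its own rule, using the proxy on port 8080 as the example. The `Rule` and `Packet` types are hypothetical, and the client port 40000 is an arbitrary value chosen for illustration. A rule written for the request direction simply does not describe the reply, which travels the other way with the ports swapped.

```python
from typing import NamedTuple, Optional

class Rule(NamedTuple):
    src_zone: str
    dst_zone: str
    proto: str
    src_port: Optional[int]   # None means "any port"
    dst_port: Optional[int]

class Packet(NamedTuple):
    src_zone: str
    dst_zone: str
    proto: str
    src_port: int
    dst_port: int

def matches(rule: Rule, pkt: Packet) -> bool:
    return (rule.src_zone == pkt.src_zone
            and rule.dst_zone == pkt.dst_zone
            and rule.proto == pkt.proto
            and rule.src_port in (None, pkt.src_port)
            and rule.dst_port in (None, pkt.dst_port))

def permitted(rules, pkt: Packet) -> bool:
    return any(matches(r, pkt) for r in rules)

# Request direction: LAN client to the proxy on TCP port 8080.
request_rule = Rule("inside", "dmz", "tcp", None, 8080)
# Return direction: proxy back to the client, source port 8080.
reply_rule = Rule("dmz", "inside", "tcp", 8080, None)

request = Packet("inside", "dmz", "tcp", 40000, 8080)
reply = Packet("dmz", "inside", "tcp", 8080, 40000)

print(permitted([request_rule], request))             # request is covered
print(permitted([request_rule], reply))               # reply is not
print(permitted([request_rule, reply_rule], reply))   # reply needs its own rule
```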

There are more rules, but right now let us just apply these three to our existing firewall notes. Thus far, we have included DNS, but there are other rules that need to be included to make our network operate as we would expect.

- Allow our "DMZ" DNS server at 200.1.1.10 to make queries to other DNS servers on the Internet. These packets will have a destination UDP port of 53.
- Allow LAN clients to access the POP3 server as well; TCP destination port 110 from "inside" to "DMZ" 200.1.1.30.
- Allow HTTP traffic, TCP source port 80, from "DMZ" 200.1.1.40 to "outside." This allows HTTP traffic from our Web server to return to Internet hosts that request content.
- Allow HTTP proxy traffic to return to the LAN by allowing "DMZ" 200.1.1.50 to send packets with a TCP source port of 8080 to "inside."
- Allow Telnet traffic from the Internet to return to the "inside" hosts by allowing traffic with a TCP source port 23 to return to the "inside" network.
- Allow ICMP traffic to return to the "inside" network by allowing the IP protocol 1 from the "outside" network to the "inside" network.

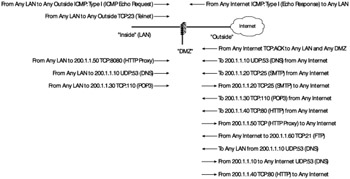

By the time we have included all these new rules, our firewall notepaper looks like the diagram in Exhibit 7. As we can see, manually defining return traffic for all our services is complicated and prone to error. In fact, it is so complicated and prone to error that we are going to look for another way to complete the same task in a much simpler fashion. Let us first state our goal so that we can then redefine it. Our intent is to make our network secure by only allowing traffic that is defined by our security policy. This includes limiting inbound and outbound traffic to specified ports and networks.

Exhibit 7: Return Traffic and Essential Protocols Added to Firewall Notes

But our rules are not perfect. While we want to allow return traffic, we really only want to allow return traffic that is part of an existing connection. Right? In other words, the rule above, "Allow Telnet traffic from the Internet to return to the "inside" hosts by allowing traffic with a TCP source port 23 to return to the "inside" network," does not really do just that if we closely examine its operation. This rule allows any packet with a TCP source port of 23 access to our internal network when we really only want packets associated with an established user Telnet session. So how can we control this traffic and make the configuration of our access rules simpler and therefore more secure? Here is a hint: refer back to the TCP materials and take a look at the TCP header. Are there any options in the header that would help us filter based on only established connections?

There is! We see from the TCP three-way handshake that the first three packets of a TCP connection are used for setting up a TCP session. The client sends a TCP packet to the appropriate IP address and port with the SYN (synchronize) bit set, and the server responds back with both the SYN and ACK (acknowledgement) bits set. Finally, the remote client sends a final ACK to acknowledge the receipt of the server's packet and the transfer of data begins. During the actual transfer of data, we may see bits such as the ACK, RST (reset), URG (urgent), PSH (push), and FIN (finish) bits set, but we never see the SYN bit set again. In fact, the only time we should see a SYN bit alone in a packet is during the initial setup of a session.

Voila! We now have a way to filter based upon the state of the connection. We can simply tell our router/firewall that any packet from the "outside" destined for the "inside" or the "DMZ" that does not carry a lone SYN bit in its TCP header (in practice, a packet with the ACK or RST bit set) should be assumed to be part of an established connection and allowed back in. This can be done for all our services with a single statement:

- Allow packets that are part of an established connection from "outside" to "inside" and "DMZ."

Notice the change this single rule has on our firewall notes, shown in Exhibit 8.

Exhibit 8: Allowing Return Traffic Looking for ACK Bits

While this single line serves to simplify our firewall rules slightly, there are a number of problems with it as it stands. We will discuss these short-comings and offer solutions. For now, we want to continue with the basic firewall configuration.
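The flag test behind the "established" rule can be sketched as follows. This is a sketch under an assumption: it treats a segment as return traffic when the ACK or RST bit is set, which admits the server's SYN+ACK reply while rejecting a lone SYN. The function name `looks_established` is hypothetical; the bit values are those of the TCP header flags field.

```python
# TCP header flag bits.
FIN, SYN, RST, PSH, ACK, URG = 0x01, 0x02, 0x04, 0x08, 0x10, 0x20

def looks_established(flags: int) -> bool:
    """Stateless check: ACK or RST set means 'assume an existing session'.

    The filter keeps no record of sessions; it trusts the header bits.
    """
    return bool(flags & (ACK | RST))

print(looks_established(SYN))         # new connection attempt
print(looks_established(SYN | ACK))   # second step of the handshake
print(looks_established(ACK | PSH))   # data segment mid-session
```

Note that the check looks only at the bits in the packet in front of it. Anyone can build a packet with the ACK bit set, which is one of the shortcomings of this rule as it stands.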

There is one more troublesome entry in our firewall that we must examine. As it stands, we are allowing all ICMP traffic in both directions between the Internet and our LAN. Presumably, our security policy specified that internal hosts be allowed to use ICMP traffic as it assists in error reporting and troubleshooting. Programs such as PING and traceroute make extensive use of ICMP, and other error messages such as "host not found," "network not found," and "Time to live expired" are all essential to the normal operation of our host computers.

At the same time, ICMP can be used for nefarious purposes. The clearest example of this is to use ICMP echoes, the types of packets used by the PING program, to map a network. As our rule stands, although we have a router firewall with a DMZ and fairly strict rules in place, an attacker from the Internet could use echo packets to map our entire LAN network and learn the number of hosts and subnets on the network.

These same ICMP packets could be used to try to crash our host computers through the use of illegal options. Although this technique is becoming rarer as protocol stacks become tighter, it is good policy to also guard against unknown vulnerabilities. The best way to do that is to only use the functions that are essential to your business operations.

A more realistic rule is to examine ICMP and note those packets that are most likely to be used in normal network operations. If one of your users used PING and sent an ICMP "echo" packet to troubleshoot a network connection, you would reasonably expect an ICMP "echo-reply" in return. So echo-reply is an ICMP packet we would want to allow back into the network. On the other hand, if someone were to map your network using PING, [4] he or she would be sending ICMP "echo" packets to your network. These inbound packets from the "outside" would be something that would not be allowed.

Another ICMP packet type that is commonly seen coming back to our network is the ICMP "destination unreachable." Unlike echo traffic, destination unreachable packets are returned during normal IP traffic operation. A user might send a normal TCP connection request to a remote network, only to find that they have entered the address incorrectly or the host is no longer available. In this case, allowing ICMP destination unreachable traffic back into your network would allow users to more quickly realize that there is a problem with the connection instead of sitting around for a couple of minutes waiting for the connection to timeout because the router/firewall is discarding the error messages. We might then consider allowing ICMP "destination unreachable" packets back into our network as well.

Based on our understanding of ICMP operation, we are ready to modify our firewall notes a bit more. Instead of blindly allowing ICMP traffic in and out of our network, we allow only certain useful ICMP traffic. In this case, we allow all ICMP traffic out of our network, and allow only two types back in — namely, echo-replies and destination unreachable messages. Our rule set is modified to reflect these changes:

- Allow all ICMP traffic, IP protocol 1, from the "inside" network to the "outside."
- Allow all ICMP traffic, IP protocol 1, from the "DMZ" network to the "outside."
- Allow only ICMP "echo-reply" and "destination unreachable" traffic from the "outside" to the "inside" network.
- Allow only ICMP "echo-reply" and "destination unreachable" traffic from the "outside" to the "DMZ."
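The four ICMP rules above can be sketched as a single decision function. The function name `icmp_permitted` and the zone strings are hypothetical; the type numbers are the standard ICMP values for echo-reply (0), destination unreachable (3), and echo (8).

```python
ICMP_ECHO_REPLY = 0
ICMP_DEST_UNREACHABLE = 3
ICMP_ECHO = 8

# Only these types are allowed back in from the Internet.
INBOUND_ALLOWED_TYPES = {ICMP_ECHO_REPLY, ICMP_DEST_UNREACHABLE}

def icmp_permitted(src_zone: str, dst_zone: str, icmp_type: int) -> bool:
    # All ICMP is allowed out from "inside" and "dmz".
    if src_zone in ("inside", "dmz") and dst_zone == "outside":
        return True
    # Inbound from "outside": only the two useful reply types.
    if src_zone == "outside" and dst_zone in ("inside", "dmz"):
        return icmp_type in INBOUND_ALLOWED_TYPES
    # Anything else is not covered by these rules.
    return False

print(icmp_permitted("inside", "outside", ICMP_ECHO))        # our user pings out
print(icmp_permitted("outside", "inside", ICMP_ECHO_REPLY))  # the answer returns
print(icmp_permitted("outside", "inside", ICMP_ECHO))        # outsider maps the LAN
```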

Note that separate rules were required for the DMZ and inside networks, as they represent different interfaces on the router/firewall. For now, we are keeping them logically distinct on our firewall diagram to ensure that they are properly implemented. When actually writing the rules, we will look for a chance to combine the inside and DMZ networks to shorten our overall rule set and increase efficiency.

Note also that we have made a conscious decision to allow all ICMP traffic from the inside network to the rest of the world. This is something that should be carefully considered as you write your security policy and implement the rules into your firewall. Many organizations will rightfully control outbound traffic as much as they would control inbound traffic. Others will allow most types of outbound traffic and tightly control only inbound traffic.

There are pros and cons to each decision. When you control outbound traffic, you control what applications users can access on the Internet. This is certainly useful, given the number of productivity-wasting sites that can be found out there. If users are using chat programs, the programs can be effectively blocked by only allowing applications with destination ports that match commonly used legitimate services such as HTTP (TCP port 80), FTP (TCP port 21), Telnet (TCP port 23), DNS (UDP port 53), etc.

When you control outbound traffic, you may also be reducing the risk of Trojanized programs or worms on your network. Many of these programs are configured to "phone home" periodically. The intent is that because many organizations do not restrict outbound traffic, firewall rules can effectively be circumvented if the program on the inside of the network initiates the connection to the attacker's location. Because return traffic that is part of an existing connection is typically only lightly filtered, if at all, this is a very effective technique.

Controlling outbound traffic also helps your organization behave like a good Internet citizen. By carefully controlling what traffic your network produces, you reduce the risk that someone or a program is going to use your network as a launching ground for attacks on other Internet sites. Not only is this good Internet etiquette, but it may also reduce your legal liability for such attacks.

Controlling outbound traffic is not without its drawbacks. The first is that it may increase the overhead and maintenance on the part of your IT and information security staff. Invariably, someone will discover a program or application that is a "must-have" and require that the firewall be reconfigured to allow this traffic inbound and outbound. Of course, proper planning for the security policy would hopefully eliminate these types of situations.

It may happen, however, that legitimate services are being run on alternate ports. There is no Internet law that states that Web servers need to operate on port 80. It is just a common convenience. Because most people access Web sites through links and not by entering in the full URL, it is a simple matter to redirect users to alternate ports without their knowledge. From the user's point of view, legitimate resources are not available. From the network security staff's point of view, because services can be run on any port, the additional overhead to open outbound ports here and there turns into a situation where you might just as well open them all.

Others argue correctly that most Trojan makers understand that certain ports will almost always be open to outbound traffic. It is a pretty good bet that a company that filters outbound traffic will allow outbound TCP port 80 through the firewall because this is popular for Web servers. Just as there is no law stating that you must run your Web server on port 80, there is no law stating that a service running on port 80 must be a Web server. Many companies that surreptitiously install spyware on computers make use of this fact to allow their software to phone home to a data collection center through home and corporate firewalls. Other gray-area programs such as chat programs and peer-to-peer file sharing programs can also be configured to send traffic on port 80 to allow their usage in a firewall environment. It would seem, then, that from one point of view, the effort to control out-bound Internet traffic only adds complexity to the firewall configuration and provides little real benefit because of the ease with which IP services are reconfigured.

Another point of view, however, understands that controlling outbound traffic will have only a limited effect on network security, but limited effect is what network security is all about. If we were to wait until the "magic bullet" of network security is invented that solved every conceivable problem before implementing our own security policy, then we would be waiting a long time. Information security, and the firewall itself, is about the combination of countermeasures, each one incrementally reducing the risk on our networks. If controlling outbound traffic is a limited protection, and can be provided with the same hardware and administration that we are already paying for to control inbound traffic, then it seems logical to go through the effort to deploy it as part of our security policy implementation.

At this point we have the basis for our firewall implementation included on our notepaper. We are ready to start the configuration. Before we do, however, there are two essential rules of firewalls that we must first discuss. The first is, no matter the vendor, firewall rules are applied to packets in the order in which they are written. That means that as a packet is checked against a firewall rule set, Rule 1 is checked first. If the packet matches that rule, then it is processed according to the rule. If the packet does not match Rule 1, it is checked against Rule 2, and so on. The implication of this is that you can sabotage your rule set if you do not ensure that your rules are applied to the firewall in the proper order. Let us examine a simple example. Consider the two rules below:

- Allow any traffic to our HTTP server at 200.1.1.40 when it has the TCP destination port 80.
- Deny any traffic that has a source IP address from the Internet that is part of the private address ranges of 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16.

If applied in this order, we can see that a packet with an invalid source IP address but with a destination to our HTTP server on the proper port will be allowed through the firewall. This is certainly not our intent. Instead, the rules should be applied in the following order:

- Deny any traffic that has a source IP address from the Internet that is part of the private address ranges of 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16.
- Allow any traffic to our HTTP server at 200.1.1.40 when it has the TCP destination port 80.

Now the proper security will be enforced.
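The effect of rule order can be demonstrated with a small first-match evaluator. This is an illustrative sketch: the function names are hypothetical, packets are plain dictionaries, and the Web server address 200.1.1.40 is taken from the DMZ address list. The same spoofed packet is checked against both orderings.

```python
import ipaddress

PRIVATE = [ipaddress.ip_network(n)
           for n in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")]

def has_private_source(pkt) -> bool:
    src = ipaddress.ip_address(pkt["src"])
    return any(src in net for net in PRIVATE)

def is_http_to_server(pkt) -> bool:
    return (pkt["dst"] == "200.1.1.40"
            and pkt["proto"] == "tcp"
            and pkt["dport"] == 80)

allow_http = ("permit", is_http_to_server)
deny_private = ("deny", has_private_source)

def first_match(rules, pkt) -> str:
    """Check rules top to bottom; the first rule that matches decides."""
    for action, predicate in rules:
        if predicate(pkt):
            return action
    return "deny"  # nothing matched; treated as a drop in this sketch

spoofed = {"src": "10.1.1.1", "dst": "200.1.1.40", "proto": "tcp", "dport": 80}

print(first_match([allow_http, deny_private], spoofed))  # wrong order
print(first_match([deny_private, allow_http], spoofed))  # intended order
```

In the first ordering the spoofed packet is permitted because the HTTP rule matches before the private-address check is ever reached.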

Because we know that packets will be checked against our rule set, it is also good practice to place the most commonly matched rules toward the top of the rule set. There is no sense in making a router/firewall check through 50 rules for 80 percent of packets. The difficult matter to resolve is how to implement the most commonly used rules with the checking of rule sets in order. In summary, you cannot always make it work. Consider our modified rule set from the preceding paragraph. Unless we are the victims of some serious troublemakers from the Internet, HTTP traffic will easily match more often than spoofed source packets. From our previous discussion, we see that we cannot easily place the rule to allow HTTP traffic prior to the rule that prohibits private addresses.

You may have a question as to which traffic is going to match particular firewall rules the most. Many times, this can be guesstimated through knowledge of your networking environment. If the majority of your Internet-bound traffic originates as user traffic, then it makes sense that the rule that matches established sessions is going to be the most commonly matched rule. If you host your own Web server and that server sees very high volumes of traffic, then the rule matching traffic to your Web server is going to be the rule with the most matches.

Many times, you can tune a firewall by examining the logs. Most firewall implementations have a logging option that will display the total matches for each rule in the firewall rule set. Simply configure the rule set in the manner that makes the most sense to you at the time, and then tune it after some period of "normal" network activity. Worrying that one rule getting 500 more hits is checked after another rule is not really worth the effort, but the general concept is simply to place commonly matched rules at the top of the list as long as overall security is not affected.
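The tuning idea can be sketched by counting which rule each packet matches first. Everything here is invented for illustration: the rule names, the crude predicates, and the sample traffic mix. On a real device the counts would come from the firewall's own logging rather than from code like this.

```python
from collections import Counter

# Hypothetical rules in their initial order: (name, predicate).
RULES = [
    # Crude stand-in for a private-source check, for brevity only.
    ("deny-private-source", lambda p: p["src"].startswith("10.")),
    ("permit-http-in", lambda p: p["dport"] == 80),
    ("permit-established", lambda p: p["established"]),
]

def count_hits(rules, packets) -> Counter:
    hits = Counter()
    for pkt in packets:
        for name, predicate in rules:
            if predicate(pkt):
                hits[name] += 1
                break  # first match wins, later rules are not checked
    return hits

# Invented sample: mostly return traffic for user sessions.
SAMPLE = (
    [{"src": "198.51.100.7", "dport": 40000, "established": True}] * 8
    + [{"src": "203.0.113.9", "dport": 80, "established": False}] * 3
    + [{"src": "10.1.1.1", "dport": 80, "established": False}]
)

hits = count_hits(RULES, SAMPLE)
for name, n in hits.most_common():
    print(name, n)
```

With this sample the established-session rule is matched most often yet sits last in the list, so it is a candidate to move up, provided that doing so does not let it match ahead of a deny rule that must come first.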

The second essential rule of firewall operation is the implicit "deny all" at the end of every filter list. This means that any traffic that is not explicitly permitted in the preceding filter statements is automatically denied. Many times, knowledge of this rule is assumed and the "deny all" statement does not actually appear in the rule set, but it is there.

In academic settings, the origin of the "deny all" rule is linked to the gradual evolution of firewall and information security philosophies. Some of the earliest security philosophies were defined as "permissive." That is another way of saying that only traffic that was explicitly denied would be blocked. All other traffic would be permitted. In practice, this shows up as an implementation rule that an application like Instant Messaging would be blocked to prevent lost worker productivity and all other traffic would be allowed. We know that there are 65,536 TCP ports that can run applications. Blocking a single port could easily be circumvented by changing the server port on which the forbidden application was running.

In a very short time, it became clear that a restrictive security policy was the only real way to provide network security. All traffic would be blocked in each direction unless it was explicitly allowed. This should be your only option for configuring filters.
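The difference between the two philosophies can be sketched in a few lines. The blocked port 5190 is a hypothetical instant-messaging port chosen for illustration, as is the alternate port 8000; the allowed ports are the services named earlier in this section.

```python
BLOCKED_PORTS = {5190}             # permissive: hypothetical IM port to block
ALLOWED_PORTS = {21, 23, 53, 80}   # restrictive: FTP, Telnet, DNS, HTTP

def permissive_allows(dst_port: int) -> bool:
    """Permissive policy: everything passes unless explicitly denied."""
    return dst_port not in BLOCKED_PORTS

def restrictive_allows(dst_port: int) -> bool:
    """Restrictive policy: nothing passes unless explicitly allowed."""
    return dst_port in ALLOWED_PORTS

# The forbidden application moves from port 5190 to port 8000.
print(permissive_allows(5190))    # blocked on its original port
print(permissive_allows(8000))    # slips through on the new port
print(restrictive_allows(8000))   # still blocked by the implicit "deny all"
```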

Now that our simple rule set has been established, we can put it into action. We will first want to include our filters for packets that should just never show up on our network.

- Deny any packet from the "outside" with a source that matches our "inside" network.
- Deny any packet from the "outside" with a source IP address in the private range of 10.0.0.0/8.
- Deny any packet from the "outside" with a source IP address in the private range of 172.16.0.0/12.
- Deny any packet from the "outside" with a source IP address in the private range of 192.168.0.0/16.
- Deny any packet from the "outside" with a source IP address of 0.0.0.0/32 (an all 0s source address).
- Deny any packet from the "outside" with a source IP address of 255.255.255.255 (an all 1s broadcast address).
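These six deny rules amount to a source-address check on packets arriving from the "outside." A minimal sketch using Python's standard `ipaddress` module follows; the function name `is_invalid_source` is hypothetical, and the "inside" network is assumed to be the LAN range 200.1.2.0/24 given earlier.

```python
import ipaddress

# Source ranges that should never appear on the "outside" interface.
INVALID_SOURCES = [ipaddress.ip_network(n) for n in (
    "200.1.2.0/24",        # our own "inside" network (assumed LAN range)
    "10.0.0.0/8",          # private
    "172.16.0.0/12",       # private
    "192.168.0.0/16",      # private
    "0.0.0.0/32",          # all 0s source address
    "255.255.255.255/32",  # all 1s broadcast address
)]

def is_invalid_source(src: str) -> bool:
    """True if a packet from 'outside' carries a source we must drop."""
    addr = ipaddress.ip_address(src)
    return any(addr in net for net in INVALID_SOURCES)

print(is_invalid_source("200.1.2.7"))     # claims to be one of our LAN hosts
print(is_invalid_source("172.31.255.1"))  # inside 172.16.0.0/12
print(is_invalid_source("172.32.0.1"))    # just outside that range
```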

Our risk assessment has convinced us that, for our needs, maintaining a "bogon" filter, which is dozens of separate entries in our filter list for unallocated source networks, is going to cost more in management than it will provide in security. Thus, we will forego the configuration of a "bogon" filter list.

We now add the rules that permit our network traffic.

-

Allow packets that are part of an established connection from "outside" to "inside" and "DMZ."

-

Allow DNS, UDP destination port 53, from "outside" to "DMZ" 200.1.1.10.

-

Allow DNS, UDP destination port 53, from "inside" to "DMZ" 200.1.1.10.

-

Allow DNS, UDP source port 53, from "DMZ" 200.1.1.10 to "inside." Allow the DMZ DNS server to respond to queries by the "inside" hosts.

-

Allow DNS, UDP source port 53, from "outside" to "DMZ" DNS server. This rule allows query responses to be returned to the DMZ DNS server from Internet DNS servers.

-

Allow SMTP, TCP destination port 25, from "outside" to "DMZ" 200.1.1.20. This rule allows the receipt of e-mail by our mail server.

-

Allow SMTP, TCP destination port 25, to "outside" from "DMZ" 200.1.1.20. This allows the sending of e-mail from our mail server.

-

Allow POP3, TCP destination port 110, from "outside" to "DMZ" 200.1.1.30. This rule allows remote user clients to check their e-mail on our POP3 server.

-

Allow HTTP, TCP destination port 80, from "outside" to "DMZ" 200.1.1.40. This rule allows Internet hosts to access our web server.

-

Allow HTTP traffic from the "inside" network to "DMZ" 200.1.1.50, TCP destination port 8080 (our proxy server port number). This rule allows clients on the LAN to connect to the proxy.

-

Allow HTTP traffic from the proxy on "DMZ" 200.1.1.50 to access the Internet. Because Web sites can theoretically be run on any number of ports, we will only restrict this traffic according to IP addresses instead of ports.

-

Allow Telnet traffic, TCP destination port 23, from "inside" network to the "outside."

-

Allow ICMP echo packets from the "inside" network to the "outside."

-

Allow ICMP echo packets from the "DMZ" network to the "outside."
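Before translating the permit rules above into router syntax, it can help to model them as data and check a few sample packets against them. The Python sketch below is such a model under stated assumptions: the `Packet` and `Rule` classes, the zone names, and the `established` flag (standing in for "part of an established connection") are inventions for this illustration, ICMP echo is simplified to a protocol label, and the proxy's outbound traffic is assumed to be TCP. Any packet that matches no rule falls through to the implicit "deny all."

```python
from dataclasses import dataclass, asdict
from typing import Optional


@dataclass(frozen=True)
class Packet:
    src_zone: str
    dst_zone: str
    proto: str
    src_ip: str = ""
    dst_ip: str = ""
    src_port: int = 0
    dst_port: int = 0
    established: bool = False  # simplified stand-in for an existing session


@dataclass(frozen=True)
class Rule:
    # None means "any"; every other field must equal the packet's value.
    src_zone: Optional[str] = None
    dst_zone: Optional[str] = None
    proto: Optional[str] = None
    src_ip: Optional[str] = None
    dst_ip: Optional[str] = None
    src_port: Optional[int] = None
    dst_port: Optional[int] = None
    established: Optional[bool] = None


def matches(rule, pkt):
    return all(getattr(pkt, k) == v
               for k, v in asdict(rule).items() if v is not None)


DNS, MAIL, POP, WEB, PROXY = ("200.1.1.10", "200.1.1.20", "200.1.1.30",
                              "200.1.1.40", "200.1.1.50")

PERMIT_RULES = [
    Rule(src_zone="outside", proto="tcp", established=True),
    Rule(src_zone="outside", dst_zone="dmz", proto="udp", dst_ip=DNS, dst_port=53),
    Rule(src_zone="inside", dst_zone="dmz", proto="udp", dst_ip=DNS, dst_port=53),
    Rule(src_zone="dmz", dst_zone="inside", proto="udp", src_ip=DNS, src_port=53),
    Rule(src_zone="outside", dst_zone="dmz", proto="udp", dst_ip=DNS, src_port=53),
    Rule(src_zone="outside", dst_zone="dmz", proto="tcp", dst_ip=MAIL, dst_port=25),
    Rule(src_zone="dmz", dst_zone="outside", proto="tcp", src_ip=MAIL, dst_port=25),
    Rule(src_zone="outside", dst_zone="dmz", proto="tcp", dst_ip=POP, dst_port=110),
    Rule(src_zone="outside", dst_zone="dmz", proto="tcp", dst_ip=WEB, dst_port=80),
    Rule(src_zone="inside", dst_zone="dmz", proto="tcp", dst_ip=PROXY, dst_port=8080),
    Rule(src_zone="dmz", dst_zone="outside", proto="tcp", src_ip=PROXY),
    Rule(src_zone="inside", dst_zone="outside", proto="tcp", dst_port=23),
    Rule(src_zone="inside", dst_zone="outside", proto="icmp-echo"),
    Rule(src_zone="dmz", dst_zone="outside", proto="icmp-echo"),
]


def permitted(pkt):
    """Permit on any matching rule; otherwise the implicit deny applies."""
    return any(matches(rule, pkt) for rule in PERMIT_RULES)


if __name__ == "__main__":
    web = Packet("outside", "dmz", "tcp", dst_ip=WEB, dst_port=80)
    direct = Packet("inside", "outside", "tcp", dst_port=80)
    print("web request to DMZ:", permitted(web))
    print("LAN client bypassing proxy:", permitted(direct))
```

A model like this makes gaps visible early. For example, a LAN client that tries to reach an Internet web server directly on port 80 is denied, which is the intended effect of forcing web traffic through the proxy.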

8.4.4 Testing Our Filtering

Now that the router/firewall has been configured, the process of auditing our work needs to occur. Initially, this work can be done by ourselves as the network administrator. At some point we will want to have another party audit our network. We humans are funny in that we often allow ourselves to look with rose-tinted glasses at our own work. We do our best the best way we know how. Naturally, our own auditing of our rules will match our own expectations. As a friend of mine is prone to saying, "We don't know what we don't know."

To overcome this shortcoming, it is essential to have another party examine your work. Creating a bullet-proof packet filter is very difficult to accomplish the first time through, and there is no reason to fear someone else pointing out what you have done wrong. When configuring network security, you should err on the side of being correct in your configuration over being proud.

At this stage, auditing will take two different forms. The first is ensuring that user applications are operating correctly. Ideally, you are applying your firewall rules at night or on the weekend and you have a chance to test the most commonly used applications on your firewall. If you do not do your testing either prior to implementation or during an off-peak period, you will find that users are very helpful in pointing out your misconfiguration through a continual stream of calls, pages, and frenzied visits to your office. You will, of course, share their excitement and then risk further misconfiguration in an attempt to appease them. Test your rule set before imposing it on the users trying to do their jobs.

Common problems in this area are generally related to an incomplete understanding of the protocols that users employ on a daily basis. Even simple protocols such as FTP use a combination of ports for normal operation and have multiple "default" configurations, depending on the client software in operation. Multimedia and voice applications are even less forgiving, in that entire ranges of ports need to be configured for proper operation. Many tunneling protocols used by VPNs have different ports used for session management and data transfer.

Many times, return traffic is not sufficiently accounted for. Traffic is allowed out of the network and not back in. If you have a complicated subnetting scheme due to remote branch offices, make sure that when you define the "inside" network, you are including the remote IP subnets in your definition of "inside." Otherwise, your local LAN will operate correctly with regard to user traffic, but applications on the remote LAN will not.
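The branch office pitfall can be checked mechanically before the filter is deployed. The Python sketch below compares two definitions of "inside" against a list of hosts that are supposed to be covered. All prefixes and host addresses here are hypothetical placeholders chosen for the example.

```python
import ipaddress

# Hypothetical addressing plan; substitute your own prefixes.
LOCAL_LAN = ipaddress.ip_network("200.1.2.0/24")
BRANCH_LANS = [
    ipaddress.ip_network("200.1.3.0/24"),
    ipaddress.ip_network("200.1.4.0/24"),
]


def is_inside(ip, inside_nets):
    """Return True if the address belongs to any prefix defined as "inside"."""
    addr = ipaddress.ip_address(ip)
    return any(addr in net for net in inside_nets)


def uncovered_hosts(hosts, inside_nets):
    """List the hosts that the "inside" definition fails to include."""
    return [h for h in hosts if not is_inside(h, inside_nets)]


if __name__ == "__main__":
    hosts = ["200.1.2.25", "200.1.3.40", "200.1.4.7"]
    print("local LAN only:", uncovered_hosts(hosts, [LOCAL_LAN]))
    print("with branches: ", uncovered_hosts(hosts, [LOCAL_LAN] + BRANCH_LANS))
```

With only the local LAN in the definition, both branch hosts are reported as uncovered, which corresponds to the symptom described above: local users work normally while remote users do not.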

The other type of auditing is sometimes called "penetration testing" or "ethical hacking." This is testing your own defenses against threats by attacking your own network. There are a number of tools available on the Internet to perform these attacks, so I will just outline the general concept here and provide more details in Chapter 12, "Network Penetration Testing."

As you deploy your perimeter security to match your security policy, you should constantly monitor your progress. A computer that is isolated from your network should be used to perform a number of attacks and scans on your network. A laptop is ideal for this purpose because you can scan from a number of locations. You can download tools separately and run each one against your network, or you can utilize the free programs Nmap and Nessus. In combination, Nmap will scan your network and firewall looking for open ports and servers listening through the firewall. Nessus will then compare your services and firewall against a fairly up-to-date attack database and catalog your network services, vulnerabilities, and suggested fixes. While it is not a good idea to rely on a single tool, or a pair of tools, for network auditing, these two tools in combination are a good way to fairly quickly gauge your progress and check for any glaring errors. [5]
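To make the idea of a port scan concrete, the following Python sketch performs a basic TCP connect check and compares the result with the ports the rule set is expected to expose. It is a rough illustration of the concept only, not a substitute for Nmap or Nessus, and should be run only against hosts you are authorized to test. The function names, the timeout value, and the example address and ports are assumptions made for this sketch.

```python
import socket


def open_tcp_ports(host, ports, timeout=0.5):
    """Return the ports on host that accept a TCP connection."""
    found = []
    for port in ports:
        try:
            # A completed three-way handshake means something is listening
            # and the filter let the connection through.
            with socket.create_connection((host, port), timeout=timeout):
                found.append(port)
        except OSError:
            # Refused, filtered, or timed out: treat as not reachable.
            pass
    return found


def unexpected_ports(found, expected):
    """Ports that answered but are not supposed to be reachable."""
    return sorted(set(found) - set(expected))


if __name__ == "__main__":
    # Example: the web server should answer on port 80 only.
    target = "200.1.1.40"
    reachable = open_tcp_ports(target, [23, 25, 80, 110, 8080])
    print("reachable:", reachable)
    print("unexpected:", unexpected_ports(reachable, [80]))
```

Any port reported as unexpected points to either a rule that is broader than intended or a service that should not be running on that host.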

[3]The Session Initiation Protocol (SIP) is commonly used to initiate Voice-over-IP calls. The actual transfer of voice information, however, is done using the Real-time Transport Protocol (RTP).

[4]Note there are many ways of using packets to map a network. Using ICMP echoes is only the most obvious way. Most scanners will not even bother with this option because it is so widely defended against.

[5]The Nmap program and documentation can be found at www.nmap.org, and the Nessus server, client, and documentation can be found on www.nessus.org.
