The Growth of Server-Based Development

Several factors have come together to make this the ideal time to study and understand the nuances of server-based development. Perhaps the most important factor is the emergence of Windows 2000 as the server of choice within many organizations. Windows 2000 provides many features that previous server operating systems did not.

Windows 2000 allows developers to write server-based systems by using the tools that they are already familiar with, such as Microsoft Visual C++, Borland C++, Microsoft Visual Basic, and Borland's Delphi. I will argue that in many cases, the tools based on Visual C++ are the most appropriate for production server systems, but for many applications Visual Basic will suffice. Visual Basic programmers out there are legion, and their work will lead to an onslaught of Visual Basic server solutions in addition to the C and C++ solutions already becoming available.

NetWare

Novell NetWare was previously the server of choice and is still a strong force in the network operating system arena. At its inception, NetWare did exactly what was needed: file and print sharing with what now seems like minimal hardware. NetWare networks frequently supported dozens of users for file and print services using Intel 486-based servers with 32 MB of RAM. However, writing a NetWare Loadable Module (NLM) was a daunting process, and NetWare never emerged as an application server for the masses.

Why was it difficult to write an NLM? Consider the environment. NLMs generally run in ring 0 of the CPU, a ring that provides no memory protection. In the early days of NetWare, running code in ring 0 was a feature rather than a bug because the Intel 80286 and 80386 processors were the most powerful microprocessors available for servers, and code executed faster when running in ring 0. As CPU speed increased, running NLMs in ring 0 became less of a benefit. NetWare 4 and later versions could run NLMs in either ring 0 or ring 3, which was slower but safer. Code running in ring 3 is less likely to bring down the entire server but can still bring down an individual application by performing tasks that in other operating systems might simply cause a resource leak.

The second problem with NetWare as an application server was the limited selection of tools available for NLM development. For a long time, only the Watcom C/C++ compiler was able to create NLMs. Watcom C/C++ is a perfectly capable compiler, but it is not the preferred compiler for the majority of developers. Other compilers can be used for NLM development, but they still require the Watcom linker, which makes using any other compiler more complex. The importance of limited tool selection cannot be overstated. Programmers often have a near-religious attachment to their compilers and need compelling reasons to switch.

Cracks in the Fat Client Model

The most common client/server model before the Internet explosion was the "fat client" model, in which most of the processing took place on the client computer, with only the database and some modest amount of business logic on the server. The client took care of not just the presentation of information but also decisions about what information was shown, as well as when and how information would be saved. The popular tools used for client/server development included Powersoft's PowerBuilder and Visual Basic. These tools left significant portions of a system's logic on the client computer.

The fat client model seemed more entrenched than ever, with ever more powerful client workstations absorbing the increasing amount of code that allowed client/server systems to function. However, about the time that the Internet and, soon after, local internets (or intranets) were emerging, several cracks in the fat client model began to appear. First, the overwhelming cost of upgrading client workstations every year was taking its toll on corporate information technology (IT) budgets. Since the current year's fat client software ran slowly, if at all, on the previous year's PC, new client/server software meant new hardware.

Second, as users began to make use of their powerful new systems and loaded several applications on the client workstation, configuration problems that had been manageable previously became an additional drain on IT budgets. The existence of two mutually exclusive client applications (only one running on a client workstation at a time) became more than a theoretical possibility. Many hours were lost to just such situations, with dynamic-link library (DLL) conflicts the most common cause of failures. The financial burden on IT budgets, christened "Total Cost of Ownership" (TCO), brought about changes in the computer marketplace on a scale not seen since microcomputers became common in the late seventies.

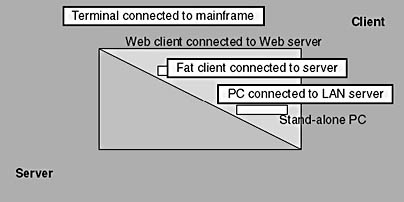

The Need for Server-Based Applications

At the same time that NetWare provided a less-than-hospitable environment for server-based development, the need for server-based applications was skyrocketing because of the Internet. The Internet is at the server-centric extreme of the client/server continuum. Contrary to the media hype of the early nineties, client/server is not a binary choice. Figure 1-3 shows the range of client-only to server-only options, with balanced client/server systems in the middle. Systems can range from stand-alone PCs in which the client does all the work to terminals connected to mainframe computers in which, except for some basic keyboard handling and display management, the server does all the work. The Internet has turned the client/server world upside-down.

Figure 1-3 The client/server continuum.

The Internet: A Solution to TCO Problems

Begun as ARPANET during the cold war, the Internet was designed to be a decentralized system that could withstand the loss of one or more nodes, presumably during nuclear attack. After many years of use, a protocol called Hypertext Transfer Protocol (HTTP) was introduced as a way to share information in a manner different from exclusively text-based methods. HTTP was used to transmit Hypertext Markup Language (HTML) documents. HTML documents contained text and links to graphic elements and were viewed using a browser. A browser was essentially a program that converted HTML page text and instructions to a display that merged text and graphics. HTML pages were located on what became known as the World Wide Web.

Researchers, government agencies, and educational institutions were the primary users of the Internet; for the most part, it was ignored by the general public. As information on the Internet became more consumer oriented and as modem speeds increased, more and more of the general public began to use the Internet. Just as the IBM PC brought about increasingly usable software development tools for microcomputers, the emergence of the Internet caused development tool vendors, from Borland to Microsoft to Sun Microsystems, to create tools for developing ever more powerful Internet applications.

These new Internet development tools (some client-side, some server-side) were attractive not merely to people developing Internet Web pages. Before too long, developers realized that the new Internet development technologies provided some of the solutions they sought to reduce TCO and, specifically, client-side configuration abnormalities. Although the Internet was not of immediate interest to corporate IT managers, the ideas behind the technology were. Developers had created some pretty fantastic applications on the Web, and using these applications over a 28.8-Kbps modem was, if not pleasant, at least possible. What would happen if these applications were operated not over a relatively slow phone line but rather over the overburdened corporate LAN or WAN?

The Intranet: Bandwidth Nirvana

Applying Web technologies to common corporate IT problems created a new model that accomplished more than developers anticipated. Dubbed the "intranet," this new model simplified client-side configuration. To install an application, you merely set up a TCP/IP stack and a browser (standard equipment in current 32-bit Windows operating systems), pointed the browser at an intranet page, and added the page to the "favorite places" list. Compare this process to the nightmare some traditional application installations can become.

Once installation was simplified, a second benefit was realized: because the Internet grew up in a bandwidth-starved environment, intranet applications ran like a champ on the corporate LAN or WAN. As many industries went merger-crazy (for example, healthcare and banking), applications increasingly needed to operate efficiently in a lower-bandwidth environment. Intranet applications fit the bill.

Today additional unanticipated advantages continue to fuel the growth of intranet applications. Virtually all graduating high school and college students have at least a passing knowledge of the Web and how a browser functions as a user interface. Applications that behave as new employees expect allow training to focus on application-specific issues rather than on user interface issues. Once again, the importance of your comfort level with application tools cannot be overstated.

Where There Is an Intranet, There Is a Server

All intranet systems have at least one thing in common: they require a server. Initially, virtually all Internet servers ran one of the several available UNIX variants that were popular in academia. Servers used Common Gateway Interface (CGI) to acquire information entered by the user at the client browser application. CGI operated in a way that is familiar to UNIX wizards, passing information by means of environment variables and command line redirection. Unfortunately, the supply of UNIX wizards is limited, and corporate IT's demand for intranet developers is seemingly unlimited. This led to a problem. Luckily for you and me, the solution is Windows NT.
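The CGI hand-off described above can be sketched in a few lines. This is a minimal illustration rather than a production handler, and it uses Python only for brevity; the same pattern applies in C or C++. It assumes the conventional CGI variables `REQUEST_METHOD`, `QUERY_STRING`, and `CONTENT_LENGTH`, with the body of a POST request supplied on standard input (the redirection the text refers to). The function names `read_cgi_form` and `render_response` are hypothetical and exist only for this example.

```python
import os
import sys
from urllib.parse import parse_qs


def read_cgi_form(environ, stdin):
    """Return the form fields a Web server hands to a CGI child process.

    GET data arrives in the QUERY_STRING environment variable. POST data
    arrives on standard input, with its size given by CONTENT_LENGTH.
    Assumption: the body is plain ASCII form data, so the character count
    read from a text stream matches the byte count.
    """
    method = environ.get("REQUEST_METHOD", "GET").upper()
    if method == "POST":
        length = int(environ.get("CONTENT_LENGTH") or 0)
        raw = stdin.read(length)  # read only what the server promised
    else:
        raw = environ.get("QUERY_STRING", "")
    # parse_qs maps each field name to a list of its values
    return parse_qs(raw, keep_blank_values=True)


def render_response(fields):
    """Build a CGI response: header lines, a blank line, then the body."""
    lines = ["Content-Type: text/plain", ""]
    for name in sorted(fields):
        lines.append(f"{name} = {', '.join(fields[name])}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # When launched by a Web server, the real environment and stdin are used.
    sys.stdout.write(render_response(read_cgi_form(os.environ, sys.stdin)))
```

Because the server starts a fresh process for every request and communicates only through the environment and the standard streams, a CGI program needs no special server API. That simplicity is also why the approach felt natural to UNIX developers and foreign to most others.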

Windows 2000 Hardware Support

Microsoft had long sought to influence the Network Operating System (NOS) world, as it had done with many other operating system and application areas. LAN Manager and OS/2 were early Microsoft attempts to dethrone the leaders in the NOS market, such as Novell NetWare and Banyan VINES. Banyan became a less competitive NOS as time passed, but NetWare was a formidable product that dominated the NOS market for years in terms of sales and installed base.

As previously mentioned, a weakness of NetWare was the difficulty of developing server-based solutions. NetWare NLM development was beyond all but the most dedicated programmers, who would commonly have to move to a new set of tools for NetWare server development.

As Windows NT versions 3.5 and 3.51 became available, the need for a suitable server platform for application development reached its peak. As long as servers continued to do what they had been doing for the last decade (mostly providing printer and file sharing, with some minimal applications running on the server), NetWare was untouchable. When the rules of the game changed, however, and server-based development became more than just a convenience, Windows NT finally found its role as the premier file server for many LAN applications. Although sales and installed base numbers can be argued either way, the tide has turned toward Windows NT for many new server installations and for virtually all servers running nontrivial applications. Windows 2000 Server and Windows 2000 Advanced Server should speed up migration by adding improved support for today's hardware.
