8.4 Maximizing Operational Efficiency

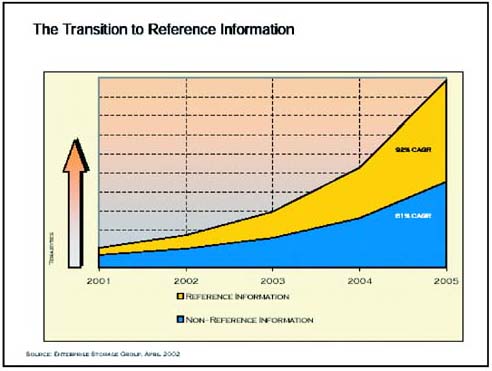

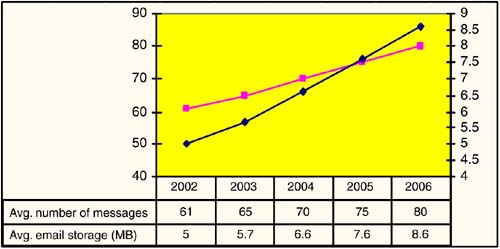

| The examples in the previous section present several compelling reasons to deploy an IP core as part of a storage network deployment. Though the examples span a range of conventional SAN applications, the inclusion of the IP core delivers cost savings and functionality not available in traditional implementations. But the reach and potential for storage applications that make use of a robust, high-speed IP core go well beyond the types of examples described so far. With the increasing fluidity of the end-to-end storage chain, as evidenced by the introduction of commoditized networks, interfaces, and hardware platforms, and the ability to distribute storage functions across these components, several new storage applications emerge. These applications take advantage of available infrastructure beyond conventional means and deliver powerful opportunities to tailor storage infrastructure to particular needs.

In this section, we take a specific look at two implementations for dealing with fixed content and the solutions that were applied. These customer scenarios complement other types of IP storage network examples in the book by maintaining the IP core as a central deployment theme. When storage strategies are combined, companies can develop synergistic technology opportunities that favor lower TCO and increased returns.

8.4.1 Fixed-Content Storage Networking Impact

The type of mission-critical information enterprises store is shifting from traditional database and transaction information to fixed-content information such as email, business documents, medical images, check or invoice images, log files, and other static, flat-file information. Mission-critical fixed content does not change after creation, but it contains important information that must be stored and accessed over a long period of time. Fixed content is growing so rapidly that it is overtaking the amount of database and transactional information stored in corporate networks.
According to a study by the Enterprise Storage Group, fixed-content information is growing at a compound annual growth rate (CAGR) of 92 percent, while database and transactional information is growing at a CAGR of 61 percent. As a percent of overall storage within the enterprise, fixed-content information will overtake database and transactional information by the end of 2004, as shown in Figure 8-11.

Figure 8-11. The transition to reference information. (Source: The Enterprise Storage Group, April 2002)

Today's storage solutions are built for high-availability enterprise applications such as online transaction processing (OLTP), enterprise resource planning (ERP), and data warehousing, with fixed content a relatively small part of the enterprise storage need. As such, the bulk of today's systems cater to the needs of dynamic data and applications. In this dynamic data world, data is stored in volumes and files, and performance is focused on block and file I/O and system availability. In the fixed-content world, data is stored in objects with metadata and "content address" tags, and performance is focused on metadata query processing, real-time indexing, and retrieval.

Fixed content drives two changes in today's storage paradigm: the focus on vertical performance intelligence and the necessity for a networked system. Storage network performance has moved from historical measures of disk density, fault tolerance, and input-output speed to new measures based on application performance. Just as databases have been tuned to perform on specific storage platforms, so must other performance-intensive applications be tuned. In order for a fixed-content system to perform well, applications, database, content search, and storage management must act in concert with hardware components. As such, a vertical performance intelligence emerges in which the link between applications and storage becomes as important to performance as the individual components themselves.
The entire vertical software stack, from application to storage management, must be tuned to address the needs of fixed-content storage. This is different from legacy storage systems, which predominantly focus on component or horizontal intelligence. One way the storage infrastructure performs in such an environment is to physically separate the data from the metadata. Separating data from metadata implies the necessity for a networked infrastructure that can scale the two components independently of each other. Figure 8-12 outlines a comparison between the needs of fixed content and dynamic data storage.

Figure 8-12. Comparing dynamic and fixed-content characteristics.

8.4.2 An Email Storage Case Study

Email has been singled out as a runaway train driving many storage and storage-related expenditures. The requirement, real or perceived, to store and access this information over time poses a serious problem for IT departments and budgets. Not only must IT departments store this information, but users must also have ready access to it to conduct business effectively. In many corporate enterprises, email and office documents are the primary source of the company's corporate history, knowledge, and intellectual property. Therefore, protection of and timely access to this information is as mission-critical as an online transaction database.

Due to SEC regulations, many financial services companies must store email for at least three years. In 2002 the SEC planned to levy over $10 million in penalties on financial services companies for noncompliance with these regulations. In other regulated vertical industries, such as pharmaceuticals, transportation, energy, and government, similar regulatory trends mandate saving email and other fixed content (e.g., customer records, voice recordings) for long periods of time. The proliferation of corporate scandals in 2002 will push this trend out into other vertical industries.
Email has become the de facto audit tool for all mission-critical areas of a business.

According to the Radicati Group, average email storage per user per day will grow from 5 MB in 2002 to 8.6 MB by 2006. See Figure 8-13.

Figure 8-13. Increase in email use per user per day. (Source: The Radicati Group, Inc.)

To explore the impact of fixed-content email storage on storage network design, let's consider a financial services company that charges its user departments approximately $0.25 per MB per month to store email and email attachments. With a current email box limit of 50 MB, this company spends approximately $150 per user per year. With raw storage hardware at approximately $0.05 per MB, this means that other hard and soft costs of storing email are over 50 times the cost of the raw storage media alone. Industry benchmarks indicate that the costs of managing storage are normally 7 to 10 times the cost of storage, but email presents some extraordinary demands on the system.

The problem arises from storing email over a long period of time. The first component of the storage architecture is storing the email online, where users have a capacity limit for their mailbox, perhaps 50 MB as in the example above. The second component of the architecture is more problematic, as it archives the email when it has dropped off the mailbox infrastructure but still needs to be accessed. Archives are valuable only if a user can access information within a minute or two to solve a business need. For example, quality control managers, audit teams, and compliance officers must be able to query and retrieve email over multiyear periods to conduct their everyday business processes.

To illustrate the magnitude of archiving email, consider the following example from another financial services company. This company archives 1.5 TB of email per month, with an average message size of 33 kilobytes (no attachments).
To meet regulatory requirements, email must be stored and must be accessible for a minimum of three years. With 54 TB and 1.64 billion objects to store, the demands and costs explode:

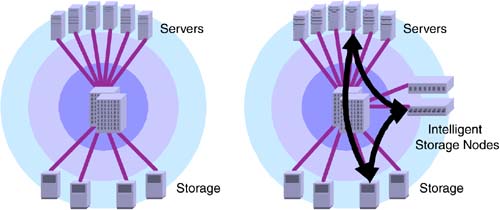

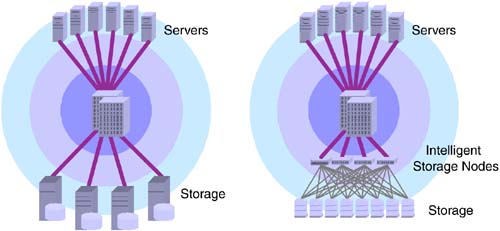
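
The figures quoted in the two financial services examples above can be reproduced with a few lines of arithmetic. The sketch below is only a sanity check, not part of either company's tooling. The variable names are illustrative, and the use of decimal units (1 TB = 10^12 bytes, 1 KB = 10^3 bytes) is an assumption chosen because it reproduces the quoted object count.

```python
# Sanity check of the email storage figures quoted in this section.
# All inputs come from the text; decimal units (1 TB = 10**12 bytes,
# 1 KB = 10**3 bytes) are an assumption that reproduces the quoted totals.

# Chargeback example: first financial services company
charge_per_mb_month = 0.25   # dollars charged per MB per month
mailbox_limit_mb = 50        # mailbox limit per user, in MB
raw_cost_per_mb = 0.05       # raw storage hardware cost per MB, in dollars

annual_charge_per_user = charge_per_mb_month * mailbox_limit_mb * 12
raw_hardware_per_user = raw_cost_per_mb * mailbox_limit_mb
# Simplification: compares one year of charges with the one-time media cost.
cost_multiple = annual_charge_per_user / raw_hardware_per_user

# Archive example: second financial services company
archive_tb_per_month = 1.5   # TB of email archived each month
avg_message_kb = 33          # average message size in KB, no attachments
retention_months = 36        # three-year regulatory retention

archive_tb = archive_tb_per_month * retention_months
archive_objects = archive_tb * 10**12 / (avg_message_kb * 10**3)

print(f"Annual charge per user: ${annual_charge_per_user:,.2f}")
print(f"Raw media per mailbox:  ${raw_hardware_per_user:,.2f}")
print(f"Charge vs. raw media:   {cost_multiple:.0f}x")
print(f"Archive size:           {archive_tb:.0f} TB")
print(f"Archived objects:       {archive_objects / 1e9:.2f} billion")
```

Under these assumptions the chargeback works out to $150 per user per year, about 60 times the raw media cost of a full mailbox, and the three-year archive comes to 54 TB and roughly 1.64 billion messages, in line with the text.
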

In this case, the storage and management of the metadata becomes a bigger problem than the storage of the data itself. The costs of the storage real estate are only the beginning as you consider the CPUs and backup infrastructure necessary to support these systems. The staffing costs are also significant if you consider what DBA resources are needed to manage an index with 1.64 billion entries.

To solve the email storage management problem, customers have deployed solutions that separate the metadata from the actual data to provide highly scalable indexing solutions, as shown in Figure 8-14.

Figure 8-14. Implementing a distributed system for email storage.

The separation of index information from content allows allocation of independent resources. In Figure 8-14, the intelligent storage node represents an indexing node, and additional nodes can be added as needed. If the querying time needs acceleration, but the total amount of data hasn't changed, adding indexing nodes can help. Similarly, if more storage is required for content yet there is flexibility on query and access times, only storage needs to be added. Of course, the flexibility is derived from the separation of resources on a network, typically by using IP and Ethernet. While this type of storage solution varies significantly from the IP storage network configurations described earlier in this chapter, it highlights the breadth of solutions that make use of the IP core.

8.4.3 Imaging Fixed Content

Fixed content comes in a variety of types, each requiring a specific optimized storage solution. Email indexing data is relatively easy to manipulate. Consider the simplicity of sorting by standard email fields such as to, from, date, subject, and so on. Even without indexing data, the ability to take a binary scan of an email message is quite simple.
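
As a minimal sketch of the separation between index and content described in Section 8.4.2, the example below keeps message bodies in one object and metadata for standard email fields in another. It is an illustration under stated assumptions, not the design of any product mentioned here: the class names `ContentStore` and `IndexNode` are hypothetical, SHA-256 is assumed as the content address, and in-memory dictionaries stand in for what would be separate nodes on an IP network.

```python
import hashlib
from collections import defaultdict


class ContentStore:
    """Hypothetical storage node: holds message bodies by content address."""

    def __init__(self):
        self._objects = {}

    def put(self, data: bytes) -> str:
        # Assumption: the content address is the SHA-256 digest of the body.
        address = hashlib.sha256(data).hexdigest()
        self._objects[address] = data
        return address

    def get(self, address: str) -> bytes:
        return self._objects[address]


class IndexNode:
    """Hypothetical indexing node: holds only metadata, never message bodies."""

    def __init__(self):
        # Maps (field, value) pairs to the set of matching content addresses.
        self._index = defaultdict(set)

    def add(self, address: str, metadata: dict) -> None:
        for field, value in metadata.items():
            self._index[(field, value)].add(address)

    def query(self, **criteria) -> set:
        # Intersect the address sets for every requested field/value pair.
        matches = [self._index.get(item, set()) for item in criteria.items()]
        return set.intersection(*matches) if matches else set()


store = ContentStore()
index = IndexNode()

address = store.put(b"Quarterly audit notes")
index.add(address, {
    "sender": "alice@example.com",
    "recipient": "bob@example.com",
    "date": "2002-04-01",
    "subject": "Q1 audit",
})

# The query touches only the index; content is fetched afterwards by address.
hits = index.query(sender="alice@example.com", date="2002-04-01")
bodies = [store.get(a) for a in hits]
print(bodies)
```

Because queries are resolved entirely on the index and content is retrieved only by address, the two sides can be sized independently, which is the property the text attributes to the distributed design.
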
Standard images, specifically digital photographs, are not easily indexed via metadata or binary scan and therefore cannot universally benefit from the same solutions. Anyone with a digital camera knows the trials and tribulations of keeping track of digital pictures and how complicated the filing of that data can get. Yet digital images are rapidly consuming storage, both individually and for companies that specialize in processing, printing, and storing digital media. Every boost in the megapixel capabilities of a new camera directly impacts the amount of storage required for the photographs.

In 1999 several companies jumped into the online photo business, offering digital photograph storage as a way for consumers to share and print photographs over the Internet. Many of these companies did not survive the dotcom meltdown and were out of business within a couple of years. However, several survived and continued to prosper. In the race to stay in business, managing storage played an increasingly competitive role in these businesses. Without a cost-effective means to handle the influx of terabytes of customer photographs, these companies could not survive.

One promising company that recognized this challenge early on and took an aggressive approach to managing storage costs is Snapfish. In January 2000, during the initial build-out of its storage infrastructure, the company spent just shy of $200,000 per terabyte for roughly 8 terabytes of usable storage. By late 2002, that figure dropped to roughly $10,000 per terabyte. While some of that price decline can be attributed to macroeconomic factors and the pricing power transfer from vendors to customers, it is also a direct result of an aggressive company campaign to drive storage costs down through adaptive infrastructure and a shift in the architectural model for storage.

8.4.4 Achieving the Ten-Thousand-Dollar Terabyte

In January 2000 Snapfish executives selected a complete storage solution from a prominent enterprise storage vendor. The package included a single tower with just over 8 usable terabytes for $1.6 million, or roughly $200,000 per terabyte, and a three-year support and maintenance agreement. To facilitate the image storage, Snapfish selected a NAS approach and front-ended the storage with a vendor-provided NAS head. Both the database files of customer data and the images were housed on the same storage. However, this particular solution lacked flexibility in that the vendor controlled all maintenance and configuration changes. In the rapid development of Snapfish's business and infrastructure, this relationship proved inefficient in handling the customer's needs.

By the end of 2000, the company knew it had to make adjustments to fuel the dramatic growth in its business. One of the unique aspects of Snapfish's business model is that it develops print film for customers, yet also provides a wide range of digital photography services. But with a roll of film using about 10 megabytes of storage, and over 100,000 rolls coming in every month, Snapfish was expanding by over 1 terabyte a month.

A lower cost solution for image storage was needed. Since the transactional nature of the Web site required more rapid response than the high-volume nature of the image storage, the company split its storage requirements. It moved the customer database information to storage provided by its primary server vendor, facilitating tighter integration and minimizing support concerns. At the same time, it moved the high-capacity image storage to specialized NAS filers. The new NAS storage included just over 6 terabytes at an approximate cost of $120,000 per terabyte. This cut storage costs by over 40 percent, but more importantly, placed the company in an advantageous position.
By going through the process of selecting and migrating to a new vendor, it developed the technical expertise to handle a shift in the storage infrastructure and gained negotiating leverage in vendor selection.

Another unique aspect of Snapfish's business is high-resolution image retention. The company stores high-resolution images of customer photographs for up to 12 months based on a single print purchase. This leads to enormous requirements for high-resolution storage. Only 10 percent of the overall storage requirements serve low-resolution images, such as those that are used for display on the Web site. Therefore, the company has two distinct classes of image storage. Low-resolution images are frequently accessed and require rapid response times, but do not consume excessive storage space. High-resolution images have medium access requirements, require moderate response times, and consume approximately 90 percent of the total storage. Of course, in both cases, reliability and high availability are paramount.

The quest to find low-cost storage for high-resolution images led Snapfish to place a bet on a low-cost NAS provider using a commodity NAS head with less expensive IDE drives on the back end. Towards the end of 2001, it took a chance with a moderate storage expense of $18,000 for 1.6 terabytes, for a cost of $11,250 per terabyte, a startling drop to just over 5 percent of the cost per terabyte only two years prior! Unfortunately, that purchase came with a price of excessive downtime that ultimately became untenable. Yet the aggressive nature of Snapfish's storage purchasing, including what could be called experimentation, continued to add migration expertise and negotiating leverage to the organization.

Ultimately, the company went back to specialized NAS filers, though this time it selected a product line that included IDE drives.
By returning to the brand-name vendor it had used previously, it did pay a slight premium, purchasing over 8 terabytes at a cost of approximately $25,000 per terabyte. This provided the required reliability and availability but kept the cost of the high-resolution image storage low.

At the time of this book's printing, Snapfish was in the middle of its next storage purchasing decision as the direction of the overall industry moves towards distributed intelligence throughout the network with a focus on the IP storage model. The continued addition of individual NAS filers left the IT department with a less than optimal method of expanding storage. With each filer having a usable capacity of under 10 terabytes, the configuration fostered "pockets" of storage. Also, having to add a filer to increase the number of spindles in the configuration (or the total number of disk drives) meant adding overhead simply to expand capacity.

Snapfish looked to a distributed NAS solution. Instead of the traditional filer-based approach, this new solution incorporated a set of distributed NAS heads, each connecting to a common disk space. Server requests can access any available NAS head that in turn queries the appropriate disk drives to retrieve the files. The solution provides significant scalability and requires only additional disk drives, not additional filers, to expand capacity.

This distributed NAS solution builds upon a Gigabit Ethernet core for the storage infrastructure and fits into the overall IP storage model. In this case, the access layer holds the disk drives, which are IDE-based to provide a low-cost solution. The distributed NAS heads fit in the IP storage distribution layer, providing file access between servers and disk drives. By maintaining an IP core, the IT department has tremendous flexibility to add other storage platforms to the overall infrastructure as needed. Simplified diagrams of the initial and future configurations are shown in Figure 8-15.
Figure 8-15. Distributed intelligent storage nodes for NAS scalability. |
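
The cost-per-terabyte progression described in Section 8.4.4 can be checked with simple arithmetic. The sketch below uses only the rounded figures quoted in the text. The helper names are illustrative, the capacities described as "just over" a value are taken at that value, and 1 TB is assumed to equal 10^6 MB.

```python
# Cost-per-terabyte progression from the rounded figures in Section 8.4.4.
# Assumptions: "just over 8 TB" is treated as 8 TB, and 1 TB = 10**6 MB.

def cost_per_tb(total_dollars: float, usable_tb: float) -> float:
    """Return the purchase price divided by the usable capacity in TB."""
    return total_dollars / usable_tb


baseline = cost_per_tb(1_600_000, 8)       # January 2000 enterprise tower
nas_filers = 120_000                       # specialized NAS filers (quoted per TB)
commodity_ide = cost_per_tb(18_000, 1.6)   # late 2001 commodity NAS with IDE drives
brand_ide = 25_000                         # brand-name filers with IDE (quoted per TB)


def percent_of_baseline(per_tb: float) -> float:
    """Express a cost per TB as a percentage of the January 2000 baseline."""
    return 100 * per_tb / baseline


# Monthly growth: 100,000 rolls of film at about 10 MB per roll.
monthly_growth_tb = 100_000 * 10 / 10**6

for label, per_tb in [
    ("Jan 2000 enterprise tower", baseline),
    ("Specialized NAS filers", nas_filers),
    ("Commodity NAS, IDE drives", commodity_ide),
    ("Brand-name filers, IDE", brand_ide),
]:
    print(f"{label:28s} ${per_tb:>10,.0f}/TB  {percent_of_baseline(per_tb):5.1f}% of baseline")
print(f"Image growth: {monthly_growth_tb:.1f} TB per month")
```

With these rounded inputs, the move to specialized NAS filers is a reduction of about 40 percent from the baseline, the commodity purchase lands at roughly 5.6 percent of it, and the brand-name IDE filers at 12.5 percent.
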
