2.6 Storage Area Networks

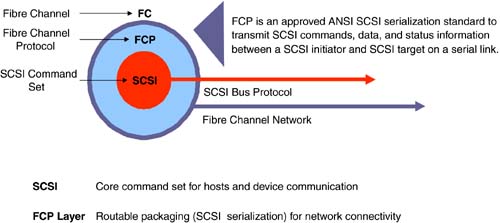

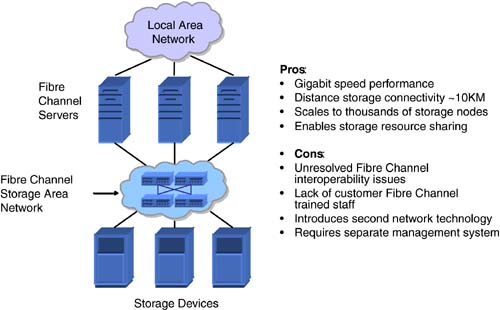

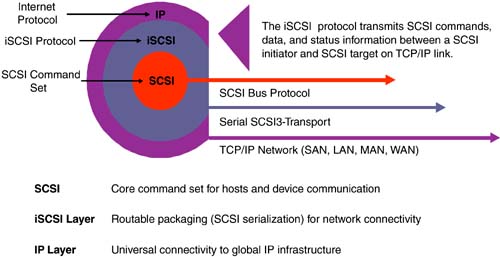

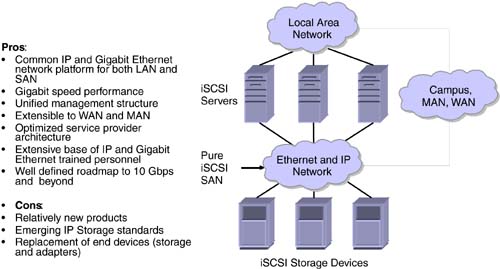

| Entire books have been written about SANs. Two notable volumes are Designing Storage Area Networks and IP SANs, both by Tom Clark. For those interested in the next level of technical detail, both serve as excellent references. Achieving the roadmap objectives of IP Storage Networking: Straight to the Core requires a thorough understanding of SANs and the underlying infrastructure of both Fibre Channel and IP SANs. This section covers that material with the intent of moving quickly on to the software spectrum that maximizes the impact of a flexible, well-architected infrastructure.

2.6.1 SAN History and Background

In the mid-1990s, IT professionals recognized the gap between DAS and NAS, and momentum grew for a mechanism that combined the performance and direct access of DAS with the network flexibility of NAS. The solution that emerged was Fibre Channel.

Fibre Channel is actually a SCSI-based implementation, only in a serial format. SCSI commands are at the root of all storage exchanges between initiators and targets. Commands such as write, read, and acknowledge provide the rules of engagement between two devices. This SCSI command set has proved invaluable as the lingua franca for storage.

Originally, the SCSI command set could operate only on top of a SCSI parallel bus. This cabling mechanism involved large, high-density cables with as many as 68 wires per cable. SCSI cabling cannot extend beyond 25 meters and can effectively support only a handful of devices.

Fibre Channel solved the SCSI limitations of device count and distance with two components: the Fibre Channel Protocol (FCP) and Fibre Channel networks (see Figure 2-9). It is important to remember that these two components do not necessarily need to operate together, especially when considering implementations of IP storage.

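The separation between the SCSI command set and the transport that carries it can be illustrated with a small sketch. The `build_read10_cdb` function packs a standard 10-byte SCSI READ(10) command descriptor block. The `wrap` and `unwrap` helpers, the envelope layout, and the transport labels `"fcp"` and `"ip-storage"` are hypothetical and exist only for illustration; they are not the real FCP or IP storage frame formats.

```python
import struct
from typing import Tuple

READ_10 = 0x28  # SCSI READ(10) operation code


def build_read10_cdb(lba: int, blocks: int) -> bytes:
    """Pack a 10-byte READ(10) command descriptor block (big-endian)."""
    # Fields: opcode, flags, 32-bit logical block address,
    # group number, 16-bit transfer length, control byte.
    return struct.pack(">BBIBHB", READ_10, 0, lba, 0, blocks, 0)


def wrap(transport: str, cdb: bytes) -> bytes:
    """Toy envelope (assumption): tag length, tag, CDB length, CDB."""
    tag = transport.encode("ascii")
    return struct.pack(">B", len(tag)) + tag + struct.pack(">H", len(cdb)) + cdb


def unwrap(frame: bytes) -> Tuple[str, bytes]:
    """Recover the transport tag and the untouched CDB from a toy envelope."""
    tag_len = frame[0]
    start = 1 + tag_len
    tag = frame[1:start].decode("ascii")
    (cdb_len,) = struct.unpack(">H", frame[start:start + 2])
    cdb = frame[start + 2:start + 2 + cdb_len]
    return tag, cdb


cdb = build_read10_cdb(lba=2048, blocks=8)
for transport in ("fcp", "ip-storage"):
    tag, recovered = unwrap(wrap(transport, cdb))
    # The same command bytes come back regardless of the envelope used.
    print(tag, recovered == cdb)
```

The point of the sketch is only that the command bytes stay identical while the envelope changes, which is why the protocol and the network need not be deployed together.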
With the ability to layer the SCSI command set onto FCP, and then transmit the information across a high-speed serial network built with Fibre Channel, IT professionals could now build networked storage infrastructures in which servers have direct access to storage devices, new storage devices can easily be added to the network, and storage capacity can be shared among multiple servers. These SAN benefits, including the ability for storage professionals to manage the ever-increasing amount of data, led to the adoption of this architecture by virtually all large corporations.

Figure 2-9. Basics of Fibre Channel Protocol (FCP).

With the routable packaging in place via FCP, shown in Figure 2-9, the network build-out for storage could take place with Fibre Channel SANs, shown in Figure 2-10.

Figure 2-10. Fibre Channel storage area networks.

Fibre Channel SANs proved invaluable for storage professionals looking to expand direct-access storage capacity and utilization. However, Fibre Channel SANs came with their own set of issues that left the end-to-end solution incomplete. These include unresolved interoperability issues between multiple vendors' products. While compatibility between Fibre Channel adapters in servers and Fibre Channel storage devices is mature, building Fibre Channel fabrics with switches and directors from multiple vendors remains a difficult and complex process. Because Fibre Channel SANs introduce a new network technology different from IP and Ethernet, they require new staff training, new equipment, and new management systems. These factors add considerably to the total cost of the storage infrastructure. Finally, Fibre Channel networking was designed as a data center technology and was not intended to travel long distances across WANs.
With today's requirements for high availability across geographic areas, Fibre Channel networks cannot compete independently, even with the help of extenders or Dense Wavelength Division Multiplexing (DWDM) products. At a maximum, these solutions can extend Fibre Channel traffic to around 100 kilometers.

2.6.2 Pure IP Storage Networks

Starting in 2000, the concept of a pure IP SAN took hold. The basic idea was to connect servers and storage devices, with the direct-access block methods that applications expect, over an Ethernet and IP network. This would give IT professionals the ability to use existing, well-understood, mature networking technology in conjunction with block-addressable storage devices. The underlying technology enabling this architecture is known as Internet Small Computer Systems Interface, or iSCSI (see Figure 2-11).

Figure 2-11. Basics of Internet Small Computer Systems Interface (iSCSI).

iSCSI, in its simplest form, is a mechanism for taking block-oriented SCSI commands and mapping them to an IP network. The protocol can be implemented directly on servers or storage devices to allow native connectivity to IP and Ethernet networks or fabrics. Once that is accomplished, storage applications requiring direct access to storage devices can extend that connection across an IP and Ethernet network, which has a nearly ubiquitous footprint across corporate and carrier infrastructures. The basics of the iSCSI protocol are outlined in Figure 2-11, while an implementation example is shown in Figure 2-12.

Figure 2-12. Pure iSCSI storage networks.

This wholehearted embrace of underlying IP and Ethernet technologies (mature network technology, installed base, software integration, industry research) should guarantee that iSCSI will succeed as a storage transport. However, networking technologies succeed based on provision of a transition path.
The same applies to iSCSI, where the transition path may run from internal server storage to iSCSI SANs, from parallel SCSI devices to iSCSI, or from enterprise Fibre Channel storage to an iSCSI SAN.

Adoption of new technologies occurs most frequently among those who stand to gain the most. With iSCSI, that would indicate adoption first in mid-size to large corporations that have already deployed a Fibre Channel SAN; recognize the benefits of direct-access, networked storage infrastructures; and need the ability to scale to large deployments across geographically dispersed locations. This profile often accompanies a significant storage budget that could be trimmed considerably by the cost and complexity savings of an IP-centric approach.

For a pure iSCSI storage network, no accommodation is made for Fibre Channel. More specifically, the iSCSI specification, as ratified by the IETF, makes no reference to Fibre Channel. That protocol conversion is outside the purview of the standards organization and is left completely to vendor implementation.

Several mechanisms exist to enhance current Fibre Channel SANs with IP. Optimized storage deployment depends on careful analysis of these solutions, attention to control points within the architecture, and recognition that aggressively embracing IP networking can lead to dramatic total cost benefits. These considerations are covered in Section 2.6.5, "Enhancing Fibre Channel SANs with IP."

2.6.3 SAN GUIDANCE: Comparing Ethernet/IP and Fibre Channel Fabrics

With the introduction of IP storage as a capable storage network transport, many comparisons have been drawn between the two technologies. For a fair comparison, one must recognize that Fibre Channel and Ethernet are both Layer 2 networking technologies that are virtually equivalent in performance. Both provide point-to-point, full-duplex, switched architectures. It is very hard to differentiate networking technologies with these common characteristics.
Therefore, speeds-and-feeds comparisons between Fibre Channel and Ethernet are largely irrelevant. However, Ethernet is the largest home for the Internet Protocol, and IP ubiquity dramatically expands fabric flexibility. The equivalent in Fibre Channel would be FCP, but since FCP works only on Fibre Channel fabrics, it cannot compete with IP as an intelligent packaging mechanism. With that in mind, Table 2-1 depicts some fabric differences.

Fibre Channel SANs function well in data center environments and are useful for massive Fibre Channel end-device consolidation. But for the reasons identified above, Fibre Channel requires complementary technologies such as IP and Ethernet to help integrate storage into overall corporate cost and management objectives.

Table 2-1. Comparison of Fibre Channel and Ethernet/IP Fabrics

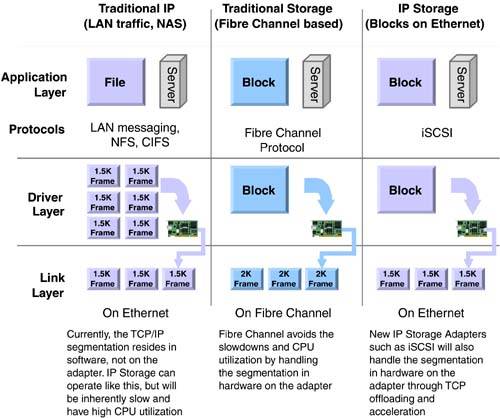

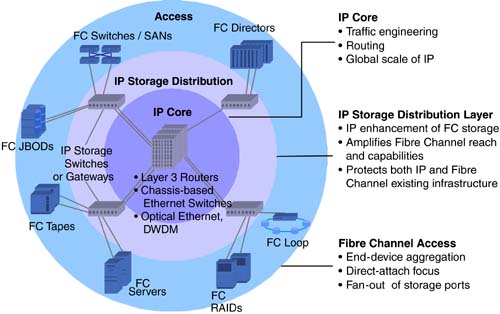

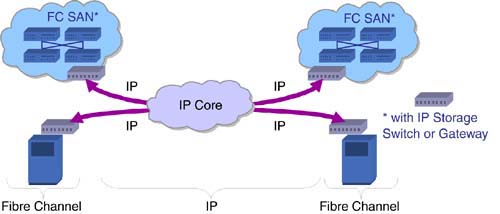

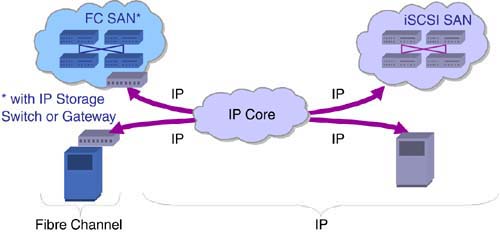

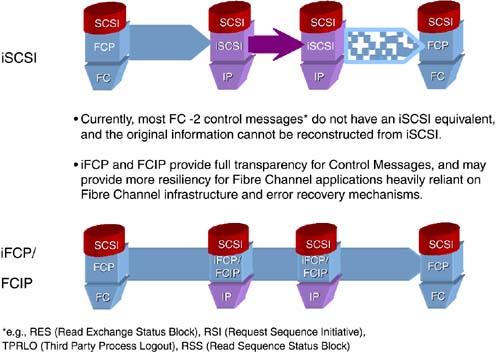

2.6.4 Host Bus Adapters and Network Interface Cards

Regardless of the networking interconnect, servers and storage devices need methods to take data from the CPU or the disk drives out to the wire. Servers use HBAs or network interface cards (NICs) for such functions, and storage subsystems use controllers. Both types of devices are functionally similar. Frequently, end-system connectivity gets inappropriately mixed in with network connectivity to describe SANs, but the two are fundamentally different. Because networking fabrics can perform wire-speed conversion between different cabling and protocols, end-system interconnects and network fabrics need not be identical.

Given the dramatic differences between traditional IP-based LAN traffic using NICs and traditional storage-based traffic using Fibre Channel HBAs, it helps to clarify how these fit with new IP storage adapters, such as iSCSI adapters. In the traditional IP case, TCP/IP segmentation resided in the host application layer. When the server wanted to send information out on the wire (link layer), it had to segment the file into smaller frames that were sent to the NIC driver layer. The driver layer had the simple task of relaying those packets to the wire. With large files, however, the host-based process of parsing the information into smaller units consumed considerable CPU cycles, and ultimately led to poor performance.

Fibre Channel-based HBAs compensated for this inefficiency by moving the data segmentation directly to the card and letting the card do the heavy lifting of converting to smaller frames for the link layer. This freed CPU cycles for application processing and created a highly efficient mechanism for moving data out to the wire.

Combining these two approaches brings the efficiency of the Fibre Channel approach to IP, through a process called TCP/IP offload. TCP/IP offload can be used for network-based servers using TCP/IP, including iSCSI or NAS.
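To make the host-side cost concrete, here is a minimal sketch of software segmentation, the per-segment work that an offload adapter takes over from the CPU. The function name `segment` and the 1,460-byte segment size are assumptions chosen for illustration (1,460 bytes is a typical TCP payload on standard 1,500-byte Ethernet); the source does not specify either.

```python
from typing import List


def segment(payload: bytes, max_segment: int = 1460) -> List[bytes]:
    """Split a payload into chunks no larger than max_segment bytes.

    Without offload, the host CPU performs this loop (plus header and
    checksum work, not shown here) for every segment it sends.
    """
    if max_segment <= 0:
        raise ValueError("max_segment must be positive")
    return [payload[i:i + max_segment]
            for i in range(0, len(payload), max_segment)]


file_data = bytes(1_000_000)   # a 1 MB payload of zero bytes
segments = segment(file_data)
print(len(segments))           # 685 segments for 1,000,000 bytes
print(len(segments[-1]))       # the final, shorter segment: 1360 bytes
```

Even this small payload produces hundreds of units of per-segment work, which suggests why large transfers on a host without offload compete with applications for CPU cycles.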
The data segmentation once performed in the host now moves to the card, freeing CPU cycles. Also, with iSCSI the data comes out of the server directly onto a TCP/IP and Ethernet link, providing native access to IP networks and the corresponding universal reach.

IP storage can run on traditional IP NICs through software drivers, but CPU utilization will be high and could impact application performance during operating hours. During off-peak times, when CPU cycles are available, this approach may be sufficient.

IP storage adapters can be further categorized by their offload mechanisms. At the basic level, all IP storage adapters employ some form of TCP/IP offloading. Further, some adapters offload the iSCSI process from the host as well.

The differences among these implementations call for examining several features during evaluation. First and foremost, IP storage adapters should provide wire-speed throughput with CPU utilization of less than 10 percent. This ensures that even in times of peak processing, the adapter will perform without consuming more than 10 percent of the available CPU resources. Additionally, features such as integrated storage and network traffic processing on a single card should be evaluated. Even if such features aren't used all the time, common interface cards for network and storage connectivity cut redundancy costs dramatically. For example, two integrated cards could operate together, one in network mode and the other in storage mode. If one fails, the other takes over in both modes. While performance may be impacted, the cost savings for redundancy likely far outweigh that trade-off. Finally, dual-pathing and link aggregation of multiple adapters may be required for high-performance, mission-critical applications. These features should be present in IP storage adapters.

Figure 2-13 diagrams the basics of traditional IP network adapters, traditional storage adapters, and new IP storage adapters.

Figure 2-13.
Network interface cards, host bus adapters, IP storage adapters.

2.6.5 Enhancing Fibre Channel SANs with IP

Critical decisions face storage and networking professionals as they try to balance Fibre Channel storage and SAN investment with IP networking investment. The buildup of two incompatible switched, full-duplex, point-to-point networking architectures simply cannot continue long term. It behooves everyone in the organization, from the CIO to storage managers, to question maintaining separate, independent networking topologies based on different technologies, such as one based on Fibre Channel and one based on Ethernet.

Traditionally, there has been a distinct separation between networking groups and storage groups within corporate IT departments. Some storage groups have claimed that networking technologies cannot support their requirements and that independent storage traffic requires special networks. In reality, IP and Ethernet technologies have supported storage traffic for some time (in file-level formats), and recent innovations such as iSCSI provide block-level transport as well. Going forward, networking professionals interested in an overall view of the IT infrastructure will benefit from a closer look at storage, and storage professionals will benefit from a closer look at networking.

Whether or not the merging of Fibre Channel and IP technologies fits certain organizational structures is largely irrelevant. Market forces behind the integration of these technologies are upon us, and savvy technologists and business leaders will embrace this dynamic change.

2.6.6 Making Use of the IP Core

Enhancing Fibre Channel solutions with IP starts with an understanding of the IP core. This part of the network provides the most performance, scalability, and intelligence to facilitate the internetworking of any devices.
Equipment used in the IP core can include routers, chassis-based enterprise Ethernet switches, and optical networking equipment using standard Ethernet or DWDM. Other networking technologies include ATM, Frame Relay, and SONET, all of which have mature mechanisms for handling IP transport.

At the edge of the network reside Fibre Channel devices, including servers, storage, and Fibre Channel SAN switches and directors. This equipment provides access to Fibre Channel devices, specifically end-device aggregation and the fan-out of storage ports.

To make use of the existing IP core, which spans enterprise, campus, metropolitan area networks (MANs), and wide area networks (WANs), an IP storage distribution layer integrates the Fibre Channel devices. This intelligent mechanism for protocol conversion enables Fibre Channel to extend its reach and capabilities in ways that it could not accomplish single-handedly. The basics of this model are shown in Figure 2-14.

Figure 2-14. Using IP to enhance Fibre Channel.

2.6.7 Linking Fibre Channel to Fibre Channel with IP

The most common initial deployments for Fibre Channel using IP include remote replication for large Fibre Channel disk subsystems and linking two Fibre Channel SANs across IP, otherwise known as SAN extension. In the first case, IP storage switches or gateways front-end each Fibre Channel subsystem, providing Fibre Channel access to the disks and IP and Ethernet connectivity to the network. Typically, a subsystem-based replication application (such as EMC's SRDF, Hitachi's TrueCopy, HP/Compaq's DRM, or XIOtech's REDI SAN Links) then operates on top of this physical link. Across a pair of subsystems, one assumes the initiator role while the other assumes the target role, and the appropriate data is replicated through synchronous or asynchronous mechanisms.

Similar architectures exist that may also include Fibre Channel SANs built with switches and directors.
Those Fibre Channel SAN islands can also connect via IP using IP storage switches or gateways. Both of these options are shown in Figure 2-15. Servers and storage within each SAN can be assigned to view other devices locally as well as across the IP connection.

Figure 2-15. Mechanisms for linking Fibre Channel SANs and devices with IP networks.

The benefits of these architectures include the ability to use readily available and cost-effective IP networks across all distances. Typically, these costs can be an order of magnitude lower than the costs associated with the dedicated dark fiber-optic cabling or DWDM solutions required by pure Fibre Channel SANs. Also, adoption of IP technologies within the core prepares for the addition of native iSCSI devices.

2.6.8 Integrating iSCSI with Fibre Channel across IP

The addition of iSCSI devices means that more of the connection between initiators and targets can be IP-centric. Storage planners gain tremendous flexibility and management advantages through this option, maximizing the use of IP networking and localizing the Fibre Channel component to the attachment of storage devices.

Using iSCSI devices with native IP interfaces allows the devices to talk to each other across any IP and Ethernet network. However, iSCSI makes no provision for Fibre Channel, and the conversion between iSCSI devices and Fibre Channel devices must reside within the network. This protocol conversion typically takes place within an IP storage switch or gateway that supports both iSCSI and Fibre Channel. It can also take place in a server with specialized software or even within a chip embedded in a storage subsystem, as shown in Figure 2-16.

Figure 2-16. Mechanisms for linking Fibre Channel SANs to iSCSI with IP networks.

For a single device-to-device session, such as server to disk, most multiprotocol products can easily accomplish this task.
However, for more sophisticated storage device-layer interaction, such as device-to-device interconnect for remote mirroring or SAN extension/interconnect, the protocol conversion process may have more impact on the overall operation. Since iSCSI and Fibre Channel use separate methods of SCSI command encapsulation, the various "states" of each storage session differ between the implementations. And while in a perfect world the conversion process should not affect the overall integrity of the transaction, there is no practical way to preserve complete integrity across two translations (Fibre Channel to iSCSI and iSCSI to Fibre Channel). Therefore, using iSCSI to interconnect two Fibre Channel disk subsystems or two Fibre Channel SANs adds unnecessary risk to the overall system, particularly when considering the storage error conditions that must be kept in check. This is shown in Figure 2-17. Mitigating this risk requires a complementary approach to iSCSI, embracing the IP network, and aiming for a transition path to pure IP SANs.

Figure 2-17. iSCSI and iFCP/FCIP to connect two Fibre Channel end nodes. |
