The FCC New Spectrum Policy

The American spectrum management regime is approximately 90 years old. In the opinion of FCC Chairman Michael Powell, it needs to be reexamined and taken in a new direction. Historically, four core assumptions have shaped spectrum policy: (1) unregulated radio interference will lead to chaos; (2) spectrum is scarce; (3) government command and control of the scarce spectrum resource is the only way chaos can be avoided; and (4) the public interest centers on the government choosing the highest and best use of the spectrum. The following sections examine each of these four areas, first as a problem and then with its proposed solution.

Interference-The Problem

Since 1927, interference protection has been at the core of federal regulators' spectrum mission; the Radio Act of 1927 empowered the Federal Radio Commission to address interference concerns. Although interference protection remains essential to the mission, interference rules that are too strict limit users' ability to offer new services, while rules that are too lax may harm existing services. I believe the Commission should continuously examine whether there are market or technological solutions that can, in the long run, replace or supplement pure regulatory solutions to interference.

The FCC's current interference rules were typically developed around the expected technical characteristics of a single service in a given band. The rules for most services include limits on power and emissions from transmitters. Each time an existing service needs to evolve with the demands of its users, the licensee has to come back to the Commission for relief from the original rules. This process is not only inefficient but can also stymie innovation.

Due to the complexity of interference issues and the RF environment, interference protection solutions may be largely technology driven. Interference is not caused solely by transmitters, although that is the assumption on which the regulations are almost exclusively based. Interference is often as much a product of receivers that are too dumb, too sensitive, or too cheap to filter out unwanted signals, yet the FCC's decades-old rules have generally ignored receivers. Emerging communications technologies are becoming more tolerant of interference through sensing and adaptive capabilities. Such devices can sense what noise, interference, or other signals are present on a given channel and then adapt, for example by moving to a clear channel on which they can be heard.
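The sense-and-adapt behavior described above can be sketched in a few lines. This is a minimal illustration, not how any particular product works: it assumes the sensing step has already produced a measured noise-plus-interference level (in dBm) for each candidate channel, and the function name `pick_clear_channel`, the channel numbers, the threshold, and the levels are all hypothetical.

```python
from typing import Dict, Optional


def pick_clear_channel(noise_dbm: Dict[int, float],
                       max_noise_dbm: float) -> Optional[int]:
    """Return the quietest channel whose sensed noise/interference level is at
    or below max_noise_dbm, or None if every channel is too busy."""
    candidates = {ch: p for ch, p in noise_dbm.items() if p <= max_noise_dbm}
    if not candidates:
        return None  # defer rather than add to a congested channel
    return min(candidates, key=candidates.get)


# Hypothetical sensed levels (dBm) on four channels.
sensed = {1: -62.0, 6: -88.5, 11: -75.0, 36: -91.0}
print(pick_clear_channel(sensed, max_noise_dbm=-80.0))  # 36
print(pick_clear_channel(sensed, max_noise_dbm=-95.0))  # None
```

Returning None instead of the least-bad busy channel is one possible design choice: the device waits rather than transmitting into a channel that already exceeds its tolerance.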

Both the complexity of the interference task and the remarkable ability of technology (rather than regulation) to respond to it are most clearly demonstrated by the recent success of unlicensed operations. According to the Consumer Electronics Association, a wide variety of unlicensed devices is already in common use, including garage and car door openers, baby monitors, family radios, wireless headphones, and millions of wireless Internet access devices using Wi-Fi technologies. Despite the sheer volume of these devices and their disparate uses, manufacturers have developed technology that allows receivers to sift through the noise to find the desired signal.

Interference-The Solution

The Interference Protection Working Group of the FCC's Spectrum Policy Task Force recommended that the FCC consider using the interference temperature metric as a means of quantifying and managing interference. As introduced in the Working Group's report, interference temperature is a measure of the RF power available at a receiving antenna to be delivered to a receiver, that is, power generated by other emitters and noise sources. More specifically, it is the temperature equivalent of the RF power available at a receiving antenna per unit bandwidth, measured in kelvins (K). As conceptualized by the Working Group, the terms interference temperature and antenna temperature are synonymous; interference temperature is simply the more descriptive term for interference management.

Interference temperature can be calculated as the power received by an antenna (watts) divided by the product of the associated RF bandwidth (hertz) and Boltzmann's constant (1.3807 × 10^-23 watt-seconds per kelvin). Alternatively, it can be calculated as the power flux density available at a receiving antenna (watts per square meter) multiplied by the effective capture area of the antenna (square meters), with this quantity divided by the product of the associated RF bandwidth (hertz) and Boltzmann's constant. An interference temperature density could also be defined as the interference temperature per unit area, expressed in kelvins per square meter and calculated as the interference temperature divided by the effective capture area of the receiving antenna (which is determined by the antenna gain and the received frequency). Interference temperature density could be measured for particular frequencies using a reference antenna with known gain. Thereafter, it could be treated as a signal propagation variable independent of receiving antenna characteristics.
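Written as formulas, with k for Boltzmann's constant, B for bandwidth, P for received power, S for power flux density, and A_e for effective capture area, the definitions above are T_I = P/(kB), T_I = S·A_e/(kB), and a density of T_I/A_e = S/(kB), which is why the density no longer depends on the receiving antenna. The sketch below evaluates these in Python. It additionally assumes the standard relation A_e = Gλ²/(4π) for an antenna of linear gain G at wavelength λ, which the text alludes to but does not state; the function names and example values are illustrative only.

```python
import math

BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann's constant (exact SI value), W*s/K
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def interference_temperature(power_w: float, bandwidth_hz: float) -> float:
    """T_I = P / (k * B), in kelvins."""
    return power_w / (BOLTZMANN_J_PER_K * bandwidth_hz)


def effective_area(gain_linear: float, freq_hz: float) -> float:
    """Assumed relation A_e = G * lambda^2 / (4 * pi), in square meters."""
    wavelength_m = SPEED_OF_LIGHT_M_PER_S / freq_hz
    return gain_linear * wavelength_m ** 2 / (4 * math.pi)


def interference_temperature_from_pfd(pfd_w_per_m2: float, area_m2: float,
                                      bandwidth_hz: float) -> float:
    """T_I = (S * A_e) / (k * B), in kelvins."""
    return pfd_w_per_m2 * area_m2 / (BOLTZMANN_J_PER_K * bandwidth_hz)


def interference_temperature_density(t_i_k: float, area_m2: float) -> float:
    """T_I / A_e, in kelvins per square meter."""
    return t_i_k / area_m2


# Illustrative numbers: 1e-14 W received in a 1 MHz channel.
print(round(interference_temperature(1e-14, 1e6), 1))  # 724.3
```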

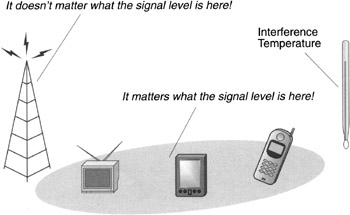

As illustrated in Figure 9-1, interference temperature measurements could be taken at receiver locations throughout the service areas of protected communications systems, thus estimating the real-time conditions of the RF environment.

Figure 9-1: Interference temperature Source- FCC

Like other representations of radio signals, instantaneous values of interference temperature vary with time and, thus, need to be treated statistically. The Working Group envisions that interference thermometers could continuously monitor particular frequency bands, measure and record interference temperature values, and compute the appropriate aggregate value(s). These real-time values could govern the operation of nearby RF emitters. Measurement devices could be designed with the option to include or exclude the on-channel energy contributions of particular signals with known characteristics such as the emissions of users in geographic areas and bands where spectrum is assigned to licensees for exclusive use.
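One way to picture an "interference thermometer" is as a rolling window of samples reduced to a single statistic. The sketch below is an assumption-laden illustration: the class name, the window length, and the choice of a high percentile as the aggregate are hypothetical, since the text does not say which statistic the Working Group would use. Known signals are excluded by subtracting an estimate of their contribution, which presumes the device can attribute that energy.

```python
from collections import deque
from statistics import quantiles


class InterferenceThermometer:
    """Hypothetical monitor for one band: keeps the most recent `window`
    interference-temperature samples and reports a percentile as the aggregate."""

    def __init__(self, window: int = 1000, percentile: int = 95) -> None:
        if not 1 <= percentile <= 99:
            raise ValueError("percentile must be between 1 and 99")
        self.samples: deque = deque(maxlen=window)  # oldest samples drop off automatically
        self.percentile = percentile

    def record(self, total_k: float, excluded_k: float = 0.0) -> None:
        """Store one reading. excluded_k is the estimated contribution of known,
        authorized signals (e.g. the licensee's own emissions) to leave out."""
        self.samples.append(max(total_k - excluded_k, 0.0))

    def aggregate(self) -> float:
        """Percentile of the samples currently in the window, in kelvins."""
        if len(self.samples) < 2:
            raise ValueError("need at least two samples")
        # quantiles(n=100) returns the 99 cut points for the 1st..99th percentiles.
        return quantiles(self.samples, n=100, method="inclusive")[self.percentile - 1]


# Usage with a five-sample window: the first reading is pushed out by later ones.
thermo = InterferenceThermometer(window=5, percentile=95)
for reading in [9999.0, 100.0, 200.0, 300.0, 400.0, 500.0]:
    thermo.record(reading)
print(thermo.aggregate())  # 480.0
```

A high percentile rather than the mean is used here so that brief but frequent bursts of interference still register; an actual rule would have to specify the statistic, the window, and the measurement bandwidth.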

The FCC could use the interference temperature metric to set the maximum acceptable levels of interference, thus establishing a worst-case environment in which a receiver would operate. Interference temperature thresholds could therefore be used, where appropriate, to define interference protection rights.

The time has come to consider an entirely new paradigm for interference protection. A more forward-looking approach requires a clear, quantitative definition of acceptable interference for both license holders and the devices that can cause interference. Transmitters would be required to ensure that the interference level (or interference temperature) is not exceeded. Receivers, in turn, would be required to tolerate interference up to that level.
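Combining this enforcement idea with the definition T_I = P/(kB) gives a simple headroom test: if the measured interference temperature is T_measured and the cap is T_limit, a new transmitter may add at most k·B·(T_limit - T_measured) watts at the protected receiver's antenna. The sketch assumes the transmitter already has an estimate of the power it would deliver at that antenna (from a propagation model or a measurement); that estimate, the function names, and the numbers are hypothetical.

```python
BOLTZMANN_J_PER_K = 1.380649e-23  # Boltzmann's constant, W*s/K


def allowed_additional_power_w(t_limit_k: float, t_measured_k: float,
                               bandwidth_hz: float) -> float:
    """Extra power (W) that may arrive at the protected receiver's antenna
    without pushing the interference temperature above t_limit_k:
        P_allowed = k * B * (T_limit - T_measured), floored at zero."""
    headroom_k = max(t_limit_k - t_measured_k, 0.0)
    return BOLTZMANN_J_PER_K * bandwidth_hz * headroom_k


def may_transmit(power_at_receiver_w: float, t_limit_k: float,
                 t_measured_k: float, bandwidth_hz: float) -> bool:
    """Hypothetical rule: transmit only if the estimated added power fits in the headroom."""
    return power_at_receiver_w <= allowed_additional_power_w(
        t_limit_k, t_measured_k, bandwidth_hz)


# Illustrative: cap of 1000 K, 724 K already measured, 1 MHz channel.
print(allowed_additional_power_w(1000.0, 724.0, 1e6))  # about 3.8e-15 W
print(may_transmit(1e-15, 1000.0, 724.0, 1e6))          # True
print(may_transmit(1e-14, 1000.0, 724.0, 1e6))          # False
```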

Rather than simply telling licensees that their transmitters cannot exceed a certain power, the industry would instead use receiver standards and new technologies to ensure that communication occurs without interference and that the spectrum resource is fully utilized. For example, services in rural areas might be able to use higher power levels because adjacent bands there are less congested, reducing the need for interference protection.[2]

From a simple physical standpoint, any transmission facility requires a transmitter, a transmission medium, and a receiver. Past spectrum policy has paid little attention to receiver characteristics, so a shift in focus is in order. The Working Group believes that receiver reception factors, including sensitivity, selectivity, and interference tolerance, need to play a prominent role in spectrum policy.[3]

Spectrum Scarcity-The Problem

Much of the Commission's spectrum policy has been driven by the assumption that there is never enough spectrum for everyone who wants it. Under this view, spectrum is so scarce that government, rather than market forces, must determine who gets to use it and for what. The scarcity argument shaped the Supreme Court's 1969 Red Lion decision (Red Lion Broadcasting Co. v. FCC), which gave the Commission broad discretion to regulate broadcast media on the premise that spectrum is a unique and scarce resource. Indeed, most assumptions underlying the current spectrum model derive from traditional radio broadcasting and have nothing to do with wireless broadband Internet applications.

The Commission recently conducted a series of tests to assess actual spectrum congestion in certain locales. These tests, conducted by the Commission's Enforcement Bureau in cooperation with the Task Force, measured spectrum use in five major U.S. cities. The results showed that although some bands were heavily used, others were either unused or used only part of the time. It appeared that these gaps in frequency or time could be used to provide significant increases in communication capacity through new technologies, without affecting current users. These results call into question the traditional assumptions about congestion: it appears that most spectrum is not in use most of the time.
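The text does not describe how the Enforcement Bureau scored occupancy. A common, simple approach is to count how often the measured power in a band exceeds a detection threshold across repeated sweeps, giving a duty cycle per band; the sketch below does exactly that. The band labels, threshold, and sample values are invented for illustration and are not the FCC's measurements.

```python
from typing import Dict, List


def band_occupancy(sweeps: Dict[str, List[float]],
                   threshold_dbm: float) -> Dict[str, float]:
    """Fraction of sweep samples in each band whose measured power exceeds
    threshold_dbm (a simple duty-cycle estimate). Empty bands are skipped."""
    return {
        band: sum(p > threshold_dbm for p in samples) / len(samples)
        for band, samples in sweeps.items()
        if samples
    }


# Invented readings (dBm) from four sweeps of three bands.
sweeps = {
    "band A": [-60.0, -58.0, -61.0, -59.0],    # busy in every sweep
    "band B": [-100.0, -62.0, -101.0, -99.0],  # busy in one sweep of four
    "band C": [-102.0, -103.0, -101.0, -104.0]  # never above the threshold
}
print(band_occupancy(sweeps, threshold_dbm=-90.0))
# {'band A': 1.0, 'band B': 0.25, 'band C': 0.0}
```

The threshold choice drives the result: set too low, thermal noise counts as use; set too high, weak but legitimate signals are missed.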

Today's digital migration means that more data can be transmitted in less bandwidth. Not only is less bandwidth used, but innovative technologies like software-defined radio and adaptive transmitters can also bring additional spectrum into the pool of spectrum available for use.

Spectrum Scarcity-The Solution

While analyzing the current use of spectrum, the Task Force took a unique approach, looking for the first time at the entire spectrum rather than one band at a time. This review produced a major insight: a substantial amount of white space is not being used by anybody. The ramifications of this insight are significant. It suggests that although spectrum scarcity is a problem in some bands some of the time, spectrum access is the larger problem: how to reach and use the many portions of the spectrum that are either underutilized or not used at all.

One way the Commission can take advantage of this white space is by facilitating access in the time dimension. Since the beginning of spectrum policy, the government has parceled out this resource in frequency and space. The FCC historically permitted use in a particular band over a particular geographic region, often with an expectation of perpetual use. The FCC should also look at time as an additional dimension for spectrum policy. How well could society use this resource if FCC policies fostered access in frequency, space, and time?
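The question posed above can be made concrete by treating each authorization as a box in frequency, space, and time: two users interfere only if their boxes overlap in all three dimensions at once. The sketch below is a deliberately simplified model under that assumption; the `Grant` type, its rectangular service areas, and the example values are hypothetical, and a real coordination rule would also need guard bands and propagation margins rather than exact edge-to-edge packing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Grant:
    """Hypothetical authorization: a frequency range, a rectangular area,
    and a time window (all half-open intervals [lo, hi))."""
    f_lo_mhz: float
    f_hi_mhz: float
    x_lo_km: float
    x_hi_km: float
    y_lo_km: float
    y_hi_km: float
    t_start_h: float
    t_end_h: float


def _overlaps(a_lo: float, a_hi: float, b_lo: float, b_hi: float) -> bool:
    # Half-open intervals that merely touch (a_hi == b_lo) do not overlap.
    return a_lo < b_hi and b_lo < a_hi


def conflicts(a: Grant, b: Grant) -> bool:
    """Two grants collide only if they overlap in frequency AND space AND time."""
    return (_overlaps(a.f_lo_mhz, a.f_hi_mhz, b.f_lo_mhz, b.f_hi_mhz)
            and _overlaps(a.x_lo_km, a.x_hi_km, b.x_lo_km, b.x_hi_km)
            and _overlaps(a.y_lo_km, a.y_hi_km, b.y_lo_km, b.y_hi_km)
            and _overlaps(a.t_start_h, a.t_end_h, b.t_start_h, b.t_end_h))


# Same band, same area: a daytime user and an evening user can coexist.
day = Grant(450.0, 451.0, 0, 10, 0, 10, 8, 18)
evening = Grant(450.0, 451.0, 0, 10, 0, 10, 18, 24)
print(conflicts(day, evening))  # False: they differ only in time
```

Under a frequency-and-space-only model, these two grants would be mutually exclusive; adding time as a third axis is what lets both exist.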

Technology has facilitated, and FCC policy now hopefully will facilitate, access to spectrum in the time dimension, leading to more efficient use of the spectrum resource. For example, a software-defined radio may allow licensees to dynamically rent certain spectrum bands when they are not in use by other licensees. Perhaps a mobile wireless service provider with software-defined phones will lease a local business's channels during the hours the business is closed. Similarly, sensing and adaptive devices may be able to find open spectrum and use it until the licensee needs those rights for its own use. In a commercial context, secondary markets can provide a mechanism for licensees to create and provide opportunities for new services in distinct slices of time. By adding another meaningful dimension, spectrum policy can move closer to facilitating the consistent availability of spectrum and further diminish the scarcity rationale for intrusive government action.
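The leasing example above combines two tests: a calendar test (is this within the hours the licensee has made available?) and a sensing test (is the licensee actually using the channel right now?). The sketch below encodes both. The function names, the closing hours, and the rule that detecting the licensee always forces the secondary user off are assumptions for illustration, not an FCC rule.

```python
from datetime import time


def in_lease_window(now: time, start: time, end: time) -> bool:
    """True if `now` falls in the half-open window [start, end).
    A window with start > end wraps past midnight; start == end is empty."""
    if start <= end:
        return start <= now < end
    return now >= start or now < end


def secondary_may_transmit(now: time, start: time, end: time,
                           licensee_detected: bool) -> bool:
    """Hypothetical rule: transmit only inside the leased hours AND only while
    sensing detects no activity from the licensee; otherwise yield the channel."""
    return in_lease_window(now, start, end) and not licensee_detected


# A business closed from 18:00 to 08:00 leases its channel for those hours.
CLOSED_FROM, CLOSED_UNTIL = time(18, 0), time(8, 0)
print(secondary_may_transmit(time(23, 30), CLOSED_FROM, CLOSED_UNTIL, False))  # True
print(secondary_may_transmit(time(23, 30), CLOSED_FROM, CLOSED_UNTIL, True))   # False
print(secondary_may_transmit(time(12, 0), CLOSED_FROM, CLOSED_UNTIL, False))   # False
```

Keeping the sensing check even inside the leased hours reflects the text's point that the licensee retains its rights and can reclaim the channel whenever it needs it.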

Government Command and Control-The Problem

The theory back in the 1930s was that only the government could be trusted to manage this scarce resource and ensure that no one got too much of it. Unfortunately, spectrum policy is still predominantly a command and control process that requires government officials-instead of spectrum users-to determine the best use for spectrum and make value judgments about proposed and often overhyped uses and technologies. It is an entirely reactive and too easily politicized process.

In the last 20 years, two alternative models to command and control have developed, and both have flexibility at their core. The first, the exclusive-use or quasi-property-rights model, provides exclusive, licensed rights to flexible-use frequencies, subject only to limitations on harmful interference; these rights are freely transferable. The second, the commons or open-access model, allows users to share frequencies on an unlicensed basis, with usage rights governed by technical standards but no right to protection from interference. The Commission has employed both models with significant success. Licensees in mobile wireless services have enjoyed quasi-property-right interests in their licenses and transformed the communications landscape as a result. In contrast, the unlicensed bands employ a commons model and have enjoyed tremendous success as hotbeds of innovation.

Government Command and Control-The Solution

Historically, the Commission has limited licensees' flexibility through command-and-control restrictions on which services licensees could provide and who could provide them. Any spectrum users who wanted to change the power of their transmitter, the nature of their service, or the size of an antenna had to come to the Commission to ask for permission, wait for a decision, and only then, if relief was granted, modify the service. Today's marketplace demands that the FCC provide license holders with greater flexibility to respond to consumer desires, market realities, and national needs without first having to ask for the FCC's permission. License holders should be granted the maximum flexibility to use, or allow others to use, the spectrum, within technical constraints, to provide any services demanded by the public. With this flexibility, service providers can be expected to move spectrum quickly to its highest and best use.

Public Interest-The Problem

The fourth and final element of traditional spectrum policy is the public interest standard. The phrase "public interest, convenience, or necessity" was part of the Radio Act of 1927 and was likely borrowed from earlier utility regulation statutes. The standard was largely a response to the interference and scarcity problems that arose because the Radio Act of 1912 contained no such discretionary standard. The phrase became a standard by which to choose among competing applicants for a scarce resource and a tool for ensuring that interference did not occur. Under the command and control model, the public interest often decided which companies or government entities would have access to the spectrum resource. At that time, spectrum was not primarily a consumer resource; it was accessed by a relatively select few. However, Congress wisely did not create a static public interest standard for spectrum allocation and management.

Public Interest-The Solution

The FCC should develop policies that avoid interference rules that act as barriers to entry, that assume a particular proponent's business model or technology, or that take the place of marketplace or technical solutions. Such a policy must embody what has benefited the public in every other area of consumer goods and services: choice through competition, and limited but necessary government intervention in the marketplace to protect such interests as access for people with disabilities, public health, safety, and welfare.

[2] Michael Powell, "Broadband Migration - New Directions in Wireless Policy," speech to the Silicon Flatirons Conference, University of Colorado, Boulder, Colorado, October 30, 2002.

[3] Federal Communications Commission Spectrum Policy Task Force, "Report of the Interference Protection Working Group," November 15, 2002, p. 25.
