Chapter 16: Working with Tabular Data

I begin this chapter with an abstract discussion of the SQL Server table, its attributes and elements, and then move on to dissecting the SELECT statement, before hitting view and other query facilities. The coverage of the SQL Server SELECT statement is exhaustive here, covering the new features, such as support for XML data. Our discussion of the SELECT will first focus on fundamentals and the structure of its syntax, before delving into techniques and strategies, with advanced features like its COMPUTE BY clause, ROLLUPs, and CUBEs,

I also discuss the concept of data conservation in a client/server relational database management system (RDBMS). Notice again the phrase data conservation. I use this term to stress “wise use of data” and further elaborate on the concepts I discussed in Chapter 12.

“Conservation” is an interesting term. A discipline of ecology, it means the wise use of a resource and not “protectionism” or “exploitation.” I would like to apply this concept of wise use to data and retrieval methods appropriate for the fat server model-the primary paradigm of data management using SQL Server (and other DBMS server systems).

But before we can explore this further and test the validity of the concepts, let’s discuss the basics.

The SQL Server Table

Let me start with some recap: By now you certainly know that the table in SQL Server is the primary unit of storage. SQL Server tables are created and maintained in databases, and we described how both databases and tables get created back in Chapter 6. The database, thus, is nothing more than a container, albeit a container with numerous features that allow us to manage the data in the table and control access to it.

| Note | A database is a SQL Server object; it has no reference in relational theory, or in the SQL ANSI standard. |

A SQL Server table is made up of columns and rows. Rows, known as tuples in a table, represent the complete “record” of data within the table. The relational model, however, allows the record to spread over several tables, but taken collectively all the interrelated rows of data combine to make the record. Often, it makes sense for us to break large tables into many smaller ones, to infuse some modularity in the structure of our data, which makes it easier to work with. For example, in a simple order-entry and order-management database, we would typically create a table for the customer’s details and a table for the customer orders and so on.

Out of a collection of customers and each customer’s orders, we are able to manipulate and farm our data (conservation again) to produce documents representing orders, statements, invoices, picklists, packing labels, mailing labels, and so forth. And with analysis services and functions, we can “milk” the data for information to assist us in business decision-making. For each document or assemblage of data, however, we still need only work two or three primary tables of operational data, at the minimum. We would create a table for customer details (company name, telephone number, contact, and so on), one for shipping information (physical addresses as opposed to billing addresses), and one for order details.

It is quite possible and often desirable to add columns to the general customer details table. My rules for this are to first investigate the attributes and the domains of the data you are storing. If new data you are adding to the table only represent one column, it would not make any sense to create a separate table for it. For example, if your business rules require payment only through credit cards and you need no more than a few columns to store credit card numbers, expiration, and name on the card, by all means attach a few more columns to the customer details table.

SQL Server tables can hold 1,024 columns. Your model might work well with a few large tables with many columns, and it might work better (or worse) if the model called for many smaller tables. For example, if the business decided to accept several forms of payments, from cash to credit (charge and account), then it would make sense to provide tables that specialize in the storage of this information. For the most part your database lets you know when its tables should be split up.

| Note | The tables, data, and query strategies discussed in this chapter revolve around operational data (OLTP) and not analytical data (OLAP), the latter being beyond the scope of this book. |

The more tables you have, the more complex the model. There is also more of a chance of redundant data occurring in the database. Whether this is a poor, bad, or unconscionable thing depends on which relational guru you worship. We have guidelines for this dilemma, thanks to Codd, known as normalization. Called the Rules of Normalization, I prefer to see them as guidelines; others treat them like the Talmud. In fact, the nature of the RDBMS is such that unless you are flexible and adopt relational theory as a guideline, as opposed to gospel, you are destined to founder. SQL Server solutions may in fact contain undernormalized data, overnormalized data, and what at times may even appear to be denormalized data. It all depends on your application and what you are trying to achieve. A customer order-entry system, an accounts payable/accounts receivable system, and a database supporting the switching matrix of an automatic call distributor may bear no resemblance to one another.

However, the SQL Server database model requires us to be data conservationists. We need to thus strive to prevent, as far as possible, the repetition of data in our database. Otherwise, we will have to deal with unnecessary bottlenecks, slow queries, misleading query results, and so forth. On the other hand, we should not be so limiting that the database becomes impossible to access easily.

Table Concepts

It is often difficult to change our concept of a table, the abstract impression of it, as being something that looks like a spreadsheet. This is obviously due in large part to the GUI presentation of data in gridlike structures. For ISAM databases, data is almost always presented to the user in grids. What’s worse, users are allowed to edit and update data within the grid, which doesn’t sit well with SQL Server solutions (and we’ll deal with why shortly).

While it is permissible in some cases to let this go with users, often the so-called data-bound grid does more harm than good for the database designers and modeling people. In fact, I have even met people who cannot see beyond the grid, and so severe is their habit, they prefer to store their data in spreadsheets and never go near relational databases. When you cannot see your data for the cells, you are severely hampering your ability to model and build effective SQL Server solutions that query well. In other words, you are unable to see the entire record in the database, only seeing part of it in a cell.

For example, many years ago when I made the transition from Paradox and Access databases to SQL Server, one of the first habits I found hard to kick was the autoincrement feature, and I believe this is true for a lot of Access users still today. While autoincrement, which added a new number automatically to each new “row” of data, helped ensure unique records, it also perpetuated the fixation on cells, which had to exist in a precise range where no row numbers could be missing. The identity property of a column in a SQL Server table offers the same functionality; you should use it with care and avoid fixation on an exact sequence of numbers that remind you of a spreadsheet.

In the late 1990s, I experimented with several of my database contracts, dropping grids from the entire client application and only working with hierarchical data trees, lists, and a variety of string objects. I refused (and still do as far as possible) to “bind” a grid to a SQL Server table. Not only did I find it far easier to create super-thin clients, but also I found my users beginning to work with data far more sophisticatedly. I had gotten them to see beyond the grid, to the data on a much broader scale, as part of a system with depth and perspective. Today, of course, the disconnected data set relegates much of my past pet peeves to the dust of time.

While it is impossible to dismiss columns and column names as being the fabric of SQL Server tables, you should strive to view your data in terms of the domains that make up a complete record in the database. Each row or tuple is made up of one or more (seldom one) domains, and for each of the record’s domains you need to provide a value. In some cases, you might not know the value (the NULL issue again), but we will delve into that little problem later. (Domains are discussed in depth in Chapter 12.)

A customer record, for example, is made up of several domains: first names, last names, street numbers, street addresses, cities, postal codes, and so on. If one row’s collection of domain values is repeated in another row in the same table, we violate the rules of uniqueness. And if the row is repeated in the same table, or another table, but is unique only by virtue of a row identifier, or an identity value, then we violate rules of data redundancy (and our wise use mission goes out the window).

We strive to ensure that no two records can be identical (at least one domain value in the record makes the record unique); there are several means to ensure this. We might get away with a “clone” of a record by giving the record an instance or column identifier. You could create a record number, a customer number, a part number, and so on.

In other words, our records are nothing more than collections of domains, or, in OO terms, objects with collections of properties. In order for us to search for single records or a group of records in a table, the table needs to be ordered according to a sequence of domains. In other words, we would say that a customer number would be domain 1, called CustomerNumbers, and that CustomerName could be called domain 2. It does not matter where in the table the domains are positioned, 1 or 76, or what they are labeled, as long as each record maintains the domain order-and the data type each domain stores. In other words, the city domain (in the fourth column) for record A cannot also be the state domain (also column 4), because that would make our data impossible and nonsensical to work with.

| Note | In pure object systems, this situation can be very different; in some cases, the domains may not be stored in columns. Similar to the contents of the living cell, the records as objects do not maintain properties in any order, and the property needs only to be referenced in some way. |

Thus our queries are built according to the following language: “Find me records in which the customer’s city domain is Miami, or the customer’s order number domain contains the values of ‘12–08–45–A1.’” By concentrating on the value of the data in the domain as opposed to a collection of cells, you’ll have a better feel for your data when it comes to modeling and building queries.

Data-bound controls in the client tier create many problems for SQL Server solutions. To begin with, they have the habit of establishing connections to the server and maintaining them with no rhythm or reason. But mostly the controls tend to perpetuate the cell habit, encouraging you to fixate on the cells as opposed to the values. If you are moving from Access (with MDB files) to SQL Server, you should try to kick the data-bound control habit. In today’s highly distributed data processing environment, the buzz word is “scalability,” and nothing can trash scalability more than binding client controls to database objects.

By referencing columns according to their position in a table (thinking left to right), we can ensure that SQL Server places our values in the correct domains. Domain integrity and type constraints can of course be used to ensure that correct values get stored in the domains. But knowing the position of a column is not essential (and is outdated) because we reference the column by name, no matter where it is in the table. In other words, our insert languages would sound like “place this value into the column for the cities domain, whose record number is X.”

In this regard, it is quite clear that the table is a server-side object, not something that can be instantiated or re-created in the client. In other words, it exists only within a database schema and has no foundation anywhere other than in the database.

Perhaps one of the most dangerous operators in the SQL language is the * (star, or asterisk), which means all or any. So if we say “SELECT * from the CUSTOMERS table,” we are asking SQL Server to send us all the rows and all domain values (every column). This really does wonders for the network and the health of our client and user. But what is worse is that it leads to the notion that the table is being reproduced outside the database, and this is a dangerous perception.

When we query SQL Server, no matter what object the data is drawn from in the database (table, view, table variable, table expression, cursor, and so on), we are served with a replica of the data in the database, which we display at the client in grids, trees, lists, or individual text fields. Our queries thus need to be highly efficient so that only the most valid data the client needs is sent across the network, not as a table, but in tabulated form nonetheless (represented by tabular data streams or XML), so that the client can present the data in the correct fields or containers. Can you imagine what it would be like if we sat down for dinner in a crowded restaurant and asked the kitchen to send us every meal, just because we were not sure what we felt like eating?

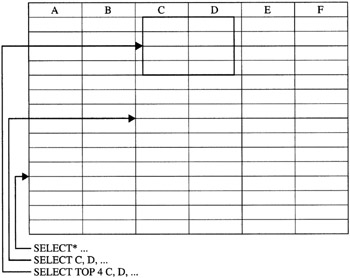

The query at the worst should return no more than twenty rows if the client does not know the exact row it needs, but the query should always strive to send as few rows as possible. Figure 16–1 illustrates what I consider a safety zone for sending a collection of rows to the client.

Figure 16–1: Narrowing down the SELECT to conserve network, server, and client resources

Figure 16–1 demonstrates what I earlier called data ecology or data farming, which means querying only for just enough records to satisfy the client and balance the number of round trips to the server. The best scenario, as indicated in the figure, is one row, and possibly even one value. The less known about a value being searched on, the more rows get returned to the client. And conversely, the more you know about the row, the fewer the number of rows that need to be returned. If you know the exact value that makes the row unique, SQL Server will send you that row and no other.

The row or rows of data returned by SQL server is known as a result set. You can call it anything you like in the client development or external environment (recordset, resultset, rowset, dataset and so on), but from SQL Server it is the result of a query, no matter how simple or how complex. Your queries should also be written in such a way that the result returned contains not only as few records as possible, but only the exact columns needed. If you need to display all the values of a row, so be it.

Sometimes you need just a domain value at the client for some reason that the client does not need to know about. Understand, however, that you are not being much of a conservationist if you write software that updates 20 fields in a record when only one needs to be updated. In other words, why send packets containing 20 values back to SQL Server when you need only send a packet to update one value. Not only do you consume more network bandwidth, but you also consume more processing bandwidth on the server.

If you ask SQL Server for the customer 100 row so that you can update the postal code, does it not make more sense to only receive the customer number, optionally the name to keep the software user sure he or she has the right record, and the postal code? You can then transmit only this field back to the server instead of directly inserting numerous values into the table using an inline SQL statement, or better still just pass a single value to a stored procedure’s parameter. You could pass the values to a stored procedure to determine the most efficient means of updating the code field, leaving everything else intact, without having to transmit the search logic to find the record. We’ll get back to this later.

Let’s recap before we move on:

-

A table is a unit of storage, maintained in a SQL Server database.

-

A table has no reference outside the database: The table exists as a database object; it cannot be transferred out of SQL Server; only its data can, but as a copy in a result set.

-

The data is transmitted (copied) from a table as a tabulated datagram, which is called a result set. The result set is transmitted to the client; it is formatted by the TDS protocol or within an XML text stream.

-

Client components (such as OLE DB and ADO.NET) know how to read the tabulated data from the protocol stack and how to present it in the client.

-

Data that is not changed is discarded; data that must be changed is returned to the server as an update.

EAN: 2147483647

Pages: 239