Overview


10g AS mid-tier servers are designed to be fast and efficient at receiving, processing, and returning data for client requests. However, even the most powerful servers are sometimes not enough when they are hit with large numbers of simultaneous connections. There comes a point when even the largest machine can't process all the requests for a popular website. Adding more mid-tier machines to form a cluster is a viable option, but it's expensive and more difficult to maintain. In cases like this, the adage "work smarter, not harder" applies, and Oracle Web Cache (WC) is a way of working smarter.

WC is a content caching server that receives incoming client requests before they reach OHS and attempts to provide the content each request seeks (HTML, XML, documents, images, and so on). Serving that content directly is faster than forcing OHS to receive and process the request. It also reduces the processing load on the back-end OHS, application-server components, database, and network. Essentially, WC acts as a "first stop" for a client request; if it cannot fulfill the request, the request is passed on to OHS as normal.

Architecture

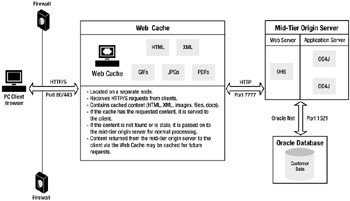

The architecture of a simple WC deployment is shown in Figure 19-1 and further explained in the following sections.

Figure 19-1: Web Cache architecture

WC exists in front of the OHS server as a caching reverse proxy server. Instead of accessing the internal OHS directly, outside clients send their URL requests to the WC server. This is transparent to users, who think their URL requests are going to the same web server as normal; they don't realize that they're accessing a proxy server. The WC server can be on the same node as the mid-tier instance, on a different node, or even on a different operating system platform than the mid-tier instance.

The WC server contains stored content in the form of HTML, XML, documents such as PDFs, and images. This content can be in the form of complete pages or partial pages. It can be static or even dynamically generated content, as you'll see later in the chapter.

If the content needed to meet the user request is found in the cache, WC checks whether that content is old (expired) or invalid, as determined by the invalidation and cacheability rules set within WC. By default, WC considers invalid content unusable and will not serve it to the client. If, however, the content is found and is valid, it's served to the client without ever being passed to the OHS server. This is referred to as a cache hit. The client connection remains open as long as the WC KeepAlive directive specifies, but after that the connection is closed because the initial request has been fulfilled.
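
The hit-or-miss decision just described can be sketched in a few lines of Python. This is a simplified model, not Oracle's implementation: the `CacheEntry` and `ToyWebCache` names, the absolute `expires_at` timestamp, and the `invalidated` flag are our own assumptions chosen to mirror the prose. The two counters stand in for the hit and miss figures WC records in its performance statistics.

```python
import time
from dataclasses import dataclass, field


@dataclass
class CacheEntry:
    body: bytes
    expires_at: float          # assumed: absolute time after which the entry is stale
    invalidated: bool = False  # assumed: set when the entry is explicitly invalidated


@dataclass
class ToyWebCache:
    entries: dict = field(default_factory=dict)  # url -> CacheEntry
    hits: int = 0
    misses: int = 0

    def lookup(self, url, now=None):
        """Return the cached body on a cache hit, or None on a cache miss."""
        now = time.time() if now is None else now
        entry = self.entries.get(url)
        # A miss is: not cached at all, explicitly invalidated, or expired.
        if entry is None or entry.invalidated or now >= entry.expires_at:
            self.misses += 1
            return None  # caller must forward the request to an origin server
        self.hits += 1
        return entry.body  # served without touching OHS
```

In this model, invalidation simply flips a flag so the entry is treated as a miss on the next request; the product's real invalidation mechanisms are covered in the "Cacheability Rules and Invalidation" section.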

In the event the requested content isn't found, or it's marked stale or invalid, WC cannot serve the request. This is considered a cache miss, and the request is passed to OHS for processing. Oracle refers to the OHS in this case as the origin server, which is actually the mid-tier OHS and application-server components that would normally receive the request in the absence of WC.

Once a request has been determined to be a cache miss, WC looks for an origin server to pass it to. WC can be configured to send requests to one or more origin servers located in a mid-tier cluster. Based on the relative load of each member of the cluster, the settings within WC, and whether any session binding has been established, WC routes the request to one origin server. There, the request is processed as normal. Ultimately, a response is generated for the client request and sent back to the client through the WC server.
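
Here is a minimal sketch of the routing decision on a cache miss, assuming that "relative load" can be approximated by active requests divided by a per-server capacity. `OriginServer`, `choose_origin`, `capacity`, and the `bind_sessions` switch are hypothetical names for illustration, not WC configuration settings. Servers marked as down are skipped, a simplified stand-in for failover.

```python
from dataclasses import dataclass


@dataclass
class OriginServer:
    name: str
    active_requests: int = 0  # assumed proxy for the server's current load
    capacity: int = 100       # hypothetical relative capacity of this server
    up: bool = True           # False once the server is detected as failed


def choose_origin(servers, bindings, session_id=None, bind_sessions=False):
    """Pick an origin server for a cache miss.

    1. If session binding is enabled and the session's bound server is alive,
       reuse it (needed for stateful sessions).
    2. Otherwise choose the live server with the lowest relative load.
    """
    live = [s for s in servers if s.up]
    if not live:
        raise RuntimeError("no origin server is available")
    if bind_sessions and session_id in bindings:
        for s in live:
            if s.name == bindings[session_id]:
                return s
        # Bound server is down: fall through and rebind to a survivor.
    chosen = min(live, key=lambda s: s.active_requests / s.capacity)
    if bind_sessions and session_id is not None:
        bindings[session_id] = chosen.name
    return chosen
```

Binding is off by default in the sketch, matching the text's description that WC simply picks an origin server unless you bind sessions for stateful applications.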

On the way back to the client, WC examines the content. If it's determined to be cacheable, it's stored in WC for future requests.

| Note | DBAs managing 10g AS will find this caching concept very familiar. Oracle databases attempt to cache both data and SQL in memory areas (the database buffer cache and the shared SQL area). These areas are absolutely critical to good database performance, and WC is valuable for the same reason. |

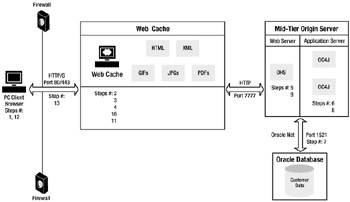

For a more detailed explanation of how WC works, we show you the steps involved in a cache miss in Figure 19-2.

Figure 19-2: A cache miss

The preceding diagram shows the following steps, which occur when the requested content isn't available in the cache:

- A URL request is made by a client. Instead of being routed to the OHS server, it's routed to the WC server.

- WC examines the URL to see what content it's requesting. The content can be static or dynamically generated. Furthermore, the resulting page doesn't need to be stored in the cache in its entirety: static components of a page can be cached while the dynamic components are generated for each request. This is covered in greater detail in the "Cacheability Rules and Invalidation" section later in this chapter.

- WC then searches its cached content for the requested content. If the content is found, WC checks whether it has been marked invalid or is too old (expired). In this case, the content isn't found in the cache. This is referred to as a cache miss, and the event is recorded in the performance statistics for the WC server.

- Because the requested content hasn't been cached yet, the request must be sent to an origin server for processing. WC checks its list of available origin servers, finds the one that is least busy, and routes the request to that mid-tier origin server. By default, WC simply picks an origin server for each request this way; however, you can bind a session to a specific origin server to support stateful sessions.

| Note | WC can also be used as a load-balancing tool. Normally a network load balancer such as BIG-IP from F5 Networks would be used for this. However, as you'll see in Chapter 21, "Configuring Clustering and Failover," WC can route requests to a pool of mid-tier origin servers in a cluster. |

- Processing on the mid-tier origin server is the same as without WC. The OHS web listener receives the request and routes it to the appropriate application server module; in this case, it uses mod_oc4j to route it to the OC4J webdev instance.

- The OC4J webdev instance processes the request as a Servlet (illustrating that WC can work with dynamic content).

- During processing, the Servlet calls an Oracle database via JDBC on port 1521. The database returns the data to the OC4J webdev instance.

- Processing completes in the OC4J webdev instance. A combination of data, images, and HTML is returned to the OHS web listener component.

- OHS returns the response from OC4J to the WC server.

- WC examines the response returned from OHS. Within WC, the administrator establishes rules about which content can be cached and which cannot. WC applies these rules and determines that the HTML and image files will be cached but the dynamic data content will not. The cacheable content is loaded into the WC memory cache so that it's available if requested again.

- WC returns the content to the client browser.

- At the client browser, some additional caching may occur independently of WC.

- The connection between WC and the client browser remains established for the duration of the KeepAlive parameter. This is because clients typically send multiple requests to a website as part of a "transaction." Even though HTTP is stateless, keeping the network connection open for a few seconds after a request is processed and returned improves performance, because it eliminates the overhead of establishing a new connection between WC and the client. The administrator sets the KeepAlive parameter, as you'll see later; the default is five seconds.
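
The effect of the KeepAlive window on connection setup can be illustrated with a short simulation. This sketch assumes the five-second default mentioned above and, for simplicity, treats each response as finishing at the instant its request arrives; `ClientConnection` and `connection_setups` are hypothetical names, not part of WC.

```python
from dataclasses import dataclass

KEEPALIVE_SECONDS = 5.0  # default stated in the text; the administrator can change it


@dataclass
class ClientConnection:
    last_activity: float  # time the previous response was returned

    def can_reuse(self, now, keepalive=KEEPALIVE_SECONDS):
        """True if a request arriving at `now` can reuse this open connection."""
        return now - self.last_activity <= keepalive


def connection_setups(request_times, keepalive=KEEPALIVE_SECONDS):
    """Count how many new connections one client needs for a series of requests."""
    setups = 0
    conn = None
    for t in sorted(request_times):
        if conn is None or not conn.can_reuse(t, keepalive):
            setups += 1  # connection was closed (or never opened): pay setup cost
        conn = ClientConnection(last_activity=t)
    return setups
```

For four requests one second apart, `connection_setups([0, 1, 2, 3])` returns 1 with the default window, while a half-second window (`keepalive=0.5`) forces a new connection for every request and returns 4.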

In the previous example, WC may seem to act as a web server that processes requests, but that's an inaccurate description. WC isn't really that "smart" and doesn't truly process a request the way OHS does. WC looks at a request, checks whether it has valid content for it, and returns that content if it does. Otherwise, it passes the request to OHS to do the real work. WC is advanced enough to support SSL, caching, and load balancing, but it shouldn't be considered a true web server. By design, its job and the job of OHS are different, as they should be.

Benefits

Deploying WC is optional, but it's beneficial because it improves response time for the client while reducing the load on the system. Specifically, it provides the following benefits:

- Content is delivered from one source. Rather than having to retrieve and assemble content from multiple sources (for example, the file system for HTML and images, OC4J to generate dynamic content, and the database for data), the content is available on one WC server. This provides faster presentation of content to the client.

- Content is retrieved from memory. Requested content is stored in WC memory rather than on disk. It's well known that serving a request from memory is much faster than serving it from disk. When WC reaches the maximum amount of memory allocated to it, less popular content is removed from the cache.

- Reduced network traffic on servers. One network hop is all that's needed to get the content from WC. Without WC, content must be retrieved from a web server, possibly a separate application server, and likely a database. Often this requires multiple network requests from an application server to the database and involves moving large amounts of data. This contributes to network congestion.

- Reduced download network traffic to the client. WC can store both compressed and noncompressed content (as can OHS). Sending compressed content reduces the amount of data transmitted to the client. The user benefits because the time to decompress the content in the browser is outweighed by the reduction in download time. This is especially beneficial over already slow network connections.

- Reduced processing time. With WC, content needs to be processed by the origin server only once, after which it can be cached (there's no way to preload content). This means that rather than performing the same redundant processing on the web server, application server, and database for a routine request, the content is already prepared and staged on the WC server for incoming requests.

- Improved scalability. Since the same content doesn't have to be regenerated for each request, the origin server can process a larger number of unique requests. Without WC, to support a larger number of concurrent users you would likely need multiple mid tiers in a cluster used in conjunction with a network load balancer. With WC, a single mid tier can support more users because it doesn't have to process the same redundant request again and again.

- Potentially a cheaper solution. Because WC servers help reduce the number of mid-tier origin servers needed, using WC can be a cheaper solution. Furthermore, since a WC server is essentially just a caching server, there isn't the same need to host it on a large node as there is for the mid tiers that perform processing. The idea is to use smaller WC servers in front of larger, more expensive mid tiers that do the "heavy lifting."

- Supports clustering mid tiers and failover. WC acts as a load balancer in addition to being a caching server. Therefore, it can be deployed in front of a mid-tier cluster to direct requests to whichever mid tier is least busy. Furthermore, if one mid tier fails, requests are routed to the surviving mid tiers. This is detailed in Chapter 21.
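
The text says only that less popular content is removed once WC uses all the memory allocated to it; it doesn't name the eviction policy. The sketch below uses least-recently-used (LRU) eviction as a stand-in for "less popular," which is an assumption, and counts memory as the total size of the cached bodies. `MemoryBoundedCache` and `max_bytes` are hypothetical names.

```python
from collections import OrderedDict


class MemoryBoundedCache:
    """Evicts least-recently-used entries once max_bytes is exceeded (LRU is assumed)."""

    def __init__(self, max_bytes):
        self.max_bytes = max_bytes
        self.used = 0
        self._items = OrderedDict()  # url -> body, least recently used first

    def get(self, url):
        body = self._items.get(url)
        if body is not None:
            self._items.move_to_end(url)  # mark as most recently used
        return body

    def put(self, url, body):
        if len(body) > self.max_bytes:
            return  # larger than the whole cache: don't cache it
        if url in self._items:
            self.used -= len(self._items.pop(url))  # replace the old copy
        self._items[url] = body
        self.used += len(body)
        # Evict from the least-recently-used end until we fit again.
        while self.used > self.max_bytes:
            _, evicted = self._items.popitem(last=False)
            self.used -= len(evicted)

    def __contains__(self, url):
        return url in self._items
```

With a 10-byte limit, caching `/a` and `/b` (4 bytes each), reading `/a`, and then caching `/c` evicts `/b`, because it is the entry that was used least recently.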

While the main benefit of WC is faster delivery of content to clients, the benefit that's often overlooked is system scalability. By implementing WC, you can reduce (not eliminate) some of the load on your mid tiers. A single mid tier that must process every individual client request (many of which are redundant across clients) will work only for small to medium-sized applications; truly large applications with many simultaneous users will eventually overwhelm it. You can scale a mid tier vertically only so far before even the largest machine is too small.

As we discussed previously, once your largest mid tier is maxed out, you can add more large mid tiers in a clustered configuration to distribute the load. In theory, you can keep adding mid tiers behind a load balancer until response times are adequate. The main problems are that this solution is expensive and more difficult to maintain, and there's also a performance overhead associated with traffic between clustered instances.

WC servers can themselves be clustered if needed. Using WC to reduce the number of redundant requests sent to the mid tiers enables your existing mid tiers to process more unique requests, freeing them to do the heavy processing associated with the application. Using WC also reduces network traffic on the systems behind the mid-tier servers and SQL processing within the database servers.
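
A back-of-the-envelope calculation shows why this matters. If clients send R requests per second and WC serves a fraction h of them from cache (the hit ratio), only R * (1 - h) requests per second reach the origin servers. The function names and the per-mid-tier capacity figure are illustrative assumptions, not measured values, and the model assumes every request costs the origin the same amount of work.

```python
import math


def origin_load(requests_per_sec, hit_ratio):
    """Requests per second that still reach the mid-tier origin servers."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return requests_per_sec * (1.0 - hit_ratio)


def midtiers_needed(requests_per_sec, hit_ratio, per_midtier_capacity):
    """Smallest number of origin servers that can absorb the remaining load."""
    load = origin_load(requests_per_sec, hit_ratio)
    return max(1, math.ceil(load / per_midtier_capacity))
```

For example, with 1,000 requests per second and hypothetical mid tiers that each handle 300, `midtiers_needed(1000, 0.0, 300)` returns 4, while an 80 percent hit ratio leaves about 200 requests per second and `midtiers_needed(1000, 0.8, 300)` returns 1.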

Drawbacks

So WC offers some important benefits, but it isn't necessary for every system. Some small and medium-sized systems simply don't generate enough requests to make WC necessary. The mid-tier origin server can often handle incoming requests just fine without caching. In cases like this, users may or may not notice a performance gain with WC.

Furthermore, not all applications will benefit greatly from it. WC is good for sites that receive a large number of requests for the same content. If the application is a typical Internet application, online store, or Oracle Portal where speed and presentation are key, then WC may be a good choice. On the other hand, if the application is processing intensive and the same content is seldom repeated, as with reporting systems, WC would likely yield minimal gains.
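
One rough way to judge whether an application repeats content often enough is to count repeated URLs in an existing access log. This is our own back-of-the-envelope heuristic, not an Oracle tool: it assumes you have already extracted the requested URLs from your OHS access log, and `repeat_ratio` is a hypothetical name.

```python
def repeat_ratio(urls):
    """Upper bound on the achievable hit ratio for a list of requested URLs.

    The first request for each distinct URL must be a miss; every later
    request for it is a potential hit. Expiration, invalidation, cache size,
    and non-cacheable content are ignored, so real hit ratios will be lower.
    """
    if not urls:
        return 0.0
    return 1.0 - len(set(urls)) / len(urls)
```

For a log of `/home`, `/home`, `/logo.gif`, `/home`, `/logo.gif`, the function returns 0.6, while a reporting log in which every URL is unique returns 0.0, the case where WC would yield minimal gains.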

Sometimes WC will simply be redundant because other content-caching products are already implemented. The Squid caching proxy server (www.squid-cache.org/) is one example. However, products like these aren't as integrated with 10g AS as Oracle WC, so you may still want to evaluate the additional benefits that WC could provide.

Consider your exact requirements before implementing WC, along with the following potential issues:

- Requires a dedicated WC node. WC is designed to be installed on a separate node from the mid tier. Even though this WC node can be a relatively small Windows server, not all organizations have an extra server just for WC. WC can be installed on the same server as the mid tier and would still provide some benefits, but not as many as on a dedicated server.

- Requires an extra Oracle license. Not all organizations are willing to pay the Oracle licensing costs associated with running 10g AS on an additional WC server. Be sure to check with your Oracle sales representative before installing 10g AS to see whether this applies to your organization.

- Adds an administration burden. WC is an additional 10g AS J2EE installation that must be configured, patched, and maintained. Although setting up WC is relatively simple, it's an additional task. Furthermore, after setup it must be maintained and monitored, and the cache contents must be administered.

- Requires developer support. Most administrators can't identify high-demand, cacheable web content nearly as well as the application developers can. WC comes with default cacheability rules for content that obviously should be cached, such as image files. However, for more advanced cacheability rules, you usually need application developers to determine what content to cache and for how long. Expect to work with the developers to identify the content to be cached, how long it can be cached, and when to invalidate it, and to write the rules that implement these decisions.
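
The decisions you work out with the developers (what to cache, for how long, and what never to cache) can be captured as an ordered rule list. The sketch below is not WC's rule syntax, which you configure within WC itself; the URL patterns, expiration times, first-match-wins ordering, and the `CacheRule` and `classify` names are all hypothetical examples.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class CacheRule:
    pattern: str                          # regex matched against the request URL
    cacheable: bool
    expire_seconds: Optional[int] = None  # how long a cached copy stays valid


# Hypothetical rules agreed with the developers; the first match wins.
RULES = [
    CacheRule(r"\.(gif|jpe?g|png|css|js)$", cacheable=True, expire_seconds=86400),
    CacheRule(r"^/catalog/", cacheable=True, expire_seconds=600),
    CacheRule(r"^/cart/", cacheable=False),
]


def classify(url, rules=RULES):
    """Return the first rule whose pattern matches the URL, or None (don't cache)."""
    for rule in rules:
        if re.search(rule.pattern, url):
            return rule
    return None
```

Ordering matters under first-match semantics: an image under `/cart/` would still be cached by the first rule, which is exactly the kind of case to review with the developers.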

We consider WC to be highly useful and sometimes mandatory in certain circumstances. However, we don't believe it should be automatically implemented on every new system without considering the issues mentioned previously.
