Hostnames and Interfaces


A Solaris network consists of a number of different hosts that are interconnected using a switch or a hub. Solaris networks connect through to each other by using routers, which can be dedicated hardware systems or Solaris systems that have more than one network interface. Each host on a Solaris network is identified by a unique hostname: these hostnames often reflect the function of the host in question. For example, a set of four web servers may have the hostnames www1, www2, www3, and www4, respectively.

Every host and network that is connected to the Internet uses the Internet Protocol (IP) to support higher-level protocols such as TCP and UDP. Every interface of every host on the Internet has a unique IP address, which is based on the network IP address block assigned to the local network. Networks are addressable by using an appropriate netmask, which corresponds to a Class A (255.0.0.0), Class B (255.255.0.0), or Class C (255.255.255.0) network.

Solaris supports multiple Ethernet interfaces, which can be installed on a single machine. Each interface is usually configured through a file like

/etc/hostname.hmeN

or, for older machines,

/etc/hostname.leN

where N is the interface number, and le and hme are interface types. Interface files contain a single hostname or IP address, with the primary network interface being designated with an interface number of zero. Thus, the primary interface of a machine called server would be defined by the file /etc/hostname.hme0, which might contain the hostname server or the IP address 203.17.64.28. A secondary network interface, connected to a different subnet, might be defined in the file /etc/hostname.hme1. In this case, the file might contain the IP address 10.17.65.28. This setup is commonly used in organizations that need to provide for a failure of the primary network interface, or to enable load balancing of server requests across multiple subnets (for example, for an intranet web server processing HTTP requests).

A system with a second network interface can either act as a router or as a multihomed host. Hostnames and IP addresses are locally administered through a naming service, which is usually the Domain Name Service (DNS) for companies connected to the Internet and the Network Information Service (NIS/NIS+) for companies with large internal networks that require administrative functions beyond what DNS provides, including centralized authentication.

It is also worth mentioning at this point that it is quite possible to assign different IP addresses to the same network interface, which can be useful for hosting virtual domains that require their own IP address, rather than relying on application-level support for multihoming (for example, when using the Apache web server). Simply create a new /etc/hostname.hmeX:Y file for each IP address required, where X represents the physical device interface, and Y represents the virtual interface number.
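The naming scheme for interface files can be illustrated with a short Python sketch. The function name and the regular expression are assumptions made for illustration; the pattern only covers driver names made of letters, such as the hme and le types mentioned in the text.

```python
import re

# Matches names such as hostname.hme0 or hostname.hme0:1.
# Assumption: the driver name consists of lowercase letters only.
_IFACE_FILE = re.compile(r"^hostname\.([a-z]+)(\d+)(?::(\d+))?$")


def parse_interface_file(name):
    """Split an interface file name into driver, physical and virtual numbers."""
    match = _IFACE_FILE.match(name)
    if match is None:
        raise ValueError("not an interface file name: %r" % name)
    driver, instance, virtual = match.groups()
    return {
        "driver": driver,
        "instance": int(instance),
        # None means the file describes the physical interface itself.
        "virtual": int(virtual) if virtual is not None else None,
    }


if __name__ == "__main__":
    for example in ("hostname.hme0", "hostname.hme1", "hostname.hme0:1"):
        print(example, parse_interface_file(example))
```

Here hostname.hme0:1 is read as virtual interface 1 on physical interface hme0, matching the X:Y convention described above.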

The subnet mask used by each of these interfaces must also be defined in /etc/netmasks. This is particularly important if the interfaces lie on different subnets or if they serve different network classes. In addition, it might also be appropriate to assign a fully qualified domain name to each of the interfaces, although this will depend on the purpose to which each interface is assigned.

Subnets are distinguished from each other by means of a mask. Class A networks use the mask 255.0.0.0. Class B networks use the mask 255.255.0.0. Class C networks use the mask 255.255.255.0. These masks determine the broadcast address used when broadcasts are made to specific subnets. A subnet 134.132.23.0 with the Class C mask, for example, can have 254 hosts associated with it, starting with 134.132.23.1 and ending with 134.132.23.254; the address 134.132.23.255 is reserved for broadcasts to the subnet. Class A and B networks have their own distinctive enumeration schemes.
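The arithmetic behind a netmask can be checked with Python's standard ipaddress module. This is only a sketch: the function name describe_subnet is an assumption, and the address is the example subnet from the text. Note that the module excludes the network address and the broadcast address when it lists usable hosts.

```python
import ipaddress


def describe_subnet(address, netmask):
    """Return the network, broadcast address and usable host range."""
    net = ipaddress.ip_network("%s/%s" % (address, netmask))
    hosts = list(net.hosts())  # excludes network and broadcast addresses
    return {
        "network": str(net.network_address),
        "broadcast": str(net.broadcast_address),
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
        "host_count": len(hosts),
    }


if __name__ == "__main__":
    info = describe_subnet("134.132.23.0", "255.255.255.0")
    for key, value in info.items():
        print(key, value)
```

For the mask 255.255.255.0 this reports 254 usable hosts, with the final address of the block serving as the broadcast address.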

Internet daemon

inetd is the super Internet daemon that is responsible for centrally managing many of the standard Internet services provided by Solaris through the application layer. For example, telnet, ftp, finger, talk, and uucp are all run from the inetd. Even third-party web servers can often be run through inetd. Both UDP and TCP transport layers are supported with inetd. The main benefit of managing all services centrally through inetd is reduced administrative overhead, because all services use a standard configuration format from a single file. Just like Microsoft Internet Information Server (IIS), inetd is able to manage many network services with a single application.

There are also several drawbacks with using inetd to run all of your services. For example, doing so means there is now a single point of failure, meaning that if inetd crashes because of one service that fails, all of the other inetd services may be affected. In addition, connection pooling for services like the Apache web server is not supported under inetd. A general rule is that high-performance applications, where there are many concurrent client requests, should use a stand-alone daemon.

The Internet daemon relies on two files for configuration. The /etc/inetd.conf file is the primary configuration file, consisting of a list of all services currently supported and their runtime parameters, such as the file system path to the daemon that is executed. In addition, the /etc/services file maintains a list of mappings between service names and port numbers, which is used to ensure that services are activated on the correct port.
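To make the service-to-port mapping concrete, the following Python sketch parses text in the style of /etc/services. The sample entries use the well-known port assignments for services named earlier; the function name and the sample text itself are assumptions for illustration, not a copy of any particular system's file.

```python
def parse_services(text):
    """Map (service name or alias, protocol) to a port number."""
    table = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments
        if not line:
            continue
        fields = line.split()
        name, port_proto = fields[0], fields[1]
        port, proto = port_proto.split("/")
        # Any further fields on the line are aliases for the same port.
        for alias in [name] + fields[2:]:
            table[(alias, proto)] = int(port)
    return table


# Illustrative sample only.
SAMPLE_SERVICES = """\
# name   port/protocol  aliases
ftp      21/tcp
telnet   23/tcp
finger   79/tcp
talk     517/udp
uucp     540/tcp        uucpd
"""

if __name__ == "__main__":
    services = parse_services(SAMPLE_SERVICES)
    print(services[("ftp", "tcp")])
    print(services[("talk", "udp")])
```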

FTP Administration

FTP is one of the oldest and most commonly used protocols for transferring files between hosts on the Internet. Although it has been avoided in recent years for security reasons, anonymous FTP is still the most popular method for organizing and serving publicly available data. In this section, we will examine the FTP protocol and demonstrate how to set up an FTP site with the tools that are supplied with Solaris. We will also cover the popular topic of anonymous ftp and GUI FTP clients and explain some of the alternative ftp servers designed to handle large amounts of anonymous ftp traffic.

With the UNIX-to-UNIX Copy Program (UUCP) beginning to show its age in the era of Fast Ethernet and the globalization of the Internet, the File Transfer Protocol (FTP) was destined to become the de facto standard for transferring files between computers connected to each other using a TCP/IP network. FTP is simple, transparent, and has a rich set of client commands and server features that are very powerful in the hands of an experienced user. In addition, there are a variety of clients available on all platforms with a TCP/IP stack, which assist with transferring entire directory trees, for example, rather than performing transfers file by file.

Although originally designed to provide remote file access for users with an account on the target system, the practice of providing anonymous FTP file areas has become very common in recent years, allowing remote users without an account to download (and in some cases upload) data to and from their favorite servers. This allowed the easy dissemination of data and applications before the widespread adoption of more sophisticated systems for locating and identifying networked information sources (such as gopher and HTTP, the Hypertext Transfer Protocol). Anonymous FTP servers typically contain archives of application software, device drivers, and configuration files. In addition, many electronic mailing lists keep their archives on anonymous FTP sites so that an entire year's worth of discussion can be retrieved rapidly. However, relying on anonymous FTP can be precarious because sites are subject to change, and there is no guarantee that what is available today will still be there tomorrow.

FTP is a TCP/IP protocol specified in RFC 959. On Solaris, it is invoked as a daemon through the Internet super daemon (inetd); thus, many of the options that are used to configure an FTP server can be entered directly into the configuration file for inetd (/etc/inetd.conf). For example, in.ftpd can be invoked with a debugging option (-d) or a logging option (-l), in which case all transactions will be logged to the /var/adm/messages file by default.
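As a rough illustration of where such options live, the sketch below splits one inetd.conf-style line into its fields. The field names, the function name, and the sample line (including the /usr/sbin/in.ftpd path) are assumptions chosen for illustration; check the file on your own system for the exact entry.

```python
from typing import List, NamedTuple


class InetdEntry(NamedTuple):
    service: str       # name looked up in /etc/services
    socket_type: str   # for example, stream or dgram
    protocol: str      # for example, tcp or udp
    wait: str          # wait or nowait
    user: str          # user the daemon runs as
    server_path: str   # file system path to the daemon
    args: List[str]    # argv for the daemon, starting with its name


def parse_inetd_line(line):
    """Parse one non-comment line in inetd.conf format."""
    fields = line.split()
    if line.lstrip().startswith("#") or len(fields) < 7:
        raise ValueError("not a service entry: %r" % line)
    return InetdEntry(*fields[:6], fields[6:])


# Hypothetical entry that enables the logging option (-l).
SAMPLE_LINE = "ftp stream tcp nowait root /usr/sbin/in.ftpd in.ftpd -l"

if __name__ == "__main__":
    entry = parse_inetd_line(SAMPLE_LINE)
    print(entry.server_path, entry.args)
```

In this layout, daemon options such as -l or -d appear at the end of the line, after the daemon's own name.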

The objective of providing an FTP server is to permit the sharing of files between hosts across a TCP/IP network reliably and without concern for the underlying exchanges that must take place to facilitate the transfer of data. A user need only be concerned with identifying which files he or she wishes to download or upload and whether binary or ASCII transfer is required. The most common file types, and the recommended transfer mode, are shown in Table 19-1. A general rule of thumb is that text-only files should be transferred using ASCII, but applications and binary files should be transferred using binary mode. Many clients have a simple interface that makes it very easy for users to learn to send and retrieve data using FTP.

| Extension | Transfer Type | Description |
|---|---|---|
| .arc | Binary | ARChive compression |
| .arj | Binary | Arj compression |
| .gif | Binary | Image file |
| .gz | Binary | GNU Zip compression |
| .hqx | ASCII | HQX (MacOS version of uuencode) |
| .jpg | Binary | Image file |
| .lzh | Binary | LH compression |
| .shar | ASCII | Bourne shell archive |
| .sit | Binary | Stuff-It compression |
| .tar | Binary | Tape archive |
| .tgz | Binary | Gzip compressed tape archive |
| .txt | ASCII | Plaintext file |
| .uu | ASCII | Uuencoded file |
| .Z | Binary | Standard UNIX compression |
| .zip | Binary | Standard zip compression |
| .zoo | Binary | Zoo compression |
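The table can be turned into a small lookup, sketched here in Python. The function name is an assumption, and so is the choice to fall back to binary mode for extensions that are not listed; binary mode is the safer default because it transfers the bytes unchanged.

```python
import os

# Extensions the table marks as ASCII; everything else in it is binary.
ASCII_EXTENSIONS = {".hqx", ".shar", ".txt", ".uu"}


def transfer_mode(filename):
    """Suggest "ascii" or "binary" for a file, based on its extension."""
    extension = os.path.splitext(filename)[1].lower()
    if extension in ASCII_EXTENSIONS:
        return "ascii"
    # Assumption: unlisted or missing extensions are treated as binary.
    return "binary"


if __name__ == "__main__":
    for name in ("notes.txt", "patch.shar", "archive.tar.Z", "photo.jpg"):
        print(name, transfer_mode(name))
```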

FTP has evolved through the years, although many of its basic characteristics remain unchanged. The first RFC for FTP was published in 1971, with a targeted implementation on hosts at MIT (RFC 114), by A.K. Bhushan. Since that time, enhancements to the original RFC have been suggested, including

- RFC 2640: Internationalization of the File Transfer Protocol
- RFC 2389: Feature Negotiation Mechanism for the File Transfer Protocol
- RFC 1986: Experiments with a Simple File Transfer Protocol for Radio Links Using Enhanced Trivial File Transfer Protocol (ETFTP)
- RFC 1440: SIFT/UFT: Sender-Initiated/Unsolicited File Transfer
- RFC 1068: Background File Transfer Program (BFTP)
- RFC 2585: Internet X.509 Public Key Infrastructure Operational Protocols: FTP and HTTP
- RFC 2428: FTP Extensions for IPv6 and NATs
- RFC 2228: FTP Security Extensions
- RFC 1639: FTP Operation Over Big Address Records (FOOBAR)

Some of these RFCs are informational only, but many suggest concrete improvements to FTP that have been implemented as standards. For example, many changes will be required to fully implement IPv6 at the network level, including support for 128-bit IP addresses, which are much longer than the 32-bit addresses used by IPv4. Despite these changes and enhancements, however, the basic procedures for initiating, conducting, and terminating an FTP session have remained unchanged for many years. FTP is a client/server process: a client attempts to make a connection to a server by using a command like

client% ftp server

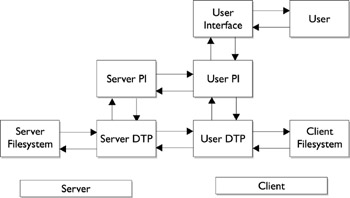

If there is an FTP server active on the host server, the FTP process proper can begin. After a user initiates the FTP session through the user interface or client program, each user request (such as DIR for a directory listing) is passed to the client protocol interpreter (client PI). The client PI then issues the appropriate command to the server PI, which replies with the appropriate response number (for example, 200 Command OK, if the command is accepted by the server PI). FTP commands must reflect the desired nature of the transaction (for example, port number and data transfer mode, whether binary or ASCII) and the type of operation to be performed (for example, file retrieval with RETR, which is what the client's get command sends, file deletion with DELE, and so forth). The actual data transfer process (DTP) is conducted by the server DTP agent, which connects to the client DTP agent, transferring the data packet by packet. After transmitting the data, both the client and server DTP agents then communicate the end of a transaction, successful or otherwise, to their respective PIs. A message is then sent back to the user interface, and ultimately the user finds out whether or not the request has been processed. This process is shown in Figure 19-1.

Figure 19-1: The FTP model.
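The response numbers exchanged between the two PIs follow the three-digit scheme of RFC 959, in which the first digit gives the general outcome. The following Python sketch decodes a single-line reply; the function name is an assumption, and multi-line replies are deliberately not handled.

```python
# Meaning of the first digit of an FTP reply code (RFC 959).
REPLY_CLASSES = {
    "1": "positive preliminary",
    "2": "positive completion",
    "3": "positive intermediate",
    "4": "transient negative completion",
    "5": "permanent negative completion",
}


def parse_reply(line):
    """Split a single-line server reply into (code, class, text)."""
    code = line[:3]
    if len(code) != 3 or not code.isdigit():
        raise ValueError("not an FTP reply: %r" % line)
    text = line[4:].strip()
    return int(code), REPLY_CLASSES.get(code[0], "unknown"), text


if __name__ == "__main__":
    print(parse_reply("200 Command OK"))
```

A client PI can use the reply class to decide whether to proceed, wait for more input, retry later, or report a failure to the user interface.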

Anonymous FTP allows an arbitrary remote user to make an FTP connection to a remote host. The permissible usernames for anonymous FTP are usually anonymous or ftp. Not all servers offer anonymous FTP; often, it is only possible to determine whether anonymous FTP is supported by trying to log in as ftp or anonymous. Sites that support anonymous FTP usually allow the downloading of files from an archive of publicly available files. However, some servers also support an upload facility, where remote, unauthenticated, and unidentified users can upload files of arbitrary size. If this sounds dangerous, it is: if you don't apply quotas to the ftp user's directories on each file system that the ftp user has write access to, it is possible for a malicious user to completely fill up the disk with large files. This is a kind of denial of service attack, since a completely filled file system cannot be written to by other user or system processes. If a remote user really does need to upload files, it is best to give them a temporary account by which they can be authenticated and identified at login time.
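Trying an anonymous login, as described above, can be sketched with Python's standard ftplib module. The function name and the ftp_factory parameter are assumptions; the parameter exists only so the check can be exercised without a real server. Only probe hosts you are permitted to connect to.

```python
import ftplib


def supports_anonymous(host, ftp_factory=ftplib.FTP, timeout=10):
    """Return True if host accepts an anonymous FTP login."""
    try:
        ftp = ftp_factory(host, timeout=timeout)
    except ftplib.all_errors:
        return False  # no FTP server reachable on this host
    try:
        ftp.login()  # with no arguments, ftplib logs in as "anonymous"
        return True
    except ftplib.error_perm:
        return False  # server refused the anonymous login
    finally:
        try:
            ftp.quit()
        except ftplib.all_errors:
            ftp.close()


if __name__ == "__main__":
    # "server" is the placeholder host name used in the text.
    print(supports_anonymous("server"))
```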

Solaris 9 provides the ftpconfig command to install anonymous FTP.
