Lesson 1: Cluster Service Architecture

A cluster is a group of servers that clients see as a single system. Clients access the cluster through virtual servers. A virtual server corresponds to a generic IP address and a network name, and it allows your users to access resources in the cluster, including Exchange 2000 services, without having to know the names of the individual nodes. If one node fails and a second node must assume the workload, your users can reconnect almost immediately using the same virtual server name, so the complexity of the cluster remains completely hidden from them. By grouping two or more computers together in a cluster, you can minimize system downtime caused by software, network, and hardware failures. You can configure multiple virtual servers on one cluster.

This lesson highlights the general characteristics of clustered systems running Windows 2000 Advanced Server or Windows 2000 Datacenter Server. It introduces specific hardware requirements and covers other dependencies that you need to take into consideration when designing a cluster for Exchange 2000 Enterprise Server.

At the end of this lesson, you will be able to:

- Identify important features and issues of clustering technology.

- Describe the architecture of the Windows 2000 Cluster service.

Estimated time to complete this lesson: 75 minutes

Server Clusters in Exchange 2000 Environments

Clustering is a mature technology available for all popular network operating systems, including Solaris and Windows 2000. Nonetheless, it is still a controversial technology, largely because expectations often exceed what it can deliver. For instance, organizations often deploy clustered Windows 2000 systems expecting them to provide continuous, uninterrupted services to their users, implicitly assuming that clustering provides fault tolerance in all imaginable areas, including information store databases. Whatever the expectations, the Windows 2000 Cluster service cannot achieve these goals. Although it is not a perfect solution, clustering does reduce the number of potential single points of failure and thereby improves service availability.

In the event of a system failure, the Cluster service takes the virtual server offline on the first node before bringing it online again on another cluster member. Hence, business processes are interrupted, and users must reconnect to the cluster after the failover. The benefit of clustering is that your users can reconnect almost immediately; in effect, the Cluster service acts as a very fast, automatic system repair service. However, clusters neither protect nor repair information store databases, which remain single points of failure no matter how many nodes your cluster contains. Therefore, you still have to develop a sound backup and disaster recovery strategy, as discussed in Chapter 20, "Microsoft Exchange 2000 Server Maintenance and Troubleshooting."
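The following is a minimal Python sketch of the virtual server idea described above, not a model of the real Cluster service. It assumes a virtual server is simply a network name plus an IP address that one node hosts at a time. The class names, the names EXVS1, NODEA, and NODEB, and the address 10.0.0.50 are hypothetical and chosen for illustration only. The key point is that a failover changes only the hosting node, so clients keep using the same name and address.

```python
from dataclasses import dataclass, field


@dataclass
class VirtualServer:
    """A client-visible network name and IP address, hosted by one node at a time."""
    network_name: str
    ip_address: str
    owner_node: str


@dataclass
class Cluster:
    nodes: set
    virtual_servers: dict = field(default_factory=dict)

    def add_virtual_server(self, vs: VirtualServer) -> None:
        if vs.owner_node not in self.nodes:
            raise ValueError(f"unknown node: {vs.owner_node}")
        self.virtual_servers[vs.network_name] = vs

    def resolve(self, network_name: str) -> tuple:
        """What a client effectively reaches: the virtual IP and the node behind it."""
        vs = self.virtual_servers[network_name]
        return vs.ip_address, vs.owner_node

    def fail_over(self, network_name: str, target_node: str) -> None:
        """Move the virtual server to another node; its name and IP address stay the same."""
        if target_node not in self.nodes:
            raise ValueError(f"unknown node: {target_node}")
        self.virtual_servers[network_name].owner_node = target_node


# Hypothetical names and addresses, for illustration only.
cluster = Cluster(nodes={"NODEA", "NODEB"})
cluster.add_virtual_server(VirtualServer("EXVS1", "10.0.0.50", "NODEA"))
print(cluster.resolve("EXVS1"))  # ('10.0.0.50', 'NODEA')
cluster.fail_over("EXVS1", "NODEB")
print(cluster.resolve("EXVS1"))  # ('10.0.0.50', 'NODEB')
```

Users still see a short interruption in practice, because the virtual server is taken offline before it comes back online on the other node. This sketch leaves that out and shows only that the identity clients use does not change.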

Advantages of Server Clusters

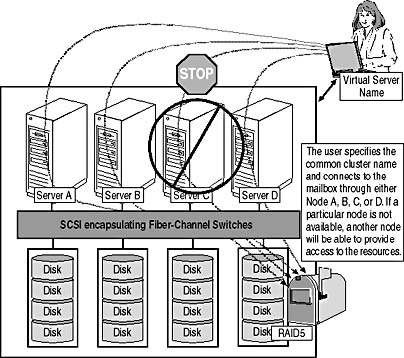

Despite their limitations, clusters can significantly improve system availability in case of hardware or software failures and during planned maintenance. Clusters are an ideal choice for Exchange 2000 systems that your business depends on. Clusters can also help you scale to a very large user base. For example, you could group four quad-processor systems and four shared redundant arrays of independent disks (RAIDs) together and let this cluster handle four individual storage groups, each containing 5000 mailboxes. That amounts to 4 × 5000 = 20,000 users supported by one clustered system (see Figure 7.1).

Figure 7.1 A four-node Windows 2000 cluster

Important

To take advantage of four-node clustering and 64 GB memory support, you must install Windows 2000 Datacenter Server.

The advantages of clustered Windows 2000 systems are:

- High availability of system resources

- Improved scalability through grouping of server resources

- Reduced system management costs

Cluster Hardware Configuration

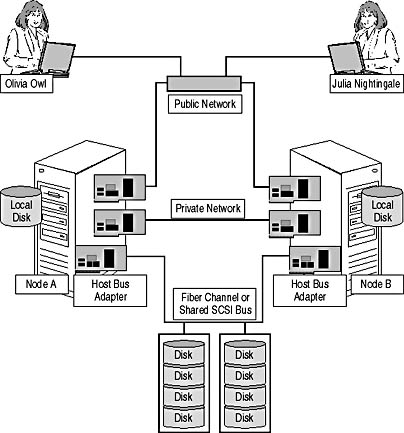

Figure 7.2 illustrates the essential hardware components of a cluster. In addition to typical server equipment, such as a local disk on which the operating system must be installed, each node needs a connection to a shared Small Computer System Interface (SCSI) bus and network adapters. Two network adapters per node, one for the public and one for the private network, are recommended. The shared SCSI bus gives all nodes access to the cluster disks. The public network adapters provide client connectivity as well as a communication path between the nodes. At intervals measured in seconds, the nodes exchange state information with each other; these messages are known as heartbeats. The public network adapters should be configured redundantly on each server to reduce the chance that a failure of a network interface card will result in the failure of the entire cluster. The private network adapter, on the other hand, is optional; it implements an additional communication channel between the nodes in case the public network fails. Although it is not a requirement, a private network adapter is necessary if you want to build a cluster with complete hardware redundancy.

The nodes in a cluster are connected by up to three physical connections:

- Shared storage bus. Connects all nodes to the disks (or RAID storage systems) where all clustered data must reside. A cluster can support more than one shared storage bus if multiple adapters are installed in each node.

- Public network connection. Connects client computers to the nodes in the cluster. The public network is the primary network interface of the cluster. Fast and reliable network cards, such as Fast Ethernet or Fiber Distributed Data Interface (FDDI) cards, should be used for the public interface.

- Private network connection. Connects the nodes in a cluster and ensures that the nodes will be able to communicate with each other in the event of an outage of the public local area network (LAN). The private LAN is optional but highly recommended. Low-cost Ethernet cards are sufficient to accommodate the minimal traffic of the cluster communication.

It is very important that you check Microsoft's Hardware Compatibility List (HCL), specifically its entries for the Cluster service. Use only hardware components, especially host bus adapters and disks, that Microsoft has tested for clustering. In addition, make sure the host bus adapters are of the same type and have the same firmware revision. Configure the shared storage carefully. If you are using a traditional SCSI-based cluster, connect only disks and SCSI adapters to the shared bus (no tape devices, CD-ROM drives, scanners, and so forth), and ensure that the bus is terminated properly at both ends. If you choose an untested hardware component or improperly terminate the shared SCSI bus, you may end up with corrupted cluster disks instead of a reliable and highly available cluster.

Figure 7.2 Physical connections in a two-node cluster

Tip

It is advisable to purchase complete cluster sets from reliable hardware vendors instead of configuring the cluster hardware manually.

Partitioned Data Access

As shown in Figure 7.2, Olivia and Julia can both access the shared SCSI disk sets of the cluster through either Node A or Node B. However, the shared disks cannot be accessed through Node A and Node B at the same time, because the Windows 2000 Cluster service does not support concurrent access to shared disks. Concurrent access is known as the "shared-everything" data access model, which is more common in mainframe-oriented environments.

To avoid the overhead of synchronizing concurrent disk access, the Windows 2000 Cluster service relies on the partitioned data model, also called the "shared-nothing" model. The shared-nothing model is usually sufficient for PC systems, but its disadvantage is that you cannot achieve dynamic load balancing. For instance, when you configure a resource group for a virtual Exchange 2000 server in a two-node cluster (as shown in Figure 7.2), only one node at a time can own this resource group and provide access to its resources. In the event of a failure, the Exchange 2000 resource group can be failed over (moved) from the failed node to another node in the cluster, but the same virtual server cannot be active on more than one node simultaneously.
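The ownership rule of the shared-nothing model can be sketched in a few lines of Python. This is a simplified illustration under the assumption that a resource group is just a name plus its current owner node. The class, the group name, and the node names are hypothetical and are not part of any Cluster service API. Bringing the group online on a second node while it is already online elsewhere is rejected, and a failover is modeled as "offline first, then online on the target."

```python
from typing import Optional


class ResourceGroup:
    """Shared-nothing model: a resource group is online on at most one node."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.owner: Optional[str] = None  # node currently hosting the group

    def bring_online(self, node: str) -> None:
        if self.owner is not None and self.owner != node:
            # Concurrent activation on a second node is not allowed.
            raise RuntimeError(f"{self.name} is already online on {self.owner}")
        self.owner = node

    def take_offline(self) -> None:
        self.owner = None

    def fail_over(self, target_node: str) -> None:
        # Offline first, then online elsewhere: clients see a brief interruption.
        self.take_offline()
        self.bring_online(target_node)


group = ResourceGroup("Exchange virtual server group")
group.bring_online("NODEA")
try:
    group.bring_online("NODEB")
except RuntimeError as err:
    print(err)  # Exchange virtual server group is already online on NODEA
group.fail_over("NODEB")
print(group.owner)  # NODEB
```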

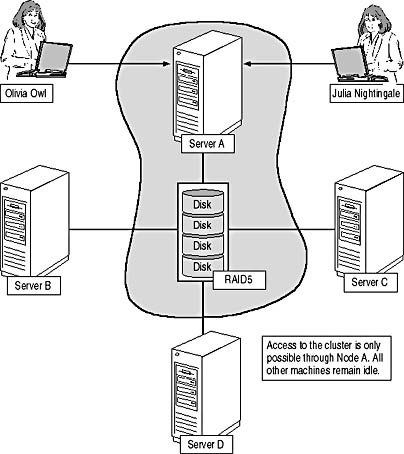

Gaining and Defending Physical Control

The fact that the Cluster service relies on the partitioned data model has far-reaching design consequences. For example, it makes little sense to configure a four-node cluster with just one shared physical disk set (see Figure 7.3). As mentioned, this disk set can be owned by only one node. The node owning the resource requests exclusive access to the disk by using the SCSI Reserve command. No other node can then access the physical device until a SCSI Release command is issued. Hence, the three other nodes must remain idle until the first node fails.

NOTE

Only one node can gain access to a particular disk at any given time even though the disks on the shared SCSI bus are physically connected to all cluster nodes.
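The Reserve/Release behavior described above can be approximated with a toy Python model. This is not how a host bus adapter or the Cluster service disk driver is programmed; it only shows the arbitration rule, assuming that a reservation succeeds when the disk is free or already held by the requesting node, and that only the holder can release it. The class and the disk and node names are hypothetical.

```python
from typing import Optional


class SharedDisk:
    """Toy model of SCSI Reserve/Release arbitration on a shared cluster disk."""

    def __init__(self, disk_id: str) -> None:
        self.disk_id = disk_id
        self.reserved_by: Optional[str] = None

    def reserve(self, node: str) -> bool:
        # Succeeds only if the disk is free or already reserved by the same node;
        # otherwise the request is refused (a reservation conflict on real hardware).
        if self.reserved_by in (None, node):
            self.reserved_by = node
            return True
        return False

    def release(self, node: str) -> None:
        # Only the current holder can release its reservation.
        if self.reserved_by == node:
            self.reserved_by = None


# Four nodes, one shared disk set: only the first requester gets access.
nodes = ["NODEA", "NODEB", "NODEC", "NODED"]
disk = SharedDisk("shared-disk-1")
results = {n: disk.reserve(n) for n in nodes}
print(results)  # {'NODEA': True, 'NODEB': False, 'NODEC': False, 'NODED': False}
disk.release("NODEA")
print(disk.reserve("NODEB"))  # True
```

The run illustrates the design problem in Figure 7.3. With a single disk set, three of the four nodes can do nothing useful until the owner gives up its reservation.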

Figure 7.3 A questionable four-node cluster configuration

Windows 2000 Clustering Architecture

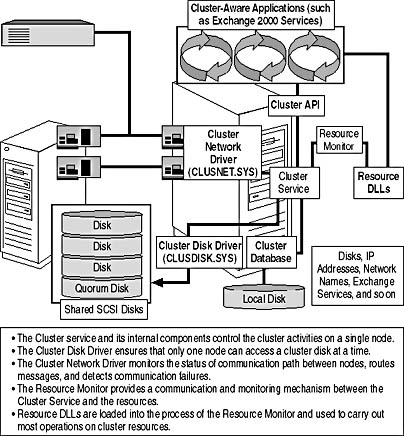

To act as one unit, clustered Windows 2000 computers must cooperate with each other closely. The Cluster service consists of several internal elements and relies on additional external components to handle the required tasks. The Node Manager, for instance, is an internal module that maintains a list of nodes that belong to the cluster and monitors their system state. It is this component that periodically sends heartbeat messages to its counterparts running on other nodes in the cluster. The heartbeats allow the node managers to recognize node faults. The Communications Manager, another internal component, manages the communication between all nodes of the cluster through the cluster network driver (see Figure 7.4).

Figure 7.4 Cluster service and its external components
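The heartbeat-based fault detection performed by the Node Manager can be sketched roughly as follows. The Python below assumes that each node records the time of the last heartbeat received from every peer on every cluster network. It treats a peer as failed only when no network, public or private, has delivered a recent heartbeat. The timeout value and all names are hypothetical; the actual intervals and retry rules used by the Cluster service are not modeled.

```python
HEARTBEAT_TIMEOUT = 6.0  # seconds; hypothetical value, not the Cluster service default


class NodeManager:
    """Tracks the last heartbeat seen from each peer on each cluster network."""

    def __init__(self, peers):
        # peer name -> {network name -> time of last heartbeat}
        self.last_seen = {peer: {} for peer in peers}

    def record_heartbeat(self, peer: str, network: str, now: float) -> None:
        self.last_seen[peer][network] = now

    def is_alive(self, peer: str, now: float) -> bool:
        # A peer counts as healthy if a recent heartbeat arrived on ANY network,
        # so losing only the public LAN does not look like a node failure.
        return any(now - t <= HEARTBEAT_TIMEOUT for t in self.last_seen[peer].values())

    def failed_peers(self, now: float) -> list:
        return sorted(p for p in self.last_seen if not self.is_alive(p, now))


nm = NodeManager(["NODEB", "NODEC"])
nm.record_heartbeat("NODEB", "public", now=0.0)
nm.record_heartbeat("NODEB", "private", now=10.0)  # public LAN down, private still up
nm.record_heartbeat("NODEC", "public", now=0.0)
print(nm.failed_peers(now=12.0))  # ['NODEC']
```

This also shows why the private network is worth its low cost. Without it, NODEB would look just as dead as NODEC once the public LAN stopped carrying heartbeats.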

Resource Monitoring and Failover Initiation

The health of each cluster resource is monitored by the Resource Monitor, which runs in a separate process and communicates with the Cluster service via remote procedure calls (RPCs). The Resource Monitor relies on Resource DLLs to manage the resources (see Figure 7.4). Resources are any physical or logical components that the Resource Manager (another internal component of the Cluster service) can manage, such as disks, IP addresses, network names, Exchange 2000 Server services, and so forth. The Resource Manager receives system information from the Resource Monitor and the Node Manager to manage resources and resource groups and to initiate actions such as startup, restart, and failover. To carry out a failover, the Resource Manager works in conjunction with the Failover Manager. The failover procedures are explained in Lesson 2.
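The path from a failed resource to restart and then to failover can be sketched as a small Python class. This is a simplified model, not the Resource DLL interface: `is_alive` and `restart` are hypothetical callables that stand in for the health checks and online calls a Resource DLL performs. The assumed policy is to try a fixed number of local restarts and, once they are exhausted, report that a failover is needed. Restart counters that reset after a time period are not modeled.

```python
from typing import Callable


class MonitoredResource:
    """Simplified Resource Monitor loop for one resource.

    is_alive and restart stand in for calls into a Resource DLL; they are
    hypothetical callables, not the real Resource DLL entry points.
    """

    def __init__(self, name: str, is_alive: Callable[[], bool],
                 restart: Callable[[], None], restart_threshold: int = 3) -> None:
        self.name = name
        self.is_alive = is_alive
        self.restart = restart
        self.restart_threshold = restart_threshold
        self.restarts = 0

    def poll(self) -> str:
        """Return the action taken: 'online', 'restart', or 'failover'."""
        if self.is_alive():
            return "online"
        if self.restarts < self.restart_threshold:
            self.restarts += 1
            self.restart()  # try to bring the resource back on the same node
            return "restart"
        # Local restarts exhausted: the Resource Manager would hand the group
        # to the Failover Manager to move it to another node.
        return "failover"


store = MonitoredResource("Exchange Information Store", lambda: False, lambda: None)
print([store.poll() for _ in range(4)])  # ['restart', 'restart', 'restart', 'failover']
```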

Configuration Changes and the Quorum Disk

Other important components are the Configuration Database Manager, which maintains the cluster configuration database, and the Checkpoint Manager, which saves the configuration data in a log file on the quorum disk. The configuration database, also known as the cluster registry, is separate from the local Windows 2000 Registry, although a copy of the cluster registry is kept in the Windows 2000 Registry. The configuration database holds information about cluster members, resources, restart parameters, and other configuration data.

The quorum disk, which holds the configuration data log files, is a cluster-specific resource used to communicate configuration changes to all nodes in the cluster. The Global Update Manager provides the update service that transfers configuration changes into the configuration database of each node. The quorum resource also holds the recovery logs written by the internal Log Manager module of the Cluster service. There can only be one quorum disk in a single Windows 2000 cluster.
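The interplay between configuration updates and the quorum disk can be pictured with a rough Python sketch. It collapses the roles described above into one hypothetical class. Each change gets a new sequence number, is appended to a list that stands in for the log on the quorum disk, and is then applied identically to every online node's copy of the configuration database. The ordering and locking protocol that the real Global Update Manager uses is not modeled, and the key `EXVS1/PreferredOwner` is purely illustrative.

```python
from dataclasses import dataclass, field


@dataclass
class ClusterDatabase:
    """One node's copy of the cluster configuration database."""
    sequence: int = 0
    settings: dict = field(default_factory=dict)


class GlobalUpdateManager:
    def __init__(self, node_dbs: dict, quorum_log: list) -> None:
        self.node_dbs = node_dbs      # online node name -> ClusterDatabase
        self.quorum_log = quorum_log  # stands in for the log file on the quorum disk

    def update(self, key: str, value) -> int:
        seq = max((db.sequence for db in self.node_dbs.values()), default=0) + 1
        # Log the change on the quorum resource so that a node that is offline
        # now can pick it up when it rejoins.
        self.quorum_log.append((seq, key, value))
        # Apply the same change, with the same sequence number, on every online node.
        for db in self.node_dbs.values():
            db.settings[key] = value
            db.sequence = seq
        return seq


dbs = {"NODEA": ClusterDatabase(), "NODEB": ClusterDatabase()}
log = []
gum = GlobalUpdateManager(dbs, log)
gum.update("EXVS1/PreferredOwner", "NODEA")
print(log)  # [(1, 'EXVS1/PreferredOwner', 'NODEA')]
```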

Joining a Cluster and Event Handling

When you start a server that is part of a cluster, Windows 2000 is booted as usual, mounting and configuring local, noncluster resources. After all, each node in a Windows 2000 cluster is also a Windows 2000 Advanced Server or Datacenter Server in itself. The Cluster service is typically set to start automatically. When this service starts, the server determines the other nodes in the cluster based on the information in the local copy of the cluster registry. The Cluster service then attempts to find an active cluster node (known as the sponsor) that can authenticate the local service. The sponsor broadcasts information about the authenticated node to the other cluster members and, if the joining node's cluster database is found to be outdated, sends it an updated copy of the cluster registry.
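The join sequence can be summarized in a hypothetical Python sketch of the sponsor's side. Authentication is reduced to a membership check purely as a placeholder. Whether a database is outdated is assumed to be decided by comparing sequence numbers, and the set of notified nodes stands in for the sponsor's broadcast to the other members. None of these names come from the Cluster service itself.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ClusterRegistry:
    sequence: int = 0
    settings: dict = field(default_factory=dict)


def sponsor_join(sponsor: str, joiner: str, members: set, online: set,
                 sponsor_db: ClusterRegistry, joiner_db: ClusterRegistry):
    """Hypothetical sketch of a sponsor admitting a joining node.

    Returns (registry the joiner should use, other online nodes to notify).
    """
    # Placeholder for real authentication: only configured members may join.
    if joiner not in members:
        raise PermissionError(f"{joiner} is not a member of this cluster")
    # The sponsor informs the other online members about the new node.
    notified = sorted(n for n in online if n not in (sponsor, joiner))
    # An outdated joiner receives a fresh copy of the cluster registry.
    if joiner_db.sequence < sponsor_db.sequence:
        return copy.deepcopy(sponsor_db), notified
    return joiner_db, notified


members = {"NODEA", "NODEB", "NODEC"}
db, notified = sponsor_join("NODEA", "NODEB", members, {"NODEA", "NODEC"},
                            ClusterRegistry(7, {"quorum": "Q:"}),
                            ClusterRegistry(5, {"quorum": "R:"}))
print(db.sequence, notified)  # 7 ['NODEC']
```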

From the point of view of other nodes in the cluster, a node may be in one of three states: offline, online, or paused. A node is offline when it is not an active member of the cluster. A node is online when it is fully active, is able to own and manage resource groups, accepts cluster database updates, and contributes votes to the quorum algorithm. The quorum algorithm determines which node can own the quorum disk. A paused node is an online node unable to own or run resource groups. You may pause a node for maintenance reasons, for instance.
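The three node states and their main consequence, namely which nodes may host resource groups, can be captured in a short Python sketch. Reading the description above, it assumes that a paused node remains an active cluster member but is never chosen to own groups. The function names and the node names are hypothetical.

```python
from enum import Enum


class NodeState(Enum):
    OFFLINE = "offline"
    ONLINE = "online"
    PAUSED = "paused"


def can_own_groups(state: NodeState) -> bool:
    # Only a fully online node may own and run resource groups.
    return state is NodeState.ONLINE


def is_cluster_member(state: NodeState) -> bool:
    # Assumption: a paused node is still an active member (it is "online"
    # but not eligible to host groups); an offline node is not.
    return state in (NodeState.ONLINE, NodeState.PAUSED)


def failover_candidates(states: dict, failed_node: str) -> list:
    """Nodes that could take over resource groups from failed_node."""
    return sorted(n for n, s in states.items() if n != failed_node and can_own_groups(s))


states = {
    "NODEA": NodeState.ONLINE,
    "NODEB": NodeState.PAUSED,   # e.g., paused for maintenance
    "NODEC": NodeState.OFFLINE,
    "NODED": NodeState.ONLINE,
}
print(failover_candidates(states, "NODEA"))  # ['NODED']
```

Pausing a node before maintenance therefore keeps it in the cluster while ensuring that no resource group fails over to it.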

The Event Processor, another internal component of the Cluster service, manages the node state information and controls the initialization of the Cluster service. This component transfers event notifications between Cluster service components and between the Cluster service and cluster-aware applications. For example, the Event Processor activates the Node Manager to begin the process of joining or forming a cluster.
