Selecting a Model

The selection of a programming model should be driven by the user and system requirements. When I am enlisted to design and implement a new system, I often go in with preconceived ideas about the size and scope of the final solution. Although it's important to start somewhere, I've learned to keep my mind open to changing requirements. Projects that start small can soon reveal a much larger scope and growth potential, and problems that seemed expansive might be easily solved with small, simple solutions. Likewise, shrink-wrapped commercial applications often need to be customized to such a degree that it is most cost-effective to build the system from scratch; and large, expensive custom applications can sometimes be replaced with off-the-shelf software. In short, an ounce of careful planning and design can be far more beneficial than a pound of brute-force application development.

Database application programming models roughly fall into the following categories:

-

Desktop Database Applications

-

Client/Server Database Solutions

-

Three-tier Component Solutions

-

Web Server Applications

-

Multi-tier Web Service Solutions

-

Multi-system Integrated Solutions

Additionally, database systems generally fall into these categories:

-

On-Line Transaction Processing Databases

-

On-Line Analytical Processing Databases

-

Hybrid Database Systems

A database solution is going to involve some combination of application model and database system. Before discussing the finer points of each of these models, I'd like to put some questions in your head. In many database applications, the business requirements aren't always cut-and-dried. You can reason that there may be some opportunity to incorporate pieces of these different models and that a system may need to evolve from one type to another. That's the beautiful thing about modern tools. If you design a system correctly, it can grow and evolve. Ask yourself the following questions about your project or application:

-

How many users need access to data?

How many users do you have now and how many users will you have in a year, or in five years? Are they employees, customers, or vendors? The volume of concurrent users is a significant factor. After you establish the answer to this question, you also need to know something about the needs of these users. For example, 10 users who will consistently enter and modify records can be far more demanding than a thousand users who will occasionally browse data or view reports. It's often difficult to predict the size or profile of your user base years into the future, but this will have a large bearing on your scalability needs — how much the system will need to grow in the future.

Modular, multi-tier applications are more scalable but also more complex and expensive to build.

-

Where are users located?

Are users situated in the same building or on the local-area network (LAN)? Perhaps they are at multiple sites or they need access to the system when they travel. Geographic boundaries have typically been one of the most significant factors in overall solution design.

-

What is your current infrastructure investment?

Implementing a new software solution involves more than installing a database and writing software. Any solution requires a significant investment in server and network infrastructure. Many companies have already made a sizable investment and are committed to a specific platform, operating system, and maybe even the database product.

Does your company currently manage database servers, web servers, component hosting services, and a corporate network? Do you have available bandwidth for the increased load? Do you use server clustering or replication? Not only do these services and the related hardware represent a cost, but so do trained and capable personnel. It's important to consider the existing infrastructure and to decide whether you can design a compatible system, or whether it makes sense to take on this additional investment.

-

What are your security requirements and restrictions?

How sensitive is the data you are managing? How costly would a security breach be to your business? Consider the legal and regulatory risks and restrictions. If you need a high level of security protection, this represents a greater cost in terms of coding standards, auditing, and testing. Encryption components and certificates are reasonably affordable, but encrypted data slows the system and requires more bandwidth.

-

How current does the data need to be?

It's not particularly difficult for a simple database system to let all users see and manipulate current data, but this becomes an issue when the system approaches its capacity limits. If data won't change that often or if data concurrency isn't a big issue, one database may suffice for both data entry and reporting applications. Otherwise, it may be necessary to use two separate databases: one for entry and another for reporting and analysis.

-

What data volumes do you anticipate?

Databases grow. That's inevitable. How much storage space will your database require in the next year, or five years? Very large databases have a higher maintenance overhead and need a more capable server. Historical data can be managed by archiving or partitioning portions of the database.

-

What are the system availability requirements?

Although allowing a database server to run around the clock isn't very expensive, guaranteeing that it will always be running can be very expensive. When does the data need to be available? During business hours? Week days? 24/7? Unless you invest in redundant, fail-over systems, you must plan for some downtime — both scheduled and unscheduled. A data maintenance and recovery plan will help but cannot guarantee 100% uptime unless you have redundancy and measures to mitigate every risk of failure.

-

What are your delivery time constraints?

Writing software and building a solution takes time, typically months to years. Usually 20%–30% of the total time will be spent gathering requirements and designing the system. Another 20%–30% is required for testing and debugging. After installation, deployment, and training, this leaves only 30%–50% of the time for the actual system development. Many projects fail because of unexpected schedule overruns because these factors aren't considered or estimated realistically. Pre-built, shrink-wrapped systems can usually be delivered faster, but custom-built solutions often offer greater flexibility.

-

What are your budget constraints?

The more complex the project, the more difficult it may be to estimate the final cost. Custom solutions are often budgeted based on the return on investment (ROI) rather than the initial cost. This is because, quite frankly, it's often difficult to justify the cost without considering the long-term benefit.

Desktop Database Applications

This is the most traditional type of database application. Several file-based database products have been around for many years. When I began working with medical billing software in the late 1980s, our application stored data in flat text files and the programmers wrote code that did a lot of the low-level work performed by database engines today. Most desktop database applications, even as early as the 1980s, used integrated database tools such as dBase, Clipper, Clarion, Paradox, FileMaker, and FoxPro. The most popular desktop database for Windows is Microsoft Access. Most modern database products, like Access, support variations of the SQL language.

Access will support a handful of concurrent network users. Because the database engine doesn't run as a server-hosted service, large data volumes and complex queries can easily create excessive network traffic. Even if the database file is located on a server, data is processed on the user's computer. The advantage of this option is its simplicity and low initial cost. The disadvantage is its lack of scalability and less-efficient query processing.

Microsoft Access includes a forms design environment to create data-centric user interfaces. More sophisticated and lighter-weight applications can also be created using Microsoft Visual Studio or other application development suites.

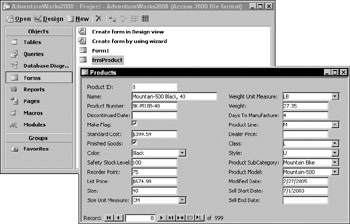

The Access database engine incorporates an application programming interface (API) called Data Access Objects (DAO). In recent years, Microsoft has all but discouraged the use of the Access JET database engine and DAO for programming. In its place, it has promoted the Microsoft SQL Server 2000 Desktop Engine (MSDE), a lightweight client/server database distributed with Access and Microsoft Office. The MSDE is being replaced by the SQL Server 2005 Express Edition. Even though the use of the traditional Access database is being deemphasized, the fact remains that it can actually be simpler and easier to use for creating small database solutions. Figure 14-1 shows an Access form opened from the Access database window. This form may be used for data entry, viewing, or modifying existing records.

Figure 14-1:

Client/Server Database Solutions

The most significant difference between this and the desktop database is that the database engine runs as a service on a file server. Requests sent to the database are processed on the server and only results are returned from queries. All query and database processing occurs on the database server. As previously mentioned, the MSDE database and SQL Server 2005 Express Edition are inexpensive options for getting started and running a small database on a desktop computer. MSDE databases have a hard limit of 10 concurrent connections.

When client/server databases came onto the scene in the mid 1990s, it was a common practice to pass ad-hoc SQL strings from the client application to the server. Although this practice causes a significant performance improvement over the client-side processing of desktop databases, it doesn't take advantage of some of SQL Server's query execution optimizations. By using stored procedures with views and user-defined functions, applications benefit from improved security and compiled execution plan caching.

This model takes advantage of the processing capabilities of two computers: the client and server. That's why it is also known as a two-tier solution. This is typically an effective solution for mid-size applications with users connected to a LAN. The client-side application is installed entirely on each user's desktop computer. Data access code routines connect directly to the back-end database server through an API layer, such as ActiveX Data Objects (ADO) or ADO.NET. The first native API for SQL Server was introduced for SQL Server 6.0. Roughly modeled after the existing DAO object model designed for Access/JET databases, Remote Data Objects (RDO) was built on top of Open Database Connectivity (ODBC), Microsoft's first database connectivity and driver standard. In the late 1990s, Microsoft introduced ADO, an upgraded API engineered to work more efficiently with SQL Server 7.0 and above, using the OLEDB connectivity standard. DAO, RDO, and ADO, along with their corresponding connectivity components, implemented Microsoft's original object-oriented programming and execution standard, known as the Component Object Model (COM). Microsoft's latest program execution model is the .NET Common Language Runtime (CLR). This supports updated objects used to connect through ODBC, OLEDB, and the .NET native SQL Server data provider (for SQL Server 7.0 and up).

Client/server applications exist at the entry-level of distributed solution models and have limited scalability. One common practice today is to design the system with three tiers and then deploy the middle-tier components on either the client or server computers, as illustrated in Figure 14-2. This way, if the solution needs to be scaled into a larger environment, it may simply be a matter of reconfiguring existing components to run on three computers. Although this option comes at an elevated initial cost, it may spare the additional cost of rewriting large portions of the application if the solution needs to be scaled up.

Figure 14-2:

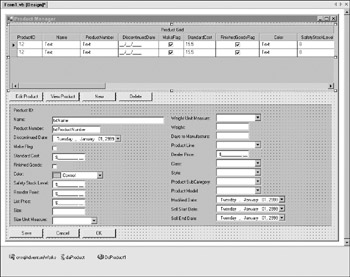

The client application contains the user interface. Figure 14-3 shows a Windows form in design view. Using Visual Studio, a variety of application types can be created to be used on the Windows desktop, in a web browser, at the command prompt, or on portable mobile devices.

Figure 14-3:

Specialized user interface controls give users a rich, interactive experience for entering and modifying record data. In this example, you can see a variety of controls that may be appropriate for different data types and fields. These include the following:

-

Drop-down list combo boxes

-

Check boxes

-

Masked currency text boxes

-

A color-picker drop-down list box

-

Date-picker drop-down list boxes

Some of these controls have associated event-handling code, which runs when certain activities are performed by the user. The most common example is the click event of a button. Programmers can write program logic using a programming language of their preference, such as C#, Visual C++, or Visual Basic.NET. The following simplified Visual Basic code might run when the Save button is clicked after a user enters or modifies a product record:

Private Sub btnSave_Click(ByVal sender As System.Object, _ ByVal e As System.EventArgs) Handles btnSave.Click ‘** Determine whether new or existing record If bNewRecord Then ‘** New record is being added ‘** Define and open a connection: Dim cn As New SqlClient.SqlConnection("Data Source= " _ & "Corp_DatabaseServer;Initial Catalog=AdventureWorks") cn.Open() ‘** define a command object for the Insert SQL: Dim cm As New SqlClient.SqlCommand cm.Connection = cn cm.CommandType = CommandType.Text cm.CommandText = "INSERT INTO Product (Name, ProductNumber, " _ & "DiscontinuedDate, MakeFlag, StandardCost) " _ & "SELECT ‘" _ & Me.txtName.Text & "‘, ‘" _ & Me.txtProductNumber.Text & "‘, ‘" _ & Me.dtpkDiscontinuedDate.Value & "‘, " _ & Me.chkMakeFlag.Checked.ToString & ", " _ & Me.txtcurStandardCost.Value.ToString ‘** Execute the SQL: cm.ExecuteNonQuery() ‘** Close the connection cn.Close() Else ‘** Existing record is being updated ‘** Define and open a connection: Dim cn As New SqlClient.SqlConnection("Data Source= " _ & "Corp_DatabaseServer;Initial Catalog=AdventureWorks") cn.Open() ‘** define a command object for the Insert SQL: Dim cm As New SqlClient.SqlCommand cm.Connection = cn cm.CommandType = CommandType.Text cm.CommandText = "UPDATE Product SET " _ & "Name = ‘" & Me.txtName.Text & "‘ ,’" & "ProductNumber = ‘" & & Me.txtProductNumber.Text & "‘, ‘" _ & "DiscontinuedDate = ‘" & Me.dtpkDiscontinuedDate.Value & "‘, " _ & "MakeFlag = " & Me.chkMakeFlag.Checked.ToString & "‘, " _ & "StandardCost = " & Me.txtcurStandardCost.Value.ToString _ & "WHERE ProductID = " & me.lblProductID.Text ‘** Execute the SQL: cm.ExecuteNonQuery() ‘** Close the connection cn.Close() End If End Sub

In this example, the actual Transact-SQL statements are assembled in the client code. This may seem to have the advantage of keeping all of the business logic in one place. However, this approach passes uncached ad-hoc SQL statements to the database server that will likely not perform as well as precompiled stored procedures. Allowing queries to be passed to the server on external connections can also be a security risk. This code is simple and relatively easy to maintain, but it may not be a very scalable solution.

n-tier Component Solutions

As two-tier solutions grew and began to run out of steam, many software designers looked for a way to take their applications to the next level. The great challenge was that Windows was designed to primarily run user-interactive applications on the desktop. Developing server-based components has long been difficult and expensive, using capabilities in the hands of large product vendors, not IT application developers. It took a few years for that to change. Visual Basic version 5.0 and 6.0 used COM and ActiveX technology, enabling software developers to create middle-tier components. Although writing components is pretty easy, configuring them to run on a server was quite a hassle in the beginning.

At first, software systems with this added component layer were known as three-tier solutions because the entire solution runs on three different physical layers: the database on the database server, middle-tier components on an application server, and the client application running on the desktop. Along with the capability to distribute the workload beyond two layers came the ability to extend the solution to four, five, or more separate computers (thus the term n-tier, rather than three-tier). One could argue that regardless of the number of servers or desktop computers, there are still conceptually only three tiers. Figure 14-4 depicts an n-tier component solution.

Figure 14-4:

Compared with the client-server example you looked at previously, the following demonstrates a more elegant solution. The three following samples are code snippets from a three-tier application. Using Figure 14-4 as a reference, we'll move from right to left. Note that not all fields are used just to keep these examples small and simple. I've made it a point to simplify this code to make it more readable.

Server-Side SQL Objects

In this model, all Transact-SQL is isolated to the database server as stored procedures, user-defined functions, and views. This provides a layer of security, keeps business logic close to the data, and is a very efficient use of database services and network resources.

/************************************************************** Adds new product record and returns new ProductID 3-21-06, Paul Turley Revisions: 3-23-06 Added error-handling script **************************************************************/ CREATE PROCEDURE spAddProduct @Name nVarChar(50) , @ProductNumbernVarChar(25) , @DiscontinuedDate DateTime , @MakeFlag Bit , @StandardCost Money , @FinishedGoodsFlag Bit , @Color nVarChar(15) , @SafetyStockLevel Int , @ReorderPoint Int , @ListPrice Money , @Size nVarChar(50) AS INSERT INTO Product ( Name , ProductNumber , DiscontinuedDate , MakeFlag , StandardCost , FinishedGoodsFlag , Color , SafetyStockLevel , ReorderPoint , ListPrice , Size ) SELECT @Name , @ProductNumber , @DiscontinuedDate , @MakeFlag , @StandardCost , @FinishedGoodsFlag , @Color , @SafetyStockLevel , @ReorderPoint , @ListPrice , @Size IF @@ERROR = 0 RETURN @@IDENTITY ELSE RETURN -1

Middle-Tier Component

The component code, written in Visual Basic.NET in this example, serves as a broker between the presentation layer and the database objects. In this layer, I focus entirely on business logic and don't concern myself with the details of the user interface or the implementation of data storage. Programming objects provide an abstract representation for data access objects such as connections, queries, parameters, and results. This way, application programmers don't concern themselves with different dialects of SQL or other specific requirements of any single data provider.

‘************************************************************* Product class provides object definition to work with product records and product-related maintenance. Methods: AddProduct() UpdateProduct() DeleteProduct() GetProductList() GetProductsByType() GetProducts() 3-23-06, Paul Turley Revisions: ‘************************************************************* Public Class Product Public Function AddProduct(ByVal Name As String, _ ByVal ProductNumber As String, _ ByVal DiscontinuedDate As Date, _ ByVal MakeFlag As Boolean, _ ByVal StandardCost As Decimal, _ ByVal FinishedGoodsFlag As Boolean, _ ByVal Color As Color, _ ByVal SafetyStockLevel As Integer, _ ByVal ReorderPoint As Integer, _ ByVal ListPrice As Decimal, _ ByVal Size As String) As Boolean Dim cn As New SqlClient.SqlConnection(sConnectionString) Dim cm As New SqlClient.SqlCommand Dim Param As SqlClient.SqlParameter Dim iProdID As Integer cm.Connection = cn cm.CommandType = CommandType.StoredProcedure cm.CommandText = "spAddProduct" ‘ ** Pass the function arguments/field values to proc. parameters: Param = New SqlClient.SqlParameter("Name", Name) Param.DbType = DbType.AnsiString cm.Parameters.Add(Param) Param = New SqlClient.SqlParameter("ProductNumber", ProductNumber) Param.DbType = DbType.AnsiString cm.Parameters.Add(Param) Param = New SqlClient.SqlParameter("DiscontinuedDate", DiscontinuedDate) Param.DbType = DbType.Date cm.Parameters.Add(Param) ‘ ** The rest of the parameters are handled here - abbreviated for demo** ‘ ** Execute the command/stored proc: iProdID = cm.ExecuteScalar ‘ ** Return True if successful: If iProdID > 0 Then Return True cn.Close() End Function ‘*** Other Functions to Update, Delete, Get products, etc.: Function UpdateProduct() As Boolean ‘*** End Function ‘*** DeleteProduct() ‘*** GetProductList() ‘*** GetProductsByType() ‘*** GetProducts() End Class

Presentation Layer

Compare the following code sample with that from the client-server sample. Rather than handling all of the data access and business logic in one chunk, I simply create objects based on the class defined in my middle-tier component. Using this object, I call methods (defined in Visual Basic as functions) and pass necessary values. The class method code performs data access and executes stored procedures in the database.

Private Sub btnSave_Click(ByVal sender As System.Object, _ ByVal e As System.EventArgs) Handles btnSave.Click ‘*** Create new instance of an object based on my custom Product class: Dim prod As New Product If bNewRecord Then ‘** New record is being added prod.AddProduct(Me.txtName.Text, Me.txtProductNumber.Text, _ Me.dtpkDiscontinuedDate.Value, Me.chkMakeFlag.Checked, ...) Else ‘** Existing record is being updated prod.UpdateProduct(Me.lblProductID.Text, Me.txtName.Text, _ Me.txtProductNumber.Text, Me.dtpkDiscontinuedDate.Value, ...) End If End Sub

Overall it takes a little more work to design a multi-tier application, but the result is a flexible, scalable solution that is easier to maintain as it grows.

Resource Pooling

Why is a three-tier solution so much more capable than a client/server application? If you have the same number of users running just about the same desktop application connected to the same database, what's the big advantage of having this middle layer? Adding the middle-tier doesn't just raise system capacity by one-third. The middle-tier becomes a broker for pooling and recycling resources. Between the database server and component layer, it enables connection-pooling, a feature that was built into ODBC years ago (but largely unused until component technology matured). The database server-side network library keeps a pool of connections active. Each connection will stay open, unused, for a few minutes at a time. When a client or component makes a new connection request, it simply recycles one of the existing idle connections in the pool. Because most operations only take a few seconds at most, this is an effective way to allow lots of clients to utilize a relatively small number of database connections. The catch is that every client has to use the same connection settings and security credentials.

Between the client and component layer, the application server enables object-pooling. In a similar way to connection-pooling, after a call is made to run the code (known as a method call) in a hosted business object component, a cached copy of the executable code remains in the application server's memory. There it waits for additional requests. Between object-pooling on the application server and connection-pooling on the database server, it's all just a matter of timing. As thousands of users use a few hundred pooled business objects, the business object code uses just a few dozen connections. . . You do the math.

Component Transaction Management

Large-scale applications not only use multiple components but may also use data stored in multiple databases. The ADO and ADO.NET programming objects contain hooks to SQL Server's Distributed Transaction Coordinator (DTC) service. This lets programmers manage and synchronize transactions between different data sources. The benefit is that even with data in separate databases, on separate servers, and even using different database products, application code can have transactional control over multiple operations. Imagine an application that manages the transfer of funds between two different banking systems. After verifying that the interrelated debits and credits are all successfully processed on each separate system, either all operations are explicitly rolled forward or all operations are rolled back.

With the capability to take advantage of all this computer horsepower, one significant barrier remained. COM technology (extended in the network-capable implementations, DCOM and COM+) was designed to run only on Microsoft Windows and Windows networks. These applications would support any number of users, so long as they were all clients on the same network, running Windows applications and components.

Today, both component development and component hosting are much easier than ever before. Microsoft's .NET Framework, integrated server, and development environments have improved upon all of the original features of COM and COM+. Though it's true that this style of large-scale application development takes a fair amount of application development expertise, now it's fairly easy to deploy and configure an enterprise-class component server.

Web Server Application

Desktop applications give users tactile control of data. Generally, applications respond quickly and users can see an immediate response when they perform an action. We've become accustomed to a variety of sophisticated user interface controls that are relatively universal across different systems. For example, most users know what to do when they are presented with a list box, drop-down combo box, a scrollbar, or grill control. However, one of the significant limitations to building custom Windows desktop applications is they must be preinstalled on each user's Windows computer. To share data, they must be connected through a LAN.

Web server applications can make data accessible to users across the Internet. User interface options include web pages or custom Windows components. Web services make data and application functionality available to custom applications from server to server or desktop to server, across the Internet. Web-based applications have improved significantly over the past few years, and although desktop applications continue to be more responsive and flexible than browser-based solutions, this gap continues to narrow as the Web has become a common medium for business applications. The unique characteristic of a web server application is that it runs on a web server, rather than on the user's desktop computer. All, or at least the vast majority, of the processing takes place on a central server running web server components. The user sees data and changing options as they interact with a user interface that is dynamically regenerated on the web server and sent back down to the user's web browser.

The advantage is that users need only a web browser and a connection to the Internet to use the application. Several challenges continue to be somewhat costly for solution developers. Compared to desktop solutions, performance and response time is slow. Web server applications typically display web pages using HTML, the mark-up language displayed by web browsers like Microsoft Internet Explorer. When a user clicks a button on a web page to retrieve a record, for example, this request is sent across the Internet to the web server, where code interacts with the database and server-side components. Code on the server modifies the HTML tags for the copy of the user's web page in the server's memory and then sends it back across the Internet to the user's browser, displaying a response to the user's request.

Programming web solutions is still a little more cumbersome than traditional applications but this has improved in recent years. Microsoft's web application programming standard matured significantly in 2001 when Active Server Pages (ASP) graduated to ASP.NET. Now using Visual Studio.NET, creating web server-based applications is a matter of dragging and dropping controls on a design surface and writing event code much like we've been doing to create desktop application interfaces using products such as Access and Visual Basic.

Data-bound web server components do much of the work of transforming data into an HTML-based user interface. To create a simple data sheet page to display records in a table format, the developer needs only to set properties for a few components and write a minimal amount of code. More sophisticated web applications may require more advanced-level coding. ASP.NET web components offer developers the flexibility of working at a lower level when they need to have more control or at a higher level to let the components do more of the work, to develop common applications rapidly. Many of the data access components generate volumes of Transact-SQL script for common operations. For example, when using drag-and-drop tools to generate a DataAdaptor object, a wizard dialog prompts the developer for a database table. From this, Transact-SQL script is generated to manage Select, Insert, Update, and Delete operations that are implemented using auto-generated programming code. The DataAdaptor wizard will also generate parameterized stored procedures in the database for managing these operations.

Multi-tier Web Service Solutions

In a web server model, the web server application really becomes the client to the database. Like a desktop application, the client can participate in a number of different application models. Simple web server applications may use a file-based database or a client/server database. A web server application can also execute code and use the features exposed by middle-tier components, making it a true three-tier application, with the client code running on the web server. Additionally, web applications can run script or separate components in the web browser, adding yet another layer to the model. To some degree it doesn't make a lot of sense to run custom components in the browser, because this really defeats the core objectives of a browser-based solution. However, using common client-side components can enhance the user experience and add more compelling content to web pages. It's common for web applications to make use of preinstalled client components such as Macromedia Flash, Windows Media Player, and the Adobe Reader.

Web servers can also act as application servers to host middle-tier components. One of the most exciting recent developments in component technology is the XML Web Service. Like a COM-based component, a web service can expose functionality for network clients. It can be used as a data source broker to route database requests and return results. The most compelling feature that makes this option so unique is that requests and results are sent as text using the Hypertext Transfer Protocol (HTTP). This means that a web service can be hosted by a web server and can communicate with different types of clients using the plumbing of the World Wide Web. Web services are based on industry-wide standards that finally make it a simple matter for applications running on one platform, or type of computer system, to work with those on a different platform.

The magic behind web services is a programming abstraction layer called Simple Object Application Protocol (SOAP). SOAP's job is to provide a standard for translating programming object calls into XML-formatted data on one end of the conversation and then back into objects on the other end. This means that programmers just write program code to work with objects. From a programmer's perspective, working with web services is much like working with earlier types of components.

Multi-system Integrated Solutions

In a perfect world (at least from a software architect and developer's point of view), all of our business systems should be designed from the ground up to integrate with each other, to exchange information efficiently, and to provide a seamless, unified experience for business users. This just doesn't happen in most businesses. Different systems serve different business users and processes. As business grows and processes evolve, users eventually need to access applications and systems designed for different groups in the organization. This leads to requirements for these systems to interoperate. The almost inevitable outcome is the realization that similar data stored in disparate systems is not stored or used in the same way. This presents a situation common in nearly all large businesses today: To support isolated users and processes, data gets transformed and reshaped in very specific ways, eventually creating scores of special-purpose and incompatible data stores and systems designed to meet some unique business need.

This organic growth of data-related systems can be better managed if database system architects can create flexibly designed databases that can serve multiple business applications. Applications may be designed to share functionality and data through standardized data interfaces and components. Application functionality and data can now be shared by different systems using data-exchange standards such as SOAP, RSS, and XML web services. Architecting an enterprise-wide application architecture may seem to be a daunting task, especially when integrating commercial application packages, but there are many options today that can make this much easier than before. If applications can't use shared data from a single database, moving and synchronizing copies of similar data can be achieved using resources such as Data Transformation Services, SQL Server 2005 Integration Services, and Biztalk Server.

Database professionals should keep a tight reign on systems that transform multiple copies of the same data for application and reporting use. Remember that information is the context of data — it's what it means and its significance to the business. When people start pushing copies of this data around, it will be easy to lose that context as this data is transformed back into information. This process should be carefully controlled and managed. The control and limitation of access to information is one thing. Every business has to have its own standards regarding information access (that are hopefully not unnecessarily restrictive). However, controlling the ability to change data and information is an entirely different matter. Ensuring that nothing is lost in the translation as data moves around the business will only serve to empower informed information users. This is often best achieved through IT-managed data marts and data warehouse databases that are accessible to all systems and users, who would otherwise be granted access to isolated data sources.

System Integration and Data Exchange

Large organizations manage lots of data. One common reason that large solutions may consist of different databases and applications is that each serves a specific purpose. Dividing data stores between transactional and decision-support systems is a common practice in large business environments. Different systems, each with its own databases, are designed to perform different business functions. Applications may use different database products.

Unfortunately, for system integrators, most specialized business systems aren't intended to integrate or share data with others. In a perfect world, all software would be designed to work together and share common data sources. The reality is that this ideal continues to be a far-off dream in most businesses. As we continue to reengineer disparate systems, we may inch a little closer to this objective in each iteration. For now, the best most of us can hope for are methods to ease the burden of exchanging data between systems.

In recent years, eXtensible Markup Language (XML) has evolved to become a common medium to help connect different databases and applications. XML is not a standard structure for data but a flexible set of standards to define almost any type of data. Unlike rigid data formatting standards of the past (such as EDS) XML allows data to be defined, stored, and documented in the same structure. This makes the data highly portable and easier to transform into that of another system. A number of supporting standards and products are now available to connect systems and synchronize data through the use of XML. Microsoft BizTalk Server allows multiple systems to easily interconnect and exchange data. Databases and specialized business systems can be integrated without cumbersome, manual intervention.

Project Management Challenges

I recently read that the FBI had commissioned a project to consolidate its many disparate databases and computer systems. After more than a billion dollars in expenses and consulting fees, the project is in shambles. Many business requirements had been revised and the project scope has been adjusted and expanded to accommodate changing needs and business practices. Budget constraints now threaten efforts to complete the work. Fingers are being pointed and bureaucrats are covering their tracks to avoid blame. Under public scrutiny and executive control, dark clouds of failure are looming.

On a slightly smaller scale, this is all too common. One of the greatest threats to the success of an IT project is time. The larger the scope of the project, the more time it takes to complete. This allows more opportunity for business rules to change. Even if a project is completed to the satisfaction of the original requirements, if it takes too long, requirements will have changed and the product may not address the current needs of the business.

On a recent consulting assignment, I experienced numerous challenges due to ever-changing scope and requirements. The client is a large technology company with plenty of project experience. In the grand scheme of the product, my component was consistently put on hold as requirements changed in other areas. Although beyond my control, the lack of finite deliverables can be a bit disconcerting.

The ideal solution for managing larger-scale projects is to break them down into manageable pieces with a manageable-sized project team. According to the Microsoft Solutions Framework, Microsoft's internal project management guidelines, teams should consist of no more than eight individuals. If a project requires more people than this, it should be broken down into smaller components. Teams may be divided by features, discipline, or release versions. The larger the project, often the less decision-making control each team member will have over individual components and requirements. This can be demoralizing and frustrating — all the more reason to establish clear requirements and avoid making changes until completing each stage.

EAN: 2147483647

Pages: 131