Designing SSIS Packages

When it comes to application development using comprehensive tools such as SSIS, there are usually more ways to design an application than you can count. In the next sections, you will learn an approach to use when starting to design SSIS applications.

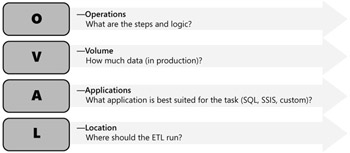

OVAL Principles of SSIS Package Design

Created as a development framework for SSIS packages, OVAL principles of package design encompass four facets of SSIS applications. These design considerations include identifying the Operations to be performed; the data Volume to be processed (in production); the Application of the right tools, tasks, sequence, and flow; and Location-determining where the SSIS application will run. Each of these facets and its elements is reviewed in the next sections and illustrated in Figure 14-1.

Figure 14-1: OVAL principles of performance

The operations facet of OVAL requires you to consider the steps and logic necessary for your application and how these map to your business processes. You will need to research and understand all operations required. For example, an operations flow can include:

-

Opening a transaction on SQL Server.

-

Reading data from the text file.

-

Loading data into the SSIS data flow.

-

Loading the data into SQL Server.

-

Committing the transaction.

Beware of hidden operations that might be happening external to your package processing design. Operations often include data conversion and determining when the best time is to perform this operation (that is, in either step 3 or step 10). Some operations might depend on processes external to SSIS, too.

Best practices design concerning data conversion is first to sharpen data types among your data sources and targets to meet your business requirements most efficiently and avoid unnecessary data type conversions. SSIS provides Fast Parse and Suggest Types In Flat File Source that work pretty well. Sometimes, you want to control data type strictly by size for performance processing and storage optimization. A default character size of 50 is often unnecessary, so take the time to reduce character columns appropriately. For example, what data type should you use for numeric data in a range from 1 to 200? Your choices include:

-

4 bytes (real).

-

3 bytes (string).

-

1 byte (byte).

To minimize memory buffers for your data flow and to reduce storage for data, you might opt for the single byte because it takes up the least space. It might seem insignificant to save three bytes, but when accumulating byte savings among numeric and character data, data type is very important and a key performance enhancement when you are dealing with large volumes of data.

The next facet of OVAL after you have defined your operations requirements is volume or, more specifically, how much data in production needs to be processed. The phrase "in production" is key here because often, when developing your application, you are dealing with subsets of production data that will not predict the application performance when executed against production volume data sources. You can also use data snapshots of production databases for your development and testing to simulate and benchmark performance.

Another best practices discipline is to remove redundant or unused columns from your data sources. This sounds obvious, but it is often not performed on the chance that the application might need the data at a future point. Paying the overhead of processing unused data columns through the SSIS engine and data pipeline is usually not worth it. Always use SELECT statements as opposed to tables when configuring a data source. Using a table source configuration is equivalent to using a SELECT * From…Where… statement, which is almost always considered bad practice. SELECT * is your enemy. The WHERE clause is your friend.

Another best practices approach is to remove redundant columns after every asynchronous component. For example, if you have derived new columns from source columns and no longer need the source columns, there is no need to pass these along through the data pipeline. Transformation components with asynchronous outputs are unique because they act as both destination and source components. These types of components receive rows from upstream components and add rows that are consumed by downstream components. No other data flow component performs both of these operations.

The columns from upstream components that are available to a component with synchronous outputs are automatically available to components downstream from the component. Therefore, a component with synchronous outputs does not have to define any output columns to provide columns and rows to the next component. Components with asynchronous outputs, on the other hand, must define output columns and provide rows to downstream components. Therefore, a component with asynchronous outputs has more tasks to perform during both design and execution time.

SSIS provides a Filter task for use in designing applications. If you can filter your rows in the WHERE clause of your data source, that is optimal for your design. Sometimes, however, you might need to filter rows farther into the data flow and, if so, use the Filter task.

In addition to the Filter task, SSIS provides the Conditional Split. Similar to the Filter task, the conditional split can be used to concatenate columns and re-route unneeded rows to optimize your data pipeline performance.

The next facet of OVAL for application design is to consider the application or, more specifically, what application options are best suited for the task to be performed. Should you use a SQL Server 2005 utility such as bulk copy program (BCP), an SSIS package, a custom program, or something else? Choosing which application component isn't always easy or obvious and might require some evaluation, testing, and even a pilot application model.

Given the power and performance of SQL Server 2005, it has become a best practice approach to use the database management system (DBMS) wherever suitable. Rather than reading data from multiple database tables within a single source database and using the Merge Join task within an SSIS package, it is significantly better to create DBMS views or stored procedures and exploit the power of the SQL Server database engine to prepare the data for other SSIS operations. Joining at the database level is one advantage, but you can also take advantage of eliminating Sort tasks, Conditional Splits, and Filter and Derived Columns tasks by including ORDER BY and basic data cleansing using ISNULL, NULLIF, and TRIM options.

Consider whether BCP is good enough for some of your application's data load requirements. The overhead of starting up an SSIS package can offset any performance gain over BCP for small data sets. Obviously, SSIS provides greater manageability and control than the BCP utility, so if control, monitoring, and error handling are key requirements, SSIS packages can be more desirable. Remember, you can generate an SSIS package for Bulk Import by using the Data Import Wizard within Microsoft SQL Server Management Studio.

The final OVAL facet for your consideration is location or, more specifically, deciding where your extract, transform, and load (ETL) and SSIS application packages and other components run. Sometimes, you really might not have many deployment options and, most often, you will need to comply with the SQL Server SSIS production server implementation characteristics of your system architecture. It is important to know whether your application will compete with other applications for resources. Knowing whether data conversion on Server1 will reduce or increase the volume of data transferred across the network could be important. Other considerations might be the impact and overhead of pulling large volumes of text data over for conversion. Your application target database and tables might reside on other servers. It's usually better for your source or target data to be local so that your application is as close to the data as possible to minimize network access. You might have to work with your systems administrator and SQL Server database administrator when determining the best production implementation options for your company.

Wherever you decide to locate your application for production, make sure you have enough memory; disk space; and optimized, stable network resources. If you have to stage data, consider using local RawFiles, if possible. These are specialized, high-performance files that perform faster than writing and reading data from SQL tables.

Sometimes, you will be requested to provide a baseline of your application's resource requirements. You can achieve this by replacing your application destinations with RowCounts, turning on logging, and using Stat Source. Stat Source will provide you with both the pipeline row count and the time it took to process the memory-buffered data. This technique can also help you change components incrementally to see the effects on processing and to optimize slow components for resources available on the production server.

Using SSIS Components in Your Design

Designing your SSIS application for multiple uses is a good way to minimize the number of packages you need to develop. One approach to achieve this is by using variables that can be configured at run time and enabling one package to run in different ways, depending on the variable values.

Input parameters in SQL commands, including queries and stored procedures, are mapped to variables that are created within the scope of the Execute SQL task or within the scope of the package. The values for variables can be set at design time or populated dynamically at run time.

The Execute SQL task uses the SQL syntax in which question marks (?) indicate parameters. For example, the following query uses two input parameters-one for the FirstName column and one for the LastName column-to select rows from the Contact table in the Adventure-Works database.

Copy CodeSELECT ContactID, Title, FirstName, MiddleName, LastName, EmailAddress FROM Person.Contact WHERE FirstName=? OR LastName=?

If you build the query using the Query Builder that SSIS provides, the question marks are automatically updated with parameter names, making the parameters easier to work with. The following query shows the parameter names.

Copy CodeSELECT ContactID, Title, FirstName, MiddleName, LastName, EmailAddress FROM Person.Contact WHERE (FirstName = @Param1) OR (LastName = @Param2)

To set parameter values, each parameter is mapped to a variable. Parameters are order dependent; they are assigned their value in the order that they appear in the query. In this example, the value of the variable mapped to the first input parameter is assigned to the FirstName column, and the variable mapped to the second input parameter is assigned to the LastName column.

There is limited use of variables inside the data flow of your packages, but you can add a variable as a column to the flow as well as parameterize the source query. For example, for the date range in RowChangeDate in the source system, set date range variables in the master package and pass them down to the child package.

When using the Row Count task, you write the row count to a variable. Other than row count, how do you set a variable in the data flow? Not so easily. Often, it is easier to write to a table or to a Recordset Destination and then reference that when needed.

Using Variables

Variables are a way to set values dynamically and control processes in packages, containers, tasks, and event handlers. Also, precedence constraints can use variables to control the direction of the flow of data to different tasks.

Each container has its own Variables collection. When a new variable is created, it is within the scope of its parent container. Because the package container is at the top of the container hierarchy, variables with package scope function, such as global variables, are visible to all containers within the package. The children of the container can also access the collection of variables through the Variables collection by using either the variable name or the variable's index in the collection.

Because the visibility of a variable is scoped from the top down, variables declared at the package level are visible to all the containers in the package. Therefore, the Variables collection on a container includes all the variables that belong to its parent in addition to its own variables. Conversely, the variables contained in a task are limited in scope and visibility and are visible only to the task.

If a package runs other packages, the variables defined in the scope of the calling package are available to the called package. The only exception occurs when a same-named variable exists in the called package. When this collision occurs, the variable value in the called package overrides the value from the calling package. Variables defined in the scope of the called package are never available back to the calling package.

Using the Lookup Task versus Merge Join

You now know that SSIS is a pipeline architecture to enable high-performance, memory-based transformation processing. Limiting the pipeline to just those columns of data required for the Lookup (that is, not selecting just the table and using a defined SELECT statement) will improve performance and reduce service and system resources. Caching on Lookup components will also improve processing speed. Figure 14-2 shows this principle.

Figure 14-2: Always use the SELECT statement for only the columns you need for the Lookup task

Lookup task components will generally work more quickly than Merge Join components where the two can be used for the same task. There are three configuration modes of operation for a Lookup task:

-

Full Cache - for small lookup datasets

-

No Cache - for volatile lookup datasets

-

Partial Cache - for large lookup datasets

The tradeoff of each approach is always memory usage versus performance. Full Cache is optimal but uses the most memory. Partial Cache configuration uses less memory than Full Cache but affects performance because it takes time to load the Partial Cache because it populates the cache on the fly, using singleton SELECT. No Cache uses no memory but takes the longest. An alternative is to use the Merge Join component instead. The catch is that it requires Sorted inputs, which then must use disk writes.

If possible, avoid using columns that might contain null values in lookup operations. If a column contains null values, configure the Lookup transformation to use an error output that directs rows that have no matching rows in the reference table to a separate transformation output. Alternatively, consider using full caching, which supports lookup operations on null values.

Using Database Snapshots

During testing, you might sometimes need to roll back your databases to their initial states and to perform this in an efficient manner. Using traditional Backup/Restore might also take too long. Long-running transactions are expensive to roll back, too, and the Delete Changes option from the database does not cover all scenarios. SQL Server 2005 provides the new Database Snapshot functionality. Using a specialized log that keeps track of changes-data and metadata-the Database Snapshot performs like an Undo for databases. You can roll back the database by using this log at any time. You might find that Database Snapshots can make development and testing very simple. This technique is a potential best practice for SSIS ETL application development.

Designing for Performance and Maintenance

From an overall package performance perspective, applying your application process to the right component is important. You have learned to use the control flow component for sequencing and to perform multiple iterations of a containerized task (data flow and others). Data flow components are for processing millions of rows of data. Given these combinations, it's important to avoid over-design. Too many moving parts are difficult to maintain and most often result in slow performance. Don't be afraid to experiment; there are many design approaches to meet your processing requirements.

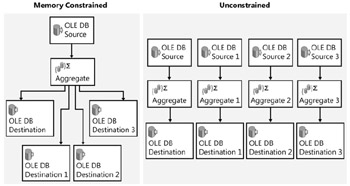

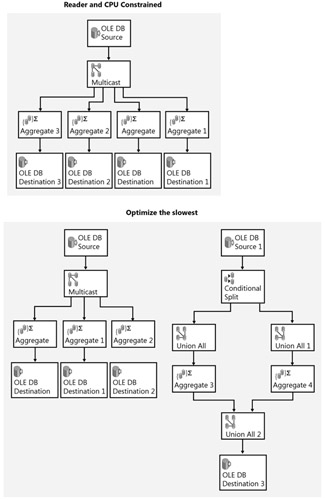

One thing to remember when designing SSIS applications for performance is to maximize parallelism. This can be designed in both control flow and data flow components. Control flow can launch multiple parallel packages, as in the case of master-child designs. Data flow components can parallel-process multiple data pipelines from single data sources or multiple parallel pipelines from multiple data sources.

When using parallel-design techniques, always remember to allocate enough threads for server processing. The EngineThreads property is found on the Data Flow task in the control flow component.

There are some SSIS processing tasks that will not always fit parallel design techniques. Figure 14-3 and Figure 14-4 show some ways to use parallel design for different options.

Figure 14-3: Optimizing from constrained to unconstrained processing using parallelism

Figure 14-4: Optimizing slowest component using parallelism

Using Fast Parse

Fast parse provides a fast, simple set of routines for parsing data. Fast parse supports only the most commonly used date format representations, does not perform locale-specific parsing, does not recognize special characters in currency data, and cannot convert hexadecimal or scientific representation of integers.

If the data flow in the package requires locale-sensitive parsing, standard parse is recommended instead of fast parse. For example, fast parse does not recognize locale-sensitive data that includes decimal symbols such as the comma, date formats other than year-month-date formats, and currency symbols.

Defining Your Best Practices

Early in your adoption of SSIS for application development, you will want to set your own standard layouts, guidelines, and procedures. Some common best practices design elements for SSIS packages to help make your development process effective, consistent, and easier to maintain include the following:

-

Use Row Counts to show whether you have all the rows expected.

-

Break out transformation logic specific to your environment.

-

Always configure to handle lookup errors (especially for when a fact table business key has no corresponding entry in the dimension table).

-

Always perform iterative design and development and testing methodology such as Agile, rapid application deployment (RAD), Extreme, and Microsoft Solutions Framework (MSF), which all promote modularity and short cycles.

-

Break complex, multisource, multidestination ETL into logically distinct packages (versus monolithic design).

-

Separate subprocesses within a package into separate containers. This is a more elegant, easier way to develop and allows you to disable whole containers simply when debugging.

-

Use Script Task/Transform for one-off problems.

-

Build custom components to reduce redundant script logic.

Theoretically, you could write one package to do it all. This is not recommended, but if you do, it is best to adopt verbose naming standards for your package elements and comment processes for easier understanding and maintenance.

Rather than create one giant package, many SQL Server SSIS designers create several packages representing distinct, logical units of work. These packages are then called from a master package that acts like the traffic director. The master package contains lots of Execute Package tasks referred to as child packages. A common design approach is to create child packages to process each target table within a multiple-table star schema data model.

Create a Master-Child Package

One way to manage the execution and process of packages is to create a master package that uses the Execute Package task. You can control the sequences of the package execution conditionally. You can even disable select packages from executing, if required. The following procedures show how to create a master package, designed by using SSIS.

Open Project and Build Packages

-

Open the Chp14.sln project in the C:\Documents and Settings\<username>\My Documents\Microsoft Press\is2005sbs\Chap14 folder.

-

The following steps detail creating a new Integration Services Project. Click New, and then click Project from the BIDS File menu. If opening Chp14.sln, these steps will have been completed already.

-

Right-click

Package.dtsx and rename this package

Package.dtsx and rename this package  MasterPackage.dtsx.

MasterPackage.dtsx. -

Right-click SSIS Packages and select New SSIS Package. Right-click this package, select Rename, and change the name to

Child1.dtsx.

Child1.dtsx. -

Right-click SSIS Packages and select New SSIS Package. Right-click this package, select Rename, and change the name to

Child2.dtsx.

Child2.dtsx. -

Right-click SSIS Packages and select New SSIS Package. Right-click this package, select Rename, and change the name to

Child3.dtsx.

Child3.dtsx.

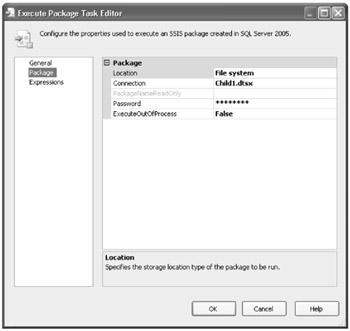

Add Execute Package Tasks

-

Double-click

MasterPackage.dtsx and drag an Execute Package task from the Control Flow Items group in the Toolbox to the Control Flow grid.

MasterPackage.dtsx and drag an Execute Package task from the Control Flow Items group in the Toolbox to the Control Flow grid. -

Double-click New Task to edit the properties.

-

Change the name to Execute Child 1.

-

Click the Package tab in the list on the left.

-

Set the location to File System.

-

Click in the Connection field and select <new connection> in the field drop-down list.

-

In the File Connection Manager Editor, set the Usage type to Existing File. Browse to C:\Documents and Settings\<username>\My Documents\Microsoft Press\is2005sbs\Chap14\Bin and select the

Child1.dtsx package file. Click OK twice.

Child1.dtsx package file. Click OK twice. -

Do not change the password.

Note Note that if the package were password protected, you would need to set the password at this point.

-

Set ExecutionOutOfProcess to False.

-

Your screen should look like this:

-

Click OK.

-

Copy and paste the Execute Child 1 task just below the original task in the same grid.

-

Connect Execute Child1 to the copied task by dragging the green arrow from one to the other.

-

Double-click the newly pasted task and change the task name to Execute Child 2.

-

Click the Package tab in the left pane, click in the Connection field, and select <New Connection> in the field drop-down list.

-

Set the Usage Type to Existing File, click the Browse button, and browse to C:\Documents and Settings\<username>\My Documents\Microsoft Press\is2005sbs\Chap14\Bin \Child2.dtsx.

-

Click Open, and then click OK twice.

-

Copy and paste the Execute Child 1 task just below the Execute Child 2 task in the same grid.

-

Connect Execute Child 2 to the copied task by dragging the green arrow from one to the other.

-

Double-click the newly pasted task and change the task name to Execute Child 3.

-

Click the Package tab in the left pane, click in the Connection field, and select <New Connection> in the field drop-down list.

-

Set Usage Type to Existing File, click the Browse button, and browse to C:\Documents and Settings\<username>\My Documents\Microsoft Press\is2005sbs\Chap14\Bin \Child3.dtsx.

-

Click Open, and then click OK twice.

-

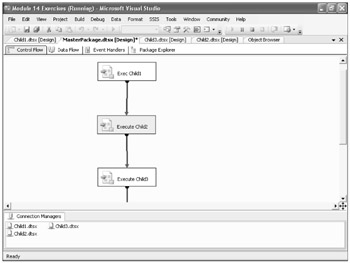

Your screen should look like this:

-

Click the Save All button on the toolbar.

Add Data Flow Tasks to the Child Packages

-

Double-click the

Child1.dtsx package, and then drag a Data Flow task from the Data Flow Items group in the Toolbox to the Control Flow grid.

Child1.dtsx package, and then drag a Data Flow task from the Data Flow Items group in the Toolbox to the Control Flow grid. -

Right-click the new task and rename it Child 1.

-

Double-click the Child 1 task and drag an OLE DB Source from the Data Flow Sources group in the Toolbox to the Data Flow grid.

-

Right-click the new source and rename it Employees.

-

Double-click the new source and select New to open the OLE DB Connection Manager.

-

Select localhost.is2005sbsDW from the Data Connections pane and click OK.

-

In the Name Of The Table Or View field, select [dbo].[dimEmployee], and then click OK.

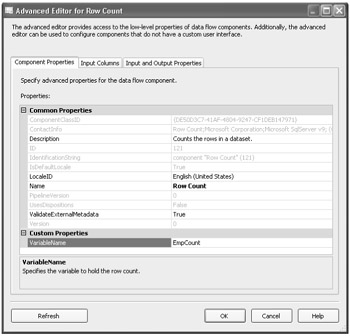

Add a Row Count Task and Variable

-

From the Data Flow Transformations group in the Toolbox, drag a Row Count onto the Data Flow grid.

-

Connect the Employees OLE DB Source to the new Row Count by dragging the green arrow from the source to the transformation.

-

Right-click the Row Count transformation and click Variables. Type the following new variable entries:

Name: EmpCount Scope: Child1 Data Type: Int32 Value: 0

-

Double-click the new Row Count transformation, type EmpCount in the VariableName field, and then click OK.

-

Your screen should look like this:

-

Double-click the

Child2.dtsx package, and then drag a Data Flow task from the Toolbox to the Control Flow grid.

Child2.dtsx package, and then drag a Data Flow task from the Toolbox to the Control Flow grid. -

Right-click the new task and rename it Child 2.

-

Double-click the Child 2 task and drag an OLE DB Source from the Toolbox to the Data Flow grid.

-

Right-click the new source and rename it Resellers.

-

Double-click the new source and select New to open the OLE DB Connection Manager.

-

Select localhost.is2005sbsDW from the Data Connections pane and click OK.

-

In the Name Of The Table Or View field, select [dbo].[dimReseller], and then click OK.

-

From the Toolbox, drag a Row Count Data Flow transformation onto the Data Flow grid.

-

Connect the Employees OLE DB Source to the new Row Count by dragging the green arrow from the source to the transformation.

-

Right-click the Row Count transformation and click Variables. Type the following new variables:

Name: ResellerCount Scope: Child2 Data Type: Int32 Value: 0

-

Double-click the new Row Count transformation, type ResellerCount in the Variable-Name field, and then click OK.

-

Double-click the

Child3.dtsx package, and then drag a Data Flow task from the Toolbox to the Control Flow grid.

Child3.dtsx package, and then drag a Data Flow task from the Toolbox to the Control Flow grid. -

Right-click the new task and rename it Child 3.

-

Double-click the Child 3 task and drag an OLE DB Source from the Toolbox to the Data Flow grid.

-

Right-click the new source and rename it Territories.

-

Double-click the new source and select New to open the OLE DB Connection Manager.

-

Select localhost.is2005sbsDW from the Data Connections pane and click OK.

-

In the Name Of The Table Or View field, select [dbo].[dimSalesTerritory], and then click OK.

-

From the Toolbox, drag a Row Count Data Flow transformation into the Data Flow grid.

-

Connect the Employees OLE DB Source to the new Row Count by dragging the green arrow from the source to the transformation.

-

Right-click the Row Count transformation and click Variables. Type the following new variables:

Name: TerritoryCount Scope: Child3 Data Type: Int32 Value: 0

-

Double-click the new Row Count transformation, type TerritoryCount in the Variable-Name field, and then click OK.

-

Double-click

MasterPackage.dtsx.

MasterPackage.dtsx. -

Copy Execute Child 1 - Execute Package, paste it below Execute Child 3, and then connect Execute Child 3 to the new Execute Child 1 - Execute Package 1 by dragging the green arrow from one to the other.

-

Click the Save All button on the Toolbar.

-

Right-click

MasterPackage.dtsx in Solution Explorer and select Execute Package.

MasterPackage.dtsx in Solution Explorer and select Execute Package. -

Your screen should look like this:

-

Click Stop Debugging from the Debug menu.

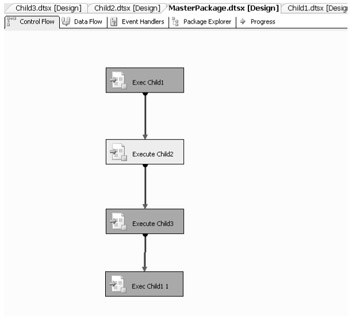

Disable an Execute Package Task

-

Right-click the Execute Child2 task and select Disable to skip this package in the master package.

Note When the master package is executed the next time, it will skip this task.

-

Save and execute the package.

-

Your screen should look like this:

-

While the package is executing, click the Progress tab of the

MasterPackage.dtsx to view the package execution sequence and see that the Execute Child 2 task has been skipped.

MasterPackage.dtsx to view the package execution sequence and see that the Execute Child 2 task has been skipped.

Organizing Package Components

In this section, you will learn the value of managing tasks, containers, sources, destinations, and other components when designing your packages. When you learned how to use BIDS, you learned about SSIS projects and their related components, data sources, data source views, packages, and so on. A best practices approach to SSIS application design is to use separate projects for the following types of applications:

-

Historical loads

-

Unusual events (such as backing out a load)

-

One-time resetting of data

Using Prefixes to Identify SSIS Package Components

A good design and management practice is to develop and use standard naming conventions for all your tasks and other components. You might want to consider using acronyms at the start of the name, and some suggestions for these acronyms are shown here. This approach might not help a great deal at design time when the tasks and components are easily identifiable by the designer, but it can be invaluable at debug time and run-time logging. For example, you can use an acronym such as DFT for a data flow task, so the name of a data flow task that populates a table called MyTable could be DFT Load MyTable. The tables in Figure 14-5 and Figure 14-6 provide a list of possible acronyms.

| Task | Prefix |

|---|---|

| For Loop Container | FLC |

| Foreach Loop Conatiner | FELC |

| Sequence Container | SEQC |

| ActiveX Script | AXS |

| Analysis Services Execute DDL | ASE |

| Analysis Services Processing | ASP |

| Bulk Insert | BLK |

| Data Flow | DFT |

| Data Mining Query | DMQ |

| Execute DTS 2000 Package | EDPT |

| Execute Package | EPT |

| Execute Process | EPR |

| Execute SQL | SQL |

| File System | FSYS |

| FTP | FTP |

| Message Queue | MSMQ |

| Script | SCR |

| Send Mail | SMT |

| Transfer Database | TDB |

| Transfer Error Messages | TEM |

| Transfer Jobs | TJT |

| Transfer Master Stored Procedures | TSP |

| Transfer SQL Server Objects | TSO |

| Web Service | WST |

| WMI Data Reader | WMID |

| WMI Event Watcher | WMIE |

| XML | XML |

Figure 14-5: SSIS task naming template sample

| Component | Prefix |

|---|---|

| DataReader Source | DR_SRC |

| Excel Source | EX_SRC |

| Flat File Source | FF_SRC |

| OLE DB Source | OLE_SRC |

| Raw File Source | RF_SRC |

| XML Source | XML_SRC |

| Aggregate | AGG |

| Audit | AUD |

| Character Map | CHM |

| Conditional Split | CSPL |

| Copy Column | CPYC |

| Data Conversion | DCNV |

| Data Mining Query | DMQ |

| Derived Column | DER |

| Export Column | EXPC |

| Fuzzy Grouping | FZG |

| Fuzzy Lookup | FZL |

| Import Column | IMPC |

| Lookup | LKP |

| Merge | MRG |

| Merge Join | MRGJ |

| Multicast | MLT |

| OLE DB Command | CMD |

| Percentage Sampling | PSMP |

| Pivot | PVT |

| Row Count | CNT |

| Row Sampling | RSMP |

| Script Component | SCR |

| Slowly Changing Dimension | SCD |

| Sort | SRT |

| Term Extraction | TEX |

| Term Lookup | TEL |

| Union All | ALL |

| Unpivot | UPVT |

| Data Mining Model Training | DMMT_DST |

| DataReader Destination | DR_DST |

| Dimension Processing | DP_DST |

| Excel Destination | EX_DST |

| Flat File Destination | FF_DST |

| OLE DB Destination | OLE_DST |

| Partition Processing | PP_DST |

| Raw File Destination | RF_DST |

| Recordset Destination | RS_DST |

| SQL Server Destination | SS_DST |

Figure 14-6: SSIS component naming template sample

You might also want to take the time to rename all default properties and descriptions for production applications using acronyms. This will help when debugging, particularly if the person doing the debugging is not the person who built the package originally. Some companies standardize naming and annotation, using a verbose method with full-named prefixes or suffixes. However, abbreviated forms are very common in programming disciplines. Whichever you choose, be consistent.

Defining Project Folders

When developing projects, you might also want to define a folder structure to keep all related packages and other files organized in the same place. Folder naming and location can be defined and determined based upon your company's needs. You might also want to apply security to these folders to ensure that only authorized SSIS development and administrative personnel have access for adding, updating, and deleting, especially in production environments.

Once you have completed the work to design, develop, and test your application in development and have migrated the application for execution within your production environment, a best practices discipline is to store the solution and project items in a secured Source Code Control system such as Microsoft Team Foundation. If you operate in a multideveloper environment, storing your SSIS application packages in VSS or similar systems enables controlled checkout and check-in of project components to prevent simultaneous modifications and overwrites. Also, VSS offers version control, so you can always retrieve previous versions to see how the project components have changed over time or even restore the project to a specific previous version if required to process data in a historical manner not available in the current production environment.

SSIS packages generate a variety of data files during execution, including Error log files, checkpoint files, error redirect files, and other types of package data files. Defining a standard location and naming convention for these types of components is another best practices discipline for SSIS. There are a number of advantages to this approach. All of your SSIS items (packages, raw files, and so on) will exist in a known, consistent, and secured place. All items of a certain type (for instance, checkpoint files) are stored in the same place. This also allows for consistency across development and production environments.

Other standard best practices are to comment your packages and scripts, using the annotation features within SSIS or directly within your script tasks. In two weeks, even you can forget a subtlety of your design. In the future, watch for new package documenting tools for SSIS from Microsoft.

Managing Performance and Debugging

You learned earlier, in Chapter 2, "Building Your First Package," that BIDS provides a comprehensive design and development platform that includes allowing you to run packages in debug mode and to trace processes and set breakpoints. When you migrate your packages to a production environment, they are executed outside of BIDS.

Managing Buffers and Memory

The SSIS engine and data pipeline uses a memory-based transformation process. The amount of memory used is allocated as buffers and is based on design-time metadata. Buffers are designed to hold the data from the pipeline data rows. This means that the width of a row (sum of size of all columns) affects the number of rows allowed within each buffer. This also means that smaller rows allow for more rows in memory, which means greater processing efficiency. Multiple buffers are allocated for the number of parallel processes in play.

You learned earlier that SSIS tasks include synchronous and asynchronous types. Synchronous task types (Derived Column, Data Conversions, and so on) reuse the same buffers. Alternatively, asynchronous tasks (Sort, Merge, Union, and so on) that must process all the rows before sending them on to the next task require more memory buffers and perform memory copies. Memory copies are expensive, so if you design ways around asynchronous tasks (SQL joins, and so on), you might reduce your application memory resource demand and improve overall performance.

Managing CPU Use

In addition to memory and buffer use, you can take advantage of multithreading SSIS applications to use multiple CPU SSIS servers. Thread allocation is managed by a thread pool distributed between data paths. Each Source can get a thread. Each Execution Path can get a thread, too. You use MaxEngineThreads on DataFlowTask to control parallelism. But remember, you need to have multiple CPU servers to exploit these capabilities.

Managing SSIS Application Deployment

You have learned about the many design considerations for SSIS applications and components. These next sections provide some approaches and techniques for managing and deploying your applications.

Designing for Deployment

Plan early for deployment. When defining your own policies and guidelines, consider simplifying your deployment processes from development to QA to production. List all the areas affected by this migration path.

Some of the considerations you need to address include determining how you will schedule your applications on the production machine. Also, you will need to determine how to synchronize all the SSIS and ETL processes. Deployment considerations most often have to comply with your SQL Server implementation of SSIS, SQL Server, and other production servers and their platform characteristics. Memory and resource use and availability are different between your development and production platforms.

Consider building in your security requirements from the start. Make note of the required production-level execution credentials and other sensitive information. You might need to work with your database administrators to determine who has access to production servers, files, folders, and data sources. You also want to secure the SSIS package components. Just because the database administrators have full control over the databases doesn't mean you will give them the ability to alter packages.

Using SSIS Package Configurations

Build in SSIS package configurations from the start to make things easier later on. Using package configuration is the best option because you will have the ability to reconfigure any component of your application from its development default values and authentication to its production versions.

Package configuration can be accomplished in a variety of ways, from flat files, to XML files, to using SQL Server database tables. One approach is to design the package to read an environment variable from the SSIS server operating system to get the location of the config file. The configuration file will provide the SSIS package with the connection string for Config Database and all values required for production execution. Package configuration provides the most flexible deployment process and makes deployment as easy as updating configurations.

Managing Multiple Schemas with SSIS Package Design

Like many SQL Server database environments, the use of database administrator-enforced naming conventions is common. You will want to learn and understand these for use within your SSIS package connection strings and for migration purposes. It is best practice to separate and group database objects visually for easy, fast identification and recognition. A good way to manage this naming standard is to use a scheme such as server.database.schema.object where schema is not a user ID.

Referred to as loose-coupled programming, assigning non-user ID schema names prevents references to named objects from breaking when a user leaves. All the required security mechanisms are still maintained with this approach. Such schemas are securable containers and are easier to manage and modularize. Some examples include:

-

dbo.Fact_Sales (Business data)

-

etl.Fact_Sales (ETL work table)

-

admin.up_SetLogicalDate(@date) (User Procedure)

-

util.uf_GetEndOfWeek(@date) (User Function)

-

etl.up_TruncateDimDate() (ETL Function)

Logging Reports

SSIS provides a variety of logging for tracing execution, tracking status, and so on. You might need to augment native functionality with custom logging for hybrid applications that combine SSIS packages with custom-coded modules. And although not technically a log, a good practice is always to use the error outputs on transforms to capture anomalies in addition to logging the status of errors during the package execution.

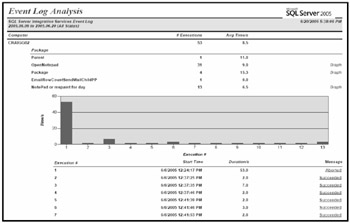

If you have installed SQL Server 2005 Reporting Services in addition to SQL Server and SSIS, you have the ability to generate SSIS server application log reports. SSIS server provides a comprehensive set of logged statistics for application packages it executes. You can obtain these pre-defined reports from the MSDN Web site at http://msdn2.microsoft.com/en-au/default.aspx. Search for the SSRS Report Pack, download it to your SQL Server Reporting Services server, and configure it to read your SSIS server event log. A sample SSIS report is shown here.

Figure 14-7: A sample SSIS report

EAN: 2147483647

Pages: 152