Appendix A: Matrix Algebra: An Introduction

Matrix algebra is one of the most useful and powerful branches of mathematics for conceptualizing and analyzing engineering, psychological, sociological, and educational research data. As research becomes more and more multivariate, the need for a compact method of expressing data becomes greater. Certain problems require that sets of equations and subscripted variables be written. In many cases, the use of matrix algebra simplifies and, when familiar, clarifies the mathematics and statistics. In addition, matrix algebra notation and thinking fit in nicely with the conceptualization of computer programming and use.

This appendix provides a brief introduction to matrix algebra. The emphasis is on those aspects that are related to subject matter covered in this series. Thus many matrix algebra techniques, important and useful in other contexts, are omitted. In addition, certain important derivations and proofs are neglected. Although the material presented here should suffice to enable you to follow the applications of matrix algebra in this series, especially Volumes III and V, it is strongly suggested that you expand your knowledge of this topic by studying one or more of the following texts: Dorf (1969), Green (1976), Hohn (1964), Horst (1963), Searle (1966), and Strang (1980).

BASIC DEFINITIONS

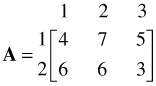

A matrix is an n-by-k rectangle of numbers or symbols that stand for numbers. The order of the matrix is n by k. It is customary to designate the rows first and the columns second. That is, n is the number of rows of the matrix and k the number of columns. A 2-by-3 matrix called A might be

Elements of a matrix are identified by reference to the row and column that they occupy. Thus, $a_{11}$ refers to the element of the first row and first column of A, which in the above example is 4. Similarly, $a_{23}$ is the element of the second row and third column of A, which in the above example is 3. In general, then, $a_{ij}$ refers to the element in row i and column j.
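The row-then-column convention can be sketched in Python. This is only an illustration: the text fixes just two entries of A (the first-row, first-column element is 4 and the second-row, third-column element is 3), so the other four entries below are assumed placeholders, and `element` is a hypothetical helper name.

```python
# Hypothetical 2-by-3 matrix stored as a list of rows.
# Only the entries 4 (row 1, column 1) and 3 (row 2, column 3) come from the
# text; the remaining four values are placeholders chosen for illustration.
A = [
    [4, 7, 1],
    [2, 5, 3],
]


def element(matrix, i, j):
    """Return the element in row i and column j, counting from 1 as the text does."""
    return matrix[i - 1][j - 1]


n_rows = len(A)      # n, the number of rows
n_cols = len(A[0])   # k, the number of columns

print(f"order: {n_rows} by {n_cols}")
print("a_11 =", element(A, 1, 1))
print("a_23 =", element(A, 2, 3))
```

Python lists are indexed from 0, which is why the helper subtracts 1 from each subscript before looking up the value.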

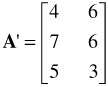

The transpose of a matrix is obtained simply by exchanging rows and columns. In the present case, the transpose of A, written A', is

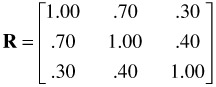
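Exchanging rows and columns is straightforward to sketch in Python. The matrix below is a hypothetical 2-by-3 example (only its first-row, first-column entry of 4 and its second-row, third-column entry of 3 are taken from the text), and `transpose` is an assumed helper name.

```python
def transpose(matrix):
    """Return a new matrix whose rows are the columns of the input."""
    return [list(column) for column in zip(*matrix)]


# Hypothetical 2-by-3 matrix; most entries are illustrative placeholders.
A = [
    [4, 7, 1],
    [2, 5, 3],
]

A_t = transpose(A)   # a 3-by-2 matrix

for row in A_t:
    print(row)

# Transposing twice recovers the original matrix.
print(transpose(A_t) == A)
```

The element in row i and column j of the original ends up in row j and column i of the transpose, so a 2-by-3 matrix becomes a 3-by-2 matrix.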

If n = k, the matrix is square. A square matrix can be symmetric or asymmetric. A symmetric matrix has the same elements above the principal diagonal as below the diagonal except that they are transposed. The principal diagonal is the set of elements from the upper left corner to the lower right corner. Symmetric matrices are frequently encountered in multiple regression analysis and in multivariate analysis. The following is an example of a correlation matrix, which is symmetric:
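In code, such a matrix and a symmetry check might be sketched as follows. Only the correlations .70 (variables 1 and 2) and .40 (variables 2 and 3) come from this section; the value .25 used for variables 1 and 3 is an assumed placeholder, and `is_symmetric` is a hypothetical helper name.

```python
def is_symmetric(matrix):
    """Return True if the matrix is square and equal to its own transpose."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        return False  # not square, so it cannot be symmetric
    # Compare each element above the principal diagonal with its mirror below.
    return all(
        matrix[i][j] == matrix[j][i]
        for i in range(n)
        for j in range(i + 1, n)
    )


# Correlation matrix for three variables.
# The entries 0.70 and 0.40 follow the text; 0.25 is an assumed placeholder.
R = [
    [1.00, 0.70, 0.25],
    [0.70, 1.00, 0.40],
    [0.25, 0.40, 1.00],
]

# A square matrix that is not symmetric, for contrast (values are arbitrary).
S = [
    [1, 2],
    [3, 1],
]

print(is_symmetric(R))
print(is_symmetric(S))
```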

Diagonal elements refer to correlations of variables with themselves, hence the 1's. Each off-diagonal element refers to a correlation between two variables and is identified by row and column numbers. Thus, $r_{12} = r_{21} = .70$; $r_{23} = r_{32} = .40$.

A column vector is an n-by-1 array of numbers. For example:

A row vector is a 1-by-n array of numbers:

b' = [8.0 1.3 -.20]

b' is the transpose of b. Note that vectors are designated by lowercase boldface letters, and that a prime is used to indicate a row vector.
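The relation between a column vector and its transpose can be sketched in Python by treating both as matrices with a single column or a single row. The values are those of b' given above; the names `b_row`, `b_col`, and `transpose` are assumptions made for this illustration.

```python
def transpose(matrix):
    """Return a new matrix whose rows are the columns of the input."""
    return [list(column) for column in zip(*matrix)]


# b' as a 1-by-n matrix: one row holding n numbers.
b_row = [[8.0, 1.3, -0.20]]

# b as an n-by-1 matrix: n rows holding one number each.
b_col = transpose(b_row)

print(b_col)
print(len(b_col), "by", len(b_col[0]))

# Transposing the column vector gives back the row vector.
print(transpose(b_col) == b_row)
```

Storing a vector as a nested list keeps its orientation explicit, at the cost of a little extra bracketing compared with a flat list.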

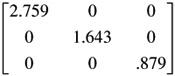

A diagonal matrix is frequently encountered in statistical work. It is simply a matrix in which some values other than zero are in the principal diagonal of the matrix, and all the off-diagonal elements are zeros. Here is a diagonal matrix:

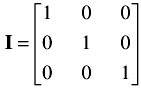
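A check for this property can be sketched in Python. The matrix `D` below uses arbitrary illustrative values, and `is_diagonal` is a hypothetical helper name; the check tests only that every off-diagonal element is zero.

```python
def is_diagonal(matrix):
    """Return True if the matrix is square and every off-diagonal element is zero."""
    n = len(matrix)
    if any(len(row) != n for row in matrix):
        return False  # a diagonal matrix must be square
    return all(
        matrix[i][j] == 0
        for i in range(n)
        for j in range(n)
        if i != j
    )


# Illustrative diagonal matrix; the diagonal values are arbitrary.
D = [
    [2.5, 0, 0],
    [0, 1.0, 0],
    [0, 0, 4.0],
]

# The principal diagonal runs from the upper left to the lower right corner.
principal_diagonal = [D[i][i] for i in range(len(D))]

print(is_diagonal(D))
print(principal_diagonal)
```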

A particularly important form of a diagonal matrix is an identity matrix, I, which has ones in the principal diagonal:
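A small Python sketch of such a matrix follows. The helper name `identity` and the choice of order 3 are assumptions made for illustration.

```python
def identity(n):
    """Return the n-by-n matrix with ones on the principal diagonal and zeros elsewhere."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]


I3 = identity(3)

for row in I3:
    print(row)
```

Because the rows and columns of this matrix are interchangeable, it is also symmetric.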
