3.2 Tasks and Modules


The simplest way to partition the software is to decompose it into functional units called modules. Each module performs a specific function. An Ethernet driver module is responsible for configuration, reception, and transmission of Ethernet frames over one or more Ethernet interfaces. A TCP module is an implementation of the TCP protocol.

A module can be implemented as one or more tasks. We distinguish between a module and a task as follows:

A module is a unit implementing a specific function. A task is a thread of execution.

A thread is a schedulable entity with its own context (stack, program counter, registers). A module can be implemented as one or more tasks (that is, multiple threads of execution), and a single task can host multiple modules. For example, the IP networking layer can be implemented as three tasks: an IP task, an ICMP (Internet Control Message Protocol) task, and an ARP (Address Resolution Protocol) task. Alternatively, the complete TCP/IP networking function can be implemented as a single task containing multiple modules: a TCP module, an IP module, and so on. The single-task approach is common in small-form-factor devices, where both memory requirements and context-switching overhead must be minimized.
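The single-task arrangement can be illustrated with a minimal sketch. It is written in Python for readability rather than in the language of any particular embedded system, and the names `ip_module`, `tcp_module`, `tcpip_task`, and the packet layout are hypothetical. The point it shows is that modules inside one task hand data to each other through ordinary function calls, so no context switch occurs between them.

```python
import queue

# Hypothetical module entry points: plain functions, not threads.
def ip_module(packet, log):
    log.append(("ip", packet))
    return packet["payload"]            # hand the payload up to TCP

def tcp_module(segment, log):
    log.append(("tcp", segment))

def tcpip_task(rx_queue, log):
    """One task (one thread of execution) hosting two modules."""
    while True:
        packet = rx_queue.get()
        if packet is None:              # sentinel value: stop the task
            break
        segment = ip_module(packet, log)    # ordinary function call,
        tcp_module(segment, log)            # no context switch in between

rx = queue.Queue()
rx.put({"payload": "segment-1"})
rx.put(None)
log = []
tcpip_task(rx, log)
```

To turn the modules into separate tasks instead, each function would run in its own thread with a queue between them, at the cost of a context switch per packet.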

3.2.1 Processes versus Tasks

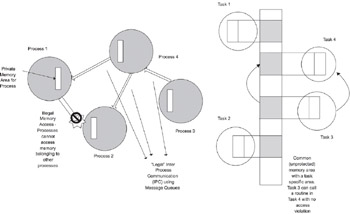

In desktop systems, a process represents the basic unit of execution. Though the terms process and task are often used interchangeably, the term “process” usually implies a thread of execution with its own memory protection and priority (see Figure 3.2). In embedded systems, the term “task” is encountered more often. Tasks do not have memory protection, so a task can access routines that “belong” to another task. Two tasks can also access the same global memory directly; separate processes cannot do so unless the operating system provides an explicit shared-memory mechanism.

Figure 3.2: Processes and tasks.

A process can also have multiple threads of execution. A thread is a lightweight unit of execution that shares access to global data with the other threads in the same process. Thread scheduling depends upon the system. Threads can be scheduled whenever their process is scheduled, using round-robin or thread-priority scheduling, or they can be part of a system-wide thread scheduling pool. A key point to note is:

In embedded systems with no memory protection, a task is the equivalent of a thread—so we can consider the software for such an embedded system as one large process with multiple threads.
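A minimal sketch of this "one process, many threads" view follows. Python threads stand in for embedded tasks here, which is an assumption made purely for illustration; the names `stats`, `stats_lock`, and `driver_task` are hypothetical. Both tasks update the same global data directly, which is exactly what the lack of memory protection allows, and it is also why the shared data needs explicit mutual exclusion.

```python
import threading

# Global data visible to every task in the (single) address space.
stats = {"frames_received": 0}
stats_lock = threading.Lock()       # shared data still needs protection

def driver_task(frame_count):
    for _ in range(frame_count):
        with stats_lock:            # serialize the read-modify-write
            stats["frames_received"] += 1

tasks = [threading.Thread(target=driver_task, args=(1000,)) for _ in range(2)]
for t in tasks:
    t.start()
for t in tasks:
    t.join()
```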

3.2.2 Task Implementation

Independent functions and those that need to be scheduled at different times can be implemented as tasks, subject to the performance issues related to context switching. Consider a system with eight interfaces, where four of the interfaces run Frame Relay, while the other four run PPP. The two protocols are functionally independent and can run as independent tasks.

Even when tasks depend upon each other, they may have different timing constraints. In a TCP/IP implementation, IP may require its own timers for reassembly, while TCP will have its own set of connection timers. The need to keep these timers independent and flexible can result in TCP and IP being implemented as separate tasks.
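The idea of independent timers can be sketched as each task owning its own tick-driven timer list. The `TaskTimers` class and the timer names below are hypothetical helpers, not part of any specific protocol stack; the sketch only shows that the IP and TCP timers advance and expire independently of each other.

```python
class TaskTimers:
    """Tick-driven timer list owned by a single task (hypothetical helper)."""

    def __init__(self):
        self._remaining = {}            # timer name -> ticks left

    def start(self, name, ticks):
        self._remaining[name] = ticks

    def cancel(self, name):
        self._remaining.pop(name, None)

    def tick(self):
        """Advance one tick and return the names of timers that expired."""
        expired = []
        for name in list(self._remaining):
            self._remaining[name] -= 1
            if self._remaining[name] <= 0:
                expired.append(name)
                del self._remaining[name]
        return expired

# Each task keeps its own timers, so each can tick at its own rate.
ip_timers = TaskTimers()
tcp_timers = TaskTimers()
ip_timers.start("reassembly:datagram-7", 3)
tcp_timers.start("retransmit:conn-1", 2)

tcp_first = tcp_timers.tick()       # nothing expires yet
tcp_second = tcp_timers.tick()      # the TCP timer expires
ip_first = ip_timers.tick()         # the IP timer is unaffected by TCP ticks
```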

In summary, once the software has been split into functional modules, the following questions are to be considered for implementation as tasks or modules:

1. Are the software modules independent, with little interaction? If yes, use tasks.

2. Is the context-switching overhead between tasks acceptable for normal operation of the system? If not, use modules as much as possible.

3. Do the tasks require their own timers for proper operation? If so, consider implementing them as separate tasks subject to 1 and 2.

3.2.3 Task Scheduling

There are two types of scheduling that are common in embedded communications systems. The first, and the most common in real-time operating systems, is preemptive priority-based scheduling, where a lower-priority task is preempted as soon as a higher-priority task becomes ready to run. The second type is non-preemptive scheduling, where a task continues to run until it decides to relinquish control. One way a task can make this decision is based on CPU usage: it checks whether its running time has exceeded a threshold and, if so, relinquishes control back to the task scheduler. While non-preemptive scheduling is less common, some developers prefer it because of the control it provides. However, for the same reason, this mechanism is not for novice programmers.
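The non-preemptive model with a CPU-usage threshold can be sketched as follows. This is a simulation under stated assumptions, not an RTOS implementation: Python generators stand in for tasks, running time is counted in abstract work units rather than measured from a clock, and the names `worker` and `run_cooperative` are hypothetical.

```python
from collections import deque

def worker(name, total_units, threshold, trace):
    """Do `total_units` of work, yielding voluntarily whenever `threshold`
    units have been used since the task was last scheduled."""
    used = 0
    for unit in range(total_units):
        trace.append(name)              # one unit of "work"
        used += 1
        if used >= threshold and unit < total_units - 1:
            used = 0
            yield                       # relinquish control to the scheduler

def run_cooperative(tasks):
    """Non-preemptive scheduler: a task runs until it yields or finishes."""
    ready = deque(tasks)
    while ready:
        task = ready.popleft()
        try:
            next(task)                  # runs until the task itself yields
            ready.append(task)          # more work left: back of the queue
        except StopIteration:
            pass                        # task finished

trace = []
run_cooperative([worker("A", 5, 2, trace), worker("B", 3, 2, trace)])
# trace is now ["A", "A", "B", "B", "A", "A", "B", "A"]
```

The scheduler never interrupts a task. If `worker` omitted its threshold check, the first task would run all of its units before any other task got the CPU, which is the risk this model places on the programmer.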

Several commercially available RTOSes use preemptive priority-based scheduling with a time slice for tasks of equal priority. Consider four tasks, A, B, C, and D, which need to be scheduled. If Tasks A, B, and C are at a higher priority than Task D, any of them can preempt D. With the time slice option, A, B, and C can still preempt Task D, but because the three tasks are of equal priority, they share the CPU among themselves. The time slice value determines how long one equal-priority task runs before it is scheduled out so that the next equal-priority task can run.
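The four-task example can be traced with a small tick-based simulation. This is an illustrative sketch, not the scheduler of any specific RTOS: the `simulate` function, the tuple layout, and the convention that a larger number means higher priority are all assumptions. Task D starts first; A, B, and C become ready one tick later, preempt D, and then share the CPU one time slice at a time.

```python
from collections import deque

def simulate(tasks, time_slice):
    """tasks: list of (name, priority, arrival_tick, work_ticks); a larger
    priority number means higher priority. Returns the task run per tick."""
    pending = deque(sorted(tasks, key=lambda t: t[2]))
    ready = {}                      # priority -> deque of [name, prio, left]
    timeline = []
    current, used, tick = None, 0, 0

    def highest_ready():
        levels = [p for p, q in ready.items() if q]
        return max(levels) if levels else None

    while pending or current or highest_ready() is not None:
        while pending and pending[0][2] <= tick:        # admit arrivals
            name, prio, _, work = pending.popleft()
            ready.setdefault(prio, deque()).append([name, prio, work])
        top = highest_ready()
        if current is not None and top is not None:
            if top > current[1]:                        # preemption
                ready[current[1]].appendleft(current)
                current = None
            elif top == current[1] and used >= time_slice:
                ready[current[1]].append(current)       # slice expired
                current = None
        if current is None and top is not None:
            current, used = ready[highest_ready()].popleft(), 0
        if current is None:
            timeline.append(None)                       # idle tick
        else:
            timeline.append(current[0])
            current[2] -= 1
            used += 1
            if current[2] == 0:                         # task completed
                current = None
        tick += 1
    return timeline

tasks = [("D", 1, 0, 3), ("A", 2, 1, 2), ("B", 2, 1, 2), ("C", 2, 1, 1)]
timeline = simulate(tasks, time_slice=1)
# timeline is ["D", "A", "B", "C", "A", "B", "D", "D"]
```

Passing `time_slice=float("inf")` models the same priorities without time slicing: each equal-priority task then runs to completion before the next one starts.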

For our discussions, we will assume hereafter the preemptive priority-based scheduling model. Time slicing is not assumed unless explicitly specified.
