Chapter 2: Software Considerations in Communications Systems

The previous chapter provided an introduction to communications systems and some of the issues related to the software on those systems. This chapter investigates software in greater detail, starting with an introduction to host-based communications along with a popular framework for building host-based communications software—the STREAMS environment.

Subsequently, we focus on embedded communications software detailing the real-time operating system (RTOS) and its components, device drivers and memory-related issues. The chapter also discusses the issues related to software partitioning, especially in the context of hardware acceleration via ASICs and network processors. The chapter concludes with a description of the classical planar networking model.

2.1 Host-Based Communications

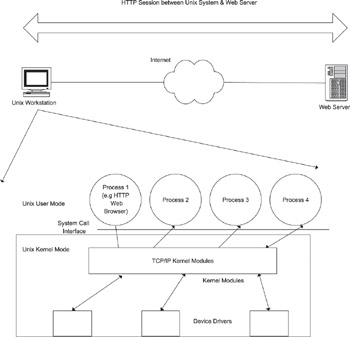

Hosts or end systems typically act as the source and destination for communications. When a user tries to access a Web site from a browser, the host computer uses the Hypertext Transfer Protocol (HTTP) to communicate with a Web server, typically another host system. The host system has a protocol stack comprising several of the layers in the OSI model. In the Web browser, HTTP is the application layer protocol communicating over TCP/IP with the destination server, as shown in Figure 2.1.

Figure 2.1: Web browser and TCP/IP Implementation in Unix

2.1.1 User and Kernel Modes

Consider a UNIX™-based host that provides the functionality of an end node in an IP network. In UNIX, a process executes in one of two modes: user mode or kernel mode. User applications run in user mode, where they can be preempted and scheduled in or out. The operating system runs in kernel mode, where it has access to the hardware. In user mode, memory protection is enforced, so a user process cannot overwrite kernel memory and thereby crash or corrupt the system. Processes in this mode cannot access the hardware directly; they do so only through system calls, which in turn access the hardware. Kernel mode is, effectively, a “super user” mode. System calls execute in kernel mode, while the calling user process is suspended waiting for the call to return.

For data to be passed from user to kernel mode, it is first copied from the user-mode address space into the kernel-mode address space. When the system call completes and data is to be passed back to the user process, it is copied from kernel space to user space. In the UNIX environment, the Web browser is an application process running in user mode, while the complete TCP/IP stack runs in kernel mode. The browser uses the socket call to create a communication endpoint and the send call to copy data into the TCP protocol stack’s context in the kernel, where it is encapsulated into TCP segments and passed down to the IP layer.
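The copy semantics described above can be observed from user space. The following is a minimal sketch, not browser code: the function name `send_copy_demo` is hypothetical, and a loopback TCP connection on 127.0.0.1 stands in for the connection to a Web server. It uses only standard BSD socket calls and shows that once `send()` returns, the kernel holds its own copy of the data, so overwriting the user buffer does not change what the peer receives.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical demo: send "hello" over a loopback TCP connection, overwrite
 * the sender's user-space buffer as soon as send() returns, then receive.
 * On success, out holds what the kernel delivered and 0 is returned. */
int send_copy_demo(char out[6])
{
    int lfd = -1, cfd = -1, afd = -1, rc = -1;
    struct sockaddr_in addr;
    socklen_t alen = sizeof addr;
    char buf[6] = "hello";

    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0;                      /* let the kernel pick a free port */

    if ((lfd = socket(AF_INET, SOCK_STREAM, 0)) < 0 ||
        bind(lfd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(lfd, 1) < 0 ||
        getsockname(lfd, (struct sockaddr *)&addr, &alen) < 0)
        goto done;

    if ((cfd = socket(AF_INET, SOCK_STREAM, 0)) < 0 ||
        connect(cfd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        (afd = accept(lfd, NULL, NULL)) < 0)
        goto done;

    /* A blocking send() returns once the bytes have been copied from buf
     * into a kernel socket buffer; buf is then free for reuse. */
    if (send(cfd, buf, 5, 0) != 5)
        goto done;
    memset(buf, 'X', 5);                    /* does not affect data in flight */

    if (recv(afd, out, 5, MSG_WAITALL) != 5)
        goto done;
    out[5] = '\0';
    rc = 0;
done:
    if (afd >= 0) close(afd);
    if (cfd >= 0) close(cfd);
    if (lfd >= 0) close(lfd);
    return rc;
}
```

Calling `send_copy_demo(buf)` should leave `"hello"` in `buf`, not `"XXXXX"`, because the kernel copied the data during `send()`. That copy is exactly the user/kernel overhead discussed next.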

There is no hard-and-fast requirement for the TCP/IP stack to reside in the kernel; it is placed there for performance and for interface simplicity. In the kernel, the entire stack can run as a single thread, which avoids switching between separate processes for each protocol layer. Since the TCP/IP stack serves multiple applications, each of which may be a separate user-mode process, implementing the stack in the kernel gives all applications a single, crisp interface. However, since memory protection is enforced between user and kernel modes, every transfer across the boundary incurs the overhead of an additional copy, which reduces overall system performance.

Some host operating systems, such as DOS, and some embedded real-time operating systems (RTOSes) do not enforce memory protection between processes. While this results in faster data interchange, it is not a reliable approach: user processes could overwrite operating system data or code and cause the system to crash. This is similar to adding an application layer function into the kernel in UNIX. Because of the system-wide ramifications, such functions need to be simple and well tested.

2.1.2 Network Interfaces on a Host

Host system network interfaces are usually realized via an add-on card or an onboard controller. For example, a host could have an Ethernet network interface card (NIC) installed in a PCI (Peripheral Component Interconnect) slot as its Ethernet interface. The Ethernet controller chip on the NIC performs Media Access Control (MAC) as well as the transmission and reception of Ethernet frames. It also transfers frames to and from host memory across the PCI bus using Direct Memory Access (DMA).

For reception, the controller is programmed with the start address in memory where the first frame is to be transferred, along with the default (maximum) size of a frame. For standard Ethernet, this is 1518 bytes, including headers and the Cyclic Redundancy Check (CRC). The controller performs the DMA of the received Ethernet frame into this area of memory. Once the frame transfer is complete, the actual size of the frame (less than or equal to 1518 bytes) is written into a header on top of the frame. At this stage, the Ethernet driver software needs to copy the data from the DMA area into memory accessible to both the driver and the higher layers. This ensures that subsequent frames from the controller do not overwrite previously received frames.
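The receive sequence above can be sketched in C. This is an illustration under stated assumptions, not a real driver: the `struct rx_area` layout, the `done` flag, and the functions `fake_controller_rx` and `driver_rx_copy` are all hypothetical, and the "controller" is simulated in software instead of performing a real DMA. Real controllers define their own descriptor formats in their data sheets.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define ETH_MAX_FRAME 1518   /* max untagged frame incl. headers and CRC */

/* Hypothetical DMA receive area: the controller writes the frame into
 * data[] and then fills in the header fields on top of it. */
struct rx_area {
    uint16_t length;          /* actual frame size, <= ETH_MAX_FRAME */
    uint16_t done;            /* set when the (simulated) DMA completes */
    uint8_t  data[ETH_MAX_FRAME];
};

/* Software stand-in for the controller: "DMA" the frame into the area,
 * then post its actual length. Returns 0, or -1 if the frame is too big. */
int fake_controller_rx(struct rx_area *a, const uint8_t *frame, size_t len)
{
    if (len > ETH_MAX_FRAME)
        return -1;
    memcpy(a->data, frame, len);
    a->length = (uint16_t)len;
    a->done = 1;
    return 0;
}

/* Driver side: copy a completed frame out of the DMA area into a buffer
 * the driver and higher layers can keep, then hand the area back so the
 * next frame can land there. Returns the copy (caller frees) or NULL. */
uint8_t *driver_rx_copy(struct rx_area *a, size_t *len)
{
    if (!a->done)
        return NULL;                     /* no completed frame yet */
    assert(a->length <= ETH_MAX_FRAME);
    uint8_t *copy = malloc(a->length);
    if (copy == NULL)
        return NULL;
    memcpy(copy, a->data, a->length);
    *len = a->length;
    a->done = 0;                         /* area may now be reused */
    return copy;
}
```

After `driver_rx_copy()` returns, a second frame written into the same area leaves the first frame's copy untouched, which is the property the paragraph above calls for.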

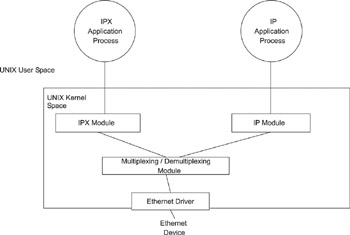

In the UNIX example, the Ethernet driver interfaces to the higher layers through a multiplexing/demultiplexing module. This module reads the protocol type field (bytes 13 and 14) in the Ethernet frame header and hands the frame off to the appropriate higher layer protocol module in the kernel. In a host implementing both IP and IPX, IP packets (protocol type 0x0800) are handed off to the IP module, while IPX packets (protocol type 0x8137) are handed off to the IPX module in the kernel (see Figure 2.2). These modules then process the packets and pass the data on to applications in user space. Frame transmission is implemented in a similar manner, in the reverse direction.

Figure 2.2: Unix Host implementing IP and IPX
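The demultiplexing step can be illustrated with a short sketch. The names `eth_demux`, `enum proto`, and the `PROTO_*` values are hypothetical; a kernel module would call the IP or IPX module's entry point instead of returning an enum. The sketch only handles the Ethernet II type field described in the text, read from bytes 13 and 14 (offsets 12 and 13 when counting from zero) in network byte order.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define ETHERTYPE_IP  0x0800
#define ETHERTYPE_IPX 0x8137

enum proto { PROTO_UNKNOWN, PROTO_IP, PROTO_IPX };

/* The type field is big-endian, at zero-based offsets 12 and 13. */
static uint16_t eth_type(const uint8_t *frame)
{
    return (uint16_t)((frame[12] << 8) | frame[13]);
}

/* Hypothetical demultiplexer: decide which higher layer module should
 * receive the frame, based only on the Ethernet type field. */
enum proto eth_demux(const uint8_t *frame, size_t len)
{
    if (len < 14)                         /* shorter than an Ethernet header */
        return PROTO_UNKNOWN;
    switch (eth_type(frame)) {
    case ETHERTYPE_IP:  return PROTO_IP;
    case ETHERTYPE_IPX: return PROTO_IPX;
    default:            return PROTO_UNKNOWN;
    }
}
```

For a frame whose bytes 13 and 14 are `0x08 0x00`, `eth_demux` returns `PROTO_IP`; for `0x81 0x37` it returns `PROTO_IPX`.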

2.1.3 STREAMS Architecture

A modular approach permits individual modules to be developed without dependencies on other modules. In the UNIX example, the IP module in the kernel may be developed independently of the Ethernet driver module, provided the interfaces between the two modules are clearly defined. When both modules are ready, they can be combined and linked together. While this maps well to the OSI model, an additional level of flexibility can be provided by adding and dropping functional modules while the system is running. The most common example of this is the STREAMS programming model, which is used in several UNIX hosts to implement network protocol stacks within the kernel.

The STREAMS programming model was first specified in AT&T UNIX in the 1980s. It is now used in several UNIX systems and in some real-time operating systems (RTOSes) as well. STREAMS uses a model similar to the OSI layering model and provides the ability to create and manipulate STREAMS modules, which are typically protocol processing blocks with clear interfaces.

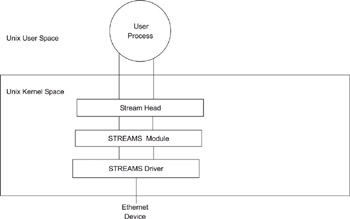

The model consists of a set of kernel-resident system calls, kernel resources, and utility routines that facilitate duplex processing and data paths between kernel mode and a user process. The fundamental unit of STREAMS is a stream, a full-duplex path used for data transfer between a user process and a driver in kernel space. A stream can be decomposed into three parts: a stream head, zero or more modules, and a driver.

Figure 2.3 shows the basic composition of a stream. The stream head is closest to the user process. Each module has fixed functionality and interfaces to its adjacent layers (the stream head, another module, or the driver). A module can be dynamically pushed onto or popped from the stream by user action, making this architecture well suited to the layering associated with communications protocols.

Figure 2.3: Stream components.
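To make the push/pop behavior concrete, here is a toy user-space model. It is NOT the kernel STREAMS API: `struct module`, `struct stream`, `stream_push`, `stream_pop`, `stream_write`, and the two modules are hypothetical names invented for illustration. It shows the one property that matters here: a newly pushed module sits immediately below the stream head, so downstream messages pass through it first, and popping removes that topmost module.

```c
#include <assert.h>
#include <string.h>

#define MAX_MODULES 8

/* Toy model: a module is a name plus a write-side put routine that
 * processes a message on its way downstream. */
struct module {
    const char *name;
    void (*wput)(char *msg, size_t cap);
};

struct stream {
    struct module *mods[MAX_MODULES];   /* mods[0] sits just below the head */
    int nmods;
};

/* Push: the new module goes immediately below the stream head. */
int stream_push(struct stream *s, struct module *m)
{
    if (s->nmods == MAX_MODULES)
        return -1;
    memmove(&s->mods[1], &s->mods[0], (size_t)s->nmods * sizeof s->mods[0]);
    s->mods[0] = m;
    s->nmods++;
    return 0;
}

/* Pop: remove the module immediately below the stream head. */
int stream_pop(struct stream *s)
{
    if (s->nmods == 0)
        return -1;
    s->nmods--;
    memmove(&s->mods[0], &s->mods[1], (size_t)s->nmods * sizeof s->mods[0]);
    return 0;
}

/* Downstream: stream head -> each module in order -> driver. */
void stream_write(struct stream *s, char *msg, size_t cap)
{
    for (int i = 0; i < s->nmods; i++)
        s->mods[i]->wput(msg, cap);
    /* A driver would transmit msg here; this model leaves it in place. */
}

/* Prepend tag to the NUL-terminated msg if it fits in cap bytes. */
static void prepend(char *msg, size_t cap, const char *tag)
{
    size_t tl = strlen(tag), ml = strlen(msg);
    if (tl + ml + 1 > cap)
        return;                          /* no room: leave msg unchanged */
    memmove(msg + tl, msg, ml + 1);      /* shift text and its NUL right */
    memcpy(msg, tag, tl);
}

/* Two hypothetical modules, each adding its own "header". */
static void upper_wput(char *msg, size_t cap) { prepend(msg, cap, "U|"); }
static void lower_wput(char *msg, size_t cap) { prepend(msg, cap, "L|"); }

struct module upper_mod = { "upper", upper_wput };
struct module lower_mod = { "lower", lower_wput };
```

Pushing `lower_mod` and then `upper_mod`, then writing `"data"`, yields `"L|U|data"`: the upper module adds its header first, and the lower module wraps it, much like encapsulation in a protocol stack. After one `stream_pop`, the same write yields `"L|data"`.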

Each module has a “read” side and a “write” side. Messages traveling from the write side of a module to the write side of the adjacent module, toward the driver, are said to travel downstream. Similarly, messages traveling on the read side, toward the stream head, are said to travel upstream. A queue is defined on each of the read and write sides for holding messages in the module. This is usually a First In First Out (FIFO) queue, but priority values can be assigned to messages, permitting priority-based queuing. Library routines are provided for operations such as buffer management, scheduling, and the asynchronous operation of STREAMS and user processes for efficiency.
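Priority-based queuing can be sketched as an ordered insertion into a linked list. This models the band-ordering idea only: the simplified `struct msg` and the functions `q_put` and `q_get` are hypothetical and are not the real STREAMS queue routines. The assumed rule is that messages with a higher band are queued ahead of lower-band messages, while messages within the same band stay in FIFO order.

```c
#include <assert.h>
#include <stddef.h>

/* Simplified message: only the fields needed to show queue ordering. */
struct msg {
    struct msg   *next;
    unsigned char band;      /* 0 = normal; higher bands are served first */
    int           id;
};

struct queue {
    struct msg *head;
};

/* Insert m behind every message whose band is >= m->band, so higher
 * bands move toward the front and equal-band messages remain FIFO. */
void q_put(struct queue *q, struct msg *m)
{
    struct msg **pp = &q->head;
    while (*pp != NULL && (*pp)->band >= m->band)
        pp = &(*pp)->next;
    m->next = *pp;
    *pp = m;
}

/* Remove and return the message at the front, or NULL if empty. */
struct msg *q_get(struct queue *q)
{
    struct msg *m = q->head;
    if (m != NULL)
        q->head = m->next;
    return m;
}
```

Enqueuing messages with bands 0, 0, 2, 1 (ids 1 to 4) and then dequeuing returns ids 3, 4, 1, 2: the band 2 message first, then band 1, then the two band 0 messages in arrival order. With every band left at 0, the queue degenerates to plain FIFO.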

STREAMS Messages

A message queue consists of multiple messages. Each message consists of one or more message blocks (msgb), and each message block points to a data block (datab). The structures of a message block and a data block are shown in Listing 2.1. Most communications systems use a similar two-level scheme to describe data transfer units. The db_ref field permits multiple message blocks to point to the same data block, as in the case of multicast, where the same message may be sent out on multiple ports. Instead of making multiple copies of the message, multiple message blocks are allocated, each pointing to the same data block. When one of these message blocks is released, STREAMS decrements the reference count in the data block instead of freeing the data block; only when the count reaches zero is the data block freed. This scheme saves memory and improves performance.

STREAMS is important because it was the first host-based framework to support protocol stacks and modules that could be dynamically loaded and unloaded during program execution. This is especially valuable on systems where any change to the kernel would otherwise require a kernel rebuild.

Listing 2.1: STREAMS message and data block structures.

    struct msgb {
        struct msgb    *b_next;   /* Ptr to next msg on queue */
        struct msgb    *b_prev;   /* Ptr to prev msg on queue */
        struct msgb    *b_cont;   /* Ptr to next message block of this msg */
        unsigned char  *b_rptr;   /* Ptr to first unread byte */
        unsigned char  *b_wptr;   /* Ptr to first byte to write */
        struct datab   *b_datap;  /* Ptr to data block */
        unsigned char   b_band;   /* Message priority */
        unsigned short  b_flag;   /* Flag used by stream head */
    };

The data block organization is as follows:

    struct datab {
        unsigned char *db_base;   /* Ptr to first byte of buffer */
        unsigned char *db_lim;    /* Ptr to last byte (+1) of buffer */
        dbref_t        db_ref;    /* Reference count, i.e. # of ptrs */
        unsigned char  db_type;   /* Message type */
    };
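The reference-counting scheme can be sketched in a few lines. Real STREAMS implementations provide `allocb()`, `dupb()`, and `freeb()` for this purpose; the functions below are simplified, hypothetical stand-ins (`sketch_allocb`, `sketch_dupb`, `sketch_freeb`) operating on cut-down versions of the structures in Listing 2.1, so that the sharing logic is visible without kernel details.

```c
#include <assert.h>
#include <stdlib.h>
#include <string.h>

/* Cut-down stand-ins for struct datab and struct msgb (Listing 2.1). */
struct sdatab {
    unsigned char *db_base;
    unsigned char *db_lim;
    unsigned int   db_ref;       /* number of message blocks pointing here */
};

struct smsgb {
    unsigned char *b_rptr, *b_wptr;
    struct sdatab *b_datap;
};

/* Allocate a size-byte buffer, its data block, and one message block. */
struct smsgb *sketch_allocb(size_t size)
{
    struct sdatab *db = malloc(sizeof *db);
    struct smsgb *mp = malloc(sizeof *mp);
    unsigned char *buf = malloc(size ? size : 1);
    if (!db || !mp || !buf) {
        free(db); free(mp); free(buf);
        return NULL;
    }
    db->db_base = buf;
    db->db_lim = buf + size;
    db->db_ref = 1;
    mp->b_rptr = mp->b_wptr = buf;
    mp->b_datap = db;
    return mp;
}

/* Share the data block with a new message block instead of copying it,
 * e.g. to send one multicast frame out of several ports. */
struct smsgb *sketch_dupb(struct smsgb *mp)
{
    struct smsgb *np = malloc(sizeof *np);
    if (np == NULL)
        return NULL;
    *np = *mp;                           /* same rptr/wptr, same data block */
    np->b_datap->db_ref++;
    return np;
}

/* Release a message block; free the data block only on the last reference. */
void sketch_freeb(struct smsgb *mp)
{
    struct sdatab *db = mp->b_datap;
    free(mp);
    if (--db->db_ref == 0) {
        free(db->db_base);
        free(db);
    }
}
```

Duplicating a block raises `db_ref` to 2 without copying the payload; freeing one of the two message blocks drops the count back to 1, and the buffer survives until the last holder calls `sketch_freeb`.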

STREAMS buffer management will be discussed in greater detail in Chapter 6.

2.1.4 Socket Interface

The most common interface between user-mode applications and kernel-mode protocol stacks is the socket interface, originally introduced in 4.2BSD UNIX. The socket API isolates higher layer applications from the implementation of the protocol stacks. A socket is an endpoint of communication between two processes, irrespective of whether they are on the same machine or on different machines. The transport mechanism for communication between two sockets can be TCP over IPv4 or IPv6, UDP over IPv4 or IPv6, and so on. A “routing” socket is also useful for tasks such as setting entries in the kernel routing table.
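As a brief illustration of how the transport is selected, the sketch below uses `AF_INET` with `SOCK_DGRAM` to get UDP over IPv4 between two sockets on the same host. The function name `udp_loopback_send_recv` is hypothetical and the use of the loopback address is an assumption for the sake of a self-contained example; the socket calls themselves are the standard BSD/POSIX ones. Swapping in `AF_INET6` (with a `sockaddr_in6`) would select IPv6, and `SOCK_STREAM` would select TCP.

```c
#include <arpa/inet.h>
#include <assert.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: send one UDP datagram between two sockets on the
 * IPv4 loopback interface and copy what arrived into out.
 * Returns the number of bytes received, or -1 on error. */
ssize_t udp_loopback_send_recv(const char *msg, char *out, size_t outlen)
{
    int rx = -1, tx = -1;
    ssize_t n = -1;
    struct sockaddr_in addr;
    socklen_t alen = sizeof addr;

    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = 0;                          /* any free port */

    /* AF_INET + SOCK_DGRAM selects UDP over IPv4. */
    if ((rx = socket(AF_INET, SOCK_DGRAM, 0)) < 0 ||
        bind(rx, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        getsockname(rx, (struct sockaddr *)&addr, &alen) < 0 ||
        (tx = socket(AF_INET, SOCK_DGRAM, 0)) < 0)
        goto done;

    if (sendto(tx, msg, strlen(msg), 0,
               (struct sockaddr *)&addr, sizeof addr) < 0)
        goto done;
    n = recvfrom(rx, out, outlen, 0, NULL, NULL);
done:
    if (tx >= 0) close(tx);
    if (rx >= 0) close(rx);
    return n;
}
```

The application code never touches UDP or IP headers; the kernel stack behind the socket builds them, which is the isolation the socket API is meant to provide.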

The socket programming model has also been adopted by other operating systems, including popular commercial real-time operating systems such as Wind River Systems’ VxWorks™. This permits applications that use the socket API to migrate easily between operating systems that offer this API.

2.1.5 Issues with Host-Based Networking Software

Host-based networking software is usually not high performance, for several reasons: there is very little scope for hardware acceleration in standard workstations; the host operating system may inherently limit performance (for example, the copies between user and kernel space in UNIX); or the software was built only for functionality and not for performance (often the case with code inherited from a baseline not designed with performance in mind). Despite these limitations, protocols like TCP/IP have been implemented effectively on several UNIX hosts.

Designers address the performance issue by moving performance-critical functions into the kernel while retaining the other functions in user space. Real-time performance can also be improved by modifying the scheduler of the host operating system, as some embedded Linux vendors have done.
