Services the Memory Manager Provides

The memory manager provides a set of system services to allocate and free virtual memory, share memory between processes, map files into memory, flush virtual pages to disk, retrieve information about a range of virtual pages, change the protection of virtual pages, and lock the virtual pages into memory.

Like other Windows 2000 executive services, the memory management services allow their caller to supply a process handle, indicating the particular process whose virtual memory is to be manipulated. The caller can thus manipulate either its own memory or (with the proper permissions) the memory of another process. For example, if a process creates a child process, by default it has the right to manipulate the child process's virtual memory. Thereafter, the parent process can allocate, deallocate, read, and write memory on behalf of the child process by calling virtual memory services and passing a handle to the child process as an argument. This feature is used by subsystems to manage the memory of their client processes, and it is also key for implementing debuggers because debuggers must be able to read and write to the memory of the process being debugged.

Most of these services are exposed through the Win32 API. The Win32 API has three groups of functions for managing memory in applications: page granularity virtual memory functions (Virtualxxx), memory-mapped file functions (CreateFileMapping, MapViewOfFile), and heap functions (Heapxxx and the older interfaces Localxxx and Globalxxx). (We'll describe the heap manager later in this section.)

The memory manager also provides a number of services, such as allocating and deallocating physical memory and locking pages in physical memory for direct memory access (DMA) transfers, to other kernel-mode components inside the executive as well as to device drivers. These functions begin with the prefix Mm. In addition, though not strictly part of the memory manager, executive support routines that begin with Ex are used to allocate and deallocate from the system heaps (paged and nonpaged pool) as well as to manipulate look-aside lists. We'll touch on these topics later in this chapter, in the section "System Memory Pools."

Although we'll be referring to Win32 functions and kernel-mode memory management and memory allocation routines provided for device drivers, we won't cover the interface and programming details but rather the internal operations of these functions. Refer to the Win32 API and Device Driver Kit (DDK) documentation on MSDN for a complete description of the available functions and their interfaces.

Reserving and Committing Pages

Pages in a process address space are free, reserved, or committed. Applications can first reserve address space and then commit pages in that address space. Or they can reserve and commit in the same function call. These services are exposed through the Win32 VirtualAlloc and VirtualAllocEx functions.

Reserving address space is simply a way for a thread to set aside a range of virtual addresses for future use. Attempting to access reserved memory results in an access violation because the page isn't mapped to any storage that can resolve the reference.

Committed pages are pages that, when accessed, ultimately translate to valid pages in physical memory. Committed pages are either private and not shareable or mapped to a view of a section (which might or might not be mapped by other processes). Sections are described in the next section as well as in "Section Objects."

If the pages are private to the process and have never been accessed before, they are created at the time of first access as zero-initialized pages (or demand zero). Private committed pages can later be automatically written to the paging file by the operating system if memory demands dictate. Committed pages that are private are inaccessible to any other process unless they're accessed using cross-process memory functions, such as ReadProcessMemory or WriteProcessMemory. If committed pages are mapped to a portion of a mapped file, they might need to be brought in from disk when accessed unless they've already been read earlier, either by the process accessing the page or by another process that had the same file mapped and had accessed the page.

Pages are written to disk through normal modified page writing as pages are moved from the process working set to the modified list and ultimately to disk. (Working sets and the modified list are explained later in this chapter.) Mapped file pages can also be written back to disk as a result of an explicit call to FlushViewOfFile.

You can decommit pages and/or release address space with the VirtualFree or VirtualFreeEx functions. The difference between decommittal and release is similar to the difference between reservation and committal—decommitted memory is still reserved, but released memory is neither committed nor reserved. (It's free.)
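The free, reserved, and committed states described above can be sketched as a small state machine. This is a toy model, not the memory manager's implementation: the `AddressSpace` class and its method names are hypothetical, it tracks one state per page, and it ignores details such as the Win32 rule that a release must cover an entire reserved region.

```python
from enum import Enum


class PageState(Enum):
    FREE = "free"
    RESERVED = "reserved"
    COMMITTED = "committed"


class AccessViolation(Exception):
    """Raised when a page that is not committed is touched."""


class AddressSpace:
    """Hypothetical model: one state per virtual page."""

    def __init__(self, page_count):
        self.pages = [PageState.FREE] * page_count

    def reserve(self, start, count):
        span = range(start, start + count)
        if any(self.pages[p] is not PageState.FREE for p in span):
            raise ValueError("range must be entirely free to be reserved")
        for p in span:
            self.pages[p] = PageState.RESERVED

    def commit(self, start, count):
        span = range(start, start + count)
        if any(self.pages[p] is PageState.FREE for p in span):
            raise ValueError("reserve the range before committing it")
        for p in span:
            self.pages[p] = PageState.COMMITTED

    def reserve_and_commit(self, start, count):
        # Models reserving and committing in the same call.
        self.reserve(start, count)
        self.commit(start, count)

    def decommit(self, start, count):
        # Decommitted pages stay reserved.
        for p in range(start, start + count):
            if self.pages[p] is PageState.COMMITTED:
                self.pages[p] = PageState.RESERVED

    def release(self, start, count):
        # Released pages are neither committed nor reserved.
        for p in range(start, start + count):
            self.pages[p] = PageState.FREE

    def touch(self, page):
        if self.pages[page] is not PageState.COMMITTED:
            raise AccessViolation(f"page {page} is {self.pages[page].value}")
        return "ok"
```

In this model, touching a reserved page fails in the same way as touching a free one, which mirrors the point that reserved address space has no storage behind it.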

Using the two-step process of reserving and committing memory can reduce memory usage by deferring committing pages until needed. Reserving memory is a relatively fast and inexpensive operation under Windows 2000 because it doesn't consume any committed pages (a precious system resource) or process page file quota (a limit on the number of committed pages a process can consume—not necessarily page file space). All that need to be updated or constructed are the relatively small internal data structures that represent the state of the process address space. (We'll explain these data structures, called virtual address descriptors, or VADs, later in the chapter.)

Reserving and then committing memory is useful for applications that need a potentially large contiguous memory buffer; rather than committing pages for the entire region, the address space can be reserved and then committed later when needed. The operating system itself uses this technique for the user-mode stack of each thread. When a thread is created, a stack is reserved. (1 MB is the default; you can override this size with the CreateThread function call or on an imagewide basis by using the /STACK linker flag.) By default, the initial page in the stack is committed and the next page is marked as a guard page. The guard page isn't committed; it traps references beyond the end of the committed portion of the stack and expands the stack.
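The stack behavior can be illustrated with a minimal sketch. The `ThreadStack` class is hypothetical, pages are numbered from the initial page (index 0) in the direction of stack growth, a 4-KB page size is assumed, and what happens when the reserved region is exhausted is deliberately left out.

```python
PAGE_SIZE = 4 * 1024            # assumed x86 page size
DEFAULT_RESERVE = 1024 * 1024   # 1-MB default stack reservation from the text


class AccessViolation(Exception):
    pass


class ThreadStack:
    """Hypothetical model of a reserved stack that grows on demand."""

    def __init__(self, reserve=DEFAULT_RESERVE):
        self.total_pages = reserve // PAGE_SIZE
        self.committed = 1  # only the initial page starts out committed

    @property
    def guard_page(self):
        # The guard page is the first page past the committed portion.
        if self.committed < self.total_pages:
            return self.committed
        return None

    def touch(self, page_index):
        if page_index < self.committed:
            return "ok"
        if page_index == self.guard_page:
            # The guard page traps the reference and the stack expands:
            # this page joins the committed portion, the next one is the guard.
            self.committed += 1
            return "expanded"
        raise AccessViolation("reference beyond the guard page")
```

Only pages that are actually touched become committed, so a thread that uses a few kilobytes of stack never pays for the full 1-MB reservation.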

Locking Memory

Pages can be locked in memory in two ways:

- Device drivers can call the kernel-mode functions MmProbeAndLockPages, MmLockPagableCodeSection, MmLockPagableDataSection, or MmLockPagableSectionByHandle. Pages locked using this mechanism remain in memory until explicitly unlocked. Although no quota is imposed on the number of pages a driver can lock in memory, a driver can't lock more pages than the resident available page count will allow. Also, each page does use a system page table entry (PTE), which is a limited resource. (PTEs are described later in the chapter.)

- Win32 applications can call the VirtualLock function to lock pages in their process working set. Note that such pages are not immune from paging—if all the threads in the process are in a wait state, the memory manager is free to remove such pages from the working set (which, for modified pages, ultimately would result in writing the pages to disk) if memory demands dictate. In such cases, locking pages in your working set can actually degrade performance because when a thread wakes up to run, the memory manager must first read in all the locked pages before the thread can begin execution. Therefore, in general, it's better to let the memory manager decide which pages remain in physical memory. The number of pages a process can lock can't exceed its minimum working set size minus eight pages.
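The VirtualLock limit stated above is simple arithmetic, sketched here. The function names are hypothetical, and the 50-page minimum working set in the usage note is only an illustrative figure.

```python
RESERVED_PAGES = 8  # pages subtracted from the minimum working set, per the text


def max_lockable_pages(min_working_set_pages):
    """Upper bound on pages a process can lock in its working set."""
    return max(min_working_set_pages - RESERVED_PAGES, 0)


def can_lock(pages_to_lock, already_locked, min_working_set_pages):
    """True if locking more pages stays within the bound."""
    limit = max_lockable_pages(min_working_set_pages)
    return already_locked + pages_to_lock <= limit
```

For a process with a minimum working set of 50 pages, `max_lockable_pages(50)` gives 42, so a request to lock 40 pages fits only if no more than 2 pages are already locked.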

Allocation Granularity

Windows 2000 aligns each region of reserved process address space to begin on an integral boundary defined by the value of the system allocation granularity, which can be retrieved from the Win32 GetSystemInfo function. Currently, this value is 64 KB. This size was chosen so that if support were added for future processors with large page sizes (for example, up to 64 KB), the risk of requiring changes to applications that made assumptions about allocation alignment would be reduced. (Windows 2000 kernel-mode code isn't subject to the same restrictions; it can reserve memory on a single-page granularity.)

Finally, when a region of address space is reserved, Windows 2000 ensures that the size of the region is a multiple of the system page size, whatever that might be. For example, because x86 systems use 4-KB pages, if you tried to reserve a region of memory 18 KB in size, the actual amount reserved on an x86 system would be 20 KB.
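The two rounding rules can be worked through in a few lines. This sketch hard-codes the x86 page size and the 64-KB granularity from the text; real code would query both values with GetSystemInfo. The helper names are hypothetical, and rounding a requested base address down follows the documented behavior of VirtualAlloc when reserving.

```python
PAGE_SIZE = 4 * 1024                 # x86 page size
ALLOCATION_GRANULARITY = 64 * 1024   # current allocation granularity


def align_region_base(address):
    """Round a requested base address down to the allocation granularity."""
    return address - (address % ALLOCATION_GRANULARITY)


def round_region_size(size):
    """Round a requested size up to a whole number of pages."""
    pages = -(-size // PAGE_SIZE)  # ceiling division
    return pages * PAGE_SIZE
```

With these helpers, `round_region_size(18 * 1024)` yields 20,480 bytes (20 KB), matching the example above, and `align_region_base(0x12345)` yields `0x10000`.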

Shared Memory and Mapped Files

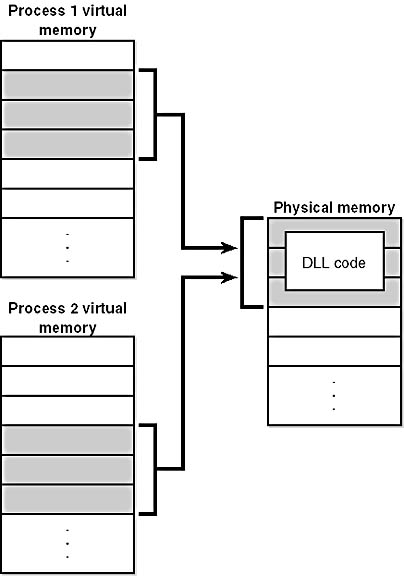

As is true with most modern operating systems, Windows 2000 provides a mechanism to share memory among processes and the operating system. Shared memory can be defined as memory that is visible to more than one process or that is present in more than one process virtual address space. For example, if two processes use the same DLL, it would make sense to load the referenced code pages for that DLL into physical memory only once and share those pages between all processes that map the DLL, as illustrated in Figure 7-1.

Figure 7-1 Sharing memory between processes

Each process would still maintain its private memory areas in which to store private data, but the program instructions and unmodified data pages could be shared without harm. As we'll explain later, this kind of sharing happens automatically because the code pages in executable images are mapped as execute-only and writable pages are mapped copy-on-write. (See the section "Copy-on-Write" for more information.)

The underlying primitives in the memory manager used to implement shared memory are called section objects, which are called file mapping objects in the Win32 API. The internal structure and implementation of section objects are described later in this chapter.

This fundamental primitive in the memory manager is used to map virtual addresses, whether in main memory, in the page file, or in some other file that an application wants to access as if it were in memory. A section can be opened by one process or by many; in other words, section objects don't necessarily equate to shared memory.

A section object can be connected to an open file on disk (called a mapped file) or to committed memory (to provide shared memory). Sections mapped to committed memory are called page file backed sections because the pages can be written to the paging file if memory demands dictate. (Because Windows 2000 can run with no paging file, page file backed sections might in fact be "backed" only by physical memory.) As with private committed pages, shared committed pages are always zero-filled when they are first accessed.

To create a section object, call the Win32 CreateFileMapping function, specifying the file handle to map it to (or INVALID_HANDLE_VALUE for a page file backed section), and optionally a name and security descriptor. If the section has a name, other processes can open it with OpenFileMapping. Or you can grant access to section objects through handle inheritance (by specifying that the handle be inheritable when opening or creating the handle) or handle duplication (by using DuplicateHandle). Device drivers can also manipulate section objects with the ZwOpenSection, ZwMapViewOfSection, and ZwUnmapViewOfSection functions.

A section object can refer to files that are much larger than can fit in the address space of a process. (If the paging file backs a section object, sufficient space must exist in the paging file to contain it.) To access a very large section object, a process can map only the portion of the section object that it requires (called a view of the section) by calling the MapViewOfFile function and then specifying the range to map. Mapping views permits processes to conserve address space because only the views of the section object needed at the time must be mapped into memory.
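Mapping only a view of a large file can be tried with Python's standard `mmap` module. This is an analogy rather than a direct CreateFileMapping and MapViewOfFile example: the module sits on top of the platform's own mapping primitives. The `read_view` helper is hypothetical. The view offset must be a multiple of `mmap.ALLOCATIONGRANULARITY`, so the sketch aligns it down and skips the extra leading bytes.

```python
import mmap


def read_view(path, offset, length):
    """Map just enough of the file to read `length` bytes at `offset`."""
    granularity = mmap.ALLOCATIONGRANULARITY
    aligned = offset - (offset % granularity)  # view must start on a boundary
    delta = offset - aligned                   # bytes to skip inside the view
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), delta + length,
                       access=mmap.ACCESS_READ, offset=aligned) as view:
            return view[delta:delta + length]
```

Because only `delta + length` bytes are mapped, the address space consumed is independent of the total file size, which is the point of mapping views rather than whole sections.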

Win32 applications can use mapped files to conveniently perform I/O to files by simply making them appear in their address space. User applications aren't the only consumers of section objects: the image loader uses section objects to map executable images, DLLs, and device drivers into memory, and the cache manager uses them to access data in cached files. (For information on how the cache manager integrates with the memory manager, see Chapter 11.)

How shared memory sections are implemented both in terms of address translation and the internal data structures is explained later in this chapter.

Protecting Memory

As explained in Chapter 1, Windows 2000 provides memory protection so that no user process can inadvertently or deliberately corrupt the address space of another process or the operating system itself. Windows 2000 provides this protection in four primary ways.

First, all systemwide data structures and memory pools used by kernel-mode system components can be accessed only while in kernel mode—user-mode threads can't access these pages. If they attempt to do so, the hardware generates a fault, which in turn the memory manager reports to the thread as an access violation.

NOTE

In contrast, Microsoft Windows 95, Microsoft Windows 98, and Microsoft Windows Millennium Edition have some pages in system address space that are writable from user mode, thus allowing an errant application to corrupt key system data structures and crash the system.

Second, each process has a separate, private address space, protected from being accessed by any thread belonging to another process. The only exceptions are if the process is sharing pages with other processes or if another process has virtual memory read or write access to the process object and thus can use the ReadProcessMemory or WriteProcessMemory functions. Each time a thread references an address, the virtual memory hardware, in concert with the memory manager, intervenes and translates the virtual address into a physical one. By controlling how virtual addresses are translated, Windows 2000 can ensure that threads running in one process don't inappropriately access a page belonging to another process.

Third, in addition to the implicit protection virtual-to-physical address translation offers, all processors supported by Windows 2000 provide some form of hardware-controlled memory protection (such as read/write, read-only, and so on); the exact details of such protection vary according to the processor. For example, code pages in the address space of a process are marked read-only and are thus protected from modification by user threads. Code pages for loaded device drivers are similarly marked read-only.

NOTE

By default, system-code write protection doesn't apply to Ntoskrnl.exe or Hal.dll on systems with 128 MB or more of physical memory. On such systems, Windows 2000 maps the first 512 MB of system address space with large (4-MB) pages to increase the efficiency of the translation look-aside buffer (explained later in this chapter). Because image sections are mapped at a granularity of 4 KB, the use of 4-MB pages means that a code section of an image might reside on the same page as a data section. Thus, marking such a page read-only would prevent the data on that page from being modifiable. You can override this threshold by adding the DWORD registry value HKLM\SYSTEM\CurrentControlSet\Control\Session Manager\Memory Management\LargePageMinimum, set to the number of megabytes of physical memory required on the system to map Ntoskrnl and the HAL with large pages.

Table 7-3 lists the memory protection options defined in the Win32 API. (See the VirtualProtect, VirtualProtectEx, VirtualQuery, and VirtualQueryEx functions.)

Table 7-3 Memory Protection Options Defined in the Win32 API

| Attribute | Description |
|---|---|
| PAGE_NOACCESS | Any attempt to read from, write to, or execute code in this region causes an access violation. |
| PAGE_READONLY | Any attempt to write to or execute code in memory causes an access violation, but reads are permitted. |
| PAGE_READWRITE | The page is readable and writable; no action will cause an access violation. |
| PAGE_EXECUTE* | Any attempt to read from or write to code in memory in this region causes an access violation, but execution is permitted. |
| PAGE_EXECUTE_READ* | Any attempt to write to code in memory in this region causes an access violation, but executes and reads are permitted. |
| PAGE_EXECUTE_READWRITE* | The page is readable, writable, and executable; no action will cause an access violation. |
| PAGE_WRITECOPY | Any attempt to write to memory in this region causes the system to give the process a private copy of the page. Attempts to execute code in memory in this region cause an access violation. |
| PAGE_EXECUTE_WRITECOPY | Any attempt to write to memory in this region causes the system to give the process a private copy of the page. |
| PAGE_GUARD | Any attempt to read from or write to a guard page raises an EXCEPTION_GUARD_PAGE exception and turns off the guard page status. Guard pages thus act as a one-shot alarm. Note that this flag can be specified with any of the page protections listed in this table except PAGE_NOACCESS. |

* The x86 architecture doesn't implement execute-only access (that is, code can be executed in any readable page), so Windows 2000 doesn't support this option in any practical sense (though IA-64 does). Windows 2000 treats PAGE_EXECUTE_READ as PAGE_READONLY and PAGE_EXECUTE_READWRITE as PAGE_READWRITE.
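The read and write outcomes in Table 7-3, including the one-shot behavior of PAGE_GUARD, can be sketched as follows. The `Page` class and exception names are hypothetical. The model covers only reads and writes (execute access is left out because it depends on the processor), and it doesn't track the private copy that a copy-on-write fault creates.

```python
class AccessViolation(Exception):
    pass


class GuardPageViolation(Exception):
    """Stands in for the EXCEPTION_GUARD_PAGE exception."""


READABLE = {
    "PAGE_READONLY", "PAGE_READWRITE", "PAGE_EXECUTE_READ",
    "PAGE_EXECUTE_READWRITE", "PAGE_WRITECOPY", "PAGE_EXECUTE_WRITECOPY",
}
WRITABLE = {"PAGE_READWRITE", "PAGE_EXECUTE_READWRITE"}
COPY_ON_WRITE = {"PAGE_WRITECOPY", "PAGE_EXECUTE_WRITECOPY"}
ALL_PROTECTIONS = READABLE | {"PAGE_NOACCESS", "PAGE_EXECUTE"}


class Page:
    """Hypothetical model of one page's protection attribute."""

    def __init__(self, protection, guard=False):
        if protection not in ALL_PROTECTIONS:
            raise ValueError(f"unknown protection {protection}")
        if guard and protection == "PAGE_NOACCESS":
            raise ValueError("PAGE_GUARD can't be combined with PAGE_NOACCESS")
        self.protection = protection
        self.guard = guard

    def access(self, operation):
        if operation not in ("read", "write"):
            raise ValueError("this sketch models only reads and writes")
        if self.guard:
            # One-shot alarm: the first access clears the guard status.
            self.guard = False
            raise GuardPageViolation()
        if operation == "read":
            if self.protection in READABLE:
                return "ok"
            raise AccessViolation("read not permitted")
        if self.protection in WRITABLE:
            return "ok"
        if self.protection in COPY_ON_WRITE:
            return "private copy"
        raise AccessViolation("write not permitted")
```

A page created as `Page("PAGE_READWRITE", guard=True)` raises `GuardPageViolation` on its first access and behaves as an ordinary read/write page afterward.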

And finally, shared memory section objects have standard Windows 2000 access-control lists (ACLs) that are checked when processes attempt to open them, thus limiting access of shared memory to those processes with the proper rights. Security also comes into play when a thread creates a section to contain a mapped file. To create the section, the thread must have at least read access to the underlying file object or the operation will fail.

Once a thread has successfully opened a handle to a section, its actions are still subject to the memory manager and the hardware-based page protections described earlier. A thread can change the page-level protection on virtual pages in a section if the change doesn't violate the permissions in the ACL for that section object. For example, the memory manager allows a thread to change the pages of a read-only section to have copy-on-write access but not to have read/write access. The copy-on-write access is permitted because it has no effect on other processes sharing the data.

These four primary memory protection mechanisms are part of the reason that Windows 2000 is a robust, reliable operating system that is resilient to application errors.

Copy-on-Write

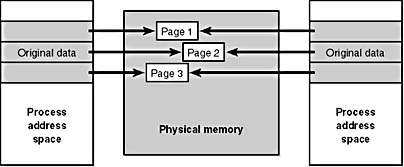

Copy-on-write page protection is an optimization the memory manager uses to conserve physical memory. When a process maps a copy-on-write view of a section object that contains read/write pages, instead of making a process private copy at the time the view is mapped (as the Compaq OpenVMS operating system does), the memory manager defers making a copy of the pages until the page is written to. All modern UNIX systems use this technique as well. For example, as shown in Figure 7-2, two processes are sharing three pages, each marked copy-on-write, but neither of the two processes has attempted to modify any data on the pages.

Figure 7-2 The "before" of copy-on-write

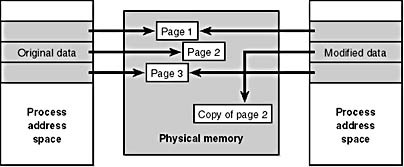

If a thread in either process writes to a page, a memory management fault is generated. The memory manager sees that the write is to a copy-on-write page, so instead of reporting the fault as an access violation, it allocates a new read/write page in physical memory, copies the contents of the original page to the new page, updates the corresponding page-mapping information (explained later in this chapter) in this process to point to the new location, and dismisses the exception, thus causing the instruction that generated the fault to be reexecuted. This time, the write operation succeeds, but as shown in Figure 7-3, the newly copied page is now private to the process that did the writing and isn't visible to the other processes still sharing the copy-on-write page. Each new process that writes to that same shared page will also get its own private copy.

Figure 7-3 The "after" of copy-on-write
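The fault-handling sequence just described (allocate a new page, copy the contents, repoint the mapping, retry the write) can be sketched as a simulation. The `PhysicalMemory` and `Process` classes are hypothetical, and the page-mapping information is reduced to a dictionary; the real structures are explained later in the chapter.

```python
class PhysicalMemory:
    """Hypothetical pool of page frames, keyed by frame number."""

    def __init__(self):
        self.frames = {}
        self.next_frame = 0

    def allocate(self, contents=b""):
        frame = self.next_frame
        self.next_frame += 1
        self.frames[frame] = bytearray(contents)
        return frame


class Process:
    def __init__(self, memory):
        self.memory = memory
        self.page_table = {}   # virtual page -> {"frame": n, "cow": bool}
        self.copy_faults = 0

    def map_copy_on_write(self, vpage, frame):
        self.page_table[vpage] = {"frame": frame, "cow": True}

    def frame_of(self, vpage):
        return self.page_table[vpage]["frame"]

    def read(self, vpage):
        return bytes(self.memory.frames[self.frame_of(vpage)])

    def write(self, vpage, offset, data):
        entry = self.page_table[vpage]
        if entry["cow"]:
            # Simulated fault: copy the shared page into a new private frame,
            # repoint this process's mapping, then let the write proceed.
            self.copy_faults += 1
            original = self.memory.frames[entry["frame"]]
            entry["frame"] = self.memory.allocate(original)
            entry["cow"] = False
        page = self.memory.frames[entry["frame"]]
        page[offset:offset + len(data)] = data
```

If two processes map the same three frames and one writes to the second page, only that page gets a private copy; the other two frames remain shared, as in Figure 7-3.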

One application of copy-on-write is to implement breakpoint support in debuggers. For example, by default, code pages start out as execute-only. If a programmer sets a breakpoint while debugging a program, however, the debugger must add a breakpoint instruction to the code. It does this by first changing the protection on the page to PAGE_EXECUTE_READWRITE and then changing the instruction stream. Because the code page is part of a mapped section, the memory manager creates a private copy for the process with the breakpoint set, while other processes continue using the unmodified code page.

Copy-on-write is one example of an evaluation technique known as lazy evaluation that the memory manager uses as often as possible. Lazy-evaluation algorithms avoid performing an expensive operation until absolutely required—if the operation is never required, no time is wasted on it.

The POSIX subsystem takes advantage of copy-on-write to implement the fork function. Typically, when a UNIX application calls the fork function to create another process, the first thing that the new process does is call the exec function to reinitialize the address space with an executable program. Instead of copying the entire address space on fork, the new process shares the pages in the parent process by marking them copy-on-write. If the child writes to the data, a process private copy is made. If not, the two processes continue sharing and no copying takes place. One way or the other, the memory manager copies only the pages the process tries to write to rather than the entire address space.

To examine the rate of copy-on-write faults, see the performance counter Memory: Write Copies/Sec.

Heap Functions

A heap is a region of one or more pages of reserved address space that can be subdivided and allocated in smaller chunks by the heap manager. The heap manager is a set of functions that can be used to allocate and deallocate variable amounts of memory (not necessarily on a page-size granularity as is done in the VirtualAlloc function). The heap manager functions exist in two places: Ntdll.dll and Ntoskrnl.exe. The subsystem APIs (such as the Win32 heap APIs) call the functions in Ntdll, and various executive components and device drivers call the functions in Ntoskrnl.

Every process starts out with a default process heap, usually 1 MB in size (unless specified otherwise in the image file by using the /HEAP linker flag). This size is just the initial reserve, however—it will expand automatically as needed. (You can also specify the initial committed size in the image file.) Win32 applications as well as several Win32 functions that might need to allocate temporary memory blocks use this process default heap. Processes can also create additional private heaps with the HeapCreate function. When a process no longer needs a private heap, it can recover the virtual address space by calling HeapDestroy. Only a private heap created with HeapCreate—not the default heap—can be destroyed during the life of a process.

To allocate memory from the default heap, a thread must obtain a handle to it by calling GetProcessHeap. (This function returns the address of the data structure that describes the heap, but callers should never rely on that.) A thread can then use the heap handle in calls to HeapAlloc and HeapFree to allocate and free memory blocks from that heap. The heap manager also provides an option for each heap to serialize allocations and deallocations so that multiple threads can call heap functions simultaneously without corrupting heap data structures. The default process heap is set to have this serialization by default (though you can override this on a call-by-call basis). For additional private heaps, a flag passed to HeapCreate is used to specify whether serialization should be performed.
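To make the ideas of suballocation and optional serialization concrete, here is a toy heap. It is not how the Windows heap manager works internally: the `ToyHeap` class, its first-fit policy, and its fixed size are all assumptions made for illustration, and unlike a real heap it never grows.

```python
import threading
from contextlib import nullcontext


class ToyHeap:
    """Hypothetical first-fit allocator over a fixed-size region."""

    def __init__(self, size, serialize=True):
        self.free_list = [(0, size)]   # (offset, length), sorted by offset
        self.used = {}                 # offset -> length
        # With serialization off, callers must provide their own locking.
        self.lock = threading.Lock() if serialize else nullcontext()

    def alloc(self, nbytes):
        with self.lock:
            for i, (offset, length) in enumerate(self.free_list):
                if length >= nbytes:
                    if length == nbytes:
                        del self.free_list[i]
                    else:
                        self.free_list[i] = (offset + nbytes, length - nbytes)
                    self.used[offset] = nbytes
                    return offset
            return None  # no block large enough

    def release(self, offset):
        with self.lock:
            length = self.used.pop(offset)
            self.free_list.append((offset, length))
            self.free_list.sort()
            merged = []
            for off, size in self.free_list:
                # Coalesce blocks that touch the previous free block.
                if merged and merged[-1][0] + merged[-1][1] == off:
                    merged[-1] = (merged[-1][0], merged[-1][1] + size)
                else:
                    merged.append((off, size))
            self.free_list = merged
```

The `serialize` flag plays the role of the per-heap serialization option: every operation that touches the heap's bookkeeping runs under the lock, so concurrent callers can't corrupt the free list.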

The heap manager supports a number of internal validation checks that, although not currently documented, you can enable on a systemwide or a per-image basis by using the Global Flags (Gflags.exe) utility in the Windows 2000 Support Tools, Platform SDK, and DDK. Many of the flags are self-explanatory in terms of what they cause the heap manager to do. In general, enabling these flags causes invalid use or corruption of the heap to generate error notifications to an application, either through exceptions or through returned error codes. (See Chapter 3 for information about exceptions.)

For more information on the heap functions, see the Win32 API reference documentation on MSDN.

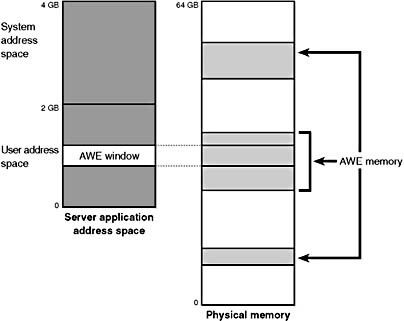

Address Windowing Extensions

Although Windows 2000 systems can support up to 64 GB of physical memory (as shown in Table 2-2), each 32-bit user process has only a 2-GB or 3-GB virtual address space (depending on whether the /3GB boot switch is enabled). To allow a 32-bit process to allocate and access more physical memory than can be represented in its limited address space, Windows 2000 provides a set of functions called Address Windowing Extensions (AWE). For example, on a Windows 2000 Advanced Server system with 8 GB of physical memory, a database server application could use AWE to allocate and use nearly 8 GB of memory as a database cache.

Allocating and using memory via the AWE functions is done in three steps:

- Allocating the physical memory to be used

- Creating a region of virtual address space to act as a window to map views of the physical memory

- Mapping views of the physical memory into the window

To allocate physical memory, an application calls the Win32 function AllocateUserPhysicalPages. (This function requires the Lock Pages in Memory user right.) The application then uses the Win32 VirtualAlloc function with the MEM_PHYSICAL flag to create a window in the private portion of the process's address space that is mapped to some or all of the physical memory previously allocated. The AWE-allocated memory can then be used with nearly all the Win32 APIs. (For example, the Microsoft DirectX functions can't use AWE memory.)

If an application creates a 256-MB window in its address space and allocates 4 GB of physical memory (on a system with more than 4 GB of physical memory), the application can use the MapUserPhysicalPages or MapUserPhysicalPagesScatter Win32 functions to access any portion of the physical memory by mapping the memory into the 256-MB window. The size of the application's virtual address space window determines the amount of physical memory that the application can access with a given mapping. Figure 7-4 shows an AWE window in a server application address space mapped to a portion of physical memory previously allocated by AllocateUserPhysicalPages.

Figure 7-4 Using AWE to map physical memory

The AWE functions exist on all editions of Windows 2000 and are usable regardless of how much physical memory a system has. However, AWE is most useful on systems with more than 2 GB of physical memory, because it's the only way for a 32-bit process to directly use more than 2 GB of memory.

Finally, there are some restrictions on memory allocated and mapped by the AWE functions:

- Pages can't be shared between processes.

- The same physical page can't be mapped to more than one virtual address in the same process.

- Page protection is limited to read/write.
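The three AWE steps and the single-mapping restriction can be modeled in a few lines. This is a simulation only: the `AweProcess` class and its method names are hypothetical stand-ins for AllocateUserPhysicalPages, VirtualAlloc with MEM_PHYSICAL, and MapUserPhysicalPages, and physical pages are represented by plain integers.

```python
class AweProcess:
    """Hypothetical model of one process using an AWE window."""

    def __init__(self, window_pages):
        # Step 2: a window of virtual pages; None means nothing is mapped.
        self.window = [None] * window_pages
        self.owned = []  # physical pages allocated to this process

    def allocate_physical_pages(self, count):
        # Step 1: allocate physical pages; they aren't mapped anywhere yet.
        start = len(self.owned)
        pages = list(range(start, start + count))
        self.owned.extend(pages)
        return pages

    def map_pages(self, first_slot, pages):
        # Step 3: map physical pages into consecutive window slots,
        # replacing whatever those slots held before.
        last = first_slot + len(pages)
        if first_slot < 0 or last > len(self.window):
            raise ValueError("mapping doesn't fit in the window")
        if len(set(pages)) != len(pages):
            raise ValueError("a physical page can be mapped only once")
        if any(p not in self.owned for p in pages):
            raise ValueError("page wasn't allocated by this process")
        elsewhere = set(self.window[:first_slot]) | set(self.window[last:])
        if any(p in elsewhere for p in pages):
            raise ValueError("page is already mapped at another address")
        self.window[first_slot:last] = pages
```

Scaled up to the figures in the text, a 256-MB window over 4 GB of allocated physical memory can expose one sixteenth of that memory at a time, so the application remaps the window as it moves through its data.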

For a description of the page table data structures used to map memory on systems with more than 4 GB of physical memory, see the section "Physical Address Extension."
