Serving a News Site

Let's go back to our wildly popular news site www.example.com. In Chapter 6 we tackled our static content distribution problem; static content constitutes the bulk of our bandwidth and requests. However, the dynamic content constitutes the bulk of the work. So far we have talked about our news site in the abstract sense. Let's talk about it now in more detail so that you know a bit more about what it is and what it does. Figure 7.7 displays a sample article. This page shows two elements of dynamic content:

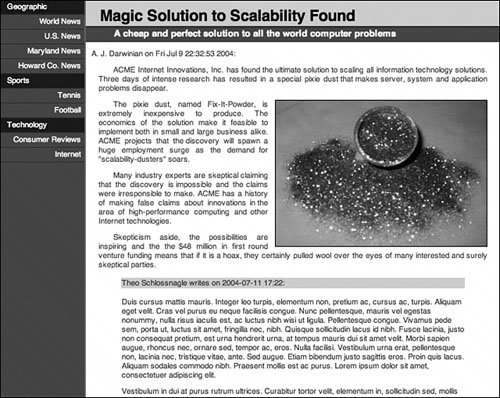

Figure 7.7. Screenshot from our news website

All information for the site must be stored in some permanent store. This store is the fundamental basis for all caches because it contains all the original data. First let's look at this architecture with no caching whatsoever.

There is more complete code for the examples used throughout this chapter on the Sams website. We will just touch on the elements of code pertinent to the architectural changes required to place our caches.

Note

Remember that this book isn't about how to write code; nor is it about how to build a news site. This book discusses architectural concepts, and the coding examples in this book are simplified. The pages are the bare minimum required to illustrate the concepts and benefits of the overall approach being discussed. The concepts herein can be applied to web architectures regardless of the programming language used. It is important to consider them as methodologies and not pure implementations. This will help you when you attempt to apply them to an entirely different problem using a different programming language.

For no reason other than the author's intimate familiarity with Perl, our example news site is written in Perl using Apache::ASP, and an object-oriented approach was used when developing the application code. Apache::ASP is identical to ASP and PHP with respect to the fashion in which it is embedded in web pages.

Three important classes (or packages in Perl) drive our news pages that display articles:

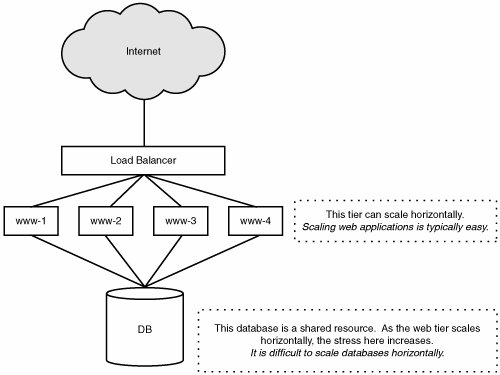

Simple Implementation

With these objects, we can make the web page shown in Figure 7.7 come to life by creating the following Apache::ASP page called /hefty/art1.html:

     1: <%
     2: use SIA::Cookie;
     3: use SIA::Article;
     4: use SIA::User;
     5: use SIA::Utils;
     6: my $articleid = $Request->QueryString('articleid');
     7: my $article = new SIA::Article($articleid);
     8: my $cookie = new SIA::Cookie($Request, $Response);
     9: my $user = new SIA::User($cookie->userid);
    10: %>
    11: <!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN"
    12:   "http://www.w3.org/TR/html4/strict.dtd">
    13: <html>
    14: <head>
    15: <title>example.com: <%= $article->title %></title>
    16: <meta http-equiv="Content-Type" content="text/html">
    17: <link rel="stylesheet" href="/css/main.css" media="screen" />
    18: </head>
    19: <body>
    20: <div>
    21: <ul>
    22: <% foreach my $toplevel (@{$user->leftNav}) { %>
    23: <li><%= $toplevel->{group} %></li>
    24: <% foreach my $item (@{$toplevel->{type}}) { %>
    25: <li><a href="/sia/news/page/<%= $item->{news_type} %>">
    26: <%= $item->{type_name} %></a></li>
    27: <% } %>
    28: <% } %>
    29: </ul>
    30: </div>
    31: <div>
    32: <h1><%= $article->title %></h1>
    33: <h2><%= $article->subtitle %></h2>
    34: <div><p><%= $article->author_name %>
    35: on <%= $article->pubdate %>:</p>
    36: <div>
    37: <%= $article->content %>
    38: </div>
    39: <div>
    40: <%= SIA::Utils::render_comments($article->commentary) %>
    41: </div>
    42: </div>
    43: </body>
    44: </html>

Before we analyze what this page is doing, let's fix this site so that article 12345 isn't accessed via /hefty/art1.html?articleid=12345. This looks silly and prevents search indexing systems from correctly crawling our site. The following mod_rewrite directives will solve that:

    RewriteEngine On
    RewriteRule ^/news/article/(.*)\.html$ \
        /data/www.example.com/hefty/art1.html?articleid=$1 [L]

Now article 12345 is accessed via http://www.example.com/news/article/12345.html, which is much more person- and crawler-friendly.
Now that we have that pet peeve ironed out, we can look at the ramifications of placing a page like this in a large production environment.

The left navigation portion of this page is a simple two-level iteration performed by the two nested foreach loops. The method $user->leftNav performs a single database query to retrieve a user's preferred left navigation configuration.

The main content of the page is completed by calling the accessor functions of the $article object. When the $article object is instantiated (the call to new SIA::Article), the article is fetched from the database. From that point forward, all methods called on the $article object access information that has already been pulled from the database, with the noted exception of the $article->commentary call that feeds render_comments. $article->commentary must perform fairly substantial database queries to retrieve comments posted in response to the article, comments posted in response to those comments, and so on.

To recap, three major database interactions occur to render this page for the user. So what? Well, we now have this code running on a web server...or two...or twenty. One common resource is being used, and because it is required every time an article page is loaded, we have a contention issue. Contention is the devil.

Don't take my word for it. Let's stress this system a bit and get some metrics.

Looking at Performance

We don't need to go into the gross details of acquiring perfect (or even good) performance metrics. We are not looking for a performance gain directly; we are looking to alleviate pressure on shared resources.

Let's run a simple ab (Apache Benchmark) with a concurrency of 100 against our page and see what happens. The line we will take note of is the average turnaround time on requests:

    Time per request: 743 (mean, across all concurrent requests)

This tells us that it took an average of 743ms to service each request when serving 100 concurrently. That's not so bad, right?
Extrapolating this (100 concurrent requests, each taking 0.743 seconds), we find that we should be able to service about 135 requests/second. The problem is that these performance metrics are multifaceted, and without breaking them down a bit, we miss what is really important.

The resources spent to satisfy the request are not from the web server alone. Because this page accesses a database, some of the time spent on the web server is just time waiting for the database to perform some work. This is where performance and scalability diverge.

The resources used on the web server are easy to come by and easy to scale. If whatever performance we are able to squeeze out of the system (after performance tuning) is insufficient, we can simply add more servers because the work that they perform is parallelizable and thus intrinsically horizontally scalable. Figure 7.8 illustrates the scalability problem posed by a shared resource.

Figure 7.8. A web tier using a shared, nonscalable database resource

On the other hand, the database is a shared resource, and, because requests stress that resource, we can't simply add another core database server to the mix. Chapter 8 discusses why it is difficult to horizontally scale database resources.

The performance metrics we are interested in are those that pertain to components of the architecture that do not scale. If we mark up our database abstraction layer a bit to add time accounting for all query preparation, execution, and fetching, and then run the same test, we find that, on average, we spend 87ms performing database operations. This isn't necessarily 87ms of stress on the database, but then again, that depends on perspective. One can argue that the database isn't actually working for those 87ms and that much of the time it is waiting for packets to fly across the network. However, there is a strong case to be made that during those 87ms, the client still holds a connection to the database and thus consumes resources.
Both statements are true, especially for databases such as Oracle, where each client connection is served by a dedicated process on the database server (called a shadow process) that requires a substantial amount of memory. MySQL, on the other hand, has much less overhead, but it is still quantifiable.

Where are we going with this? It certainly isn't to try to tune the database to spend fewer resources serving these requests. Although that is an absolute necessity in any large environment, it is a performance issue that sits squarely on the shoulders of a DBA and should be taken for granted when analyzing architectural issues. Rather, our goal is to eliminate most (if not all) of the shared resource usage.

Each request we passed through the server during our contrived test required 743ms of attention. Of that time, 87ms was not horizontally scalable, and the other 656ms was. That amount (87ms) may not seem like much, and, in fact, it is both small and completely contrived. Our tests were in a controlled environment with no external database stress or web server stress. We were simply attempting to show that some portion of the interaction was dependent on shared, nonscalable resources. We succeeded; now how do we fix it?

Introducing Integrated Caching

There are a variety of implementations of integrated caching. File caches, memory caches, and distributed caches with and without replication are all legitimate approaches to caching. Which one is the best? Because they all serve the same purpose (to alleviate pressure on shared resources), it simply comes down to which one is the best fit in your architecture.

Because our example site uses Perl, we will piggyback our implementation on the fairly standard Cache module, which has extensions for file caching, memory caching, and a few other external caching implementations including memcached.

With integrated caching, you need to make a choice up front on the semantics you plan to use for cached data.
A popular paradigm used when caching result sets from complicated queries is applying a time-to-live to the data as it is placed in the cache. There are several problems with this approach:

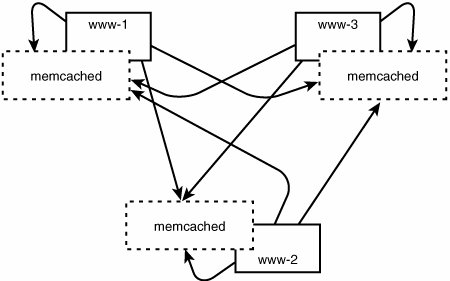

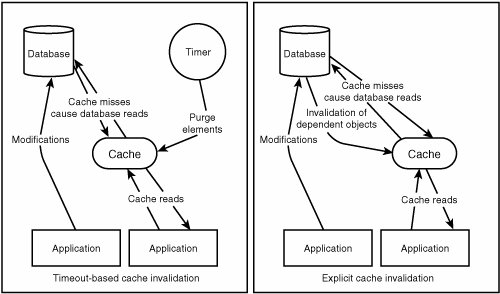

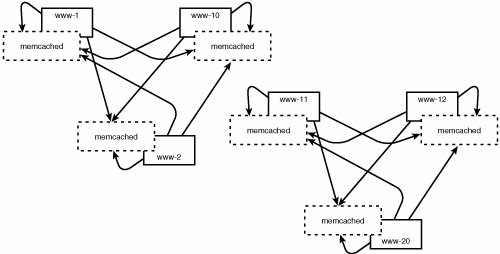
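
To make the time-to-live semantics concrete, here is a minimal in-memory sketch. It is an illustration only, not the Cache module's implementation: the names `ttl_set` and `ttl_get`, the `%store` hash, and the injectable clock argument are all assumptions made for this example.

```perl
use strict;
use warnings;

# Hypothetical in-memory store: key => [ value, expiry time in epoch seconds ].
my %store;

# Place a value in the cache with a time-to-live (in seconds).
# $now is injectable so the behaviour can be shown without sleeping.
sub ttl_set {
    my ($key, $value, $ttl, $now) = @_;
    $now = time unless defined $now;
    $store{$key} = [ $value, $now + $ttl ];
    return $value;
}

# Return the cached value, or undef if it is absent or has timed out.
sub ttl_get {
    my ($key, $now) = @_;
    $now = time unless defined $now;
    my $entry = $store{$key};
    return undef unless $entry;
    if ($now >= $entry->[1]) {
        # Expired: thrown away even if the underlying data never changed.
        delete $store{$key};
        return undef;
    }
    return $entry->[0];
}

ttl_set('article_12345', 'cached article body', 60, 1000);
my $fresh   = ttl_get('article_12345', 1030);   # inside the 60-second window
my $expired = ttl_get('article_12345', 1060);   # window elapsed: caller must requery
```

Note that the second lookup misses purely because time has passed, which is the cost of this approach: the entry is discarded whether or not the article actually changed.
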

Let's look at the alternative and see what complications arise. If we cache information without a timeout, we must have a cache invalidation infrastructure. That infrastructure must be capable of triggering removals from the cache when an underlying dataset that contributed to the cached result changes and causes our cached view to be invalid. Building cache invalidation systems can be a bit tricky. Figure 7.9 shows both caching semantics.

Figure 7.9. Timeout-based caches (left) and explicitly invalidated caches (right)

Basically, the two approaches are "easy and incorrect" or "complicated and correct." But "incorrect" in this case simply means that there is a margin of error. For applications that are tolerant of an error-margin in their caching infrastructures, timeout-based caches are a spectacularly simplistic solution.

If we choose a timeout for our cached elements, it means that we must throw out our cached values after that amount of time, even if the underlying data has not changed. This causes the next request for that data to be performed against the database, and the result will be stored in the cache until the timeout elapses once again. It should be obvious after a moment's thought that if the overall application updates data infrequently with respect to the number of times the data is requested, cached data will be valid for many requests.

On a news site, it is likely that the read-to-write ratio for news articles is 1,000,000 to 1 or higher (new user comments count as writes). This means that the cached data could be valid for minutes, hours, or days. However, with a timeout-based cache, we cannot capitalize on this. Why? We must set our cache element timeout sufficiently low to allow for newly published articles to be "realized" into the cache. Essentially, the vast majority of cache purges will be wasted just to account for the occasional purge that results in a newer replacement.
Ideally, we want to cache things forever, and when they change, we will purge them. Because we control how articles and comments are published through the website, it is not difficult to integrate a cache invalidation notification into the process of publishing content.

We had three methods that induced all the database costs on the article pages: $user->leftNav, SIA::Article->new, and $article->commentary. Let's revise and rename these routines by placing an _ in front of each and implementing caching wrappers around them:

    sub leftNav {
      my $self = shift;
      my $userid = shift;
      # Serve the answer from the cache when it is there.
      my $answer = $global_cache->get("user_leftnav_$userid");
      return $answer if($answer);
      # Cache miss: run the original (renamed) query and remember the result.
      $answer = $self->_leftNav($userid);
      $global_cache->set("user_leftnav_$userid", $answer);
      return $answer;
    }

Wrapping SIA::Article methods in a similar fashion is left as an exercise for the reader. In the startup code for our web server, we can instantiate the $global_cache variable like so:

    use Cache::Memcached;
    $global_cache = new Cache::Memcached {
      'servers' => [ "10.0.2.1:11211", "10.0.2.2:11211",
                     "10.0.2.3:11211", "10.0.2.4:11211" ],
      'debug' => 0,
    };

Now we start a memcached server on each web server in the cluster and voila! Now, as articles and their comments are requested, we inspect the cache. If what we want is there, we use it; otherwise, we perform the work as we did before, but place the results in the cache to speed subsequent accesses. This is depicted in Figure 7.10.

Figure 7.10. A memcached cluster in use

What do we really gain by this? Because our data is usually valid for long periods of time (thousands or even millions of page views), we no longer have to query the database on every request. The load on the database is further reduced because memcached is a distributed caching daemon. If configured as a cluster of memcached processes (one on each machine), items placed into the cache on one machine will be visible to other machines searching for that key.
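
The "cache forever, purge on change" policy needs a hook in the publishing path. The following is a minimal sketch of that idea, not the site's actual code: `%cache` stands in for `$global_cache`, `%database` for the authoritative store, and `article_content` and `publish_article` are hypothetical names. With Cache::Memcached, the three hash operations would correspond to its `get`, `set`, and `delete` methods.

```perl
use strict;
use warnings;

# Hypothetical stand-ins so the sketch runs on its own.
my %cache;                                  # plays the role of $global_cache
my %database = (12345 => 'first draft');    # authoritative article store
my $db_reads = 0;                           # counts trips to the "database"

# Read path: consult the cache, fall back to the database on a miss.
sub article_content {
    my ($articleid) = @_;
    my $key = "article_content_$articleid";
    return $cache{$key} if exists $cache{$key};
    $db_reads++;
    my $content = $database{$articleid};
    $cache{$key} = $content;                # no timeout: valid until purged
    return $content;
}

# Write path: update the database, then purge the now-stale cache entry.
sub publish_article {
    my ($articleid, $content) = @_;
    $database{$articleid} = $content;
    delete $cache{"article_content_$articleid"};
    return;
}

article_content(12345) for 1 .. 3;            # one miss, then two hits
publish_article(12345, 'corrected copy');     # invalidates the cached entry
my $after_publish = article_content(12345);   # misses once, sees the new copy
```

Only two database reads happen across four page views and one publication; every read between publications is served from the cache.
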
Because it is a cache, if a machine were to crash, the partition of data that machine was caching would disappear, and the cache clients would deterministically fail over to another machine. Because it is a cache, it is as if the data were prematurely purged: no harm, no foul.

We have effectively converted all the article queries to cache hits (as we expect our cache hit rate for that data to be nearly 100%). Although the cache hit rate for the user information is not as high, the data is unique per user, and the average user only visits a handful of pages on the site. If the average user visits five pages, we expect the first page to be a cache miss within the $user->leftNav method. However, the subsequent four page views will be cache hits, yielding an 80% cache hit rate. Averaged over the three lookups per page (two at nearly 100% and one at 80%), this is just over a 93% overall cache hit rate. In other words, we have reduced the number of queries hitting our database by a factor of 15, which is not bad.

How Does memcached Scale?

One of the advantages of memcached is that it is a distributed system. If 10 machines participate in a memcached cluster, the cached data will be distributed across those 10 machines. On a small and local-area scale this is good. Network connectivity between machines is fast, and memcached performs well with many clients if you have an operating system with an advanced event handling system (epoll, kqueue, /dev/poll, and so on).

However, if you find that memcached is working a bit too hard, and your database isn't working hard enough, there is a solution. We can artificially segment out memcached clusters so that the number of nodes over which each cache is distributed can be controlled (see Figure 7.11).

Figure 7.11. An artificially segmented memcached system

This administrative segmentation is useful when you have two or more clusters separated by an expensive network link, or you have too many concurrent clients.

Healthy Skepticism

We could stop here.
Although this is an excellent caching solution and may be a good stopping point for some caching needs, we have some fairly specialized data that is ripe for a better caching architecture.

The first step is to understand what is lacking in our solution up to this point. Two things are clearly missing: We shouldn't have to go to another machine to fetch our article data, and the cache hit rate on our user preferences data is abysmally low.

Unfortunately, memcached isn't replicated, so these accesses still require a network access. If N machines participate in the memcached cluster, there is only a 1/N chance that the cached item will reside on the local machine, so the larger the cluster grows, the higher the probability that a cache lookup will visit a remote machine. Memcached counts on the fact that local network access to another machine running memcached is cheaper than requerying the database directly. Although this is almost always true, "cheaper" doesn't mean "cheapest," and certainly not free.

Why is lack of replication unfortunate? The article data will have nearly a 100% cache hit rate, and it is accessed often. This means that every machine will want the data, and nearly every time it gets the data, it will be identical. It should be a small mental leap that we can benefit from having that data cached locally on every web server.

For Those Who Think Replication Is Expensive

Many argue that replicating data like this is expensive. However, both active and passive replication are possible in this sort of architecture.

The beauty of a cache is that it is not the definitive source of data, so it can be purged at will and simply be "missing" when convenient. This means that replication need not be atomic in many cases. Simply propagating the cache by publishing the cache operations (add/get/delete) to all participating parties will work most of the time. Propagation of operations as they happen is called active replication.
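
Active replication as just described can be sketched as follows. This is a toy model under loud assumptions: each hash in `@nodes` stands in for the local cache on one web server, and `publish` delivers operations by direct assignment where a real system would use the network. None of these names come from memcached or any replication library.

```perl
use strict;
use warnings;

# Each hash stands in for the local cache on one web server (hypothetical).
my @nodes = ({}, {}, {});

# Publish a cache operation to every participating node. Delivery here is a
# direct hash update; a real deployment would broadcast over the network.
sub publish {
    my ($op, $key, $value) = @_;
    for my $node (@nodes) {
        if ($op eq 'set') {
            $node->{$key} = $value;
        }
        elsif ($op eq 'delete') {
            delete $node->{$key};
        }
    }
    return;
}

publish('set', 'article_12345', 'cached article body');
my @copies = grep { exists $_->{article_12345} } @nodes;   # present on all nodes

publish('delete', 'article_12345');
my @stale = grep { exists $_->{article_12345} } @nodes;    # purged from all nodes
```

Because nothing here is atomic, a node that misses an operation can simply empty its hash and start over, which is the "purged at will" property the text relies on.
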
Alternatively, in a nonreplicated distributed system such as memcached, we find that one machine will have to pull a cache element from another machine. At this point, it is essentially no additional work to store that data locally. This is passive replication (like cache-on-demand for caches). Passive replication works well with timeout-based caches. If the cached elements must be explicitly invalidated (as in our example), active replication of delete and update events must still occur to maintain consistency across copies.

Because we are not dealing with ACID transactions and we can exercise an "if in doubt, purge" policy, implementing replication is not so daunting. As an alternative to memcached, Spread Concepts' RHT (Replicated Hash Table) technology accomplishes active replication with impressive performance metrics.

These simplistic replication methods won't work if operations on the cache must be atomic. However, if you are using a caching facility for those types of operations, you are likely using the wrong tool for the job; in Chapter 8 we will discuss replicated databases.

What else isn't optimal with the integrated caching architecture? The user information we are caching has an abysmally low cache hit rate; 80% is awful. Why is it so bad? The problem is that the data itself is fetched (and differs) on a per-user basis. In a typical situation, a user will arrive at the site, and the first page that the user visits will necessitate a database query to determine the layout of the user's left navigation. As a side effect, the data is placed in the cache. The concept is that when the user visits subsequent pages, that user's data will be in the cache.

However, we can't keep data in the cache forever; the cache is only so large. As more new data (new users' navigation data, article data, and every other thing we need to cache across our web architecture) is placed in the cache, we will run out of room and older elements will be purged.
Unless our cache is very large (enough to accommodate all the leftnav data for every regular user), we will end up purging users' data and thereby instigating a cache miss and database query upon subsequent visits by those users.

Tackling User Data

User data, such as long-term preferences and short-term session state, has several unique properties that allow it to be cached differently from other types of data. The two most important properties are use and size:

Now, because this is still the chapter on application caching, we still want to cache this. The problem with caching this data with memcached is that each piece of user data will be used for short bursts that can be days apart. Because our distributed system is limited in size, it uses valuable resources to store that data unused in the cache for long periods of time.

However, if we were to increase the size of our distributed system dramatically, perhaps the resources would be abundant and this information could be cached indefinitely...and we just happen to have a multimillion-node distributed caching system available to us: the machine of every user.

The only tricky part about using a customer's machine as a caching node is that it is vital that only information pertinent to that customer be stored there (for security reasons). Cookies give us this.

The cookie is perhaps the most commonly overlooked jewel of the web environment. So much attention is poured into making a web architecture's load balancing, web servers, application servers, and database servers fast, reliable, and scalable that the one layer of the architecture that is the most powerful and very scalable is simply overlooked.

By using a user's cookie to store user-pertinent information, we have a caching mechanism that is user-centric and wildly scalable. The information we store in this cache can also be semipermanent. Although we can't rely on the durability of the information we store there, we can, in most cases, rely on a long life. Again, this is a cache; if it is lost, we just fetch the information from the authoritative source and replace it in the cache.

To accomplish this in our current article page, we will add a method to our SIA::Cookie class that is capable of "acting" just like the leftNav method of the SIA::User class, except that it caches the data in the cookie for the user to store locally.
We'll need to pass the $user as an argument to leftNav in this case so that the method can run the original action in the event of an empty cookie (also known as a cache miss). We'll add the following two methods to our SIA::Cookie package:

    use MIME::Base64;  # provides encode_base64 and decode_base64

    # Serialize the navigation structure and store it in the user's cookie.
    sub setLeftNav {
      my ($self, $ln) = @_;
      my $tight = '';
      foreach my $toplevel (@$ln) {
        $tight .= "\n" if(length($tight));
        $tight .= $toplevel->{group}."$;";
        $tight .= join("$;", map { $_->{news_type}.",".$_->{type_name} }
                                 @{$toplevel->{type}});
      }
      ($tight = encode_base64($tight)) =~ s/[\r\n]//g;
      $self->{Response}->Cookies('leftnav', $tight);
      return $ln;
    }

    # Read the navigation from the cookie; on a miss, fall back to $user.
    sub leftNav {
      my ($self, $user) = @_;
      my $tight = $self->{Request}->Cookies('leftnav');
      return $self->setLeftNav($user->leftNav) unless($tight);
      $tight = decode_base64($tight);
      my @nav;
      foreach my $line (split /\n/, $tight) {
        my ($group, @vals) = split /$;/, $line;
        my @types;
        foreach my $item (@vals) {
          my ($nt, $tn) = split /,/, $item, 2;
          push @types, { news_type => $nt, type_name => $tn };
        }
        push @nav, { group => $group, type => \@types };
      }
      return \@nav;
    }

We now copy /hefty/art1.html to /hefty/art2.html and change it to use these new SIA::Cookie methods by replacing the loop header that iterates over $user->leftNav:

    <% foreach my $toplevel (@{$user->leftNav}) { %>

with one that incorporates the $cookie->leftNav method invocation:

    <% foreach my $toplevel (@{$cookie->leftNav($user)}) { %>

You don't really need to understand the code changes as long as you understand what they did, what we gain from them, and their size (roughly 30 new lines and 1 changed line).

SIA::Cookie::setLeftNav takes the results of our original $user->leftNav, serializes them, base64 encodes them (so that the value doesn't contain any characters that aren't allowed in cookies), and sends the value to the user for permanent storage.

SIA::Cookie::leftNav checks the user's cookie, and if the value is not there, calls the setLeftNav method. If it is there, it base64 decodes it and deserializes it into a usable structure.
In our original design, our application required a database query on every page load to retrieve the data needed to render the left navigation bar. With our first stab at speeding this up using integrated caching, we saw good results with an approximate 80% cache hit rate. Note, however, that the database queries and cache lookups stressed a limited number of machines that were internal to the architecture. This contention is the archenemy of horizontal scalability.

What we have done with cookies is simple (32 lines of code) but effective. In our new page, when a user visits the site for the first time (or makes a change to her preferences), we must access the database because it is a cache miss. However, the new caching design places the cached value on the visitor's machine. It will remain there forever (effectively) without using our precious resources. In practice, aside from initial cache misses, we now see a cache hit rate that is darn near close to 100%.

Addressing Security and Integrity

Because this is a cache, we are not so concerned with durability. However, the nature of some applications requires that the data fed to them be safe and intact. Any time authentication, authorization, and/or identity information is stored within a cookie, the landscape changes slightly.

Although our news site isn't so concerned with the security and integrity of user preferences, a variety of other applications impose two demands on information given to and accepted from users. The first demand is that a user cannot arbitrarily modify the data, and the second is that the user should not be able to determine the contents of the data. Many developers and engineers avoid using a user's cookie as a storage mechanism due to one or both of these requirements. However, they do so wrongly because both issues can be satisfied completely with cryptography.
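
The integrity half of that claim can be sketched with core Perl modules alone. This is a hedged illustration, not the book's code: `seal_cookie`, `open_cookie`, and `$secret` are hypothetical names, and HMAC-SHA-256 is used here as the keyed digest. Encryption for privacy is not shown because it needs a non-core cipher module.

```perl
use strict;
use warnings;
use Digest::SHA  qw(hmac_sha256_hex);
use MIME::Base64 qw(encode_base64 decode_base64);

# Hypothetical server-side secret; it must never be sent to the client.
my $secret = 'replace-with-a-long-random-secret';

# Encode a payload and append a keyed digest so tampering can be detected.
sub seal_cookie {
    my ($payload) = @_;
    my $body = encode_base64($payload, '');    # '' suppresses line breaks
    return $body . '.' . hmac_sha256_hex($body, $secret);
}

# Return the payload if the digest matches; otherwise return undef so the
# caller treats it as a cache miss and asks the authoritative source.
# (A production version would compare the digests in constant time.)
sub open_cookie {
    my ($cookie) = @_;
    my ($body, $mac) = split /\./, $cookie, 2;
    return undef unless defined $body && defined $mac;
    return undef unless $mac eq hmac_sha256_hex($body, $secret);
    return decode_base64($body);
}

my $sealed = seal_cookie("News;1,World");
my $intact = open_cookie($sealed);             # round-trips unchanged

(my $forged = $sealed) =~ s/^./#/;             # user edits the first character
my $rejected = open_cookie($forged);           # digest no longer matches
```

A rejected cookie costs nothing more than a cache miss, so the failure path is the same one the cookie cache already has.
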
In the previous example, immediately before base64 encoding and after base64 decoding, we can use a symmetric encryption algorithm (for example, AES) and a private secret to encrypt the data, thereby ensuring privacy. Then we can apply a secure keyed message digest (for example, an HMAC built on a SHA hash) to the result to ensure integrity.

This leaves us with a rare but real argument about cookie theft (the third requirement). The data that we have allowed the user to store may be safe from both prying eyes and manipulation, but it remains valid no matter who presents it. This means that by stealing the cookie, it is often possible to make the web application believe that the thief is the victim: identity theft.

This debacle is not a show-stopper. If you want to protect against theft of cookies, a time stamp can be added (before encrypting). On receiving and validating a cookie, the time stamp is updated, and the updated cookie is pushed back to the user. Cookies that do not have a time stamp within X of the current time will be considered invalid, and information must be repopulated from the authoritative source, perhaps requiring the user to reauthenticate.

Two-Tier Execution

Now that we have user preferences just about as optimal as they can get, let's move on to optimizing our article data caching. We briefly discussed why our memcached implementation wasn't perfect, but let's rehash it quickly.

Assuming that we use memcached in a cluster, the data that we cache will be spread across all the nodes in that cluster. When we "load balance" an incoming request to a web server, we do so based on load. It should be obvious that no web server should be uniquely capable of serving a page, because if that web server were to crash that page would be unavailable. Instead, all web servers are capable of serving all web pages. So, we are left with a problem.
When a request comes in and is serviced by a web server, and that web server checks the memcached cache for the requested data, the data will be on another machine n-1 times out of n (where n is the number of nodes in our cluster). As n grows, the chance of not having the cached element on the local machine approaches certainty. Although going to a remote memcached server is usually much faster than querying the database, it still has a cost. Why don't we place this data on the web server itself?

We can accomplish this by an advanced technique called two-tier execution. Typically in a web scripting language (for example, PHP, ASP, Apache::ASP, and so on), the code in a page is compiled and executed when the page is viewed. Compilation of scripts is a distributed task, and no shared resources are required.

Removing the compilation step (or amortizing it out) is a technique commonly used to increase performance. PHP has a variety of compiler caches including APC, Turck MMCache, PHP Accelerator, and Zend Performance Suite. Apache::ASP uses mod_perl as its underlying engine, which has the capability to reuse compiled code. Effectively, recompiling a scripted web page is more of a performance issue than one of scalability, and it has been solved well for most popular languages.

Execution of code is the real resource consumer. Some code runs locally on the web server. XSLT transforms and other such content manipulations are great examples of horizontally scalable components because they use resources only local to that web server and thus scale with the cluster. However, execution that must pull data from a database or other shared resources takes time, requires context switches, and is generally good to avoid.

So, if we have our data in a database and want to avoid accessing it there, we stick it in a distributed cache. If we have our data in a distributed cache and want to avoid accessing it there, where can we put it? In our web pages.
If we look back at our /hefty/art2.html example, we see two types of code execution: one that generates the bulk of the page and the other that generates the left navigation system. So, why are they so different? The bulk of the page will be the same on every page load, no matter who views it. The left navigation system is different for almost every page load. This is the precise reason that a transparent caching solution will not provide a good cache hit rate: it is only capable of caching entire pages.

To eliminate database or cache requests for the main content of the page, we could have the author code the page content directly into the HTML. Is this an awful idea? Yes. Authors do not need to know about the page where the data will reside; they should concentrate only on content. Also, the content (or some portion of the content) will most likely be used in other pages. It is simply not feasible to run a news site like the old static HTML sites of the early 90s.

However, we can have the system do this. If we augment our scripting language to allow for two phases of execution, we can have the system actually run the script once to execute all the slowly changing content (such as article information and user comments) and then execute the result of the first execution to handle dynamic content generation that differs from viewer to viewer. To accomplish this, we will do three things:

    <%
    use SIA::Article;
    use SIA::Utils;
    my $articleid = $Request->QueryString('articleid');
    my $article = new SIA::Article($articleid);
    %>
    <[%
    use SIA::User;
    use SIA::Cookie;
    my $cookie = new SIA::Cookie($Request, $Response);
    my $user = new SIA::User($cookie->userid);
    %]>
    <!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01//EN"
      "http://www.w3.org/TR/html4/strict.dtd">
    <html>
    <head>
    <title>example.com: <%= $article->title %></title>
    <meta http-equiv="Content-Type" content="text/html">
    <link rel="stylesheet" href="/sia/css/main.css" media="screen" />
    </head>
    <body>
    <div>
    <ul>
    <[% foreach my $toplevel (@{$cookie->leftNav($user)}) { %]>
    <li><[%= $toplevel->{group} %]></li>
    <[% foreach my $item (@{$toplevel->{type}}) { %]>
    <li><a href="/sia/news/page/<[%= $item->{news_type} %]>">
    <[%= $item->{type_name} %]></a></li>
    <[% } %]>
    <[% } %]>
    </ul>
    </div>
    <div>
    <h1><%= $article->title %></h1>
    <h2><%= $article->subtitle %></h2>
    <div><p><%= $article->author_name %>
    on <%= $article->pubdate %>:</p>
    <div>
    <%= $article->content %>
    </div>
    <div>
    <%= SIA::Utils::render_comments($article->commentary) %>
    </div>
    </div>
    </body>
    </html>

As you can see, no new code was introduced. We introduced a new script encapsulation denoted by <[% and %]>, which Apache::ASP does not recognize. So, upon executing this page (directly), Apache::ASP will execute all the code within the usual script delimiters (<% and %>), and all code encapsulated by our new delimiters will be passed directly through to the output.

We will not ever directly execute this page, however. Our renderer is responsible for executing this page and for transforming all <[% and %]> to <% and %> in the output, so that it is ready for another pass by Apache::ASP. This intermediate output will be saved to disk.
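
The delimiter transformation the renderer performs can be tried in isolation. In this sketch the function name `promote_delimiters` and the sample string are assumptions made for illustration; the two substitutions strip one layer of brackets, so `<[%` becomes `<%` and `%]>` becomes `%>`.

```perl
use strict;
use warnings;

# Promote second-level delimiters (<[% ... %]>) to first-level ones
# (<% ... %>) by stripping one layer of brackets from each.
sub promote_delimiters {
    my ($doc) = @_;
    $doc =~ s/<\[?(\[*)%/<$1%/g;
    $doc =~ s/%\]?(\]*)>/%$1>/g;
    return $doc;
}

# What a first pass might emit: the article title is already plain text,
# while the per-user code is still wrapped in second-level delimiters.
my $first_pass  = '<h1>Title</h1><[%= $toplevel->{group} %]>';
my $second_pass = promote_delimiters($first_pass);
# $second_pass is now: <h1>Title</h1><%= $toplevel->{group} %>
```

Because the patterns keep any additional brackets (the captured `$1`), the same substitution would peel exactly one layer from a hypothetical third level such as `<[[%`, leaving `<[%` for a later pass.
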
As such, we will be able to execute the intermediate output directly as an Apache::ASP page. The renderer (/internal/render.html) does this work:

    <%
    use strict;
    use Apache;
    die "Direct page requests not allowed.\n"
      if(Apache->request->is_initial_req);
    my $articleid = $Request->QueryString('articleid');
    # Make sure the article is a number
    die "Articleid must be numeric" if($articleid =~ /\D/);
    # Apply Apache::ASP to our heavy template
    my $doc = $Response->TrapInclude("../hefty/art3.html");
    # Reduce 2nd level cache tags to 1st level cache tags
    $$doc =~ s/<\[?(\[*)%/<$1%/gs;
    $$doc =~ s/%\]?(\]*)>/%$1>/gs;
    # Store our processed heavy template to be processed by Apache::ASP again.
    # Write to a temporary name and rename so readers never see a partial file.
    my $cachefile = "../light/article/$articleid.html";
    if(open(C2, ">$cachefile.$$")) {
      print C2 $$doc;
      close C2;
      rename("$cachefile.$$", $cachefile) || unlink("$cachefile.$$");
    }
    # Short circuit and process the ASP we just wrote out.
    $Response->Include($doc);
    %>

Now for the last step, which is to short-circuit the system to use /light/article/12345.html if it exists. Otherwise, we need to run our renderer at /internal/render.html?articleid=12345. This can be accomplished with mod_rewrite as follows:

    RewriteEngine On
    RewriteCond %{REQUEST_URI} news/article/(.*)
    RewriteCond /data/www.example.com/light/$1 -f
    RewriteRule ^/news/(.*) /data/www.example.com/light/$1 [L]
    RewriteRule ^/news/article/(.*)\.html$ /data/www.example.com/internal/render.html?articleid=$1 [L]

Cache Invalidation

This system, although cleverly implemented, is still simply a cache. However, it caches data as aggressively as possible in a compiled form. Like any cache that isn't timeout-based, it requires an invalidation system.

Invalidating data in this system is as simple as removing files from the file system. If the data or comments for article 12345 have changed, we simply remove /data/www.example.com/light/article/12345.html. That may sound easy, but remember that we can (and likely will) have these compiled pages on every machine in the cluster.
This means that when an article is updated, we must invalidate the cache files on all the machines in the cluster. If we distribute cache delete requests to all the machines in the cluster, we can accomplish this. However, if a machine is down or otherwise unavailable, it may miss the request and have a stale cache file when it becomes available again. This looks like a classic problem in database synchronization; however, on closer inspection, it is less complicated than it seems because this data isn't crucial. When a machine becomes unavailable or crashes, we simply blow away its cache before it rejoins the cluster.
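
The per-machine side of this invalidation scheme can be sketched as two small routines. Everything here is an assumption made for illustration: `$light_root` is a temporary directory standing in for /data/www.example.com/light, and `invalidate_article` and `purge_all` are hypothetical names. How the delete request reaches each machine is left out.

```perl
use strict;
use warnings;
use File::Temp qw(tempdir);
use File::Path qw(make_path);

# Hypothetical cache root standing in for /data/www.example.com/light.
my $light_root = tempdir(CLEANUP => 1);
make_path("$light_root/article");

# Invalidate one article: remove its compiled page if it is present.
# Returns the number of files removed (0 or 1).
sub invalidate_article {
    my ($articleid) = @_;
    die "Articleid must be numeric" if $articleid =~ /\D/;
    my $file = "$light_root/article/$articleid.html";
    return -e $file ? unlink($file) : 0;
}

# Empty the whole cache, e.g. before a crashed machine rejoins the cluster.
# Returns the number of files removed.
sub purge_all {
    my $dir = "$light_root/article";
    opendir(my $dh, $dir) or return 0;
    my @files = grep { /\.html\z/ } readdir($dh);
    closedir($dh);
    return unlink(map { "$dir/$_" } @files);
}

# Create two compiled pages, then invalidate one and purge the rest.
for my $id (12345, 67890) {
    open(my $fh, '>', "$light_root/article/$id.html") or die "open: $!";
    print {$fh} "<html>compiled page $id</html>\n";
    close($fh);
}
my $removed_one  = invalidate_article(12345);   # the updated article
my $removed_rest = purge_all();                 # everything still cached
```

Removing a file that is already gone is harmless, so a machine can safely receive the same delete request more than once.
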
