Introduction

Internet server programs supporting mission-critical applications such as financial transactions, database access, corporate intranets, and other key functions must run 24 hours a day, seven days a week. Networks must also be able to scale performance to handle large volumes of client requests without creating unwanted delays. For these reasons, clustering is of wide interest to the enterprise. Clustering enables a group of independent servers to be managed as a single system for higher availability, easier manageability, and greater scalability.

The Microsoft Windows 2000 Advanced Server and Datacenter Server operating systems include two clustering technologies designed for this purpose: Cluster service, which is intended primarily to provide failover support for critical line-of-business applications such as databases, messaging systems, and file/print services; and Network Load Balancing, which serves to balance incoming IP traffic among multinode clusters. We will treat this latter technology in detail here.

Network Load Balancing provides scalability and high availability to enterprise-wide TCP/IP services, such as Web, Terminal Services, proxy, VPN, and streaming media services. Network Load Balancing brings special value to enterprises deploying TCP/IP services, such as e-commerce applications, that link clients with transaction applications and back-end databases.

Network Load Balancing servers (also called hosts) in a cluster communicate among themselves to provide key benefits, including:

- Scalability. Network Load Balancing scales the performance of a server-based program, such as a Web server, by distributing its client requests across multiple servers within the cluster. As traffic increases, additional servers can be added to the cluster, with up to 32 servers possible in any one cluster.

- High availability. Network Load Balancing provides high availability by automatically detecting the failure of a server and repartitioning client traffic among the remaining servers within ten seconds, while providing users with continuous service.

Network Load Balancing distributes IP traffic to multiple copies (or instances) of a TCP/IP service, such as a Web server, each running on a host within the cluster. Network Load Balancing transparently partitions the client requests among the hosts and lets the clients access the cluster using one or more "virtual" IP addresses. From the client's point of view, the cluster appears to be a single server that answers these client requests. As enterprise traffic increases, network administrators can simply plug another server into the cluster.

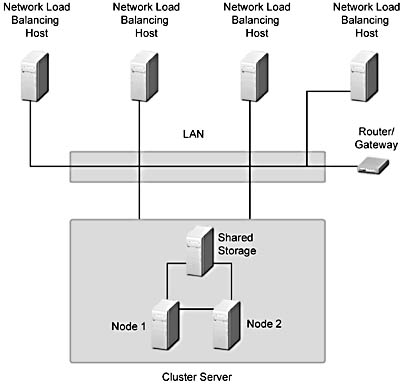

For example, the clustered hosts in Figure B.1 work together to service network traffic from the Internet. Each server runs a copy of an IP-based service, such as Internet Information Services 5.0 (IIS), and Network Load Balancing distributes the networking workload among them. This speeds up normal processing so that Internet clients see faster turnaround on their requests. For added system availability, the back-end application (a database, for example) may operate on a two-node cluster running Cluster service.

The four-host cluster shown in Figure B.1 works as a single virtual server to handle network traffic. Each host runs its own copy of the server with Network Load Balancing distributing the work among the four hosts.

Figure B.1 A four-host cluster

Advantages of Network Load Balancing

Network Load Balancing is superior to other software solutions such as round-robin DNS (RRDNS), which distributes workload among multiple servers but does not provide a mechanism for server availability. If a server within the cluster fails, RRDNS, unlike Network Load Balancing, will continue to send it work until a network administrator detects the failure and removes the server from the DNS address list. This results in service disruption for clients. Network Load Balancing also has advantages over other load-balancing solutions, both hardware- and software-based, that introduce single points of failure or performance bottlenecks by using a centralized dispatcher. Because Network Load Balancing has no proprietary hardware requirements, any industry-standard compatible computer can be used. This provides significant cost savings when compared to proprietary hardware load-balancing solutions.

The unique and fully distributed software architecture of Network Load Balancing enables it to deliver the industry's best load-balancing performance and availability. The specific advantages of this architecture are described in the "Network Load Balancing Architecture" section.

Installing and Managing Network Load Balancing

Network Load Balancing is automatically installed and can be optionally enabled on Windows 2000 Advanced Server and Windows 2000 Datacenter Server. It operates as an optional service for local area network (LAN) connections and can be enabled for one LAN connection in the system; this LAN connection is known as the cluster adapter. No hardware changes are required to install and run Network Load Balancing. Since it is compatible with almost all Ethernet and Fiber Distributed Data Interface (FDDI) network adapters, it has no specific hardware compatibility list.

IP Addresses

Once Network Load Balancing is enabled, its parameters are configured using its Properties dialog box, as described in the online help guide. The cluster is assigned a primary IP address, which represents a virtual IP address to which all cluster hosts respond. The remote control program provided as a part of Network Load Balancing uses this IP address to identify a target cluster. Each cluster host also can be assigned a dedicated IP address for network traffic unique to that particular host within the cluster. Network Load Balancing never load-balances traffic for the dedicated IP address. Instead, it load-balances incoming traffic addressed to all IP addresses other than the dedicated IP address.

When configuring Network Load Balancing, it is important to enter the dedicated IP address, primary IP address, and other optional virtual IP addresses into the TCP/IP Properties dialog box in order to enable the host's TCP/IP stack to respond to these IP addresses. The dedicated IP address is always entered first so that outgoing connections from the cluster host are sourced with this IP address instead of a virtual IP address. Otherwise, replies to the cluster host could be inadvertently load-balanced by Network Load Balancing and delivered to another cluster host. Some services, such as the Point-to-Point Tunneling Protocol (PPTP) server, do not allow outgoing connections to be sourced from a different IP address, and thus a dedicated IP address cannot be used with these services.

Host Priorities

Each cluster host is assigned a unique host priority in the range of 1 to 32, where lower numbers denote higher priorities. The host with the highest host priority (lowest numeric value) is called the default host. It handles all client traffic for the virtual IP addresses that is not specifically intended to be load-balanced. This ensures that server applications not configured for load balancing only receive client traffic on a single host. If the default host fails, the host with the next highest priority takes over as default host.
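The default-host rule described above can be illustrated with a minimal sketch. The function name and the list-of-integers representation are assumptions made for this example only; they are not part of Network Load Balancing itself.

```python
def default_host(live_priorities):
    """Return the host priority of the default host among the live hosts.

    `live_priorities` is a hypothetical list of the unique host priorities
    (1 to 32) of the hosts currently in the cluster. Lower numbers denote
    higher priority, so the default host is the numeric minimum.
    """
    if not live_priorities:
        raise ValueError("cluster has no live hosts")
    if len(set(live_priorities)) != len(live_priorities):
        raise ValueError("host priorities must be unique")
    for priority in live_priorities:
        if not 1 <= priority <= 32:
            raise ValueError(f"host priority out of range: {priority}")
    return min(live_priorities)


# Host 1 is the default host; if it fails, host 2 takes over.
print(default_host([3, 1, 2]))
print(default_host([3, 2]))
```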

Port Rules

Network Load Balancing uses port rules to customize load balancing for a consecutive numeric range of server ports. Port rules can select either multiple-host or single-host load-balancing policies. With multiple-host load balancing, incoming client requests are distributed among all cluster hosts and a load percentage can be specified for each host. Load percentages allow hosts with higher capacity to receive a larger fraction of the total client load. Single-host load balancing directs all client requests to the host with highest handling priority. The handling priority essentially overrides the host priority for the port range and allows different hosts to individually handle all client traffic for specific server applications. Port rules also can be used to block undesired network access to certain IP ports.
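As a rough illustration of how port rules partition the port space, the following sketch models a rule as a consecutive port range plus a policy. The class, the field names, and the policy strings ("multiple", "single", "disabled") are hypothetical labels chosen for this example; they are not the product's configuration format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PortRule:
    first_port: int
    last_port: int
    mode: str  # "multiple", "single", or "disabled" (illustrative names)


def find_rule(rules, port):
    """Return the rule whose consecutive port range contains `port`, or None."""
    for rule in rules:
        if rule.first_port <= port <= rule.last_port:
            return rule
    return None


def handling_for(rules, port):
    """Describe how traffic to `port` would be treated under `rules`."""
    rule = find_rule(rules, port)
    if rule is None:
        # Traffic not covered by any rule goes to the default host.
        return "default host"
    return {
        "multiple": "distributed among all hosts",
        "single": "host with highest handling priority",
        "disabled": "blocked",
    }[rule.mode]


rules = [
    PortRule(80, 80, "multiple"),
    PortRule(443, 443, "single"),
    PortRule(23, 23, "disabled"),
]
for port in (80, 443, 23, 8080):
    print(port, "->", handling_for(rules, port))
```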

When a port rule uses multiple-host load balancing, one of three client affinity modes is selected. When no client affinity mode is selected, Network Load Balancing load-balances client traffic from one IP address and different source ports on multiple cluster hosts. This maximizes the granularity of load balancing and minimizes response time to clients. To assist in managing client sessions, the default single-client affinity mode load-balances all network traffic from a given client's IP address on a single cluster host. The class C affinity mode extends this technique by directing all client traffic from a single class C address space to one cluster host. See the "Managing Application State" section for more information on session support.
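One way to picture the three affinity modes is as three different choices of the key that decides which host handles a connection. The sketch below is an assumption about how such a key could be formed; the function name and mode strings are hypothetical, and the text does not describe the actual mapping algorithm used by Network Load Balancing.

```python
import ipaddress


def affinity_key(client_ip, client_port, mode):
    """Return the tuple that a load-balancing hash would be computed over.

    Mode names are illustrative:
      "none"    - IP address and source port (finest granularity)
      "single"  - IP address only (one client sticks to one host)
      "class_c" - upper 24 bits of the IP address (a class C range sticks)
    """
    ip = int(ipaddress.IPv4Address(client_ip))
    if mode == "none":
        return (ip, client_port)
    if mode == "single":
        return (ip,)
    if mode == "class_c":
        return (ip & 0xFFFFFF00,)
    raise ValueError(f"unknown affinity mode: {mode}")


# Two connections from the same client share a key under single affinity,
# and two proxies in the same class C range share a key under class C affinity.
print(affinity_key("10.1.2.3", 1025, "single") == affinity_key("10.1.2.3", 1026, "single"))
print(affinity_key("10.1.2.3", 1025, "class_c") == affinity_key("10.1.2.200", 4000, "class_c"))
```

Connections that share a key are always mapped to the same host, which is why coarser keys make session handling easier at the cost of a less even load distribution.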

By default, Network Load Balancing is configured with a single port rule that covers all ports (0-65,535) with multiple-host load balancing and single-client affinity. This rule can be used for most applications. It is important that this rule not be modified for VPN applications and whenever IP fragmentation is expected. This ensures that fragments are efficiently handled by the cluster hosts.

Remote Control

Network Load Balancing provides a remote control program (Wlbs.exe) that allows system administrators to remotely query the status of clusters and control operations from a cluster host or from any networked computer running Windows 2000. This program can be incorporated into scripts and monitoring programs to automate cluster control. Monitoring services are widely available for most client/server applications. Remote control operations include starting and stopping either single hosts or the entire cluster. In addition, load balancing for individual port rules can be enabled or disabled on one or more hosts. New traffic can be blocked on a host while allowing ongoing TCP connections to complete prior to removing the host from the cluster. Although remote control commands are password-protected, individual cluster hosts can disable remote control operations to enhance security.
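Because the remote control program can be driven from scripts, a monitoring script might assemble its command lines with a small helper like the one below. This is a sketch only: the helper name is hypothetical, the subcommand names and the `cluster:host` target form are recalled from the Windows 2000 tool rather than taken from this text, and they should be checked against the output of `wlbs help` before use. The helper builds the argument list but does not run it.

```python
# Subcommands assumed here; verify against `wlbs help` on the target system.
ALLOWED_COMMANDS = {"query", "start", "stop", "drainstop"}


def wlbs_argv(command, cluster=None, host=None):
    """Build an argument list for the remote control program (not executed).

    `cluster` and `host` are optional; when both are given they are joined
    as "cluster:host" to address one host of a remote cluster.
    """
    if command not in ALLOWED_COMMANDS:
        raise ValueError(f"unsupported command: {command}")
    if host is not None and cluster is None:
        raise ValueError("a host can only be named together with a cluster")
    argv = ["wlbs", command]
    if cluster is not None:
        argv.append(f"{cluster}:{host}" if host is not None else cluster)
    return argv


# Query the local host, then drain and stop host 2 of a hypothetical cluster.
print(wlbs_argv("query"))
print(wlbs_argv("drainstop", cluster="10.0.0.100", host="2"))
```

A script could pass the returned list to `subprocess.run` on a Windows 2000 host; password handling is deliberately left out of this sketch.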

Managing Server Applications

Server applications need not be modified for load balancing. However, the system administrator must start load-balanced applications on all cluster hosts. Network Load Balancing does not directly monitor server applications, such as Web servers, for continuous and correct operation. Instead, it provides the mechanisms needed by application monitors to control cluster operations (for example, to remove a host from the cluster if an application fails or displays erratic behavior). Monitoring services are widely available for most client/server applications. When an application failure is detected, the application monitor uses the Network Load Balancing remote control program to stop individual cluster hosts and/or disable load balancing for specific port ranges.

Maintenance and Rolling Upgrades

Computers can be taken offline for preventive maintenance without disturbing cluster operations. Network Load Balancing also supports rolling upgrades to allow software or hardware upgrades without shutting down the cluster or disrupting service. Upgrades can be individually applied to each server, which immediately rejoins the cluster. Network Load Balancing hosts can run in mixed clusters with hosts running the Windows NT Load Balancing Service (WLBS) under Windows NT 4.0. Rolling upgrades can be performed without interrupting cluster services by taking individual hosts out of the cluster, upgrading them to Windows 2000, and then placing them back in the cluster. (Note that the first port in the default port range has been changed for Windows 2000 from 1 to 0, and the port rules must always be compatible for all cluster hosts.)

How Network Load Balancing Works

Network Load Balancing scales the performance of a server-based program, such as a Web server, by distributing its client requests among multiple servers within the cluster. With Network Load Balancing, each incoming IP packet is received by each host but only accepted by the intended recipient. The cluster hosts concurrently respond to different client requests, even multiple requests from the same client. For example, a Web browser may obtain the various images within a single Web page from different hosts in a load-balanced cluster. This speeds up processing and shortens the response time to clients.
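The idea that every host receives each packet but only one accepts it can be sketched as a filter that each host evaluates independently. The code below is an illustration under stated assumptions: CRC-32 stands in for whatever mapping the product actually uses, and the function names are hypothetical.

```python
import zlib


def owner(client_ip, client_port, host_ids):
    """Pick the one host responsible for a connection.

    Every host evaluates this with the same inputs, so all hosts reach
    the same answer without consulting a central dispatcher.
    """
    ordered = sorted(host_ids)
    digest = zlib.crc32(f"{client_ip}:{client_port}".encode())
    return ordered[digest % len(ordered)]


def accepts(my_id, client_ip, client_port, host_ids):
    """Return True if this host should accept the packet, False to drop it."""
    return owner(client_ip, client_port, host_ids) == my_id


hosts = [1, 2, 3, 4]
# Exactly one host accepts any given packet; the other three discard it.
decisions = [accepts(h, "192.0.2.10", 1025, hosts) for h in hosts]
print(decisions.count(True))
```

Because the decision is a pure function of the packet and the cluster membership, no per-packet communication between hosts is needed.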

Each Network Load Balancing host can specify the load percentage that it will handle, or the load can be equally distributed among all of the hosts. Using load percentages, each Network Load Balancing server selects and handles a portion of the workload. Clients are statistically distributed among cluster hosts so that each server receives its percentage of incoming requests. This load balance dynamically changes when hosts enter or leave the cluster. In this version, the load balance does not change in response to varying server loads (such as CPU or memory usage). For applications, such as Web servers, which have numerous clients and relatively short-lived client requests, the ability of Network Load Balancing to distribute workload through statistical mapping efficiently balances loads and provides fast response to cluster changes.
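A statistical mapping with load percentages can be sketched by giving each host a share of a hash range proportional to its weight. As before, this is an assumption-laden illustration: CRC-32 and the cumulative-range scheme are stand-ins, and the function name and the dictionary of integer weights are hypothetical.

```python
import zlib


def pick_host(client_ip, weights):
    """Map a client to a host in proportion to integer load weights.

    `weights` maps host ID -> load weight (for example, a percentage).
    Each host owns a slice of [0, total) as wide as its weight.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one host must have a positive weight")
    point = zlib.crc32(client_ip.encode()) % total
    upper = 0
    for host in sorted(weights):
        upper += weights[host]
        if point < upper:
            return host


weights = {1: 50, 2: 25, 3: 25}
clients = [f"198.51.100.{i}" for i in range(1, 255)]
counts = {host: 0 for host in weights}
for ip in clients:
    counts[pick_host(ip, weights)] += 1
print(counts)

# When host 3 leaves, the same function redistributes its clients.
del weights[3]
print({pick_host(ip, weights) for ip in clients} <= {1, 2})
```

Note that the mapping depends only on cluster membership and the configured weights, not on measured CPU or memory usage, which matches the behavior described above.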

Network Load Balancing cluster servers emit a heartbeat message to other hosts in the cluster and listen for the heartbeat of other hosts. If a server in a cluster fails, the remaining hosts adjust and redistribute the workload while maintaining continuous service to their clients. Although existing connections to an offline host are lost, the Internet services nevertheless remain continuously available. In most cases (for example, with Web servers), client software automatically retries the failed connections and the clients experience only a few seconds' delay in receiving a response.
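Heartbeat-based failure detection can be sketched as each host tracking when it last heard from every peer and treating silence beyond a timeout as failure. The function name, the data layout, and the five-second timeout used below are hypothetical; the text does not state the actual heartbeat interval or the number of missed messages tolerated.

```python
def live_hosts(last_heard, now, timeout):
    """Return the sorted IDs of hosts whose heartbeat is recent enough.

    `last_heard` maps host ID -> timestamp (in seconds) of the last
    heartbeat received from that host; `timeout` is an assumed threshold.
    """
    return sorted(h for h, t in last_heard.items() if now - t <= timeout)


last_heard = {1: 100.0, 2: 94.0, 3: 99.5}
# Host 2 has been silent for 6 seconds, so the remaining hosts would
# drop it from the membership and repartition its share of the traffic.
print(live_hosts(last_heard, now=100.0, timeout=5.0))
```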

Managing Application State

Application state refers to data maintained by a server application on behalf of its clients. If a server application (such as a Web server) maintains state information about a client session—that is, when it maintains a client's session state—that spans multiple TCP connections, it is usually important that all TCP connections for this client be directed to the same cluster host. Shopping cart contents at an e-commerce site and Secure Sockets Layer (SSL) authentication data are examples of a client's session state. Network Load Balancing can be used to scale applications that manage session state spanning multiple connections. When its client affinity parameter setting is enabled, Network Load Balancing directs all TCP connections from one client IP address to the same cluster host. This allows session state to be maintained in host memory. However, should a server or network failure occur during a client session, a new logon may be required to reauthenticate the client and reestablish session state. Also, adding a new cluster host redirects some client traffic to the new host, which can affect sessions, although ongoing TCP connections are not disturbed. Client/server applications that manage client state so that it can be retrieved from any cluster host (for example, by embedding state within cookies or pushing it to a back-end database) do not need to use client affinity.

To further assist in managing session state, Network Load Balancing provides an optional client affinity setting that directs all client requests from a TCP/IP class C address range to a single cluster host. With this feature, clients that use multiple proxy servers can have their TCP connections directed to the same cluster host. The use of multiple proxy servers at the client's site causes requests from a single client to appear to originate from different systems. Assuming that all of the client's proxy servers are located within the same 254-host class C address range, Network Load Balancing ensures that the same host handles client sessions with minimum impact on load distribution among the cluster hosts. Some very large client sites may use multiple proxy servers that span class C address spaces.

In addition to session state, server applications often maintain persistent, server-based state information that is updated by client transactions, such as merchandise inventory at an e-commerce site. Network Load Balancing should not be used to directly scale applications, such as Microsoft SQL Server (other than for read-only database access), that independently update inter-client state because updates made on one cluster host will not be visible to other cluster hosts. To benefit from Network Load Balancing, applications must be designed to permit multiple instances to simultaneously access a shared database server that synchronizes updates. For example, Web servers with Active Server Pages should have their client updates pushed to a shared back-end database server.
