Chapter 9: Testing

All stages of the migration process should be validated by running a series of carefully designed tests. The purpose of the tests is to determine the differences between the expected results (the source environment) and the observed results (the migrated application). The detected differences should be synchronized with the development stages of the project. This chapter describes the test objectives and a generic testing methodology that can be used to test migrated applications.

9.1 Planning

Test planning details the activities, dependencies, and effort required to test the converted solution.

9.1.1 Principles of software tests

Keep in mind the principles of software tests in general:

- It is not possible to test a nontrivial system completely.
- Testing is an optimization process with respect to completeness: because complete testing is impossible, aim for the best coverage the available effort allows.
- Always test against expectations.
- Each test must have reachable goals.
- Test cases have to contain reachable and non-reachable data.
- Test cases must be repeatable.
- Test cases have to be archived in the configuration management system, just like source code and documentation.

9.1.2 Test documentation

The test documentation is the most important part of the project. The ANSI/IEEE Standard for Software Test Documentation, ANSI/IEEE Std 829-1983, describes its content in detail. Here we give you a high-level overview.

Scope

State the purpose of the plan, possibly identifying the level of the plan (for example, a master test plan). This is essentially the executive summary part of the plan.

You may want to include any references to other plans, documents, or items that contain information relevant to this project and process. If preferable, you can create a references section to contain all reference documents.

Identify the scope of the plan in relation to the software project plan it belongs to. Other items may include resource and budget constraints, the scope of the testing effort, how testing relates to other evaluation activities (analysis and reviews), and possibly the process to be used for change control, communication, and coordination of key activities.

As this is the Executive Summary, keep information brief and to the point.

Definition of test items

Define the test items you intend to test within the scope of this test plan. Essentially, this is a list of what is to be tested. It can be developed from the software application inventories as well as other sources of documentation and information.

This section is a technical description of the software and can be oriented to the level of the test plan. For higher levels, it may be organized by application or functional area; for lower levels, by program, unit, module, or build.

Features to be tested

This is a list of what is to be tested from the user's viewpoint of what the system does. It is not a technical description of the software but a user's view of the functions. Users do not understand technical software terminology; they understand functions and processes as they relate to their jobs.

Set the level of risk for each feature. Use a simple rating scale such as high, medium and low (H, M, L). These types of levels are understandable to a user. You should be prepared to discuss why a particular level was chosen.
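One common use of the H, M, L ratings is to decide the order of testing, so that high-risk features are covered first if time runs short. The sketch below assumes that policy; the `Feature` record, the `prioritize` helper, and the sample features are hypothetical illustrations, not part of any standard.

```python
from dataclasses import dataclass

# Assumed mapping: a lower number means the feature is tested earlier.
RISK_ORDER = {"H": 0, "M": 1, "L": 2}


@dataclass
class Feature:
    name: str
    risk: str       # "H", "M", or "L"
    rationale: str  # why this level was chosen, for discussion with users


def prioritize(features: list[Feature]) -> list[Feature]:
    """Sort features so high-risk ones come first; order within a level is kept."""
    for feature in features:
        if feature.risk not in RISK_ORDER:
            raise ValueError(f"unknown risk level {feature.risk!r} for {feature.name}")
    return sorted(features, key=lambda f: RISK_ORDER[f.risk])


# Hypothetical features of a migrated order-entry application.
features = [
    Feature("Print invoice", "L", "layout only, no data changes"),
    Feature("Post payment", "H", "updates account balances"),
    Feature("Search customer", "M", "used constantly by clerks"),
]
print([f.name for f in prioritize(features)])
# ['Post payment', 'Search customer', 'Print invoice']
```

Keeping the rationale next to the rating makes it easier to explain to users why a particular level was chosen.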

Features not to be tested

This is a list of what is not to be tested, from both the user's viewpoint of what the system does and a configuration management view. It is not a technical description of the software but a user's view of the functions.

Identify why the feature is not to be tested; there can be any number of reasons.

Approach

This is your overall test strategy for this test plan. It should be appropriate to the plan and should be in agreement with the plans covering the application and database parts. Identify the overall rules and processes:

- Are any special tools to be used, and if so, which ones?
- Will the tools require special training?
- What metrics will be collected?
- At which level is each metric to be collected?
- How is configuration management to be handled?
- How many different configurations (combinations of hardware, software, and other vendor packages) will be tested?
- What levels of regression testing will be done, and how much at each test level?
- Will regression testing be based on the severity of the defects detected?
- How will elements in the requirements and design that do not make sense or are untestable be processed?

Item pass and fail criteria

What are the completion criteria for this plan? How many located defects, and of what severity, are acceptable? This is a critical aspect of any test plan and should be appropriate to the level of the plan.
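Completion criteria are easiest to apply consistently when they are written down as explicit limits per severity. The following is a minimal sketch that assumes a hypothetical three-level severity scale and made-up limits; the real severity names and numbers belong in your test plan.

```python
from collections import Counter

# Hypothetical exit criteria: maximum number of open defects allowed per severity.
EXIT_CRITERIA = {"critical": 0, "major": 2, "minor": 10}


def plan_passes(open_defects: list[str], criteria: dict[str, int] = EXIT_CRITERIA) -> bool:
    """Return True if the number of open defects at each severity is within its limit."""
    counts = Counter(open_defects)
    unknown = set(counts) - set(criteria)
    if unknown:
        raise ValueError(f"severities not covered by the criteria: {sorted(unknown)}")
    # A Counter returns 0 for severities with no open defects.
    return all(counts[severity] <= limit for severity, limit in criteria.items())


print(plan_passes(["minor", "minor", "major"]))  # True
print(plan_passes(["critical"]))                  # False
```

Rejecting unknown severities, rather than ignoring them, keeps a miscategorized defect from silently passing the plan.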

Suspension criteria and resumption requirements

Know when to pause a series of tests. If the number or type of defects reaches a point where follow-on testing has no value, there is no point in continuing the test; you are just wasting resources.

Specify what constitutes stoppage for a test or series of tests, and what is the acceptable level of defects that will allow the testing to proceed past the defects.

Testing after a truly fatal error will generate conditions that may be identified as defects, but are in fact ghost errors caused by the earlier defects that were ignored.
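The suspension rule can be made mechanical: stop the series after a fatal error or after too many failures, and record the remaining tests as not run instead of failed, so they cannot produce ghost errors. This is a sketch under assumed conventions; the result labels, the `run_series` helper, and the `max_failures` threshold are hypothetical.

```python
from typing import Callable

# Assumed result labels. A test skipped after suspension is NOT_RUN, never
# FAILED, so it cannot report a "ghost error" caused by an earlier defect.
PASSED, FAILED, FATAL, NOT_RUN = "passed", "failed", "fatal", "not run"


def run_series(tests: list[tuple[str, Callable[[], str]]],
               max_failures: int = 3) -> dict[str, str]:
    """Run tests in order; suspend after a FATAL result or more than max_failures FAILED."""
    results: dict[str, str] = {}
    failures = 0
    suspended = False
    for name, test in tests:
        if suspended:
            results[name] = NOT_RUN
            continue
        outcome = test()  # each test returns PASSED, FAILED, or FATAL
        results[name] = outcome
        if outcome == FAILED:
            failures += 1
        if outcome == FATAL or failures > max_failures:
            suspended = True  # resume only after the blocking defects are fixed
    return results


tests = [
    ("connect", lambda: PASSED),
    ("load schema", lambda: FATAL),
    ("query orders", lambda: PASSED),
]
print(run_series(tests))
# {'connect': 'passed', 'load schema': 'fatal', 'query orders': 'not run'}
```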

Test deliverables

What is to be delivered as part of this plan?

- Test plan document
- Test cases
- Test design specification
- Tools and their outputs
- Error logs and execution logs
- Problem reports and corrective actions

One thing that is not a test deliverable is the software itself, which is listed under test items, and is delivered by development.

Environmental needs

Are there any special requirements for this test plan, such as:

- Special hardware, such as simulators, static generators, and so on
- How will test data be provided? Are there special collection requirements or specific ranges of data that must be provided?
- How much testing will be done on each component of a multi-part feature?
- Special power requirements
- Specific versions of other supporting software
- Restricted use of the system during testing

Staffing and skills

The staffing depends on the kinds of tests defined in Section 9.1.3, "Test phases". In this section, define the people who will execute the test cases and the education and training they need.

Responsibilities

Who is in charge? This issue includes all areas of the plan. Here are some examples:

- Setting risks
- Selecting features to be tested and not tested
- Setting the overall strategy for this level of plan
- Ensuring all required elements are in place for testing
- Resolving scheduling conflicts, especially if testing is done on the production system
- Who provides the required training?
- Who makes the critical go/no-go decisions for items not covered in the test plans?

9.1.3 Test phases

A series of well-designed tests should validate all stages of the migration process. A detailed test plan should describe all the test phases and the scope of the tests, define the validation criteria, and specify the time frame. To ensure that the applications operate in the same manner as they did with the source database, the test plan should include data migration, functional, and performance tests, as well as other post-migration assessments.

Data migration testing

The extract and load process entails conversion between source and target data types. The migrated database should be verified to ensure that all data is accessible and was imported without any failure or modification that could cause applications to function improperly.
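One way to check that data was imported without loss or modification is to compare, per table, the row count and a checksum over all rows read in key order. The sketch below uses Python's `sqlite3` module as a stand-in for both the source and the target database; the helper names are hypothetical. It assumes both sides return values in comparable Python types. Because the migration converts data types, a real check usually has to normalize values (for example, decimals or timestamps) before hashing.

```python
import hashlib
import sqlite3


def table_fingerprint(conn: sqlite3.Connection, table: str, key: str) -> tuple[int, str]:
    """Return (row count, SHA-256 digest of all rows read in key order)."""
    digest = hashlib.sha256()
    count = 0
    # Names are interpolated into SQL, so they must come from a trusted inventory.
    for row in conn.execute(f"SELECT * FROM {table} ORDER BY {key}"):
        digest.update(repr(row).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def mismatched_tables(source: sqlite3.Connection, target: sqlite3.Connection,
                      tables: dict[str, str]) -> list[str]:
    """List the tables (name -> key column) whose row count or digest differs."""
    return [name for name, key in tables.items()
            if table_fingerprint(source, name, key) != table_fingerprint(target, name, key)]


source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT)")
source.executemany("INSERT INTO customer VALUES (?, ?)", [(1, "Ann"), (2, "Bob")])
target.executemany("INSERT INTO customer VALUES (?, ?)", [(1, "Ann"), (2, "Bo")])  # truncated on load
print(mismatched_tables(source, target, {"customer": "id"}))  # ['customer']
```

Row counts alone would miss the truncated value in this example; the checksum catches it.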

Functional testing

Functional testing is a set of tests in which the new and existing functionality of the system is tested after migration. Functional testing covers all components of the RDBMS (stored procedures, triggers), networking, and application components. Its objective is to verify that each component of the system functions as it did before the migration, and that new functions work properly.
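A simple functional regression check runs the same statements against the source (expected result) and the target (observed result) and compares the returned rows. This is a sketch only: `sqlite3` stands in for both databases, the `compare_results` helper and sample queries are hypothetical, and in a real migration the statements would be the application's own SQL and stored procedure calls issued through the respective drivers.

```python
import sqlite3
from collections import Counter


def compare_results(source: sqlite3.Connection, target: sqlite3.Connection,
                    queries: dict[str, str]) -> dict[str, bool]:
    """Run each named query on both databases; True means the same rows (any order)."""
    outcome = {}
    for name, sql in queries.items():
        expected = Counter(source.execute(sql).fetchall())  # source = expected result
        observed = Counter(target.execute(sql).fetchall())  # target = observed result
        outcome[name] = expected == observed
    return outcome


source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE orders (id INTEGER, amount INTEGER)")
source.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10), (2, 25)])
target.executemany("INSERT INTO orders VALUES (?, ?)", [(1, 10), (2, 24)])  # simulated defect
queries = {
    "order total": "SELECT SUM(amount) FROM orders",
    "large orders": "SELECT id FROM orders WHERE amount > 20",
}
print(compare_results(source, target, queries))
# {'order total': False, 'large orders': True}
```

Comparing rows as multisets ignores row order, which SQL does not guarantee without `ORDER BY`, but still detects missing or duplicated rows.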

Integration testing

Integration testing examines the interaction between the components of the system. All modules of the system and any additional applications (Web, supporting modules, Java programs, and so on) running against the target database instance should be verified to ensure that they work without problems in the new environment. The tests should also include GUI and text-based interfaces, with both local and remote connections.

Performance testing

Performance testing of a target database compares the performance of various SQL statements in the target database with the statements' performance in the source database. Before migrating, you should understand the performance profile of the application under the source database. Specifically, you should understand the calls the application makes to the database engine.
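A basic comparison times each statement several times on both databases and flags statements that are noticeably slower on the target. The sketch below assumes `sqlite3` as a stand-in for both systems, uses the median to dampen outliers, and uses an arbitrary 20% tolerance; the helper names and the threshold are hypothetical. The demo deliberately omits an index on the target to simulate a lost index.

```python
import sqlite3
import statistics
import time


def median_runtime(conn: sqlite3.Connection, sql: str, repeats: int = 5) -> float:
    """Median wall-clock seconds to execute `sql` and fetch every row."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        conn.execute(sql).fetchall()
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def compare_performance(source: sqlite3.Connection, target: sqlite3.Connection,
                        statements: dict[str, str], tolerance: float = 1.2) -> dict[str, float]:
    """Return the statements whose target/source runtime ratio exceeds `tolerance`."""
    regressions = {}
    for name, sql in statements.items():
        baseline = max(median_runtime(source, sql), 1e-9)  # avoid dividing by zero
        ratio = median_runtime(target, sql) / baseline
        if ratio > tolerance:
            regressions[name] = ratio
    return regressions


# Demo: same data on both sides, but the index was not migrated to the target.
source, target = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
for conn in (source, target):
    conn.execute("CREATE TABLE t (id INTEGER, v TEXT)")
    conn.executemany("INSERT INTO t VALUES (?, ?)", ((i, str(i)) for i in range(20000)))
source.execute("CREATE INDEX t_id ON t (id)")
print(compare_performance(source, target, {"lookup by id": "SELECT v FROM t WHERE id = 12345"}))
```

Timings vary between runs and machines, so a check like this is a screening step that points at statements worth examining with the target database's own explain and monitoring tools.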

Volume/Load stress testing

Volume and load stress testing tests the entire migrated database under high volume and loads. The objective of volume and load testing is to emulate how the migrated system might behave in a production environment. These tests should determine whether any database or application tuning is necessary.

Acceptance testing

Acceptance tests are carried out by the end users of the migrated system. Users are asked to explore the system, test its usability and features, and give direct feedback. Acceptance tests are usually the last step before the new system goes into production.

Post migration tests

Because a migrated database can be a completely new environment for the IT staff, the test plan should also cover new administration procedures such as database backup and restore, daily maintenance operations, and software updates.
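A backup procedure is only proven once its backup has been restored and checked. The sketch below shows that round trip using Python's `sqlite3` `Connection.backup` method as a stand-in; the in-memory databases representing the backup medium and the rebuilt server, and the `backup_restore_check` helper, are hypothetical. On the real target you would use that database's own backup and restore utilities.

```python
import sqlite3


def backup_restore_check(live: sqlite3.Connection, tables: list[str]) -> bool:
    """Back up `live`, restore the backup into a fresh database, and compare tables."""
    backup = sqlite3.connect(":memory:")    # stands in for the backup medium
    live.backup(backup)                      # backup step
    restored = sqlite3.connect(":memory:")  # stands in for a rebuilt server
    backup.backup(restored)                  # restore step
    for table in tables:
        query = f"SELECT * FROM {table} ORDER BY 1"  # trusted table names only
        if live.execute(query).fetchall() != restored.execute(query).fetchall():
            return False
    return True


live = sqlite3.connect(":memory:")
live.execute("CREATE TABLE account (id INTEGER PRIMARY KEY, balance INTEGER)")
live.executemany("INSERT INTO account VALUES (?, ?)", [(1, 100), (2, 250)])
live.commit()  # commit the inserted rows before taking the backup
print(backup_restore_check(live, ["account"]))  # True
```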

9.1.4 Time planning and time exposure

Time planning should be based on realistic and validated estimates. Testing is part of the overall project plan, so if the estimates for migrating the application and database are inaccurate, the entire project plan, including testing, will slip.

It is always best to tie all test dates directly to the dates of the related migration activities. This prevents the test team from being perceived as the cause of a delay. For example, if system testing is to begin after delivery of the final build, then system testing begins the day after delivery. If the delivery is late, system testing starts relative to the actual delivery date, not on a fixed calendar date. This is called dependent or relative dating.
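Relative dating can be expressed as a small dependency table: each test phase names the activity it depends on and an offset in days, and start dates are computed from the actual completion dates. This is a sketch; the phase names, offsets, and the `schedule` helper are hypothetical, and a real plan would typically live in a project scheduling tool.

```python
from datetime import date, timedelta

# Hypothetical plan: phase -> (activity it depends on, offset in days after that activity).
PLAN = {
    "system test": ("final build delivered", 1),
    "acceptance test": ("system test complete", 1),
}


def schedule(actuals: dict[str, date],
             plan: dict[str, tuple[str, int]] = PLAN) -> dict[str, date]:
    """Start date of every phase whose prerequisite activity has an actual date."""
    return {phase: actuals[dependency] + timedelta(days=offset)
            for phase, (dependency, offset) in plan.items()
            if dependency in actuals}


# The build slipped to 10 June; the system test start moves with it.
print(schedule({"final build delivered": date(2024, 6, 10)}))
# {'system test': datetime.date(2024, 6, 11)}
```

Phases whose prerequisite has not happened yet simply have no start date, which mirrors the idea that test dates follow the migration activities rather than a fixed calendar.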

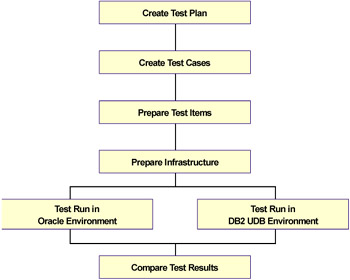

Figure 9-1 shows the phases of a typical migration project. The test plans are defined at a very early stage. The test cases, and all the tasks that follow from them, must be prepared for every test phase described in Section 9.1.3, "Test phases".

Figure 9-1: Phases during a migration project

The time required for testing depends on whether a test plan and prepared test items already exist. The effort also depends on the degree of change made during the application and database migration.

| Note | The test efforts can be between 50% and 70% of the total migration effort. |
